create_run_config

Create a new dbnl RunConfig

dbnl.create_run_config(
    *,
    project: Project,
    columns: list[dict[str, Any]],
    scalars: Optional[list[dict[str, Any]]] = None,
    description: Optional[str] = None,
    display_name: Optional[str] = None,
    row_id: Optional[list[str]] = None,
    components_dag: Optional[dict[str, list[str]]] = None,
) -> RunConfig:

Parameters

project

The Project this RunConfig is associated with.

columns

A list of column schema specs for the uploaded data. Each spec is a dict with required keys name and type and optional keys component, description, and greater_is_better. type can be int, float, category, boolean, or string. component is a string that names the source of the data, e.g. "component": "sentiment-classifier" or "component": "fraud-predictor". Every component specified here must also be present in the components_dag dictionary. greater_is_better is a boolean: True indicates that larger values are better, False indicates that smaller values are better, and None indicates no preference.

Example:

columns=[{"name": "pred_proba", "type": "float", "component": "fraud-predictor"}, {"name": "decision", "type": "boolean", "component": "threshold-decision"}, {"name": "requests", "type": "string", "description": "curl request response msg"}]

See the column schema section below for more information.
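The key rules above can be checked locally before calling create_run_config. The following is a minimal sketch, not part of the dbnl SDK: the helper name check_column_spec and the constant names are hypothetical, and the SDK may apply additional or different validation.

```python
from typing import Any

REQUIRED_KEYS = {"name", "type"}
OPTIONAL_KEYS = {"component", "description", "greater_is_better"}
# Types named in the parameter description, plus "list" from Supported Types.
ALLOWED_TYPES = {"int", "float", "category", "boolean", "string", "list"}


def check_column_spec(spec: dict[str, Any]) -> list[str]:
    """Return the problems found in one column spec (empty list if none)."""
    problems = []
    missing = REQUIRED_KEYS - set(spec)
    if missing:
        problems.append(f"missing required keys: {sorted(missing)}")
    unknown = set(spec) - REQUIRED_KEYS - OPTIONAL_KEYS
    if unknown:
        problems.append(f"unknown keys: {sorted(unknown)}")
    if "type" in spec and spec["type"] not in ALLOWED_TYPES:
        problems.append(f"unsupported type: {spec['type']!r}")
    return problems


# A spec from the example above passes; one without "type" does not.
ok = check_column_spec(
    {"name": "pred_proba", "type": "float", "component": "fraud-predictor"}
)
bad = check_column_spec({"name": "decision"})
```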

scalars

NOTE: scalars is available in SDK v0.0.15 and above.

A list of scalar schema specs for the uploaded data. Each spec is a dict with required keys name and type and optional keys component, description, and greater_is_better. type can be int, float, category, boolean, or string. component is a string that names the source of the data, e.g. "component": "sentiment-classifier" or "component": "fraud-predictor". Every component specified here must also be present in the components_dag dictionary. greater_is_better is a boolean: True indicates that larger values are better, False indicates that smaller values are better, and None indicates no preference.

Example:

scalars=[{"name": "accuracy", "type": "float", "component": "fraud-predictor"}, {"name": "error_type", "type": "category"}]

Scalar schema is identical to column schema.

description

An optional description of the RunConfig, defaults to None. Descriptions are limited to 255 characters.

display_name

An optional display name of the RunConfig, defaults to None. Display names do not have to be unique.

row_id

An optional list of the column names that can be used as unique identifiers, defaults to None.

components_dag

An optional dictionary representing the directed acyclic graph (DAG) of the specified components, defaults to None. Every component listed in the columns schema must be present in the components_dag. Example: components_dag={"fraud-predictor": ["threshold-decision"], "threshold-decision": []}

See the components DAG section below for more information.
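The requirement that every component used in columns appears in components_dag can be verified up front. This is a small sketch under the assumption that a component counts as present when it is a key of the dictionary; missing_components is a hypothetical helper, not an SDK function.

```python
from typing import Any, Optional


def missing_components(
    columns: list[dict[str, Any]],
    components_dag: Optional[dict[str, list[str]]],
) -> set[str]:
    """Return components referenced by columns but absent from the DAG keys."""
    dag = components_dag or {}
    used = {c["component"] for c in columns if c.get("component") is not None}
    return used - set(dag)


columns = [
    {"name": "pred_proba", "type": "float", "component": "fraud-predictor"},
    {"name": "decision", "type": "boolean", "component": "threshold-decision"},
    {"name": "requests", "type": "string"},  # no component: nothing to check
]
components_dag = {"fraud-predictor": ["threshold-decision"], "threshold-decision": []}

unlisted = missing_components(columns, components_dag)
```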

Column Schema

Column Names

Column names may contain only alphanumeric characters and underscores.
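A quick local check for this naming rule might look like the sketch below. It assumes "alphanumeric" means ASCII letters and digits and that empty names are rejected; both are assumptions, and is_valid_column_name is a hypothetical helper rather than an SDK function.

```python
import re

# Assumption: ASCII letters, digits, and underscores only; at least one character.
_COLUMN_NAME = re.compile(r"[A-Za-z0-9_]+")


def is_valid_column_name(name: str) -> bool:
    """True if the whole name consists of letters, digits, and underscores."""
    return _COLUMN_NAME.fullmatch(name) is not None


names = ["pred_proba", "pred-proba", "cost 2"]
invalid = [n for n in names if not is_valid_column_name(n)]
```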

Supported Types

The following types are supported as the type in a column schema:

float

int

boolean

string

Any arbitrary string values. Raw string type columns do not produce any histogram or scatterplot on the web UI.

category

Equivalent to the pandas categorical data type. Currently, only categories of string values are supported.

list

Currently, only lists of string values are supported. List type columns do not produce any histogram or scatterplot on the web UI.

Components

The optional component key specifies which subcomponent of the AI/ML app a data column comes from. Components are used in visualizing the components DAG.

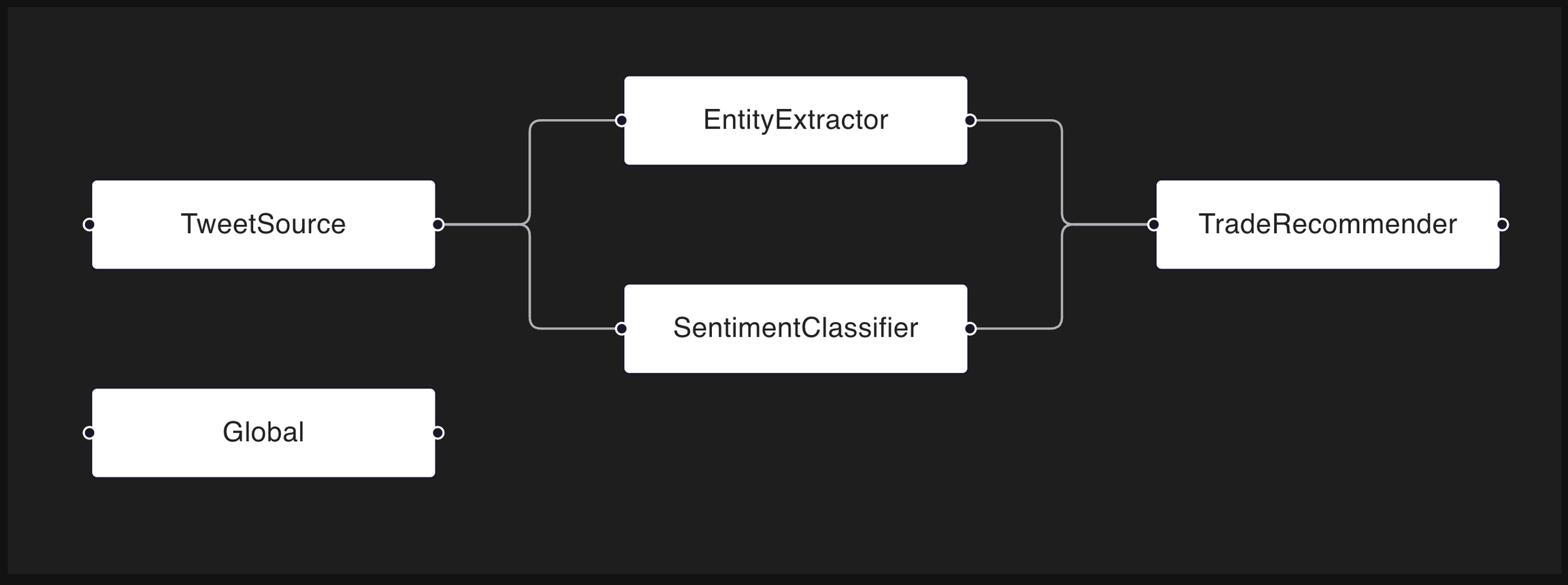

Components DAG

The components_dag dictionary specifies the topological layout of the AI/ML app. For each key-value pair, the key is a source component and the value is the list of components directly downstream of it; a component with nothing downstream maps to an empty list. The following code snippet describes the DAG shown above.
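As a rough illustration of the source-to-downstream convention, the sketch below uses the two-component DAG from the components_dag parameter example (not the pictured one) and derives an upstream-to-downstream ordering with the standard-library graphlib module. The helper component_order is hypothetical and not part of the SDK.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def component_order(components_dag: dict[str, list[str]]) -> list[str]:
    """Return components ordered so each source precedes its downstream nodes."""
    # graphlib expects node -> predecessors, so invert the source -> downstream map.
    predecessors: dict[str, set[str]] = {name: set() for name in components_dag}
    for source, downstream in components_dag.items():
        for child in downstream:
            predecessors.setdefault(child, set()).add(source)
    # static_order() raises graphlib.CycleError (a ValueError) if there is a cycle.
    return list(TopologicalSorter(predecessors).static_order())


components_dag = {"fraud-predictor": ["threshold-decision"], "threshold-decision": []}
order = component_order(components_dag)
```

Because a cycle raises an error, the same helper doubles as an acyclicity check.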

Returns

A new dbnl RunConfig

Examples

Basic Usage
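A minimal sketch of the simplest call, with only the required project and columns arguments. The calls dbnl.login() and dbnl.get_or_create_project(name=...) and the project name "test_project" are assumptions not documented in this section; adjust them to however a Project is obtained in your setup.

```python
from typing import Any

# Only "name" and "type" are required in each column spec.
columns: list[dict[str, Any]] = [
    {"name": "error", "type": "float"},
    {"name": "cost", "type": "float"},
]


def create_basic_run_config(project_name: str = "test_project"):
    """Create a RunConfig; requires the dbnl SDK and valid credentials."""
    import dbnl  # imported lazily so the spec above can be inspected offline

    dbnl.login()  # assumption: credentials come from the environment
    project = dbnl.get_or_create_project(name=project_name)  # assumed helper
    return dbnl.create_run_config(project=project, columns=columns)
```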

RunConfig with DAG
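A sketch that reuses the columns and components_dag values from the parameter descriptions. The function name is hypothetical, and project is assumed to be an existing Project object.

```python
from typing import Any

columns: list[dict[str, Any]] = [
    {"name": "pred_proba", "type": "float", "component": "fraud-predictor"},
    {"name": "decision", "type": "boolean", "component": "threshold-decision"},
    {"name": "requests", "type": "string", "description": "curl request response msg"},
]

# fraud-predictor feeds threshold-decision, which has nothing downstream.
components_dag: dict[str, list[str]] = {
    "fraud-predictor": ["threshold-decision"],
    "threshold-decision": [],
}


def create_run_config_with_dag(project):
    """Create a RunConfig whose columns are tied to components in the DAG."""
    import dbnl  # requires the dbnl SDK and a prior login

    return dbnl.create_run_config(
        project=project,
        columns=columns,
        components_dag=components_dag,
    )
```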

RunConfig with row_id
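A sketch that passes row_id as a list of column names. The column request_id is a made-up example; the assumption here is that each name in row_id should refer to a column declared in columns.

```python
from typing import Any

columns: list[dict[str, Any]] = [
    {"name": "request_id", "type": "string"},  # hypothetical identifier column
    {"name": "error", "type": "float"},
    {"name": "cost", "type": "float"},
]

# Columns that together identify a row uniquely.
row_id: list[str] = ["request_id"]


def create_run_config_with_row_id(project):
    """Create a RunConfig that declares request_id as the row identifier."""
    import dbnl  # requires the dbnl SDK and a prior login

    return dbnl.create_run_config(project=project, columns=columns, row_id=row_id)
```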

RunConfig with scalars
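A sketch that adds scalars alongside columns; per the note in the scalars parameter description, this needs SDK v0.0.15 or above. The scalar specs follow the same schema as columns, and the greater_is_better value is illustrative.

```python
from typing import Any

columns: list[dict[str, Any]] = [
    {"name": "error", "type": "float"},
    {"name": "cost", "type": "float"},
]

# Scalar specs use the same keys as column specs.
scalars: list[dict[str, Any]] = [
    {"name": "accuracy", "type": "float", "greater_is_better": True},
    {"name": "error_type", "type": "category"},
]


def create_run_config_with_scalars(project):
    """Create a RunConfig with both column and scalar schemas."""
    import dbnl  # requires dbnl SDK v0.0.15 or above and a prior login

    return dbnl.create_run_config(project=project, columns=columns, scalars=scalars)
```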
