The flow of data

Your data + dbnl testing == insights about your app's behavior

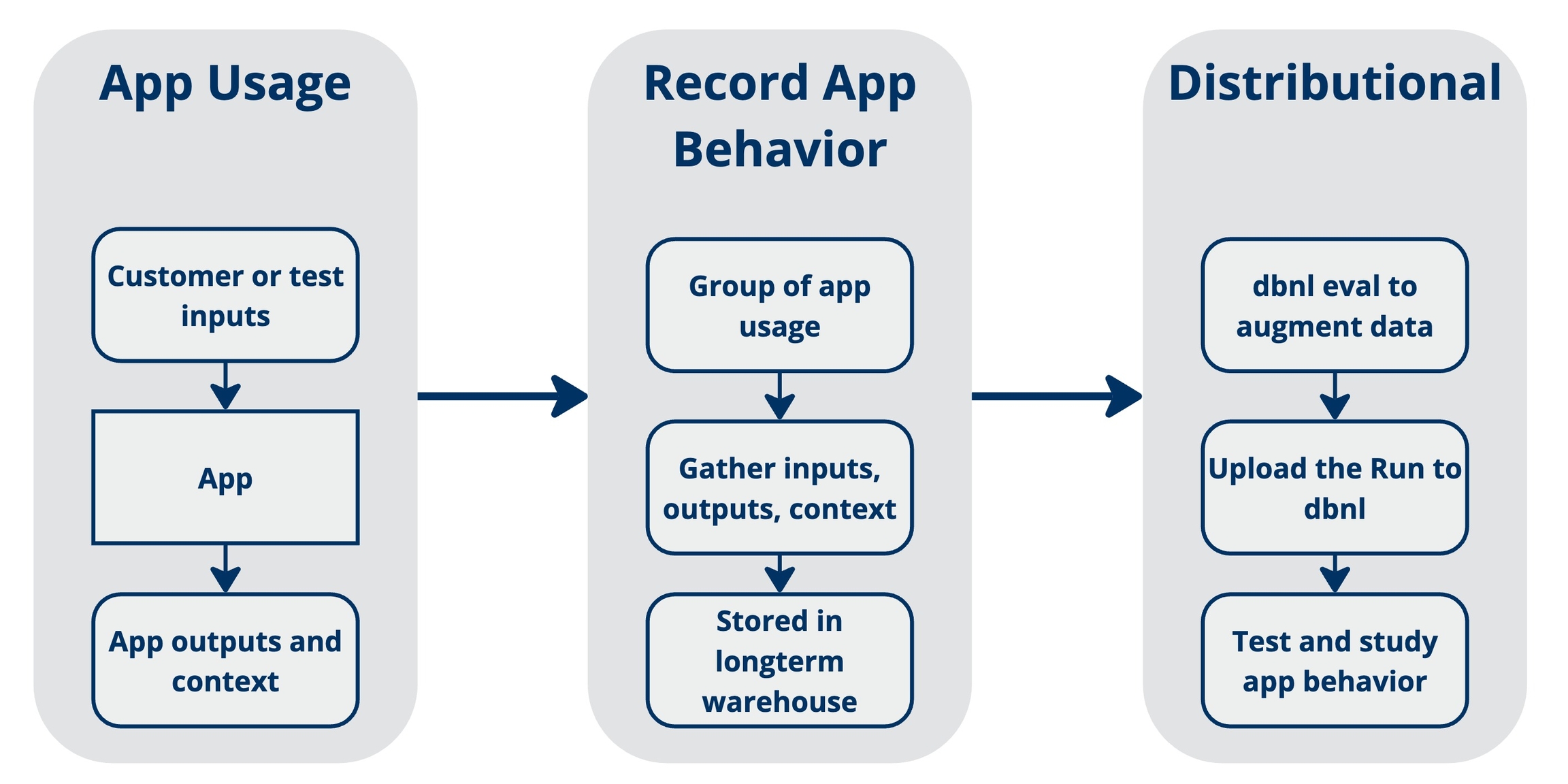

Distributional uses data generated by your AI-powered app to study its behavior and alert you to valuable insights or worrisome trends. The diagram above summarizes this process, which works as follows:

Each app usage involves one or more inputs, the resulting outputs, and context about that usage.

Example: Input is a question about the city of Toronto; Output is your app’s answer to that question; Context is the time/day that the question was asked.

As the app is used, you record each usage and store it in a data warehouse for later review.

Example: At 2am every morning, an Airflow job parses all of the previous day’s app usages and sends them to a data warehouse.

When data is moved to your data warehouse, it is also submitted to dbnl for testing.

Example: The 2am Airflow job is extended to augment the data with dbnl Eval and then upload the resulting dbnl Run, which triggers automatic app testing.
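The first two steps (capturing usages and collecting the previous day's batch) can be sketched in plain Python. This is a minimal, hypothetical example: `AppUsage`, `previous_day_usages`, and `to_rows` are illustrative names, not part of dbnl, and the sketch omits both the warehouse write and the dbnl upload. It assumes each usage's context is just the time the question was asked.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AppUsage:
    """Hypothetical record of one app usage: input, resulting output, and context."""
    input: str
    output: str
    asked_at: datetime  # context: the time the question was asked


def previous_day_usages(usages: list[AppUsage], now: datetime) -> list[AppUsage]:
    """Keep only usages from the calendar day before `now`, as a 2am daily job would."""
    start_of_today = now.replace(hour=0, minute=0, second=0, microsecond=0)
    start_of_yesterday = start_of_today - timedelta(days=1)
    return [u for u in usages if start_of_yesterday <= u.asked_at < start_of_today]


def to_rows(usages: list[AppUsage]) -> list[dict]:
    """Flatten usages into rows of input + output + context, one row per usage."""
    return [
        {"input": u.input, "output": u.output, "asked_at": u.asked_at.isoformat()}
        for u in usages
    ]


utc = timezone.utc
usages = [
    AppUsage("What province is Toronto in?", "Ontario.",
             datetime(2024, 5, 1, 14, 30, tzinfo=utc)),
    AppUsage("Is the CN Tower in Toronto?", "Yes, the CN Tower is in Toronto.",
             datetime(2024, 5, 2, 1, 15, tzinfo=utc)),
]

# Simulate the job running at 2am on May 2: only the May 1 usage is selected.
rows = to_rows(previous_day_usages(usages, now=datetime(2024, 5, 2, 2, 0, tzinfo=utc)))
print(rows)
```

In a real pipeline, the resulting rows would be what gets written to the warehouse and, after augmentation, submitted to dbnl as a Run.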

You can read more about dbnl-specific terms earlier in the documentation. Simply stated, a dbnl Run contains all of the data that dbnl uses to test your app's behavior, and insights about that behavior are derived from this data.

A dbnl Run usually contains many rows (e.g., dozens or hundreds) of inputs, outputs, and context, where each row comes from one app usage. dbnl derives its insights statistically from the distributions estimated from these rows.
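To make "derived from distributions" concrete, here is a sketch that compares one numeric column between two Runs using the two-sample Kolmogorov-Smirnov (KS) statistic, the largest gap between the two empirical CDFs. The KS statistic is one common distributional comparison chosen here for illustration; it is not necessarily the test dbnl runs, and the column values below are hypothetical.

```python
from bisect import bisect_right


def empirical_cdf(sorted_values: list[float], x: float) -> float:
    """Fraction of the (sorted) values that are less than or equal to x."""
    return bisect_right(sorted_values, x) / len(sorted_values)


def ks_statistic(a: list[float], b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between two empirical CDFs."""
    sa, sb = sorted(a), sorted(b)
    # Both CDFs are step functions that only change at observed values,
    # so checking every pooled observation is enough to find the maximum gap.
    return max(abs(empirical_cdf(sa, x) - empirical_cdf(sb, x)) for x in sa + sb)


# Hypothetical per-row values (e.g., answer lengths in words) from two Runs.
baseline_run = [1, 2, 3, 4]
new_run = [3, 4, 5, 6]
print(ks_statistic(baseline_run, baseline_run))  # 0.0 (identical distributions)
print(ks_statistic(baseline_run, new_run))       # 0.5
```

A value near 0 means the two Runs' columns look alike; a value near 1 means they barely overlap. A single row says little on its own, which is why a Run carries many rows.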

dbnl Eval is our library that provides access to common, well-tested GenAI evaluation strategies. You can use dbnl Eval to augment your app's data, such as its inputs and outputs. Doing so broadens the range of tests that can be run and allows dbnl to produce more powerful insights.
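As a rough illustration of what augmentation means, the sketch below adds two hypothetical evaluation columns to a row: the output's word count and the fraction of input words that reappear in the output. These metrics and the `augment_row` helper are stand-ins invented for this example; they are not dbnl Eval's API or its evaluation strategies.

```python
import re


def word_set(text: str) -> set[str]:
    """Lowercased words in a string, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def augment_row(row: dict) -> dict:
    """Return a copy of `row` with two hypothetical evaluation columns added."""
    input_words = word_set(row["input"])
    output_words = word_set(row["output"])
    overlap = len(input_words & output_words) / len(input_words) if input_words else 0.0
    return {
        **row,
        "output_word_count": len(row["output"].split()),
        "input_output_overlap": overlap,  # share of input words echoed in the output
    }


row = {"input": "Is the CN Tower in Toronto?", "output": "Yes, the CN Tower is in Toronto."}
print(augment_row(row))  # adds output_word_count=7 and input_output_overlap=1.0
```

Each new column is itself a distribution across the rows of a Run, so it can be tested the same way as the original inputs and outputs.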
