Insights surfaced elsewhere on Distributional

Test Sessions are not the only place to learn about your app

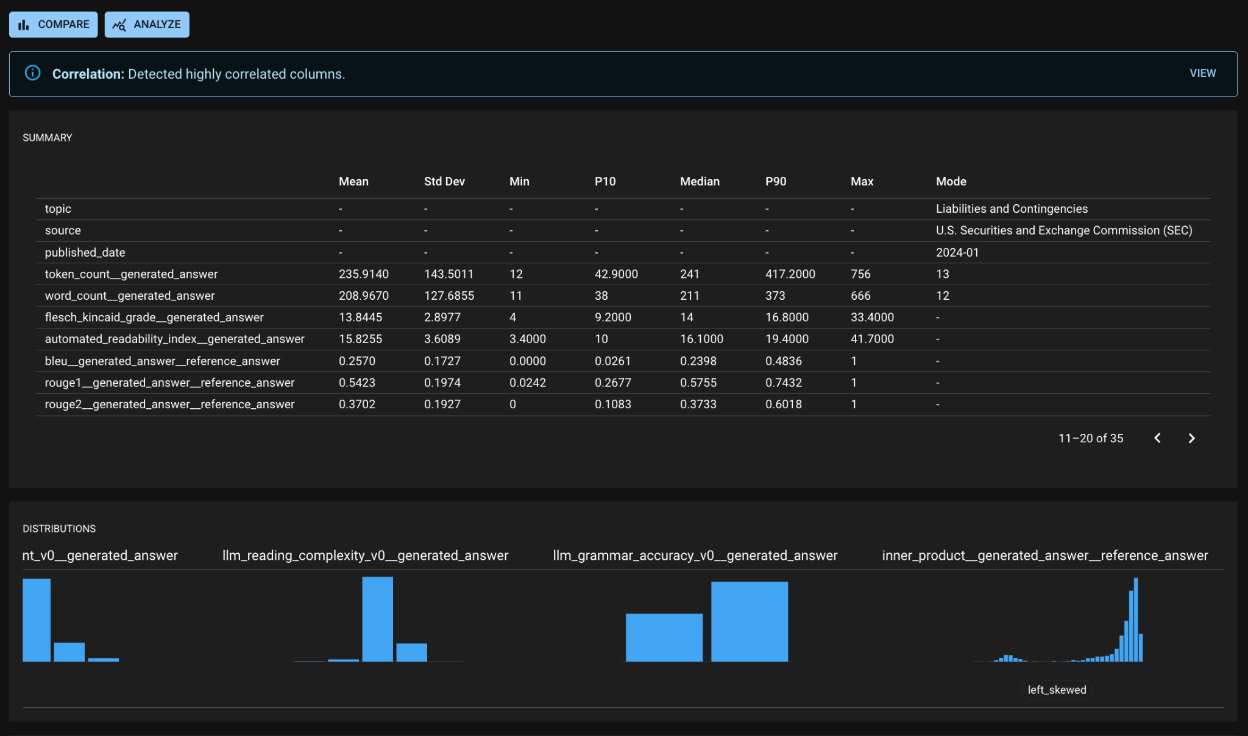

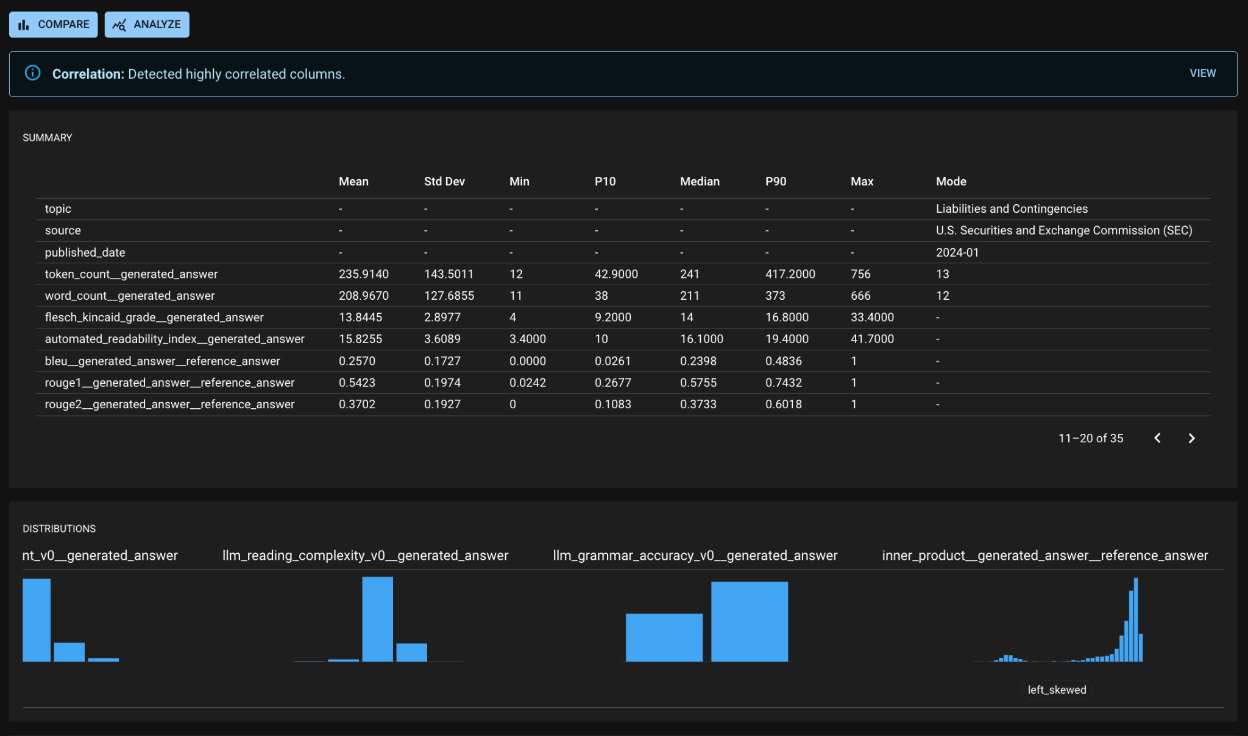

Run Detail page

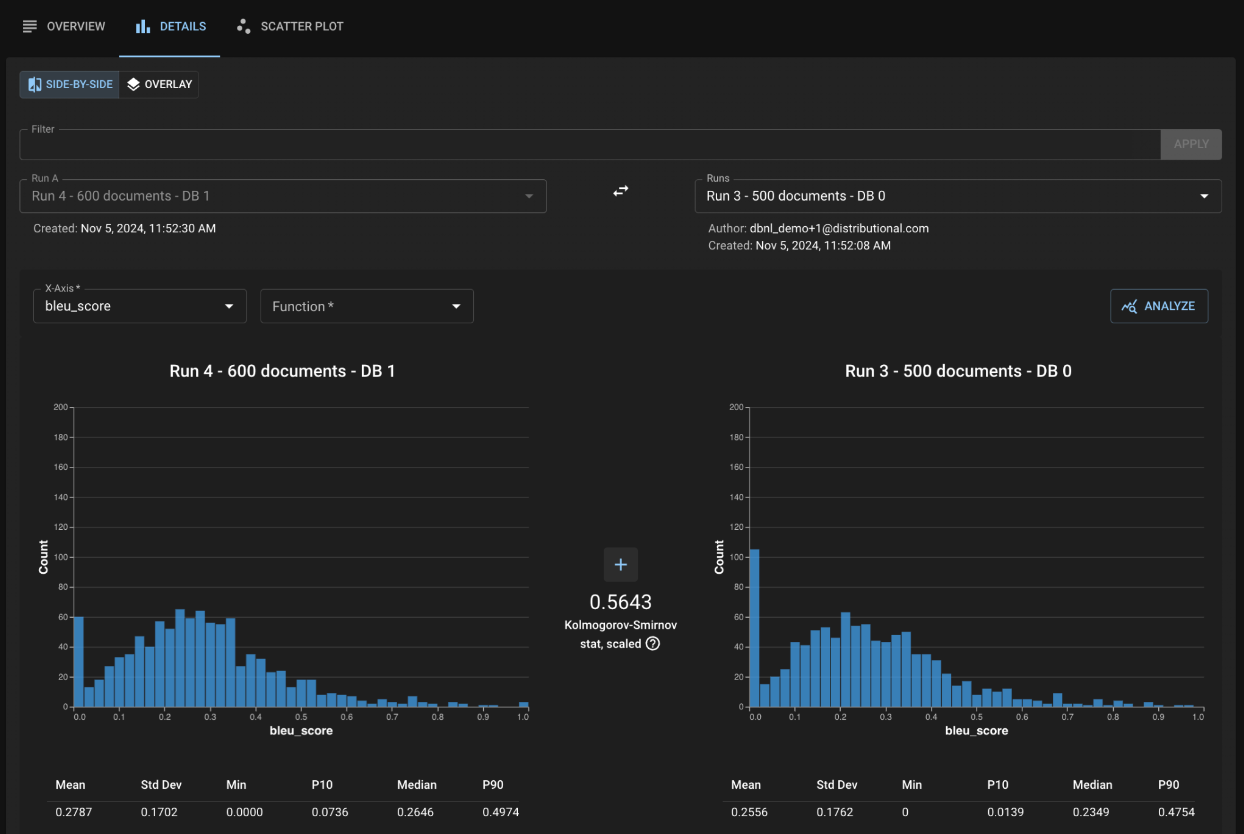

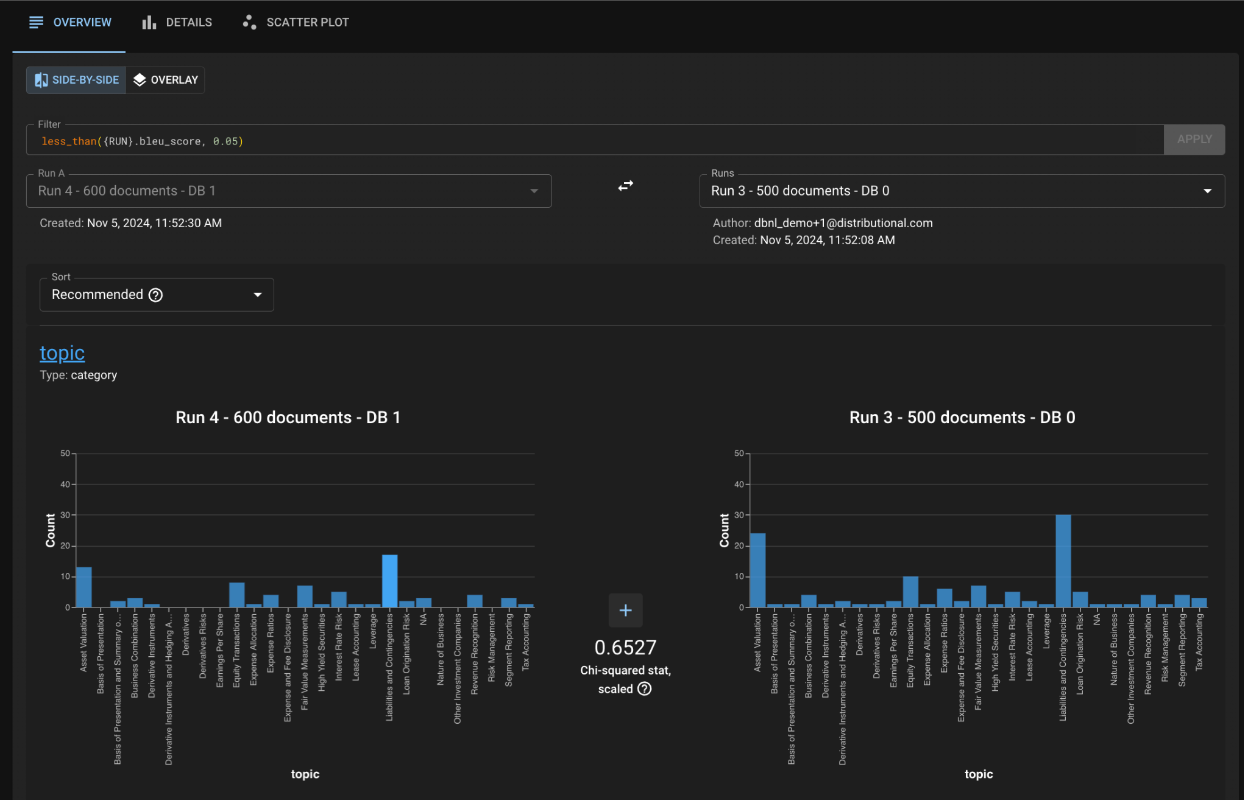

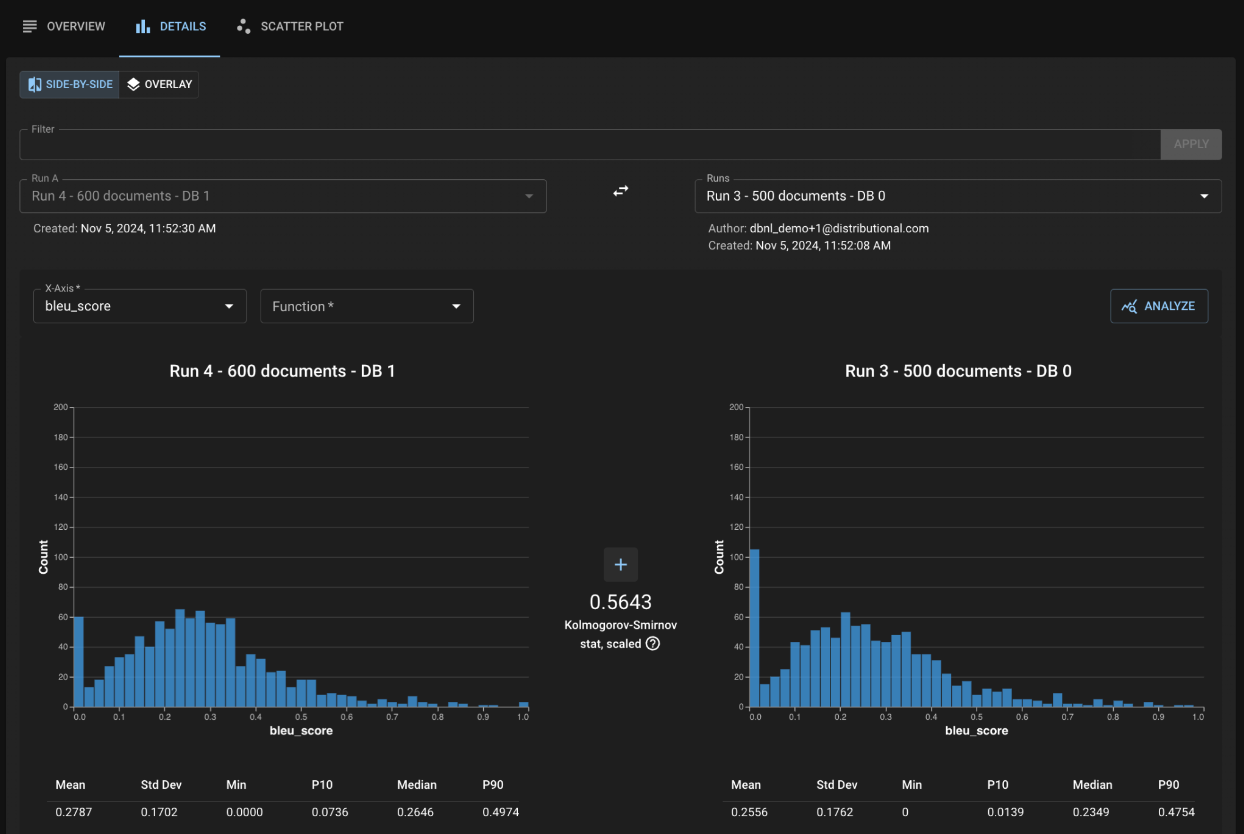

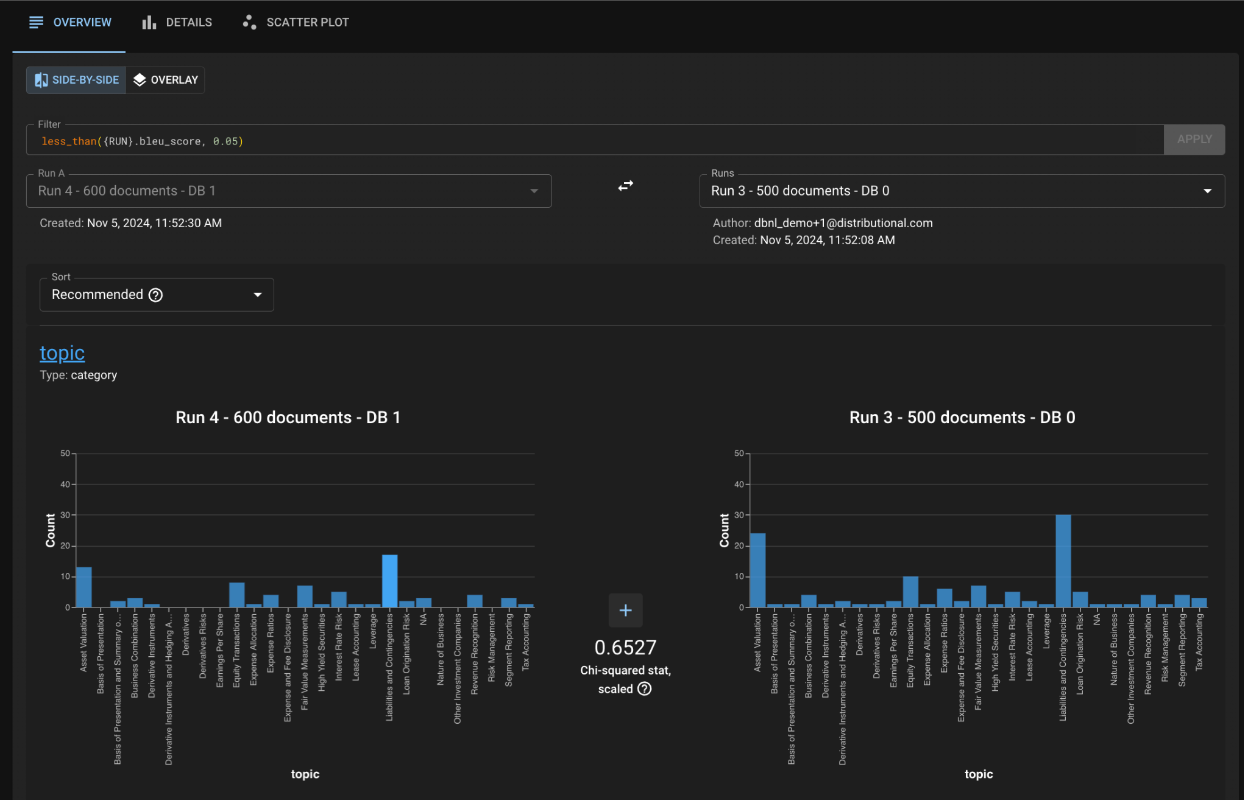

Compare page
