Overview - DBNL

Analyze hidden behavioral signals from production log data to continuously improve your AI products

What is DBNL?

DBNL is an Adaptive Analytics platform designed to discover and track hidden behavioral signals in production AI logs and traces so that product owners can confidently know exactly where and how to improve their AI products over time. The platform gives a detailed snapshot of agent behavior - the interplay and correlations between users, context, tools, models, and metrics. Patterns in behavioral signals are automatically surfaced as Insights that can be investigated and tracked. This empowers AI teams to accelerate the AI data flywheel by pinpointing the signals and specific examples they can use to improve their products with confidence.

Why DBNL?

The AI data flywheel promises better agentic performance over time through post-training optimization on real production data, but not all data is created equal. DBNL helps AI product owners fill the critical gap between high-level monitoring tools (focused on aggregate performance through evals, logging, and tracing) and low-level debugging tools (focused on single-trace observability) to pinpoint hidden behavioral signals and relevant example data for post-training optimization. This gives AI product owners a better understanding of agent and user behavior, so they know exactly where and how to improve AI products in production.

Start analyzing right away with the Quickstart

Who is DBNL for?

Distributional is built for AI product teams looking to understand and improve AI agents that have:

Scale: More than 1,000 traces or logs per day (too many to manually inspect)

Data: Access to full spans from OTEL trace data or similarly rich data for analysis (See our Semantic Convention)

Value: Quantifiable business metrics to track and improve

Understanding: You already monitor aggregate performance (but need richer analysis to know where and how to improve and fix your AI agents)

How do I deploy DBNL?

DBNL is openly distributed and free to deploy within your cloud or on-premises environment, keeping your data safe, secure, and always under your control. Head over to our Quickstart to get started right away.

Analytics-Driven AI Data Flywheel

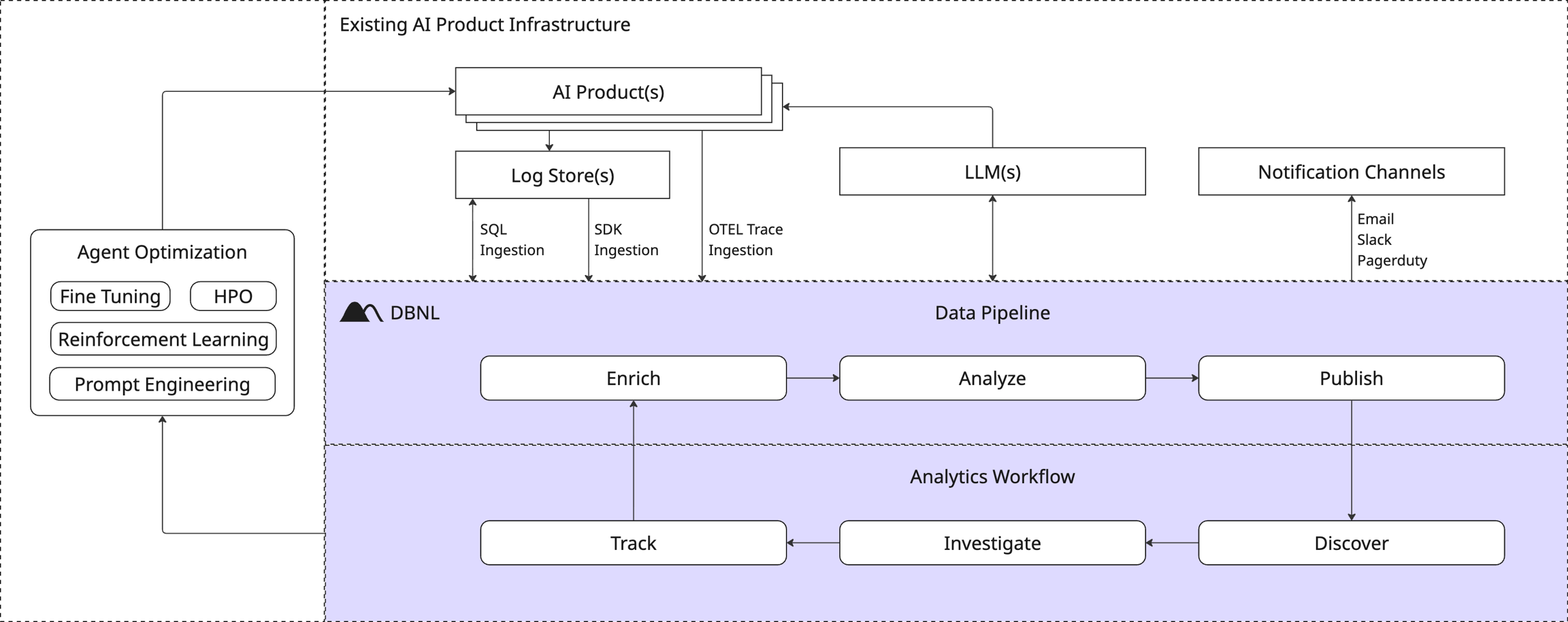

DBNL integrates with your existing AI tools to easily and securely perform analytics for any AI product. The DBNL Data Pipeline ingests, enriches, and analyzes production AI logs and traces, surfacing behavioral signals. These signals are published to Dashboards and as Insights, allowing users to discover, investigate, and track them as part of the DBNL Analytics Workflow. This gives you concrete signals and relevant data to power improvements to your agent as part of an AI data flywheel.

Ingest

Production log data from AI products is published continuously via OTEL Trace Ingestion, pushed in batches via SDK Log Ingestion, or pulled in batches from existing tables via SQL Integration Ingestion.

Enrich

Data is augmented with LLM-as-judge, NLP, and other Metrics provided by DBNL or customized by the user to create a vector of rich behavioral information for every log line or trace, capturing the interplay and correlations between users, context, tools, models, and metrics. These behavioral vectors define a high-dimensional distributional fingerprint of behavior for the AI product rich with behavioral signals.
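As a rough illustration of what a behavioral vector could look like, the sketch below turns one log record into a flat mapping of metric names to numbers. Everything in it is an assumption made for illustration: the `LogRecord` shape, the `enrich` function, and the metric names are hypothetical and are not DBNL's schema, SDK, or built-in Metrics. The keyword-based `is_refusal` check is only a crude stand-in for an LLM-as-judge metric.

```python
from dataclasses import dataclass


@dataclass
class LogRecord:
    # Hypothetical minimal log shape, not DBNL's Semantic Convention.
    user_id: str
    model: str
    tool_calls: list[str]
    prompt: str
    response: str
    latency_ms: float


def word_count(text: str) -> int:
    """Count whitespace-separated tokens."""
    return len(text.split())


def enrich(record: LogRecord) -> dict[str, float]:
    """Return a flat behavioral vector: metric name -> numeric value."""
    prompt_words = word_count(record.prompt)
    response_words = word_count(record.response)
    return {
        "prompt_word_count": float(prompt_words),
        "response_word_count": float(response_words),
        # Guard against empty prompts to avoid division by zero.
        "response_to_prompt_ratio": response_words / max(prompt_words, 1),
        "tool_call_count": float(len(record.tool_calls)),
        "latency_ms": record.latency_ms,
        # Crude keyword heuristic standing in for a judged metric.
        "is_refusal": float(record.response.lower().startswith("i can't")),
    }


record = LogRecord(
    user_id="u1",
    model="model-a",
    tool_calls=["search"],
    prompt="What is the refund policy?",
    response="Refunds are available within 30 days.",
    latency_ms=412.0,
)
vector = enrich(record)
```

Stacking one such vector per log line or trace gives a table of metrics that can be summarized as distributions, which is the sense in which the text above speaks of a distributional fingerprint.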

Analyze

Unsupervised learning and statistical techniques are applied to the distributional fingerprint daily to discover Insights: patterns in behavior tied to filtered subsets of logs.
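To make the idea of "patterns in behavior tied to filtered subsets of logs" concrete, here is a minimal sketch of one simple statistical technique: scanning single-attribute filters and flagging subsets whose metric mean deviates strongly from the overall mean. This is not DBNL's actual analysis method, which the text does not specify. The function `find_segment_insights`, its parameters, and the sample rows are all hypothetical.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, pstdev


def find_segment_insights(rows, attributes, metric, z_threshold=2.0, min_size=5):
    """Flag single-attribute subsets whose metric mean deviates from the overall mean."""
    values = [row[metric] for row in rows]
    overall_mean = mean(values)
    overall_std = pstdev(values)
    if overall_std == 0:
        return []  # No variation, so no subset can stand out.

    # Group metric values by every (attribute, value) filter.
    groups = defaultdict(list)
    for row in rows:
        for attr in attributes:
            groups[(attr, row[attr])].append(row[metric])

    insights = []
    for (attr, value), segment in groups.items():
        if len(segment) < min_size:
            continue  # Too few rows to say anything meaningful.
        # z-score of the segment mean under the overall distribution.
        z = (mean(segment) - overall_mean) / (overall_std / sqrt(len(segment)))
        if abs(z) >= z_threshold:
            insights.append({
                "filter": f"{attr} == {value!r}",
                "size": len(segment),
                "segment_mean": mean(segment),
                "z": z,
            })
    # Strongest deviations first.
    return sorted(insights, key=lambda item: abs(item["z"]), reverse=True)


# Made-up data: calls using the "sql" tool are much slower than "search" calls.
rows = (
    [{"tool": "search", "model": "a", "latency_ms": 100.0 + i} for i in range(20)]
    + [{"tool": "sql", "model": "a", "latency_ms": 300.0 + i} for i in range(10)]
)
insights = find_segment_insights(rows, ["tool", "model"], "latency_ms")
for insight in insights:
    print(insight["filter"], round(insight["z"], 2))
```

On this made-up data the scan flags the two `tool` filters and ignores `model`, since every row shares the same model and that subset's mean equals the overall mean. A real system would need to handle many metrics, combinations of filters, and multiple-comparison effects, none of which this sketch attempts.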

Publish

Dashboards are updated and new Insights are generated to represent newly observed and discovered behavior from the latest production data.

Discover

Product owners review generated Insights and Dashboards to find the signals with the greatest potential product impact.

Investigate

Product owners explore and refine evidence-based behavioral signals by examining metrics and inspecting the raw Logs.

Optimize and Repeat

The discovered signals and the relevant examples surfaced with them can be used for post-training optimization such as fine-tuning, reinforcement learning, prompt/context engineering, hyperparameter optimization, or any other improvement to the underlying agent as part of an Analytics-Driven AI Data Flywheel.

As improvements to the agent are made and new production data is ingested, the workflow adapts automatically by using tracked Metrics and Segments to guide deeper and more customized analysis over time.

Next Steps

Ready to start using DBNL? Head straight to our Quickstart to get set up on the platform and start testing your AI products right away for free.

Want to learn more about the workflow? Check out the Adaptive Analytics Flywheel.

Want to understand more about the platform? Check out the Architecture, Deployment options, and other aspects of the Platform.
