Model Connections

How to hook up LLMs to DBNL

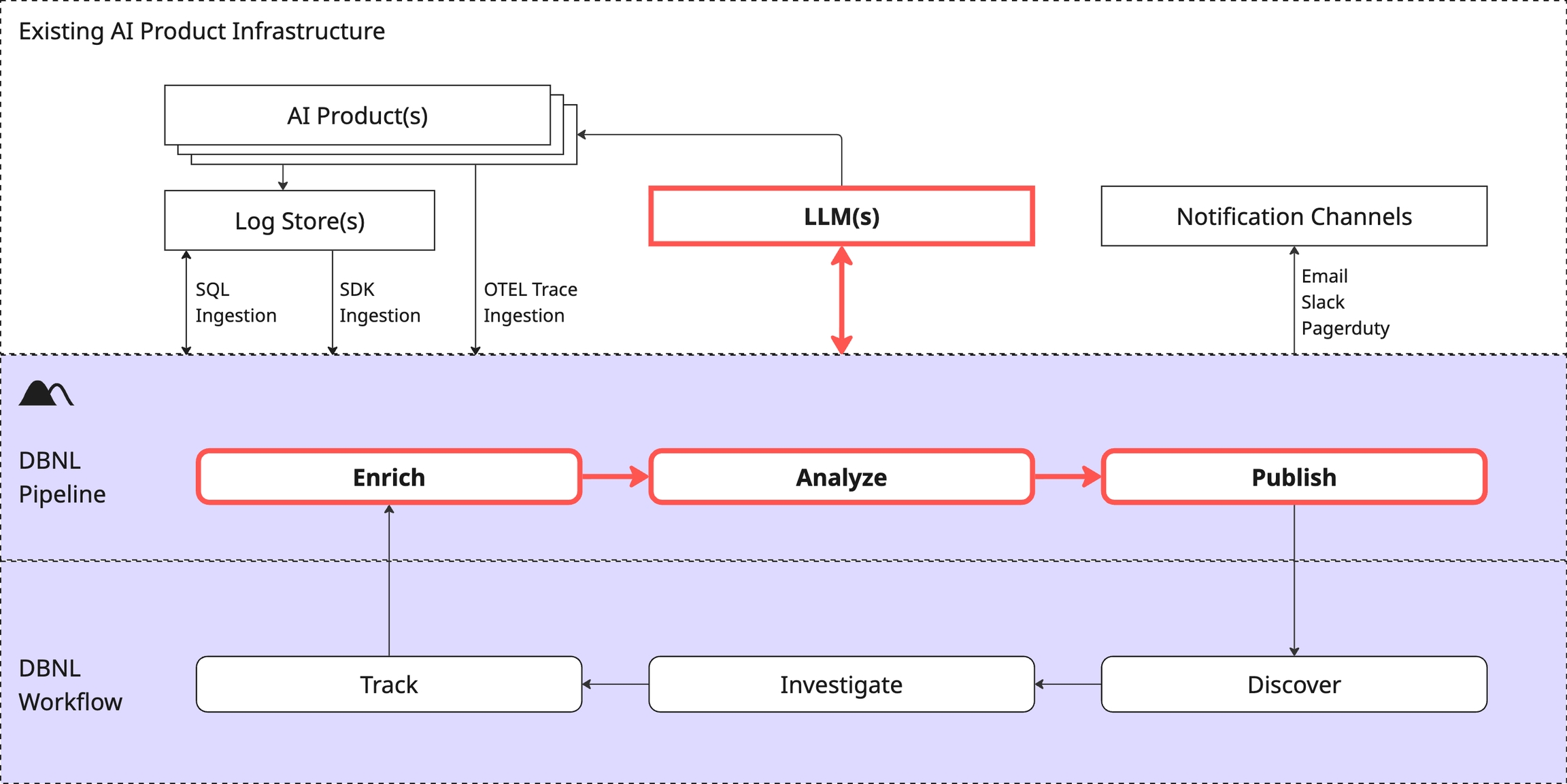

Why does DBNL require a Model Connection?

Model Connections are how DBNL interfaces with LLMs, which each step of the DBNL Data Pipeline requires in order to function. A Model Connection enables DBNL to:

Compute LLM-as-judge Metrics as part of the enrich step.

Perform certain unsupervised analytics processes as part of the analysis step.

Translate surfaced behavioral signals into human readable Insights as part of the publish step.

The Model Connection will be called many times per day per project (for every LLM-as-judge metric, for analysis steps, for Insight generation, etc.). We recommend creating a dedicated API key for your DBNL Model Connection so you can monitor and budget its usage separately. See Types of Model Connections for the tradeoffs between approaches.

Types of Model Connections

Fundamentally, a Model Connection needs to expose an LLM chat completion interface that is accessible by your DBNL deployment. It can be:

An externally managed service (e.g. together.ai, OpenAI, etc)

A cloud managed service that is part of your VPC (e.g. Bedrock, Vertex, etc)

A locally managed deployment (e.g. a cluster of NVIDIA NIMs running in your DBNL k8s cluster as part of your deployment)
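For readers unfamiliar with the term, here is a minimal sketch of what a chat completion request looks like in the widely used OpenAI-style format. It only builds the request and does not send it. The base URL, key, and model name are placeholders, and the helper name `build_chat_request` is hypothetical; this does not show how DBNL itself issues requests, and providers such as Bedrock or Vertex use their own API shapes.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: substitute your provider's base URL, key, and model name.
request = build_chat_request(
    base_url="https://api.together.xyz/v1",
    api_key="YOUR_API_KEY",
    model="your-model-name",
    prompt="Reply with the single word: ok",
)
# urllib.request.urlopen(request) would send it; omitted so the sketch has no side effects.
```

Any of the three deployment types works as long as the DBNL deployment can reach an endpoint of this kind over the network.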

There are pros and cons to each of these approaches:

Externally managed service (together.ai, OpenAI, etc)

Fast and easy to set up (just provide keys)

Model and scaling flexibility

Requires sending data outside of your cloud environment

Higher cost, on-demand pricing model

Cloud managed service (Bedrock, Vertex, etc)

Data stays within your cloud provider

Often managed by another team within the organization

Can be higher cost than locally running models

Usage and rate limits are typically shared across the organization

Locally managed deployment (NVIDIA NIMs in k8s cluster)

Data stays within your local deployment

Cheaper than a managed service

Maximum control of cost vs timing tradeoffs

Requires access to GPU resources

Can require local admin and debugging

Recommended Model Connections

The following models are known to work well for LLM-as-judge, analysis, and Insight generation.

We recommend using a similar "mid-size" model that trades off speed, cost, and quality well.

Creating a Model Connection

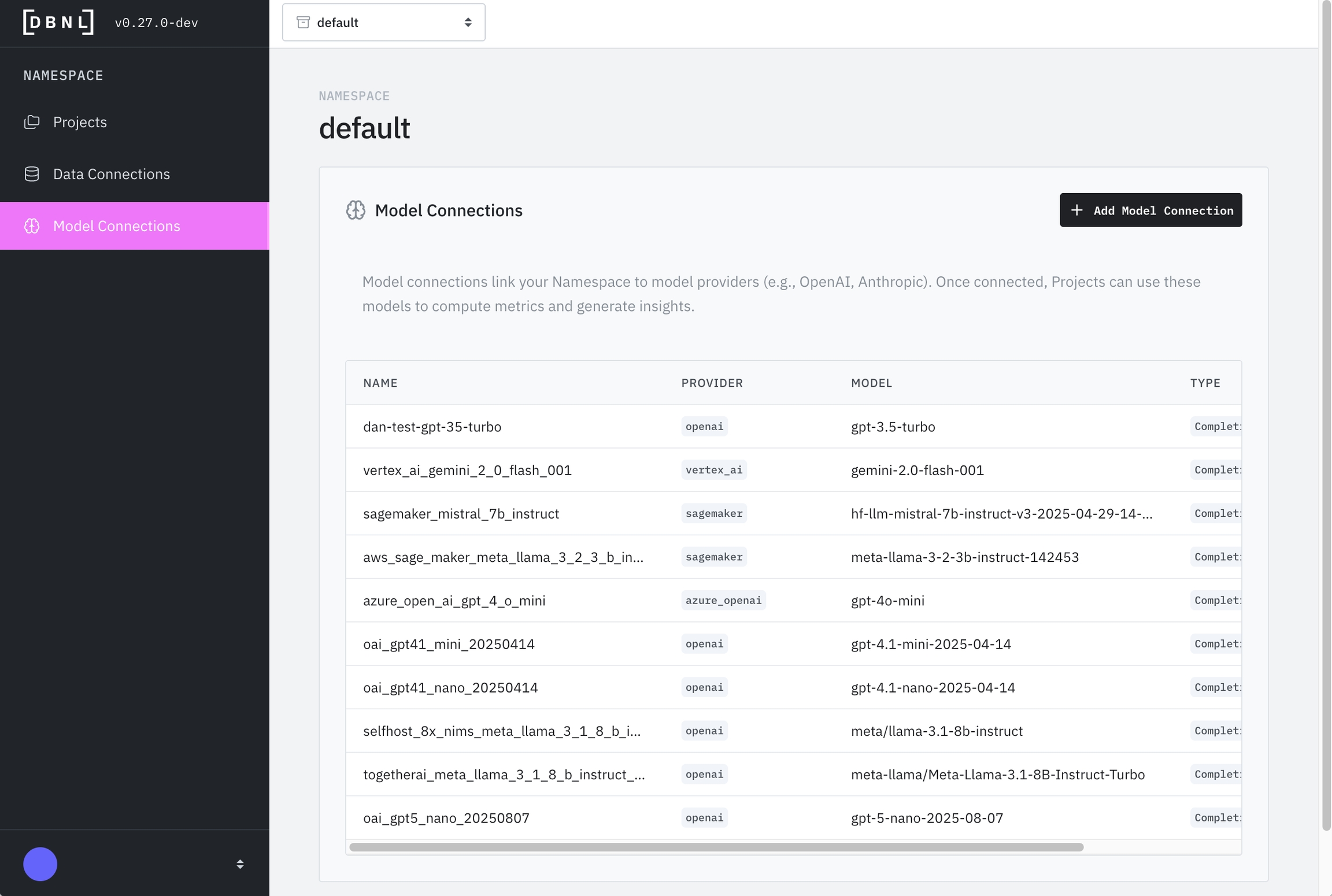

Model Connections are defined at the Namespace level of an Organization and can be used by any Project within the Namespace. For convenience, a new Model Connection can also be created as part of the Project Creation flow.

A Model Connection has the following attributes:

Name (required): How the Model Connection is referenced when setting a default Model Connection for a project or LLM-as-judge Metric.

Description (optional): Human readable description of the connection for reference.

Model (required): The model name to be used as part of the API call (e.g. gpt-3.5-turbo, gemini-2.0-flash-001). See the documentation for your model provider for more details.

Provider (required): One of:

AWS Bedrock: Managed AWS service for foundation models.

AWS Sagemaker: Platform to build, train, and deploy machine learning models by AWS (not recommended for production DBNL deployments).

Azure OpenAI: Microsoft service providing OpenAI models via Azure cloud.

Google Gemini: Google’s AI model for chat, code, and reasoning.

Google Vertex AI: Managed GCP service for building and deploying models.

NVIDIA NIM: NVIDIA microservices for deploying optimized AI models easily.

OpenAI: Managed service for advanced language and reasoning models.

OpenAI-compatible: Any provider that exposes an "OpenAI-like" API, like together.ai.

Configuration Parameters (required): Depending on the provider selected, you may need to provide additional required information like Access Key IDs, Secret Access Keys, preferred regions, endpoints/URLs, etc.
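As a way to keep these attributes straight when planning a connection, the sketch below models them as a small Python dataclass with basic required-field checks. The class name `ModelConnectionSpec`, its field names, and the example values are all hypothetical; this is not the DBNL SDK or its API, only an illustration of the attributes listed above.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Provider names as listed in this section.
PROVIDERS = {
    "AWS Bedrock", "AWS Sagemaker", "Azure OpenAI", "Google Gemini",
    "Google Vertex AI", "NVIDIA NIM", "OpenAI", "OpenAI-compatible",
}


@dataclass
class ModelConnectionSpec:
    """Hypothetical container mirroring the Model Connection attributes."""
    name: str                      # required
    model: str                     # required
    provider: str                  # required, one of PROVIDERS
    config: Dict[str, str] = field(default_factory=dict)  # provider-specific parameters
    description: Optional[str] = None                      # optional

    def __post_init__(self) -> None:
        if not self.name:
            raise ValueError("name is required")
        if not self.model:
            raise ValueError("model is required")
        if self.provider not in PROVIDERS:
            raise ValueError(f"unknown provider: {self.provider!r}")


# Example values are placeholders.
spec = ModelConnectionSpec(
    name="judge-default",
    model="gpt-3.5-turbo",
    provider="OpenAI",
    config={"api_key": "YOUR_API_KEY"},
    description="Default connection for LLM-as-judge metrics",
)
```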

Configuration Parameters by Provider

Different providers require different configuration parameters:

AWS Bedrock

AWS Access Key ID: Your AWS IAM access key with Bedrock permissions

AWS Secret Access Key: Corresponding secret key

AWS Region: Region where Bedrock is available (e.g., us-east-1, us-west-2)

AWS Sagemaker

AWS Access Key ID: Your AWS IAM access key

AWS Secret Access Key: Corresponding secret key

Endpoint URL: Your Sagemaker endpoint URL

AWS Region: Region where your endpoint is deployed

Azure OpenAI

API Key: Your Azure OpenAI resource key

Endpoint URL: Your Azure OpenAI endpoint (e.g., https://your-resource.openai.azure.com/)

API Version: Azure OpenAI API version (e.g., 2024-02-01)

Google Gemini

API Key: Your Google AI Studio API key

Google Vertex AI

Project ID: Your GCP project ID

Region: GCP region (e.g., us-central1)

Service Account JSON: Path to service account credentials file (for authentication)

NVIDIA NIM

Endpoint URL: URL where your NIM service is deployed (e.g., http://nim-service.default.svc.cluster.local:8000)

API Key: (Optional) If authentication is enabled on your NIM deployment

OpenAI

API Key: Your OpenAI API key from platform.openai.com

OpenAI-compatible

API Key: API key from your provider

Base URL: Provider's API endpoint (e.g., https://api.together.xyz/v1 for together.ai)
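Before filling in the form, it can help to check that you have every value a provider needs. The sketch below encodes the parameters above as a lookup table and reports which ones are missing. The grouping by provider is inferred from the parameter descriptions, and the snake_case key names and the `missing_params` helper are hypothetical; DBNL's actual field identifiers may differ.

```python
from typing import Dict, List

# Required parameters per provider, using illustrative snake_case key names.
REQUIRED_PARAMS: Dict[str, List[str]] = {
    "AWS Bedrock": ["aws_access_key_id", "aws_secret_access_key", "aws_region"],
    "AWS Sagemaker": ["aws_access_key_id", "aws_secret_access_key", "endpoint_url", "aws_region"],
    "Azure OpenAI": ["api_key", "endpoint_url", "api_version"],
    "Google Gemini": ["api_key"],
    "Google Vertex AI": ["project_id", "region", "service_account_json"],
    "NVIDIA NIM": ["endpoint_url"],  # api_key is optional for NIM
    "OpenAI": ["api_key"],
    "OpenAI-compatible": ["api_key", "base_url"],
}


def missing_params(provider: str, config: Dict[str, str]) -> List[str]:
    """Return the required keys that are absent or empty in config."""
    required = REQUIRED_PARAMS[provider]  # raises KeyError for an unknown provider
    return [key for key in required if not config.get(key)]


# Example: an Azure OpenAI config that is missing its API version.
azure_missing = missing_params(
    "Azure OpenAI",
    {"api_key": "YOUR_API_KEY", "endpoint_url": "https://your-resource.openai.azure.com/"},
)
```

Here `azure_missing` is `["api_version"]`, while a NIM config with only an endpoint URL yields an empty list because its API key is optional.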

Editing a Model Connection

A Model Connection can be edited or deleted by clicking on the "Model Connections" tab on the sidebar of the Namespace landing page.

Debugging a Model Connection

A Model Connection can be tested by navigating to the specific Model Connection as above and clicking on the "Validate" button. This will send a simple request to the endpoint and inform you if it was able to complete the request.
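If validation fails, it can be useful to reproduce a similar check outside DBNL. The following is a rough sketch of such a check for an OpenAI-style endpoint, not a description of what the "Validate" button does internally. The function `validate_connection`, the injectable `send` transport, and the placeholder URL, key, and model name are all assumptions made for illustration; the transport is faked here so the example runs without network access.

```python
import json
from typing import Callable, Tuple

# A transport takes (url, headers, body) and returns (status_code, response_body).
Transport = Callable[[str, dict, bytes], Tuple[int, bytes]]


def validate_connection(base_url: str, api_key: str, model: str, send: Transport) -> Tuple[bool, str]:
    """Send one minimal chat completion request and report whether it succeeded."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": "ping"}],
    }).encode("utf-8")
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    try:
        status, raw = send(base_url.rstrip("/") + "/chat/completions", headers, body)
    except OSError as exc:  # connection refused, DNS failure, timeout, ...
        return False, f"request failed: {exc}"
    if status != 200:
        return False, f"unexpected HTTP status {status}"
    try:
        choices = json.loads(raw).get("choices")
    except (ValueError, AttributeError):
        return False, "response was not a JSON object"
    if not choices:
        return False, "response contained no choices"
    return True, "ok"


def fake_send(url: str, headers: dict, body: bytes) -> Tuple[int, bytes]:
    """Stand-in transport that mimics a successful OpenAI-style response."""
    reply = {"choices": [{"message": {"role": "assistant", "content": "pong"}}]}
    return 200, json.dumps(reply).encode("utf-8")


ok, detail = validate_connection(
    "https://api.together.xyz/v1", "YOUR_API_KEY", "your-model-name", fake_send
)
```

Replacing `fake_send` with a real HTTP call separates the common failure modes: a network error points at reachability from your environment, a non-200 status usually points at credentials or the model name, and a malformed body suggests the endpoint is not chat-completion compatible.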

Next Steps

Ready to send data to your project? Start ingesting data into your project using your defined Data Connection to kick off the Adaptive Analytics Workflow.

Want to understand more about the platform? Check out the Architecture, Deployment options, and other aspects of the Platform.
