Data Pipeline

How log data becomes behavioral signals

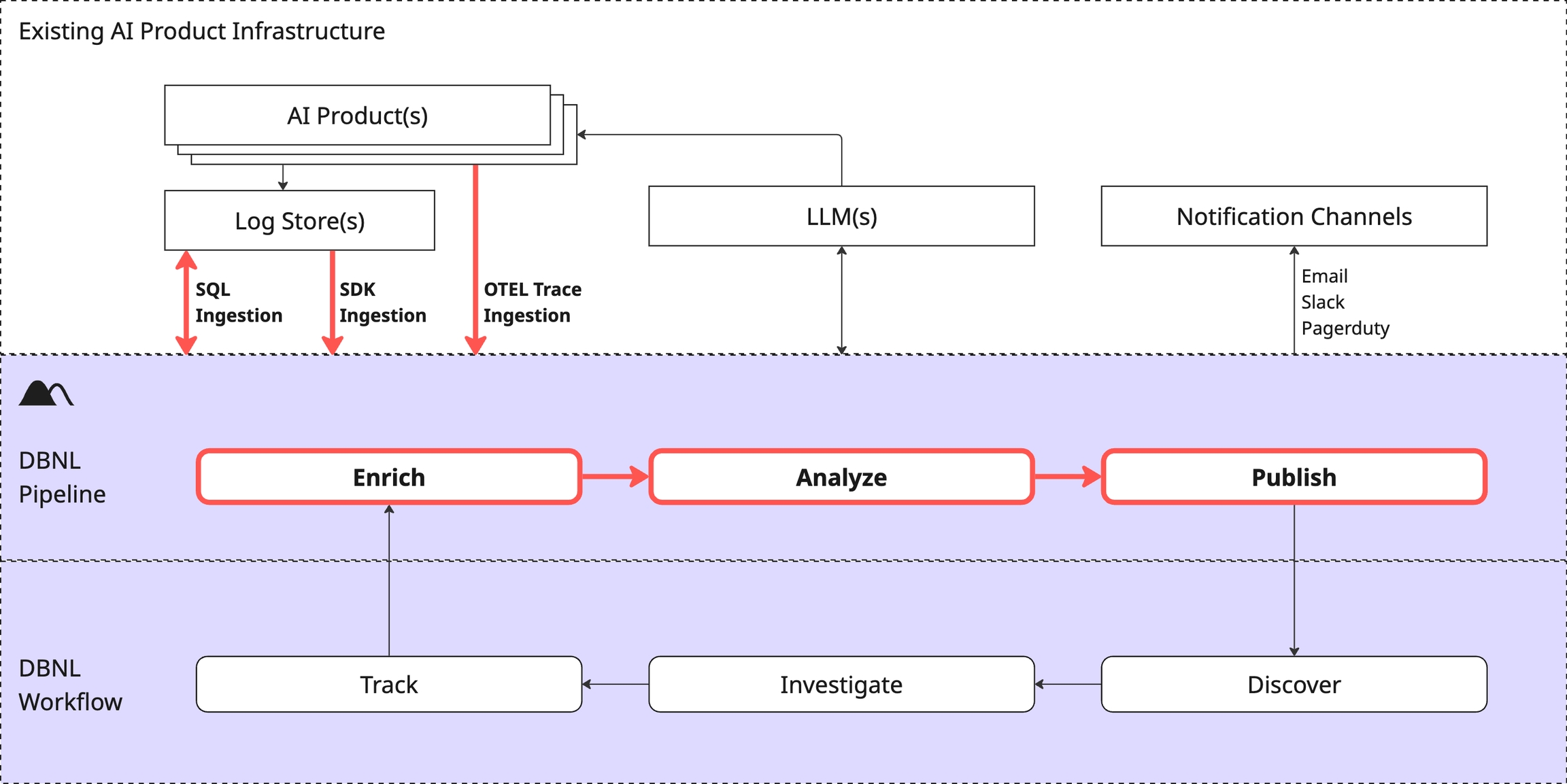

The Data Pipeline is how DBNL converts raw production AI log data into actionable Insights and Dashboards for each Project, and stores the processed data in the Data Model for future analysis.

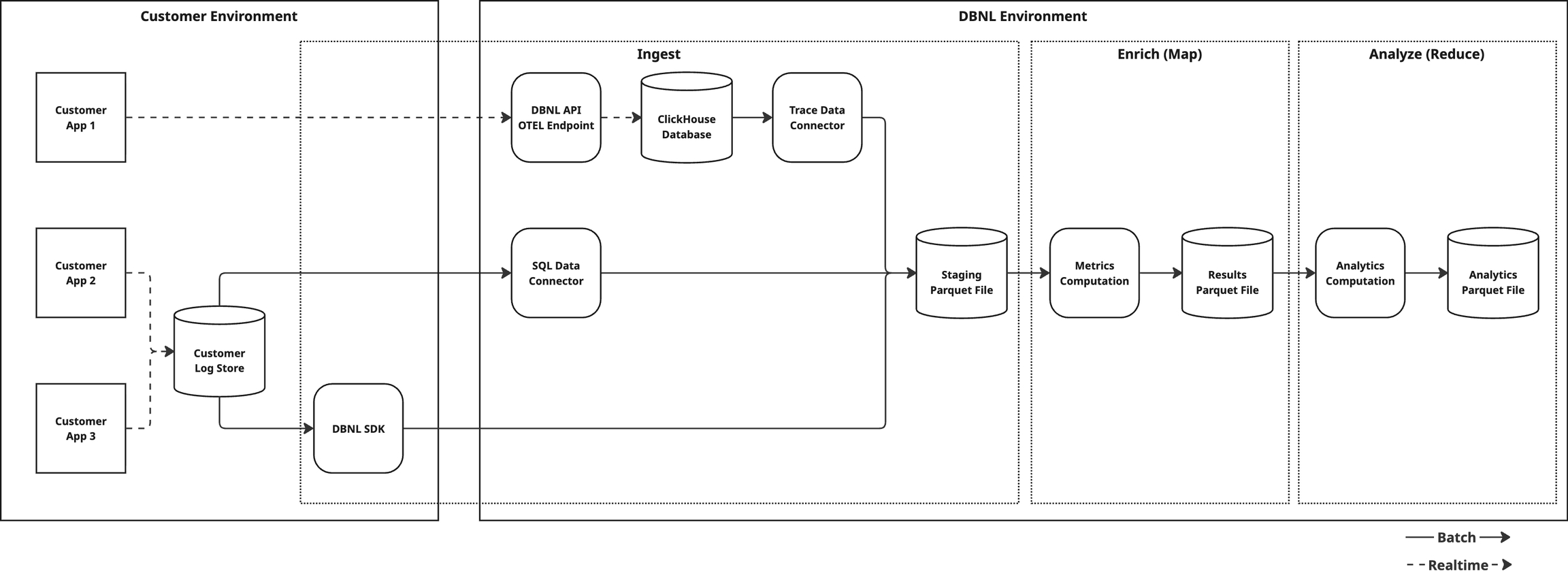

The Data Pipeline runs as production log data is ingested into your DBNL Deployment. With SDK Log Ingestion and SQL Integration Ingestion, a run starts when the data is ingested; with OTEL Trace Ingestion, a run starts daily at midnight UTC.
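To make the two trigger schedules concrete, here is a minimal sketch that computes when a run would start for a given ingestion time. The method labels (`"sdk"`, `"sql"`, `"otel"`) and the function `next_pipeline_run` are hypothetical and only model the schedule described above; they are not part of any DBNL API.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical labels for the ingestion methods named in the docs.
IMMEDIATE_METHODS = {"sdk", "sql"}  # SDK Log / SQL Integration: run at ingestion
DAILY_METHODS = {"otel"}            # OTEL Trace: run daily at midnight UTC


def next_pipeline_run(method: str, ingested_at: datetime) -> datetime:
    """Return when a Data Pipeline Run would start for data ingested at `ingested_at`.

    Sketch only: models the documented schedule, not DBNL's actual scheduler.
    """
    if ingested_at.tzinfo is None:
        raise ValueError("ingested_at must be timezone-aware")
    ingested_utc = ingested_at.astimezone(timezone.utc)
    if method in IMMEDIATE_METHODS:
        # SDK and SQL Integration ingestion trigger a run right away.
        return ingested_utc
    if method in DAILY_METHODS:
        # OTEL Trace ingestion waits for the following midnight UTC.
        next_day = ingested_utc.date() + timedelta(days=1)
        return datetime(next_day.year, next_day.month, next_day.day, tzinfo=timezone.utc)
    raise ValueError(f"unknown ingestion method: {method!r}")


# Usage: OTEL data ingested mid-afternoon UTC is processed at the next midnight UTC.
print(next_pipeline_run("otel", datetime(2024, 5, 1, 15, 30, tzinfo=timezone.utc)))
```

Converting to UTC before taking the date matters: data ingested late in the evening in a western time zone may already belong to the next UTC day.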

A DBNL Data Pipeline Run performs the following tasks:

Ingest: Raw production log data is flattened into Columns. When log data follows the DBNL Semantic Convention, certain Columns carry rich semantic meaning, which allows deeper Insights to be generated.

Analyze: Various unsupervised learning techniques are applied to the enriched log data to discover behavioral signals, such as shifts, segments, or outliers in behavior, which are surfaced as Insights.

Publish: Insights and updated charts are published to Dashboards for consumption by the user.
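The flattening in the Ingest step can be illustrated with a minimal sketch that turns a nested log record into a single level of named Columns. The `.`-joined naming scheme, the `flatten_log` function, and the nested `metadata` fields in the example are assumptions for illustration; DBNL's actual flattening rules may differ.

```python
from typing import Any


def flatten_log(log: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten a nested log record into one level of named Columns.

    Nested keys are joined with '.', e.g. {"a": {"b": 1}} -> {"a.b": 1}.
    The '.' separator is an assumption for illustration.
    """
    columns: dict[str, Any] = {}
    for key, value in log.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse into nested objects, extending the column name.
            columns.update(flatten_log(value, name))
        else:
            # Leaves (strings, numbers, lists, None) become Column values.
            columns[name] = value
    return columns


# Usage with a hypothetical log; the metadata fields are illustrative only.
raw = {
    "input": "Summarize this ticket.",
    "output": "The customer reports a login failure.",
    "timestamp": "2024-05-01T15:30:00Z",
    "metadata": {"model": "example-model", "usage": {"tokens": 42}},
}
print(flatten_log(raw))
```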

Data Model

Columns

A single log captures the behavior of a production AI product. Data from each log is flattened into multiple Columns, following the DBNL Semantic Convention whenever possible. Every log must include the following Columns:

input: The text input to the LLM.

output: The text response from the LLM.

timestamp: The UTC timestamp of the LLM call.
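The required-Column rules above can be sketched as a simple validation check on a flattened log. This assumes the log is a Python dict and that `timestamp` is held as a timezone-aware `datetime`; the function `validate_log` and its error messages are hypothetical, not DBNL's own validation.

```python
from datetime import datetime, timedelta, timezone
from typing import Any

REQUIRED_COLUMNS = ("input", "output", "timestamp")


def validate_log(columns: dict[str, Any]) -> list[str]:
    """Return a list of problems with a flattened log; an empty list means valid."""
    problems = [
        f"missing required column: {name}"
        for name in REQUIRED_COLUMNS
        if name not in columns
    ]
    for name in ("input", "output"):
        # input and output must be text.
        if name in columns and not isinstance(columns[name], str):
            problems.append(f"{name} must be text")
    if "timestamp" in columns:
        ts = columns["timestamp"]
        # Require an aware datetime with a zero UTC offset; naive datetimes
        # return None from utcoffset() and are rejected.
        if not isinstance(ts, datetime) or ts.utcoffset() != timedelta(0):
            problems.append("timestamp must be a UTC datetime")
    return problems


# Usage: a valid log produces no problems.
log = {"input": "hi", "output": "hello", "timestamp": datetime(2024, 5, 1, tzinfo=timezone.utc)}
print(validate_log(log))
```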

Other Columns with known semantic meaning are defined in the DBNL Semantic Convention. Using these known Column names lets DBNL generate better Insights.

Metrics

Metrics are computed from Columns and appended as new Columns for each log.
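As an illustration of how a Metric becomes a new Column, here is a minimal sketch that applies per-log metric functions and appends each result under its own name. The `append_metrics` function and the two example metrics are hypothetical; they are not metrics that DBNL is documented to compute.

```python
from typing import Any, Callable

# A metric maps one log's Columns to a value (hypothetical structure).
Metric = Callable[[dict[str, Any]], Any]


def append_metrics(columns: dict[str, Any], metrics: dict[str, Metric]) -> dict[str, Any]:
    """Return a copy of `columns` with one new Column per metric."""
    enriched = dict(columns)  # leave the original log untouched
    for name, compute in metrics.items():
        if name in enriched:
            raise ValueError(f"metric {name!r} would overwrite an existing Column")
        # Each metric reads the original Columns and its result becomes a new Column.
        enriched[name] = compute(columns)
    return enriched


# Illustrative metrics computed from the required text Columns.
EXAMPLE_METRICS: dict[str, Metric] = {
    "output_word_count": lambda c: len(c["output"].split()),
    "output_longer_than_input": lambda c: len(c["output"]) > len(c["input"]),
}

print(append_metrics({"input": "hi", "output": "hello there"}, EXAMPLE_METRICS))
```

Refusing to overwrite an existing Column keeps raw log data and derived Metrics clearly separated.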

Segments

Segments represent filters on the Columns of logs.
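Since a Segment is a filter on Columns, it can be sketched as a named predicate applied to each log. The `Segment` class, its `apply` method, and the 50-word threshold in the example are hypothetical choices for illustration, not DBNL's Segment definition.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Segment:
    """A named filter over a log's Columns (hypothetical structure)."""

    name: str
    predicate: Callable[[dict[str, Any]], bool]

    def apply(self, logs: list[dict[str, Any]]) -> list[dict[str, Any]]:
        # Keep only the logs whose Columns satisfy the predicate.
        return [log for log in logs if self.predicate(log)]


# Example: logs whose output is longer than 50 words (illustrative threshold).
long_outputs = Segment("long outputs", lambda c: len(c["output"].split()) > 50)

logs = [
    {"input": "q1", "output": "short answer"},
    {"input": "q2", "output": "word " * 60},
]
print([log["input"] for log in long_outputs.apply(logs)])
```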
