Hello World (Sentiment Classifier)

In this tutorial, we demonstrate core Distributional usage, including data submission and test execution, on a tweet sentiment classifier.

The data files required for this tutorial are available in the following file.

Objective

The objective of this tutorial is to demonstrate how to use Distributional, often abbreviated dbnl. You will write and execute tests that provide confidence that the underlying model is consistent with its previous version. At the end of this tutorial, you will have learned about the following elements of successful dbnl usage:

Define data schema - Create a run config that tells dbnl which columns will be sent and how those columns relate to each other.

Once this work is done, the same run config will be reused during consistent integration testing.

Push data to dbnl - Use the Python SDK to report runs to dbnl.

Please contact your Applied Customer Engineer to discuss integrations with your data storage platform.

Design consistent integration tests - Review the data in dbnl to design tests that check the consistency of app behavior.

Common testing strategies for consistency include:

Confirming that distributions have the same statistics,

Confirming that distributions are overall similar,

Confirming that specific results have not deviated too severely.

Execute and review test sessions - After test sessions are completed, you can inspect individual assertions to learn more about the current app behavior.

Setting the scene

As owners of the sentiment classifier, you might want to improve the model's performance, or you might want to replace the model with a more efficient one. In both cases, it is important to ensure that the new model functions as expected. This tutorial presents a change from a classic rule-based sentiment classifier to one powered by LLMs.

To do this, you identify a fixed benchmark dataset of 10,000 tweets to ensure that any changes observed between models are caused by the models and not by the data.

In this example, you fetch these tweets from Snowflake. The fetch_tweets function returns the tweets with the columns tweet_id, tweet_content, and ground_truth_sentiment.
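The original Snowflake query is not reproduced in this tutorial. As a minimal sketch, assuming fetch_tweets accepts any DB-API 2.0 connection (for example, one from the Snowflake Python connector) and that the benchmark lives in a table named tweets_benchmark (a hypothetical name), it could look like the following. Ordering by tweet_id keeps the benchmark fixed and deterministic from run to run; note that pandas may emit a warning for connections other than SQLite or SQLAlchemy.

```python
import sqlite3

import pandas as pd

# Hypothetical table name; substitute the table that holds your benchmark.
BENCHMARK_TABLE = "tweets_benchmark"


def fetch_tweets(conn, limit: int = 10_000) -> pd.DataFrame:
    """Fetch a fixed, deterministically ordered benchmark of tweets.

    `conn` is assumed to be a DB-API 2.0 connection. Ordering by tweet_id
    means every call returns the same tweets in the same order.
    """
    query = (
        "SELECT tweet_id, tweet_content, ground_truth_sentiment "
        f"FROM {BENCHMARK_TABLE} ORDER BY tweet_id LIMIT {int(limit)}"
    )
    tweets = pd.read_sql_query(query, conn)
    # Snowflake reports unquoted identifiers in upper case (TWEET_ID, ...);
    # normalize so downstream code can rely on lower-case column names.
    tweets.columns = [c.lower() for c in tweets.columns]
    return tweets


if __name__ == "__main__":
    # An in-memory SQLite database stands in for Snowflake in this sketch.
    demo = sqlite3.connect(":memory:")
    demo.execute(
        f"CREATE TABLE {BENCHMARK_TABLE} "
        "(tweet_id INTEGER, tweet_content TEXT, ground_truth_sentiment TEXT)"
    )
    demo.executemany(
        f"INSERT INTO {BENCHMARK_TABLE} VALUES (?, ?, ?)",
        [(2, "meh", "neutral"), (1, "love it", "positive"), (3, "awful", "negative")],
    )
    print(fetch_tweets(demo, limit=2))
```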

Define the data schema (run_config)

You define the schema of the columns in the test data through your run configuration. This ensures that the data is in the correct format before it is sent to dbnl.

Note that in this example the tweets are indexed by an arbitrary ordering, referred to as the tweet_id. Because the tweet_id identifies the same tweet in every run, you can use it to match individual results between runs, which enables tests that compare results one by one.
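The run config itself is created through the dbnl SDK, and its exact format is not reproduced here. The sketch below only illustrates the idea with a plain Python dict: the column names come from this tutorial, while the dict layout, the type names, and the unique_id key are hypothetical. The helper checks a results DataFrame against that schema locally, including that tweet_id is unique so results can be matched between runs.

```python
import pandas as pd
from pandas.api import types as ptypes

# Hypothetical schema layout; the real run config format is defined by the dbnl SDK.
RUN_CONFIG_SKETCH = {
    "columns": [
        {"name": "tweet_id", "type": "int"},
        {"name": "positive_sentiment", "type": "float"},
        {"name": "neutral_sentiment", "type": "float"},
        {"name": "negative_sentiment", "type": "float"},
        {"name": "latency", "type": "float"},
    ],
    # Column(s) that identify the same result across runs.
    "unique_id": ["tweet_id"],
}

# Maps the schema's type names to pandas dtype checks.
_TYPE_CHECKS = {
    "int": ptypes.is_integer_dtype,
    "float": ptypes.is_float_dtype,
}


def schema_problems(df: pd.DataFrame, schema: dict) -> list[str]:
    """Return human-readable schema violations (an empty list means none)."""
    problems = []
    for column in schema["columns"]:
        name, expected = column["name"], column["type"]
        if name not in df.columns:
            problems.append(f"missing column: {name}")
        elif not _TYPE_CHECKS[expected](df[name]):
            problems.append(f"column {name} has dtype {df[name].dtype}, expected {expected}")
    # Matching results between runs only works if the id is unique per run.
    for key in schema["unique_id"]:
        if key in df.columns and df[key].duplicated().any():
            problems.append(f"duplicate values in unique id column: {key}")
    return problems
```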

Prepare the results for dbnl

The run_model_original and run_model_new functions each return a pandas DataFrame with the sentiment columns, which is then saved to disk.

Latency values are computed externally, but could also be returned from the model itself, along with data such as the number of tokens used by an LLM tool.
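The two model functions are not shown in this tutorial. The sketch below, assuming a hypothetical classify callable that maps tweet text to positive, neutral, and negative scores, shows one way such a function could assemble the results DataFrame while measuring latency externally with time.perf_counter. The toy keyword classifier is a stand-in for a real model, not the rule-based classifier used here.

```python
import time
from typing import Callable, Dict

import pandas as pd

# A classifier maps tweet text to scores keyed by "positive", "neutral", "negative".
Classifier = Callable[[str], Dict[str, float]]


def run_model(tweets: pd.DataFrame, classify: Classifier) -> pd.DataFrame:
    """Score every tweet and record per-tweet latency measured outside the model."""
    rows = []
    for tweet_id, text in zip(tweets["tweet_id"], tweets["tweet_content"]):
        start = time.perf_counter()
        scores = classify(text)
        latency = time.perf_counter() - start  # seconds, measured around the call
        rows.append(
            {
                "tweet_id": tweet_id,
                "positive_sentiment": scores["positive"],
                "neutral_sentiment": scores["neutral"],
                "negative_sentiment": scores["negative"],
                "latency": latency,
            }
        )
    return pd.DataFrame(rows)


def toy_rule_based(text: str) -> Dict[str, float]:
    """Hypothetical stand-in classifier that counts a few keywords."""
    words = text.lower().split()
    pos = sum(w in {"good", "great", "love"} for w in words)
    neg = sum(w in {"bad", "awful", "hate"} for w in words)
    if pos + neg == 0:
        return {"positive": 0.0, "neutral": 1.0, "negative": 0.0}
    return {"positive": pos / (pos + neg), "neutral": 0.0, "negative": neg / (pos + neg)}
```

For example, `run_model(tweets, toy_rule_based).to_csv("results_original.csv", index=False)` would save the toy results to disk; the file name is illustrative.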

These two DataFrames record the behavior of each sentiment classifier across all of the columns. This data provides the information needed to execute tests that detect similar or aberrant behavior between the two models.

Push the runs to dbnl

After completing the Getting Started instructions, follow the steps below to push data to dbnl:

Authenticate with dbnl (an API token is available at https://app.dbnl.com/tokens).

Create a project in dbnl.

Create a run configuration in dbnl.

Create a run in that project using that run config and a dataframe from earlier.
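The exact SDK calls are documented in the dbnl SDK reference and are not reproduced here. As a sketch of the ordering only, the function below runs the four steps against a hypothetical client object whose method names are placeholders, not dbnl SDK functions. It also reads the API token from an environment variable (the name DBNL_API_TOKEN is an assumption) rather than hard-coding it in the script.

```python
import os
from typing import Any, Protocol

import pandas as pd


class RunClient(Protocol):
    """Hypothetical interface; these method names are placeholders, not the dbnl SDK."""

    def authenticate(self, api_token: str) -> None: ...
    def create_project(self, name: str) -> str: ...
    def create_run_config(self, project_id: str, schema: dict[str, Any]) -> str: ...
    def create_run(self, project_id: str, run_config_id: str, data: pd.DataFrame) -> str: ...


def push_run(
    client: RunClient,
    project_name: str,
    schema: dict[str, Any],
    data: pd.DataFrame,
    token_env_var: str = "DBNL_API_TOKEN",
) -> str:
    """Authenticate, create a project and a run config, then create a run; returns the run id."""
    token = os.environ.get(token_env_var)
    if not token:
        raise RuntimeError(f"set {token_env_var} to an API token from https://app.dbnl.com/tokens")
    client.authenticate(token)  # step 1: authenticate
    project_id = client.create_project(project_name)  # step 2: project
    run_config_id = client.create_run_config(project_id, schema)  # step 3: run config
    return client.create_run(project_id, run_config_id, data)  # step 4: run
```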

Define tests of consistent behavior

At this point, you can view and compare the runs for the two models in the dbnl web application; the output of the code above contains the appropriate URLs. You can also use the web application or the SDK to create tests of consistent behavior. This section discusses these tests and demonstrates three different types of test configurations. Each test has an assertion with a constant threshold that may seem arbitrary at first glance. Determining the proper thresholds comes with experience and with learning how much each statistic tends to change. You can read more about choosing a threshold; our product roadmap also includes features such as automatically determining thresholds based on user preferences.

Example 1: Test that the means of the distributions have not changed

This is an example test on the positive_sentiment column. The test asserts that the mean of the positive_sentiment column in the new model is within 0.1 of the mean of the positive_sentiment column in the original model.

The value 0.1 represents a rather large average shift in distribution over 10,000 tweets; any change at this level is certainly worth investigating.

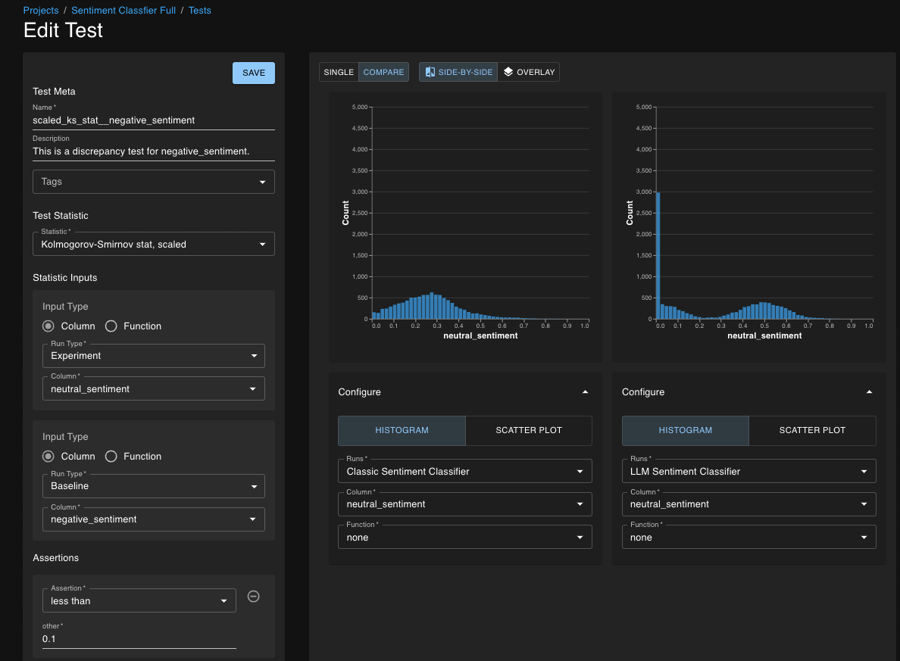
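dbnl evaluates this assertion for you. To make the arithmetic concrete, here is a minimal local sketch (not the dbnl implementation) of the same check: the absolute difference between the two column means, compared against the 0.1 threshold.

```python
import numpy as np


def mean_shift(baseline, experiment) -> float:
    """Absolute difference between the means of two samples."""
    return abs(float(np.mean(experiment)) - float(np.mean(baseline)))


def mean_within(baseline, experiment, threshold: float = 0.1) -> bool:
    """True if the experiment mean is within `threshold` of the baseline mean."""
    return mean_shift(baseline, experiment) <= threshold
```

With two results DataFrames named, hypothetically, original and new, the check would be `mean_within(original["positive_sentiment"], new["positive_sentiment"])`.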

Example 2: Test that the distributions are overall similar

This is an example test on the neutral_sentiment column. The test asserts that the distribution of the neutral_sentiment column in the new model is similar to the distribution of the neutral_sentiment column in the original model.

Here, we use the Kolmogorov-Smirnov (KS) statistic, with some scaling that we have developed internally, to measure the similarity of these distributions. Because this quantity has no physical interpretation, a threshold such as 0.1 must be learned through practice and experimentation. Please reach out to your Applied Customer Engineer if you would like guidance on this process.
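The internal scaling that dbnl applies is not described in this tutorial, so the sketch below computes only the standard, unscaled two-sample KS statistic: the largest vertical gap between the two empirical CDFs. Its values are therefore not directly comparable to the 0.1 threshold above. It uses NumPy only; the two-sided statistic reported by SciPy's scipy.stats.ks_2samp should match it.

```python
import numpy as np


def ks_statistic(a, b) -> float:
    """Unscaled two-sample Kolmogorov-Smirnov statistic: max |ECDF_a(x) - ECDF_b(x)|."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    # The ECDF difference can only change at observed values, so evaluating
    # both ECDFs at every pooled sample point is enough to find the maximum gap.
    pooled = np.concatenate([a, b])
    ecdf_a = np.searchsorted(a, pooled, side="right") / a.size
    ecdf_b = np.searchsorted(b, pooled, side="right") / b.size
    return float(np.max(np.abs(ecdf_a - ecdf_b)))
```

Identical samples give 0.0 and completely separated samples give 1.0, so larger values indicate less similar distributions.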

Example 3: Test that specific results have not deviated too severely

This is an example test on the negative_sentiment column. The test asserts that, on a tweet-by-tweet basis, the negative_sentiment column in the new model is on average within 0.1 of the negative_sentiment column in the original model.

In this situation, a single result changing by 0.1 (either increasing or decreasing negative sentiment) would be acceptable. An average change of 0.1, however, implies a fairly consistent deviation in behavior across a large number of results. Because the absolute difference is computed row by row before averaging, increases and decreases in sentiment both contribute to the average rather than canceling each other out.
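As a local illustration (not dbnl's implementation), the sketch below joins the two runs on tweet_id, takes the absolute difference row by row, and averages it. The usage example shows why the absolute value matters: one tweet's score rises by 0.2 and another's falls by 0.2, which would cancel in a signed average but does not here.

```python
import pandas as pd


def mean_matched_abs_diff(
    baseline: pd.DataFrame, experiment: pd.DataFrame, column: str, key: str = "tweet_id"
) -> float:
    """Average of |experiment - baseline| for `column`, matching rows on `key`."""
    merged = baseline[[key, column]].merge(
        experiment[[key, column]],
        on=key,
        suffixes=("_baseline", "_experiment"),
        validate="one_to_one",  # each tweet_id must appear exactly once per run
    )
    diffs = (merged[f"{column}_experiment"] - merged[f"{column}_baseline"]).abs()
    return float(diffs.mean())


# Rows arrive in a different order; matching on tweet_id pairs them correctly.
original = pd.DataFrame({"tweet_id": [1, 2, 3], "negative_sentiment": [0.1, 0.5, 0.9]})
new = pd.DataFrame({"tweet_id": [3, 1, 2], "negative_sentiment": [0.7, 0.3, 0.5]})
print(mean_matched_abs_diff(original, new, "negative_sentiment"))  # about 0.133
```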

Using the Web Application to define tests

The examples above use a test payload to define each test. You can also define tests in the UI; for example, the test in Example 2 can be created there.

Execute the tests

After defining the tests, you can execute them using the SDK. This process involves defining a baseline run, defining the tests, and submitting the new run, which automatically triggers the tests.

Defining a baseline

Set the original model's run as the baseline run for the project. It serves as the BASELINE for all tests that compare the EXPERIMENT run to the BASELINE run.

Defining the tests

The tests are defined in the test_payloads.json file. The prepare_incomplete_test_spec_payload function completes each test spec payload by adding the project ID (and tag IDs, if they exist).
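The actual prepare_incomplete_test_spec_payload function is not shown in this tutorial. The sketch below is a hypothetical re-implementation of the behavior described above, assuming test_payloads.json holds a JSON list of objects and that the completed payload uses project_id and tag_ids keys; those key names and the helper names complete_test_spec and load_test_specs are guesses, not the documented dbnl test spec format.

```python
import copy
import json
from typing import Any, Optional


def complete_test_spec(
    payload: dict[str, Any], project_id: str, tag_ids: Optional[list[str]] = None
) -> dict[str, Any]:
    """Return a copy of `payload` with the project id (and tag ids, if any) added."""
    completed = copy.deepcopy(payload)  # leave the loaded template untouched
    completed["project_id"] = project_id
    if tag_ids:
        completed["tag_ids"] = list(tag_ids)
    return completed


def load_test_specs(
    path: str, project_id: str, tag_ids: Optional[list[str]] = None
) -> list[dict[str, Any]]:
    """Load a JSON list of incomplete test specs and complete each one."""
    with open(path, encoding="utf-8") as f:
        payloads = json.load(f)
    return [complete_test_spec(p, project_id, tag_ids) for p in payloads]
```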

Submitting the v2 run, automatically triggering a test

The send_run_data_to_dbnl function sends the run data to dbnl, which automatically triggers the tests defined in the test_payloads.json file. The submitted run is treated as the EXPERIMENT run.

Reviewing a completed test session

The first completed test session, comparing the original sentiment classifier against the high F1-score LLM-based sentiment classifier, shows some passing assertions and some failed assertions.

Digging into these failed assertions, you can see a significant difference in behavior both at the result-by-result level and at the level of the full distribution. For the matched absolute difference, you see that the distribution of neutral sentiment has shifted well beyond the threshold.

When you study the KS test for the positive sentiment, you see a rather massive change in distribution; the original unimodal distribution on the right has shifted to a bimodal distribution on the left.

Clicking the Compare button opens a page for studying how two runs relate across their columns. In particular, you can use the scatter plot functionality to study how sentiment varies with the tweets themselves.

You and your teammates can have a lively discussion about whether this is in fact desired behavior. Either way, this test session alerted you to the change in behavior and provided follow-up analysis on your data to help you understand how the change manifested and whether it is a problem.
