Distributional Testing

Why we need to test distributions

AI testing requires a different approach from traditional software testing. The goal is to let teams define a steady-state baseline for any AI application, confirm through testing that the application stays at that baseline, and, where it deviates, work out what needs to evolve or be fixed to reach steady state again. This process needs to be discoverable, logged, organized, consistent, integrated, and scalable.

AI testing needs to be fundamentally reimagined to include statistical tests on distributions of quantities to detect meaningful shifts that warrant deeper investigation.

Distributions > Summary Statistics: Instead of looking only at summary statistics (e.g. mean, median, P90), we need to analyze how the full distributions of metrics change over time. This accounts for the inherent variability in AI systems while maintaining statistical rigor.

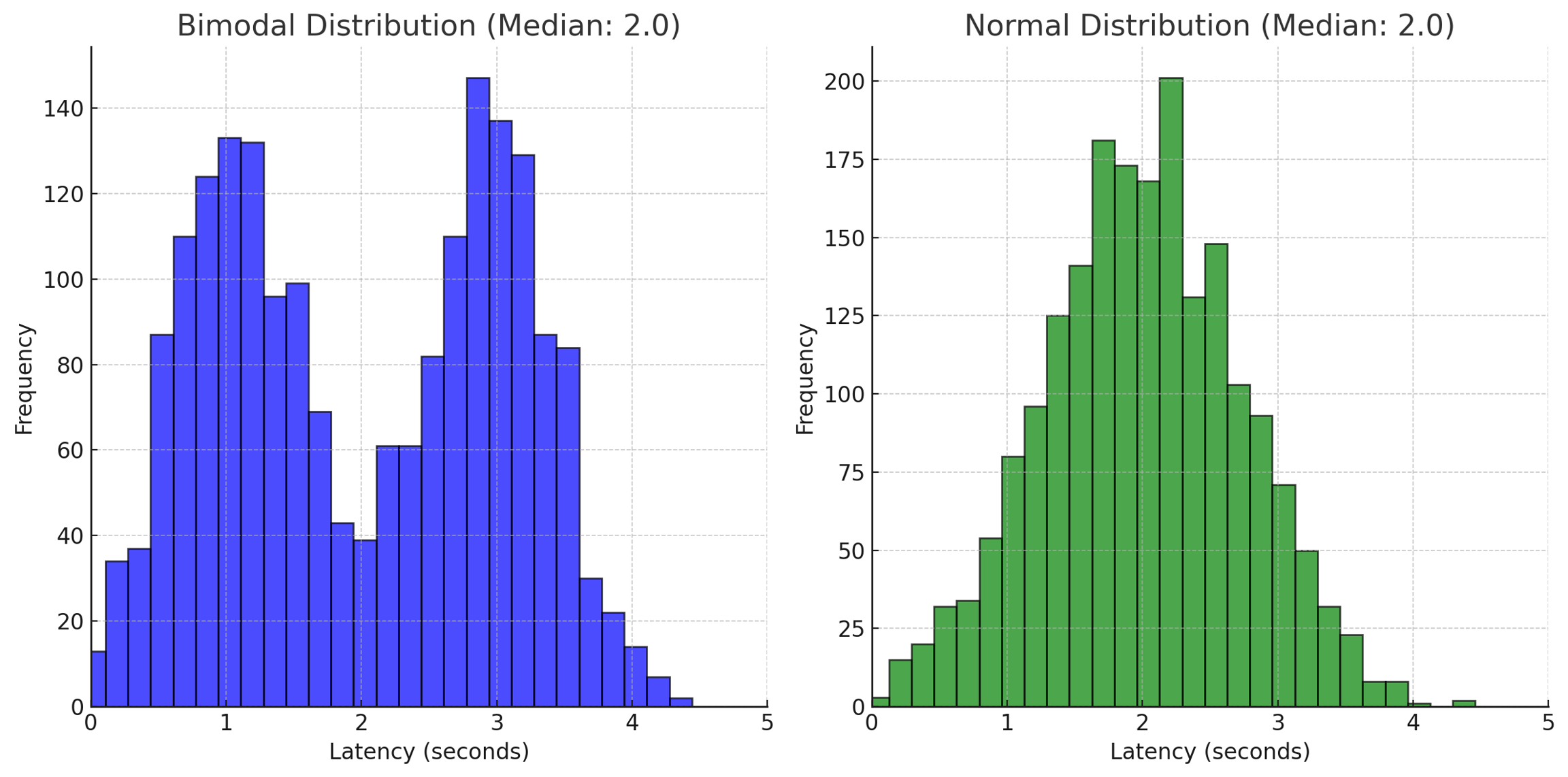

Why is this useful? Imagine you have an application that contains an LLM, and you want to make sure that the latency of the LLM remains low and consistent across different types of queries. With a traditional monitoring tool, you can easily monitor P50 and P90 values for latency. P50 is the latency value below which 50% of requests fall, so it gives you a sense of the typical (median) response time that users can expect from the system. However, a normal distribution and a bimodal distribution can have the same P50 even though their shapes are meaningfully different. This can hide significant (usage-based or system-based) changes in the application that affect the distribution of latency. If you don’t examine the distribution, these changes go unseen.
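To make this concrete, here is a minimal sketch using only the Python standard library. It generates two synthetic latency samples (one normal, one bimodal) with roughly the same median, then compares them with a two-sample Kolmogorov-Smirnov statistic. The sample parameters, the function names `ks_statistic` and `ks_critical_value`, and the 0.05 significance level are illustrative assumptions, not part of any particular testing tool.

```python
import bisect
import math
import random
import statistics


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap
    between the empirical CDFs of the two samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    max_gap = 0.0
    # Both empirical CDFs are step functions, so the largest gap
    # is always reached at one of the observed data points.
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap


def ks_critical_value(n, m, alpha=0.05):
    """Large-sample approximation of the rejection threshold for the
    two-sample KS statistic at significance level alpha."""
    return math.sqrt(-0.5 * math.log(alpha / 2)) * math.sqrt((n + m) / (n * m))


# Synthetic latencies in seconds (assumed values, for illustration only).
rng = random.Random(0)
n = 10_000
baseline = [rng.gauss(1.0, 0.2) for _ in range(n)]
# Half the requests get faster, half get slower: a bimodal mixture.
bimodal = [
    rng.gauss(0.7, 0.15) if i % 2 == 0 else rng.gauss(1.3, 0.15)
    for i in range(n)
]

print("median (baseline):", round(statistics.median(baseline), 3))
print("median (bimodal): ", round(statistics.median(bimodal), 3))

d = ks_statistic(baseline, bimodal)
threshold = ks_critical_value(len(baseline), len(bimodal))
print("KS statistic:", round(d, 3), "threshold:", round(threshold, 3))
print("distribution shift detected:", d > threshold)
```

The two medians come out close to each other, so a P50 dashboard would show little change, while the KS statistic sits well above the threshold. In practice you would likely use a library implementation of the test; the hand-rolled version here just keeps the sketch dependency-free.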

Consider a scenario where LLM latency starts out normally distributed, but changes in a third-party data API that your app uses to inform the LLM's response make the latency distribution bimodal, with the same median and P90 values as before. What could cause this? Here’s a practical example. The engineering team behind the data API makes an optimization that returns faster responses for a specific subset of high-value queries and routes the remaining API calls to a different server with slower response times.

The effect on your application is that roughly half of your users now see lower latency while a large number of other users see “too much” latency, so the performance experience is inconsistent across users. Solutions to this particular example include modifying the prompt, switching to a different data provider, formatting the information you send to the API differently, or a number of other engineering changes. If you are not concerned about the shift and can accept the new steady state of the application, you can also choose to make no changes and declare the new latency distribution to be the acceptable baseline.
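One way to see which users improved and which got worse is to compare percentiles across the whole range rather than P50 alone. The sketch below is a hedged illustration on synthetic data: the helper names `decile_shifts` and `out_of_tolerance`, the sample parameters, and the 0.08 second tolerance are all assumptions chosen for the example. Unlike the scenario described above, P90 does move in this synthetic data.

```python
import random
import statistics


def decile_shifts(baseline, current):
    """Return {percentile: current - baseline} for P10 through P90."""
    base_q = statistics.quantiles(baseline, n=10)  # 9 cut points
    curr_q = statistics.quantiles(current, n=10)
    return {
        10 * (i + 1): curr - base
        for i, (base, curr) in enumerate(zip(base_q, curr_q))
    }


def out_of_tolerance(shifts, tolerance):
    """Keep only the percentiles that moved by more than the tolerance."""
    return {p: delta for p, delta in shifts.items() if abs(delta) > tolerance}


# Synthetic latencies in seconds (assumed values, for illustration only).
rng = random.Random(1)
n = 20_000
baseline = [rng.gauss(1.0, 0.2) for _ in range(n)]
# After the upstream change: half the requests are faster, half slower.
current = [
    rng.gauss(0.7, 0.15) if i % 2 == 0 else rng.gauss(1.3, 0.15)
    for i in range(n)
]

shifts = decile_shifts(baseline, current)
flagged = out_of_tolerance(shifts, tolerance=0.08)

for percentile, delta in shifts.items():
    status = "SHIFTED" if percentile in flagged else "ok"
    print(f"P{percentile}: {delta:+.3f}s  {status}")

# If the team accepts the new steady state, the current sample can simply
# replace the baseline for future comparisons:
# baseline = current
```

In this sketch the lower percentiles shift down (faster requests) and the upper percentiles shift up (slower requests), while P50 stays within tolerance, which is the pattern a median-only check would miss.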
