Notifications

Overview

Notifications automatically alert users to critical test events (e.g., failures or completions) through third-party tools such as PagerDuty.

With Notifications you can:

Add critical test failure alerting to your organization’s on-call process

Create custom notifications for specific feature tests

Stay informed when a new test session has started

Quick Links

Setting up a Notification Channel in your Namespace

Supported Third-Party Notification Channels

Setting up a Notification in your Project

Setting up a Notification Channel in your Namespace

A Notification Channel describes who will be notified and how.

Before setting up a Notification in your project, you must have a Notification Channel set up in your Namespace. Notification Channels on a Namespace can be used by Notifications in all Projects belonging to the Namespace.

When adding a Notification Channel, you can select the third-party integration through which you'd like to be notified.

In your desired Namespace, choose Notification Channels in the menu sidebar.

Note: You must be a Namespace admin to do this.

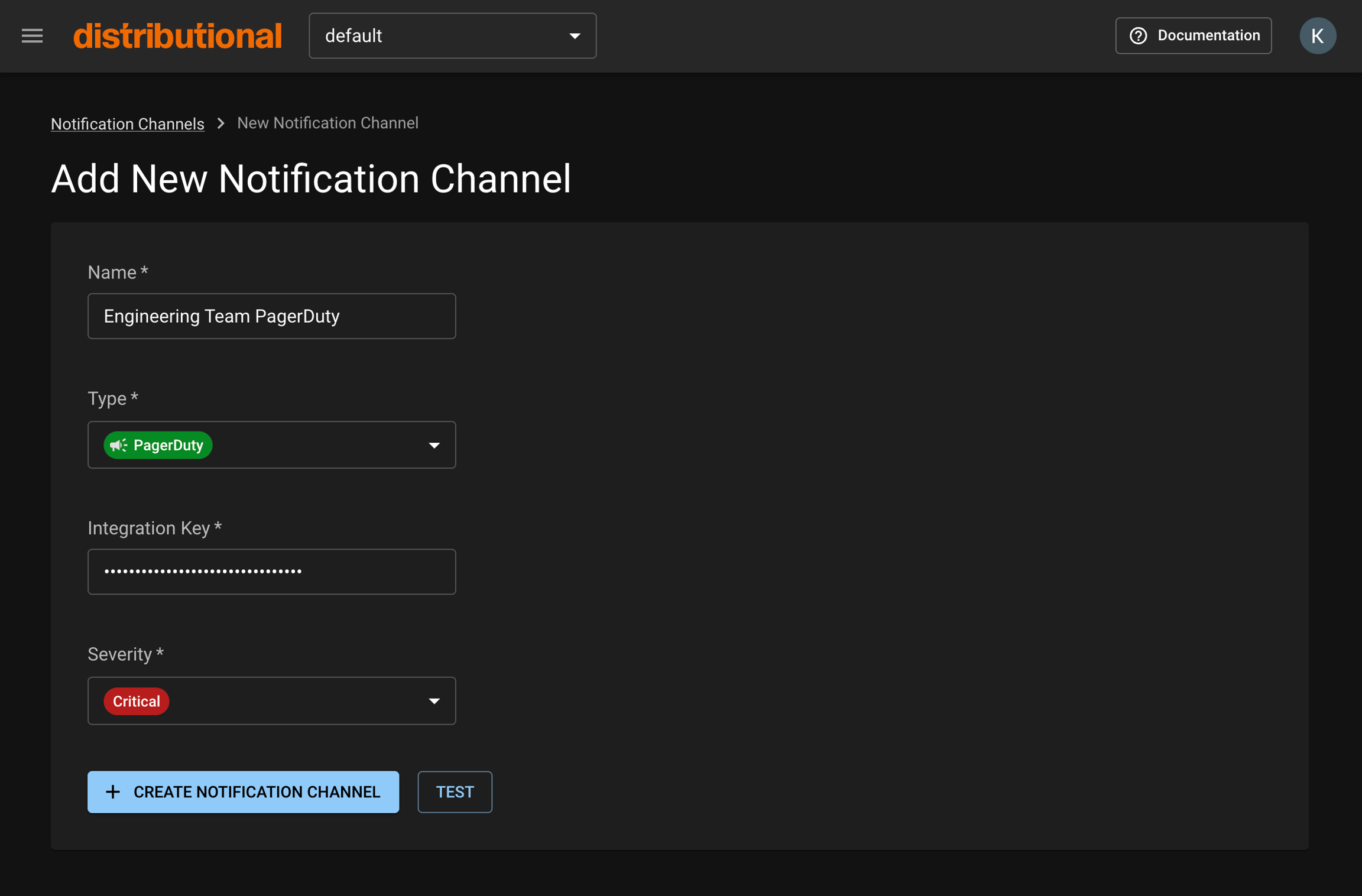

Click the New Notification Channel button to navigate to the creation form.

Complete the appropriate fields.

Optional: If you’d like to test that your Notification Channel is set up correctly, click the Test button. If it is correctly set up, you should receive a notification through the integration you’ve selected.

Click the Create Notification Channel button. Your channel will now be available when setting up your Notification.

Supported Third-Party Notification Channels

Note: More channels are planned on the product roadmap.

Setting up a Notification in your Project

Navigate to the Project page of your desired project.

Under Test Sessions, click the Configure Notifications button to navigate to the Project’s Notifications page.

Click the New Notification button to open the creation form.

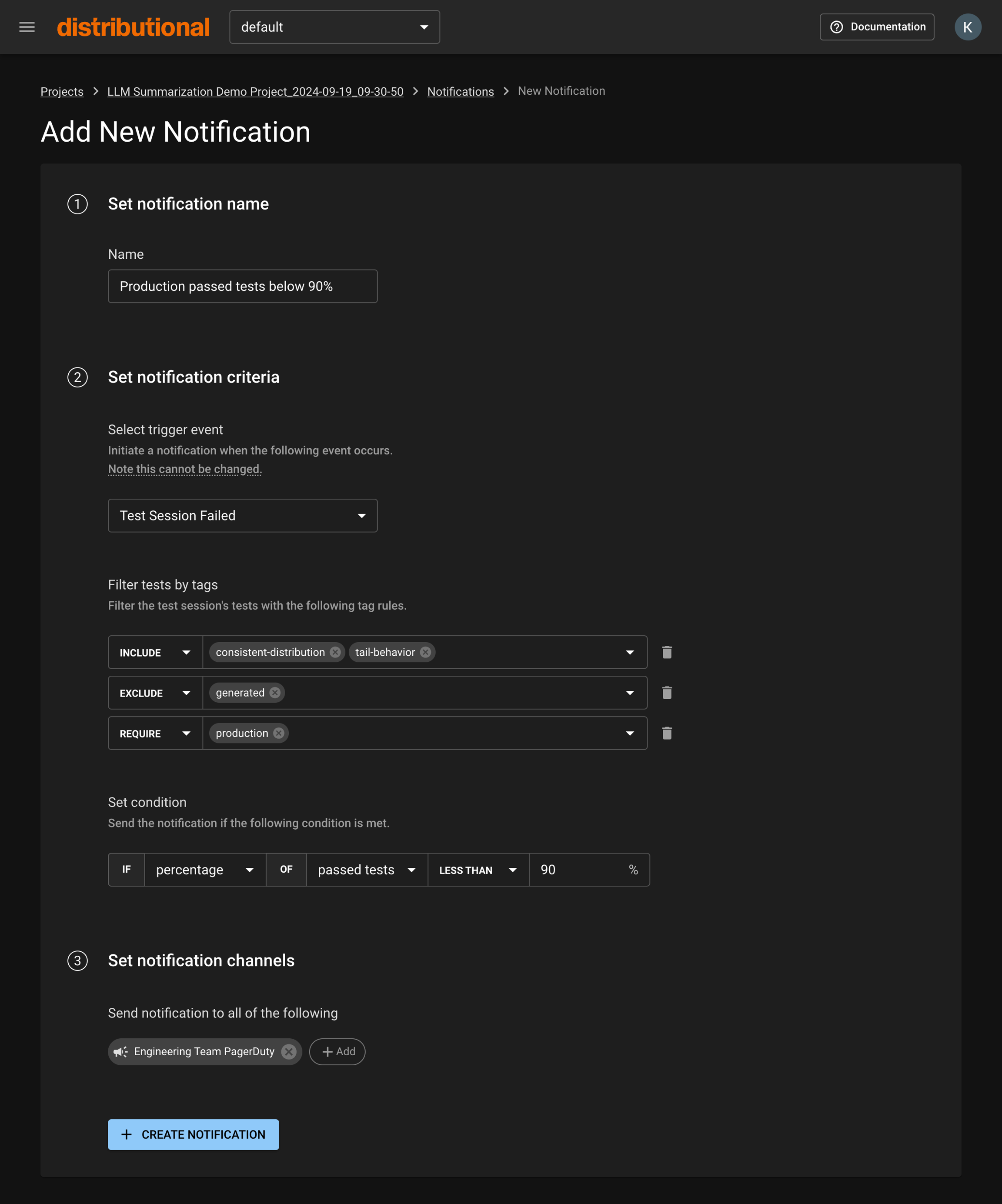

Set your Notification’s name, criteria, and Notification Channels.

Click the Create Notification button. You will now be notified whenever your specified criteria are met.

Notification Criteria

Trigger Event

The trigger event describes when your Notification is initiated. Trigger events are based on Test Session outcomes.

Tags Filtering

Filtering by Tags lets you define which tests in the Test Session you want to be notified about.

There are three types of Tags filters you can provide:

Include: The test must have ANY of the selected Tags.

Exclude: The test must not have ANY of the selected Tags.

Require: The test must have ALL of the selected Tags.

When multiple types are provided, all filters are combined using ‘AND’ logic, meaning all conditions must be met simultaneously.

Note: This field applies only to the ‘Test Session Failed’ trigger event.
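
To make the combined filter logic concrete, here is a minimal Python sketch of how the three Tags filters could be evaluated against a single test. It is an illustration only, not the product's implementation: the `TagFilters` class, the `matches` function, and the example tag names are all hypothetical, and the sketch assumes that a filter type left empty places no constraint on the test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagFilters:
    """Hypothetical container for the three Tags filter types."""
    include: frozenset = frozenset()  # test must have ANY of these
    exclude: frozenset = frozenset()  # test must not have ANY of these
    require: frozenset = frozenset()  # test must have ALL of these


def matches(test_tags, filters):
    """Return True if a test's tags satisfy every provided filter (AND logic)."""
    tags = set(test_tags)

    # Include: at least one selected tag must be present.
    # Assumption: an empty Include filter is treated as "not provided".
    if filters.include and not (tags & filters.include):
        return False

    # Exclude: none of the selected tags may be present.
    if tags & filters.exclude:
        return False

    # Require: every selected tag must be present.
    if not filters.require <= tags:
        return False

    return True


# Example filters using made-up tag names.
filters = TagFilters(
    include=frozenset({"checkout", "login"}),
    exclude=frozenset({"flaky"}),
    require=frozenset({"critical"}),
)
```

With these example filters, a test tagged `checkout` and `critical` passes all three conditions, while adding the `flaky` tag to that same test causes it to be filtered out by the Exclude condition.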

Condition

The condition describes the threshold at which you want to be notified. If the condition is met, your Notification will be sent.

Note: This field applies only to the ‘Test Session Failed’ trigger event.
