Test Drawer Through Shortcuts

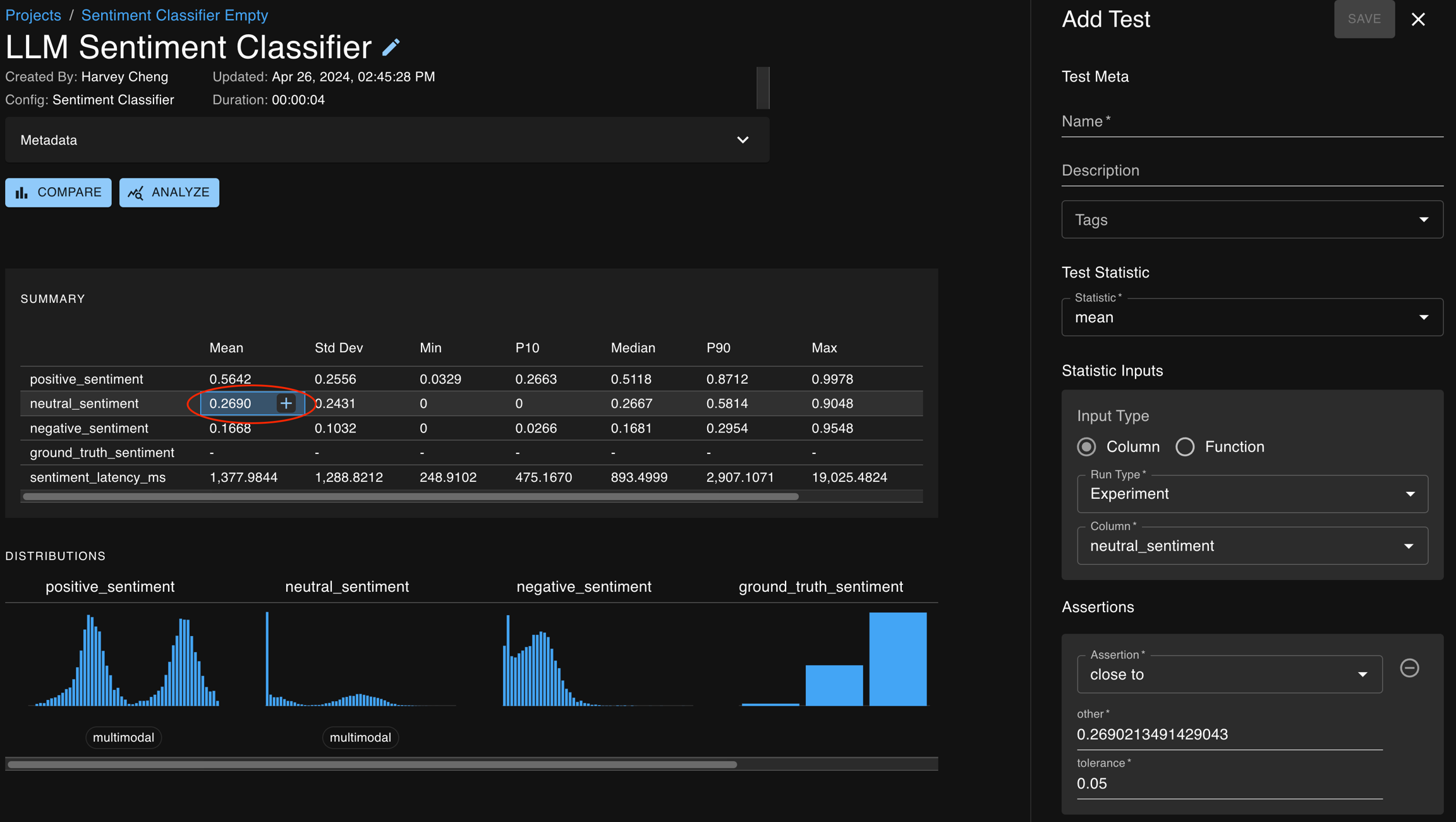

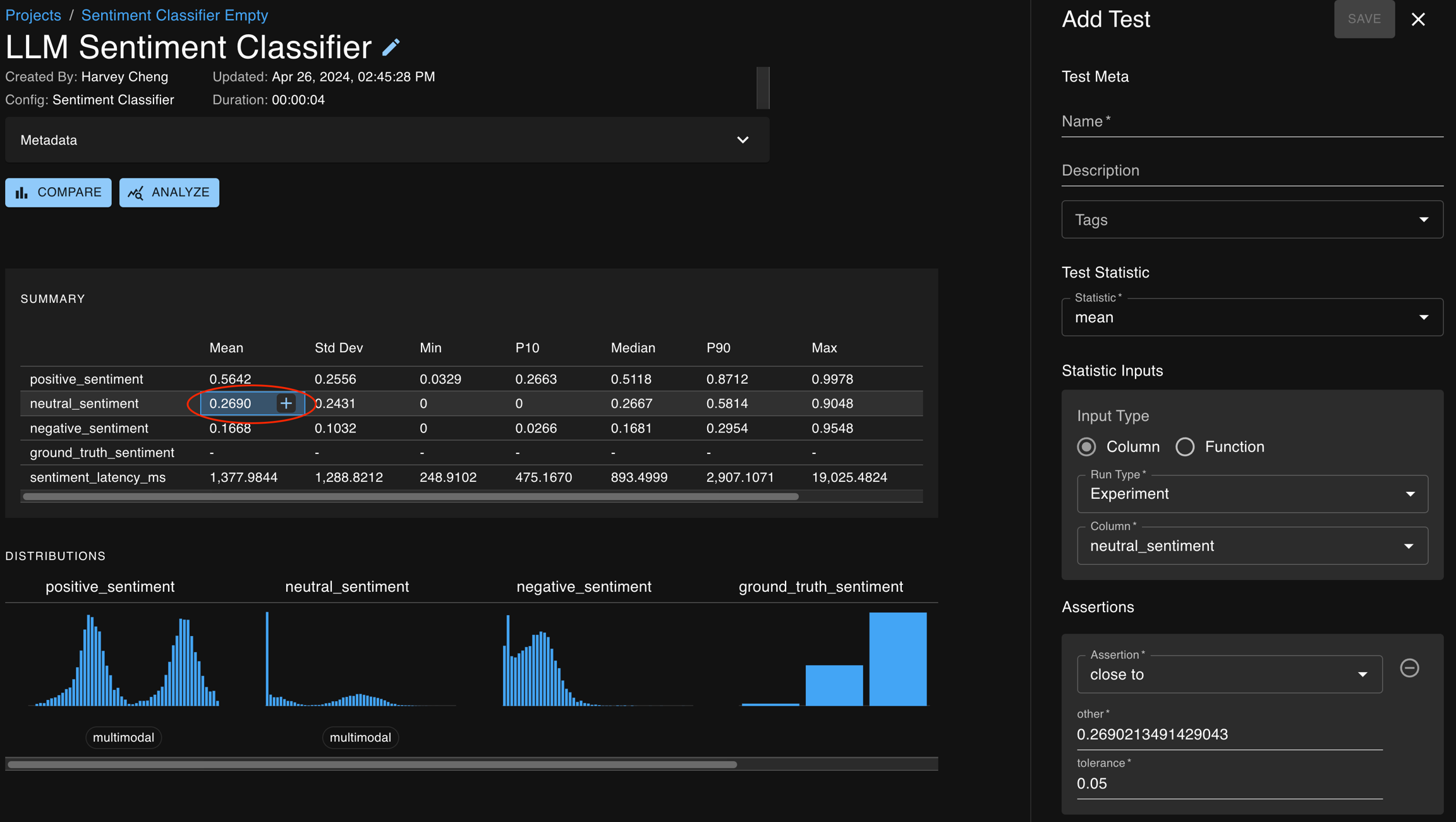

Example of the Summary Statistics Table

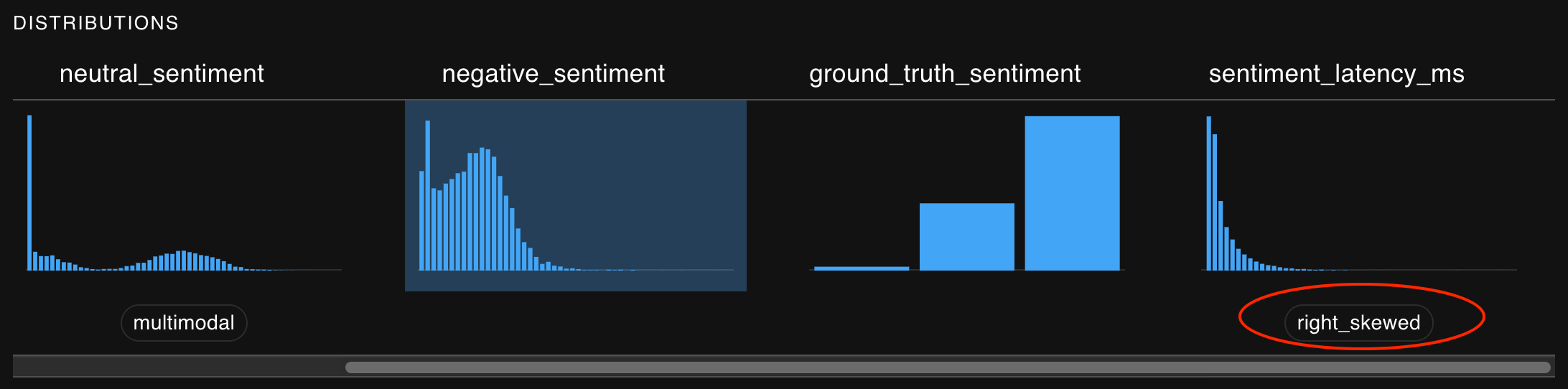

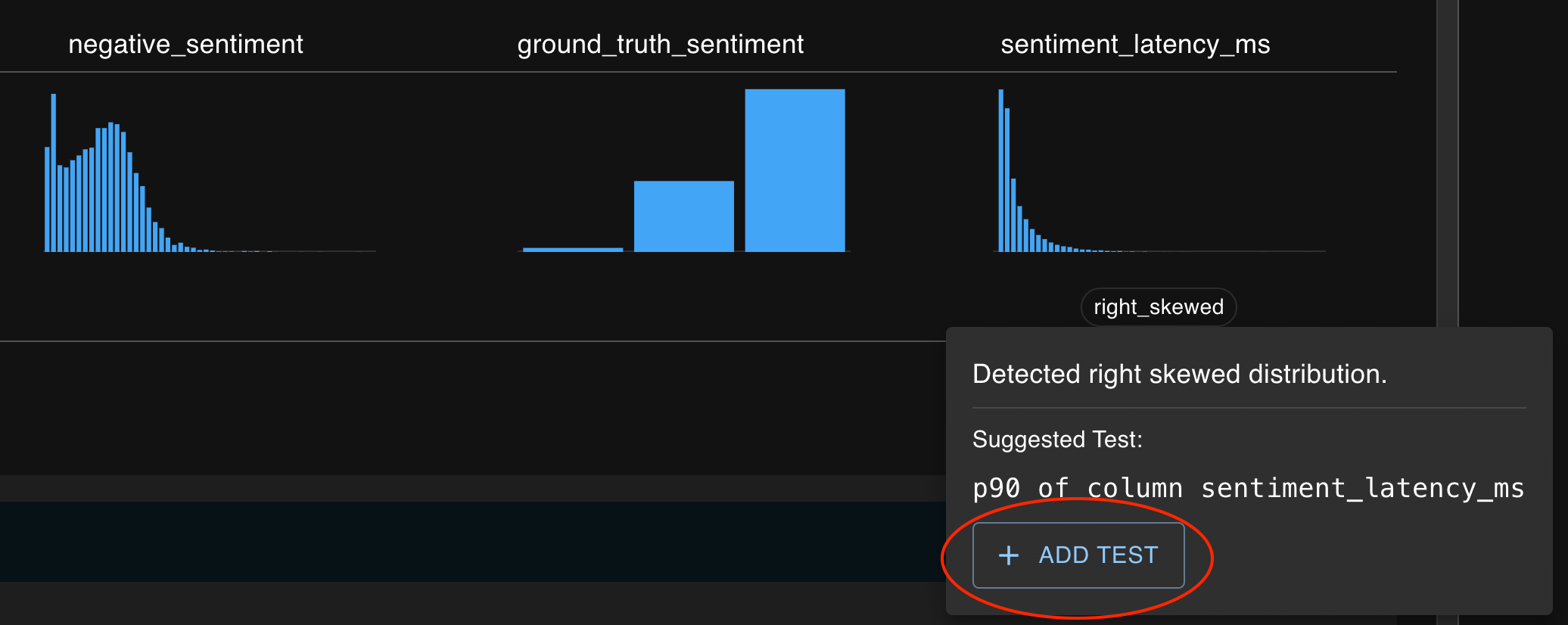

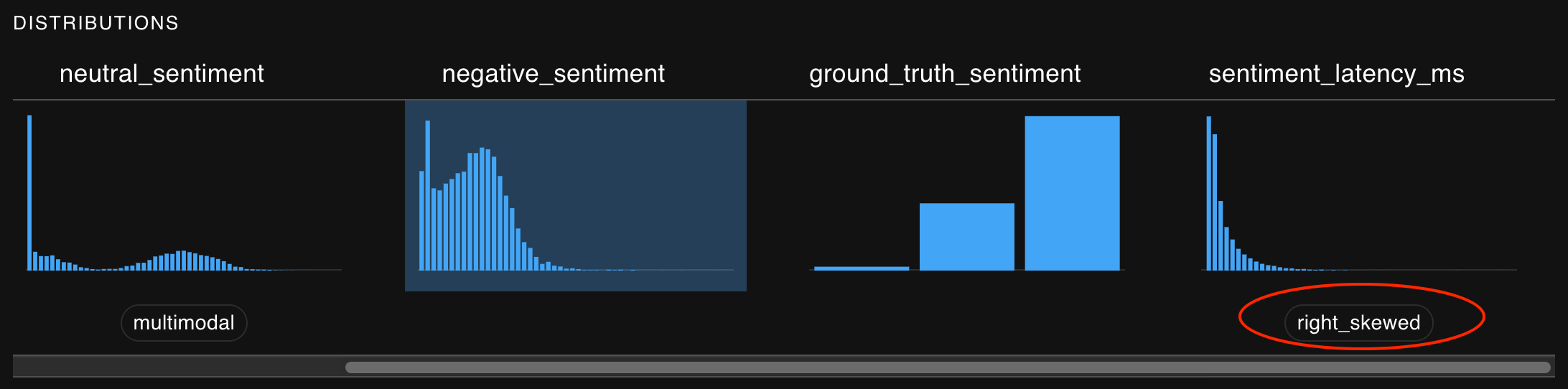

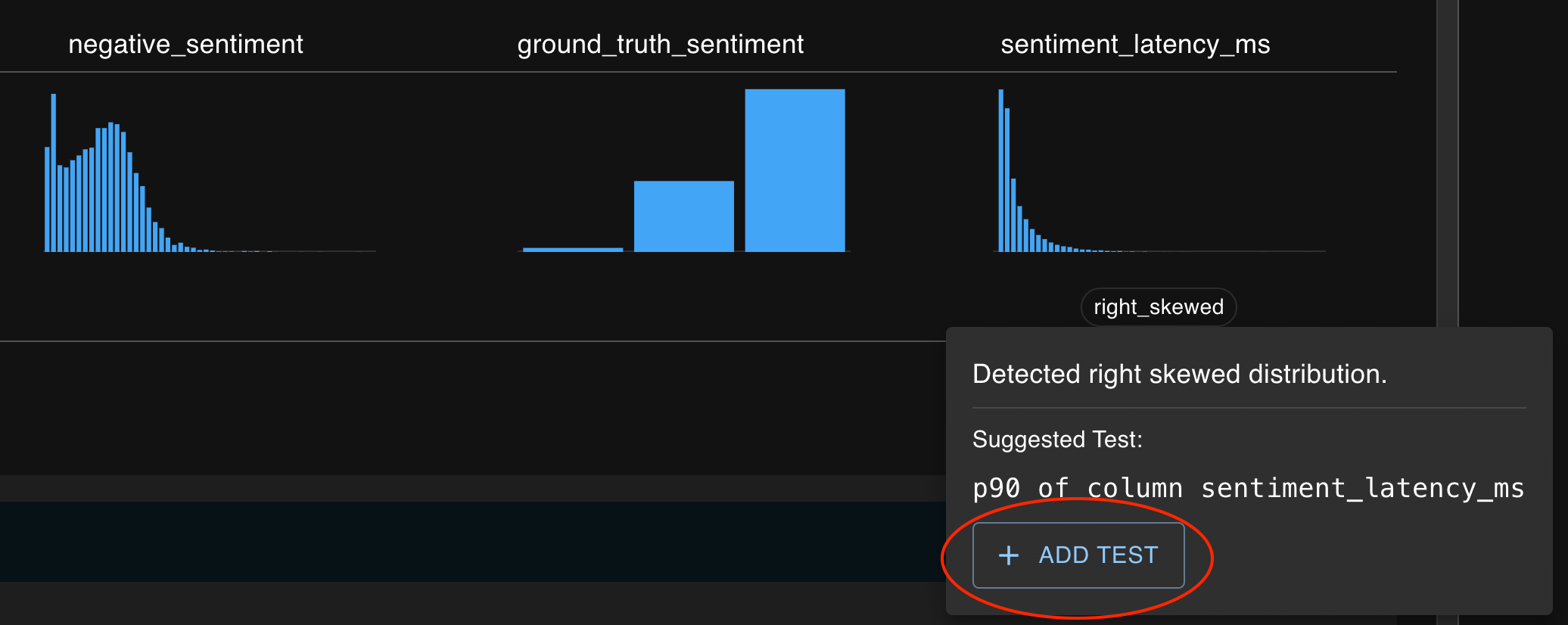

Example of the Mini Charts

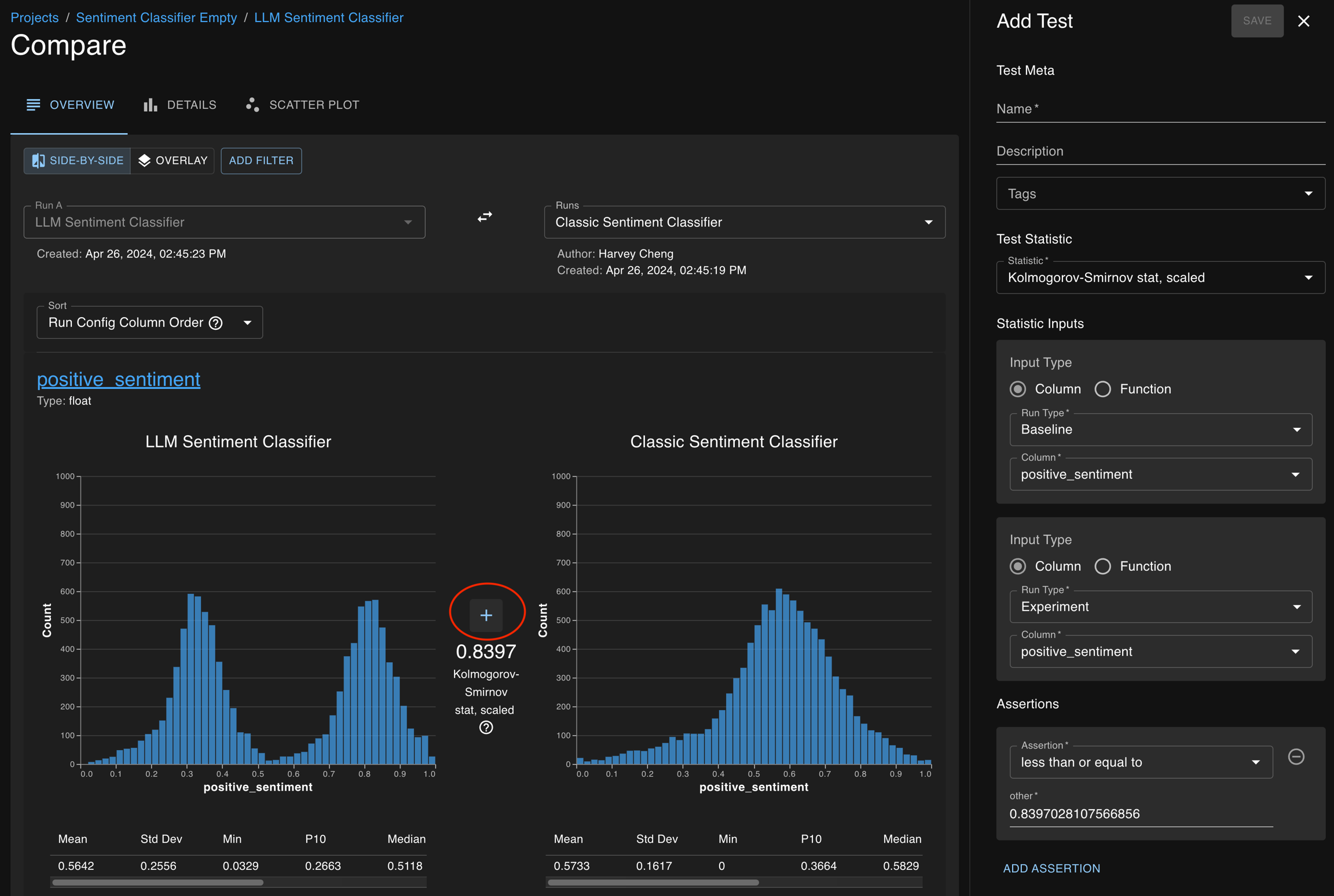

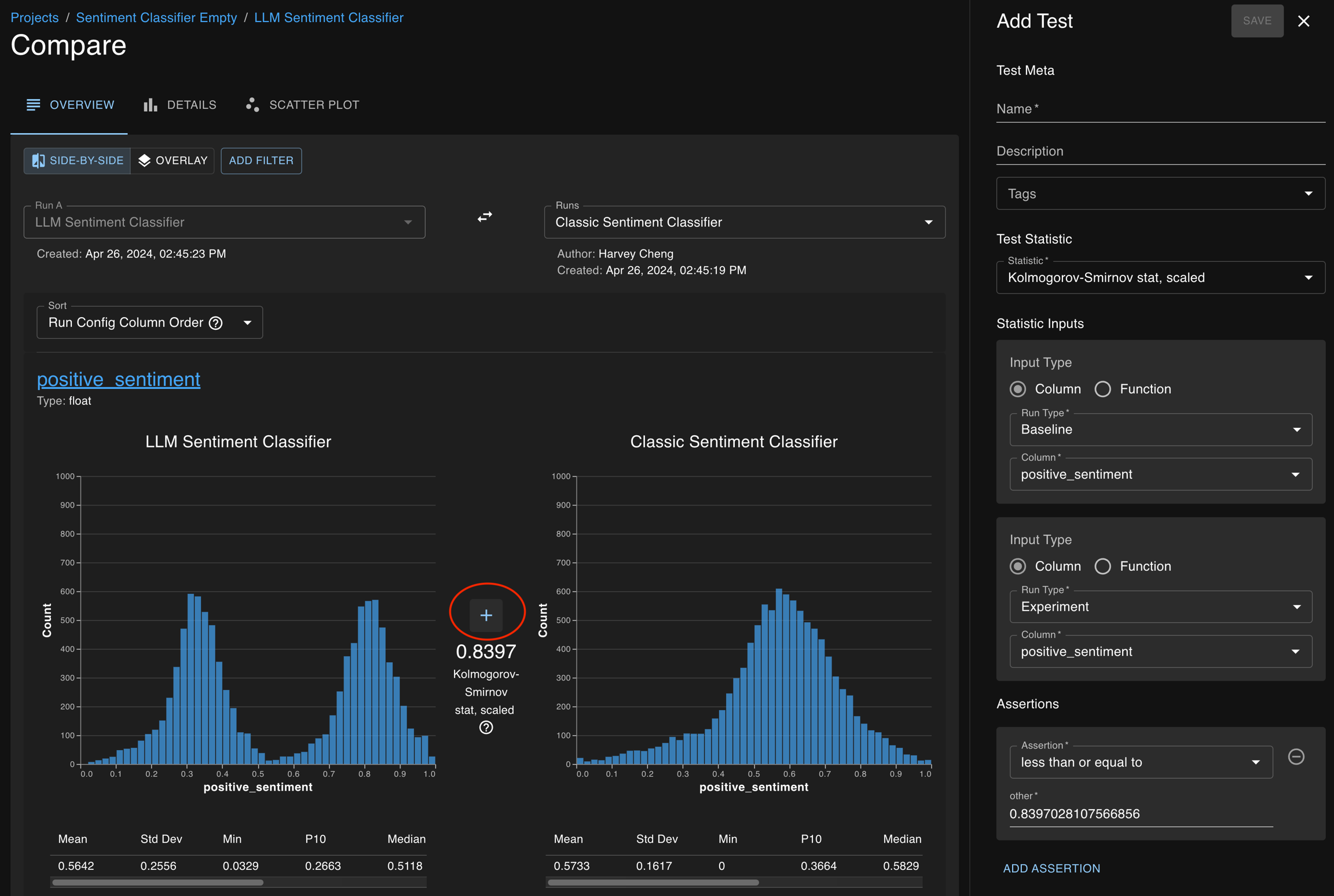

Example on the Compare Page
