Reviewing and recalibrating automated Production tests

Directing dbnl to execute the tests you want

A key part of the dbnl offering is the creation of automated Production tests. After their creation, each Test Session offers you the opportunity to Recalibrate those tests to match your expectations. For GenAI users, we think of this as the opportunity to “codify your vibe checks” and make sure future tests pass or fail as you see fit.

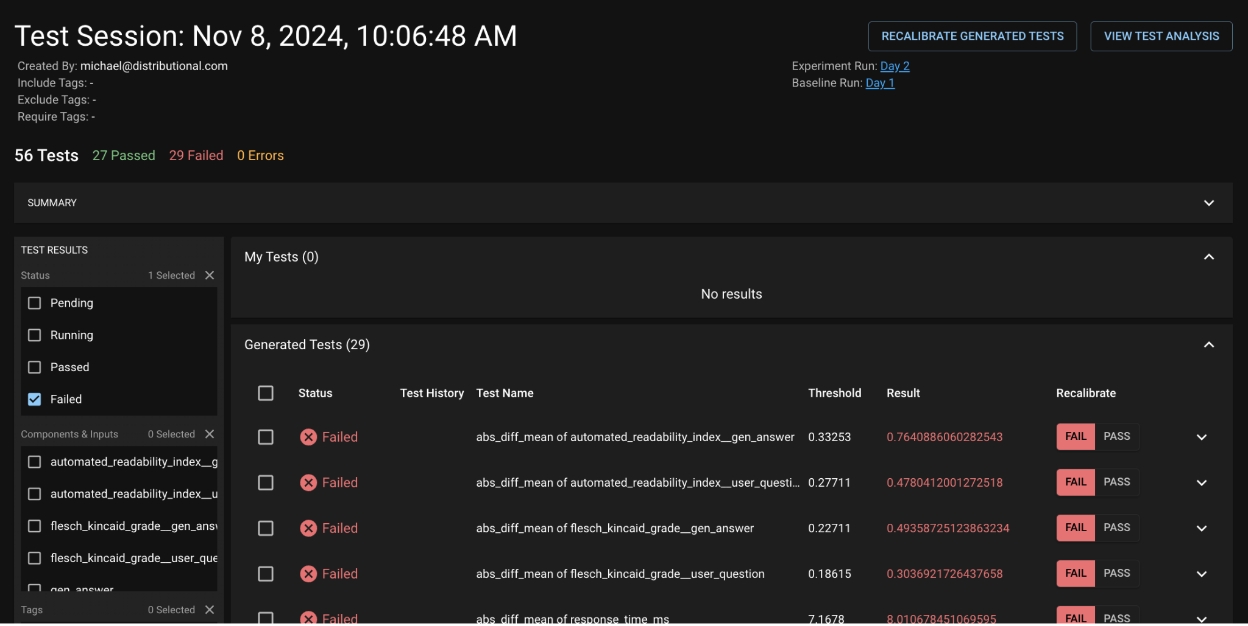

The previous section showed a brief snapshot of a Test Session that summarizes how your app has been performing. The UI also provides more advanced capabilities for examining the automated Production tests in detail. Below is a sample Test Session with a suite of dbnl-generated tests. The View Test Analysis button lets you explore any subset of these tests. In this image, we have selected only the failed tests to determine whether any of them reflect a change concerning enough to warrant a failure.

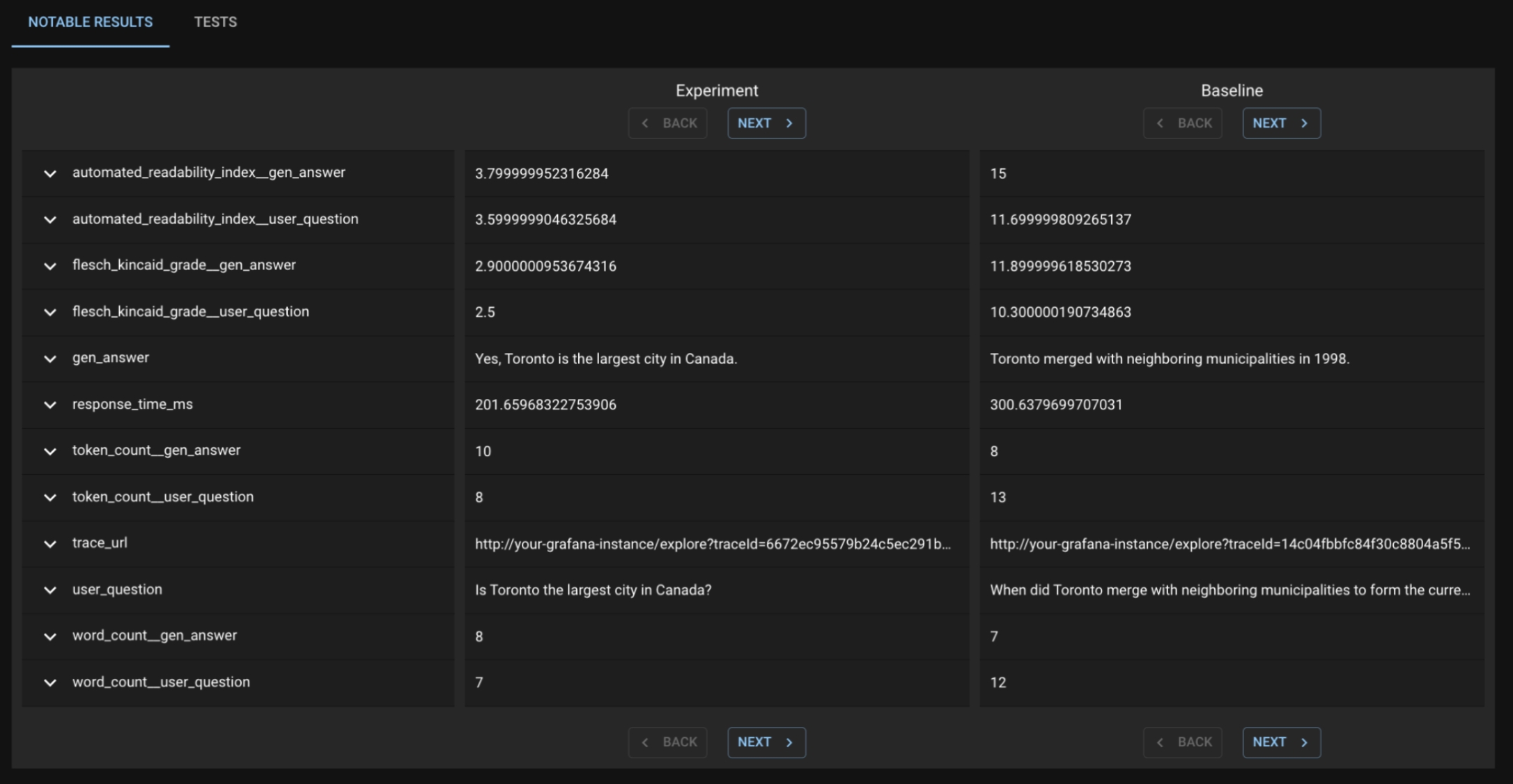

On the next page, the Notable Results tab shows a subset of app usages that dbnl identifies as differing most between the Baseline and Experiment runs. Leafing through these Question/Answer pairs, we see nothing alarming, just the ordinary randomness of LLM outputs. So, back on the original page, we choose to Recalibrate Generated Tests to pass, and we will not be alerted about this behavior in the future.
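To make the idea of "recalibrating a test to pass" concrete, here is a minimal, hypothetical sketch. It assumes a test that compares a single metric between the Baseline and Experiment runs and passes when the shift stays within a tolerance. The `DriftTest` class, its fields, the metric name, and the 10% margin are all illustrative assumptions; this is not dbnl's API or its actual recalibration algorithm.

```python
from dataclasses import dataclass


@dataclass
class DriftTest:
    """Hypothetical test (not dbnl's API): passes when a metric's
    Baseline-to-Experiment shift stays within a tolerance."""
    name: str
    tolerance: float

    def passes(self, baseline: float, experiment: float) -> bool:
        # The test passes if the absolute change in the metric is within tolerance.
        return abs(experiment - baseline) <= self.tolerance

    def recalibrate_to_pass(self, baseline: float, experiment: float, margin: float = 0.1) -> None:
        # Widen the tolerance so the observed change passes, plus a relative
        # margin (assumed 10%) so similar run-to-run noise also passes next time.
        # The tolerance is never shrunk by this operation.
        observed = abs(experiment - baseline)
        self.tolerance = max(self.tolerance, observed * (1 + margin))


# Hypothetical metric: average answer length in tokens.
test = DriftTest(name="mean_answer_length", tolerance=5.0)
print(test.passes(baseline=120.0, experiment=128.0))  # False: shift of 8.0 exceeds 5.0
test.recalibrate_to_pass(baseline=120.0, experiment=128.0)
print(test.passes(baseline=120.0, experiment=128.0))  # True
```

The key design point is that recalibration encodes your judgment ("this much variation is acceptable") into the test itself, rather than silencing the test entirely.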

We recommend that you inspect the first 4-7 Test Sessions for every new Project. This helps ensure that the tests account for the nondeterministic nature of your app. After those initial Recalibrations, you can configure notifications to trigger only when too many tests fail.
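The "notify only when too many tests fail" rule can be sketched as a simple threshold check over a Test Session's results. This is a hypothetical illustration of the logic, not dbnl's notification API (notifications are configured in the dbnl UI). The function name, the `max_failures` and `max_failure_rate` parameters, and the test names below are all assumptions.

```python
from typing import Mapping, Optional


def should_notify(
    results: Mapping[str, bool],
    max_failures: int = 0,
    max_failure_rate: Optional[float] = None,
) -> bool:
    """Hypothetical rule: return True if a Test Session has 'too many' failed tests.

    results maps test name -> passed (True) or failed (False).
    If max_failure_rate is given, it takes precedence and the rule fires when
    the fraction of failed tests exceeds it; otherwise the rule fires when the
    failure count exceeds max_failures.
    """
    if not results:
        return False  # no tests ran, so there is nothing to alert on
    failures = sum(1 for passed in results.values() if not passed)
    if max_failure_rate is not None:
        return failures / len(results) > max_failure_rate
    return failures > max_failures


# Hypothetical session results: one of four tests failed.
session = {"answer_length_drift": True, "sentiment_shift": False, "toxicity": True, "latency": True}
print(should_notify(session, max_failures=2))        # False: 1 failure does not exceed 2
print(should_notify(session, max_failure_rate=0.2))  # True: 25% of tests failed
```

A count threshold suits a stable suite of tests, while a rate threshold scales better if the number of generated tests varies between sessions.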
