Creating Tests

As you become more familiar with the behavior of your application, you may want to build on the default App Similarity Index test with tests that you define yourself. Let's walk through that process.

Designing a Test

The first step in coming up with a test is determining what behavior you're interested in. As described in the section on Runs, each Run of your application reports its behavior via its results, which are organized into columns (and scalars). Once you've identified the column or scalar you'd like to test, you need to decide which statistic to apply to it and what assertion to make on that statistic.
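To make these ingredients concrete, here is a minimal Python sketch of a test as a column, a statistic, an assertion, and a threshold. The `TestDefinition` class, its fields, and the `latency_ms` column are hypothetical illustrations, not the DBNL SDK's API; the sketch only models the idea in plain Python.

```python
import operator
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, Sequence


# Hypothetical model of a test for illustration only; not the DBNL SDK's API.
@dataclass
class TestDefinition:
    column: str                                    # which result column to test
    statistic: Callable[[Sequence[float]], float]  # reduces the column to a single number
    assertion: Callable[[float, float], bool]      # compares that number to the threshold
    threshold: float

    def evaluate(self, results: Dict[str, Sequence[float]]) -> bool:
        """Apply the statistic to the chosen column, then check the assertion."""
        value = self.statistic(results[self.column])
        return self.assertion(value, self.threshold)


# Example: "mean of latency_ms is less than 500" (latency_ms is a made-up column)
spec = TestDefinition(column="latency_ms", statistic=mean, assertion=operator.lt, threshold=500.0)
run_results = {"latency_ms": [120.0, 340.0, 410.0]}
print(spec.evaluate(run_results))  # True: the mean is 290.0
```

Swapping `statistic` for `max` or `assertion` for `operator.le` gives a different test on the same column without changing anything else.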

This might seem like a lot, but DBNL has your back! While you can define tests manually, DBNL offers several ways to help you identify which columns might interest you and to quickly define tests on them.

Context-Driven Test Creation

As you browse the DBNL UI, you will see "+" icons or "+ Add Test" buttons appear. These provide context-aware shortcuts for easily creating relevant tests.

At each of these locations, a test creation drawer opens on the right side of the page with several fields pre-populated from the button's context, alongside a history of the statistic where relevant. Here are some of the best places to look for DBNL-assisted test creation:

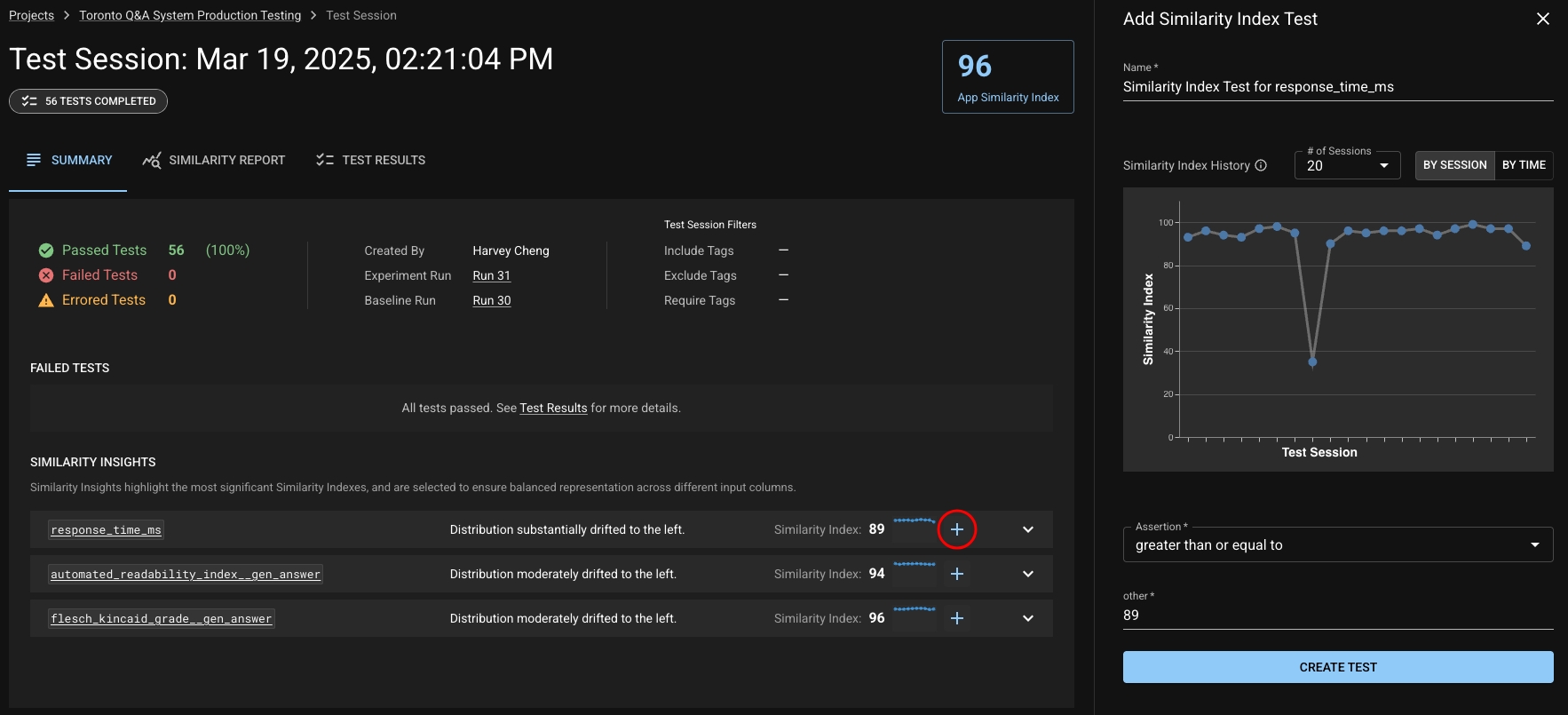

Similarity Insights

When you're looking at a Test Session, DBNL provides insights about which columns or metrics have demonstrated the most drift. These are great candidates for tests if you want to be alerted specifically about their behavior. Click the "Add Test" button to create a test on the Similarity Index of the relevant column. The Similarity Index history graph can help guide your choice of threshold.

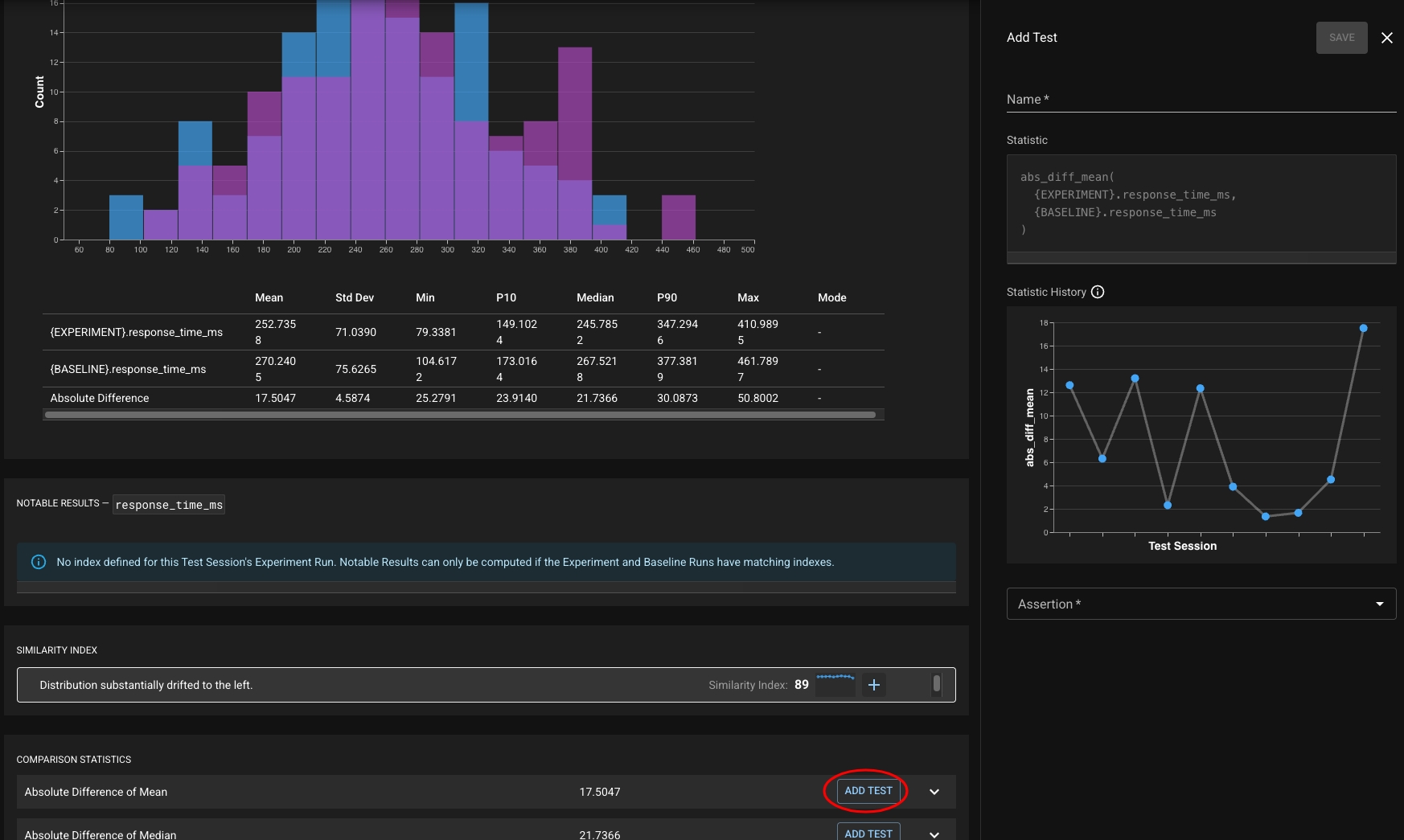

Column or Metric Details

When scrolling through the details of a column or metric from a Test Session (reached by drilling into that column or metric from the Similarity Insights or Similarity Report), you'll find several "Add Test" buttons for quickly creating a test on a relevant statistic or Similarity Index. The corresponding history graphs can help guide your choice of threshold.

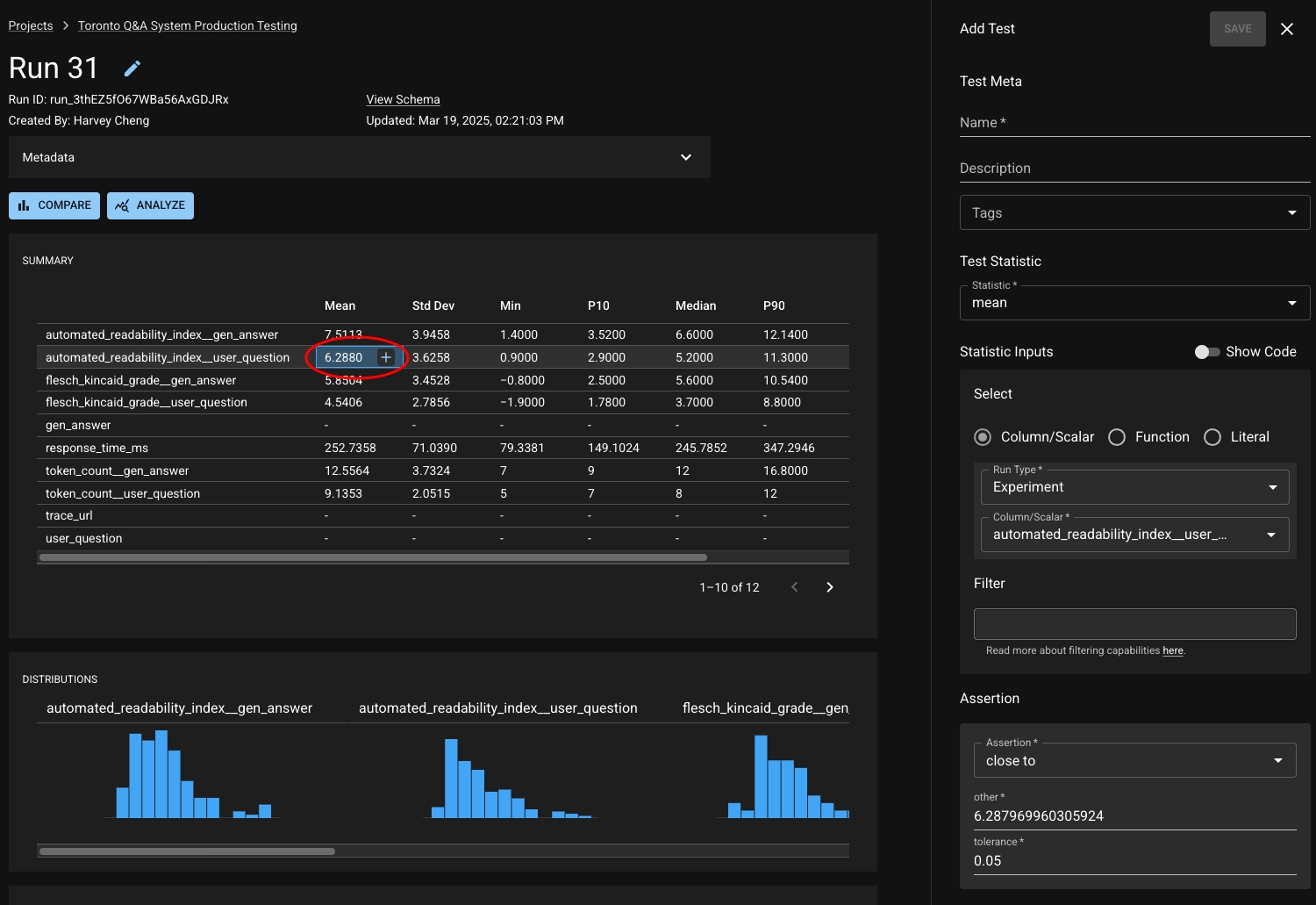

Summary Statistics Table

When viewing a Run, each entry in the summary statistics table can be used to seed creation of a test for that chosen statistic.

And More!

These shortcuts appear in several other places in the UI as well when you are inspecting your Runs and Test Sessions; keep an eye out for the "+"!

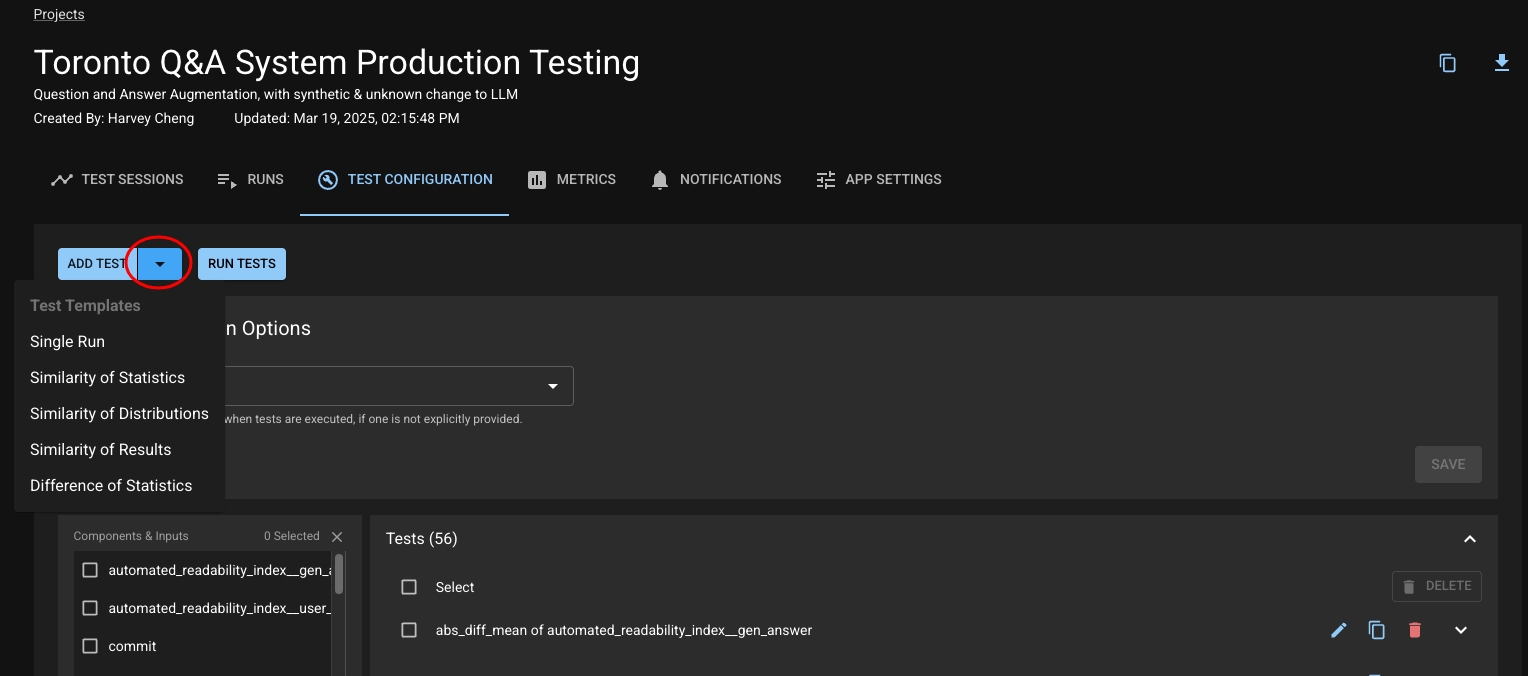

Templated Tests

Test templates are macros for basic test patterns recommended by Distributional. They let you quickly create tests from a builder in the UI. Distributional provides five classes of test templates:

Single Run: These test a parametric statistic of a column from a single Run.

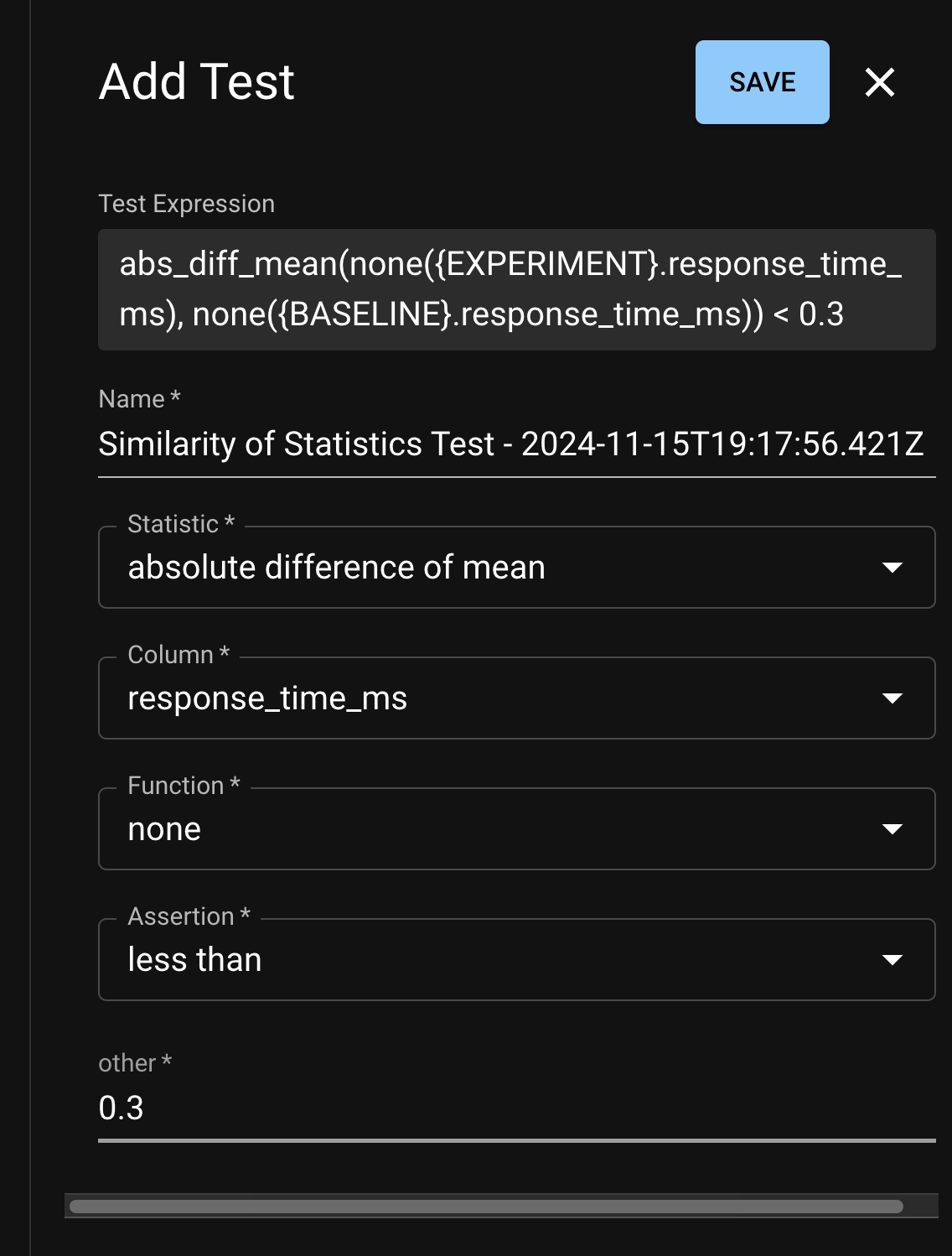

Similarity of Statistics: These test whether the absolute difference of a statistic of a column between two Runs is less than a threshold.

Similarity of Distributions: These test whether a column from two different Runs is similarly distributed, using a nonparametric statistic.

Similarity of Results: These are tests on the row-wise absolute difference of results between two Runs.

Difference of Statistics: These test the signed difference of a statistic of a column between two Runs.
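The five template classes can be summarized as plain Python predicates. This is a minimal sketch under stated assumptions: the function names are hypothetical, calling the two Runs `baseline` and `experiment` is an assumption made for readability, the two-sample Kolmogorov-Smirnov statistic stands in for whichever nonparametric statistic a template actually offers, and Similarity of Results assumes the two Runs' rows are aligned.

```python
from bisect import bisect_right
from statistics import mean


def single_run(values, statistic, assertion):
    """Single Run: a statistic of one column from one Run, checked by an assertion."""
    return assertion(statistic(values))


def similarity_of_statistics(baseline, experiment, statistic, threshold):
    """Similarity of Statistics: |stat(experiment) - stat(baseline)| < threshold."""
    return abs(statistic(experiment) - statistic(baseline)) < threshold


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    gap = 0.0
    for x in set(a) | set(b):
        # bisect_right counts the sorted values <= x, i.e. each empirical CDF at x
        gap = max(gap, abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b)))
    return gap


def similarity_of_distributions(baseline, experiment, threshold):
    """Similarity of Distributions: a nonparametric distance (here KS) below a threshold."""
    return ks_statistic(baseline, experiment) < threshold


def similarity_of_results(baseline, experiment, statistic, threshold):
    """Similarity of Results: a statistic of the row-wise |difference| below a threshold.

    Assumes both Runs contain the same rows in the same order.
    """
    if len(baseline) != len(experiment):
        raise ValueError("row-wise comparison needs Runs with the same number of rows")
    return statistic([abs(x - y) for x, y in zip(baseline, experiment)]) < threshold


def difference_of_statistics(baseline, experiment, statistic, assertion):
    """Difference of Statistics: assertion on the signed stat(experiment) - stat(baseline)."""
    return assertion(statistic(experiment) - statistic(baseline))


baseline = [10.0, 12.0, 11.0, 13.0]
experiment = [11.0, 12.0, 12.0, 14.0]
print(single_run(experiment, max, lambda v: v <= 14.0))                       # True
print(similarity_of_statistics(baseline, experiment, mean, 1.0))               # True (0.75 < 1.0)
print(similarity_of_distributions(baseline, experiment, 0.5))                  # True (KS = 0.25)
print(similarity_of_results(baseline, experiment, mean, 1.0))                  # True (mean |diff| = 0.75)
print(difference_of_statistics(baseline, experiment, mean, lambda d: d > 0))   # True (mean went up)
```

The signed difference is what separates Difference of Statistics from Similarity of Statistics: it can assert a direction (for example, "the mean must not go down"), while the absolute difference only bounds the size of a change.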

From the Test Configuration tab on your Project, click the dropdown next to "Add Test".

Select one of the five options. A Test Creation drawer will appear where you can edit the statistic, column, and assertion you want. Note that each Test Template supports only a limited set of statistics.

Creating Tests Manually (Advanced)

If you have a good idea of what you want to test or just want to explore, you can create tests manually, either from the UI or via the Python SDK.

Let's say you are building a Q&A chatbot, and you have a column for the length of your bot's responses, word_count. Perhaps you want to ensure that your bot never outputs more than 100 words; in that case, you'd choose:

The statistic: max

The assertion: less than or equal to

The threshold: 100
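Here is a minimal Python sketch of what this test checks, assuming the `word_count` column is derived by splitting each response on whitespace. The helper names and sample responses are hypothetical; in DBNL you would define the test in the UI or the SDK rather than run this code.

```python
def word_count(text: str) -> int:
    # Assumption: words are whitespace-separated tokens
    return len(text.split())


def max_word_count_test(responses, threshold=100):
    """Statistic: max; assertion: less than or equal to; threshold: 100."""
    statistic = max(word_count(r) for r in responses)
    return statistic, statistic <= threshold


responses = [
    "Paris is the capital of France.",
    "Sure! Here are three options: A, B, and C.",
    "I don't know.",
]
print(max_word_count_test(responses))  # (9, True)
```

Because the statistic is the max, a single over-long response anywhere in the Run is enough to fail the test.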

But what if you're not opinionated about the specific length? You just want to ensure that your app is behaving consistently as it runs and doesn't suddenly start being unusually wordy or terse. DBNL makes it easy to test that as well; you might go with:

The statistic: absolute difference of mean

The assertion: less than

The threshold: 20
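This consistency check compares the mean word count of the current Run against that of an earlier baseline Run. Below is a minimal sketch with made-up word counts; the function name is hypothetical. Because the difference is absolute, a Run that drifts too wordy and one that drifts too terse both fail.

```python
from statistics import mean


def abs_diff_of_mean_test(baseline_counts, current_counts, threshold=20.0):
    """Statistic: absolute difference of mean; assertion: less than; threshold: 20."""
    diff = abs(mean(current_counts) - mean(baseline_counts))
    return diff, diff < threshold


baseline = [40.0, 55.0, 62.0, 48.0]  # mean 51.25 words
steady = [45.0, 50.0, 60.0, 52.0]    # mean 51.75
wordy = [90.0, 110.0, 85.0, 95.0]    # mean 95.0
terse = [5.0, 8.0, 10.0, 7.0]        # mean 7.5

for name, run in [("steady", steady), ("wordy", wordy), ("terse", terse)]:
    print(name, abs_diff_of_mean_test(baseline, run))
# steady (0.5, True)
# wordy (43.75, False)
# terse (43.75, False)
```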

Now you're ready to go and create that test, either via the UI or the SDK:

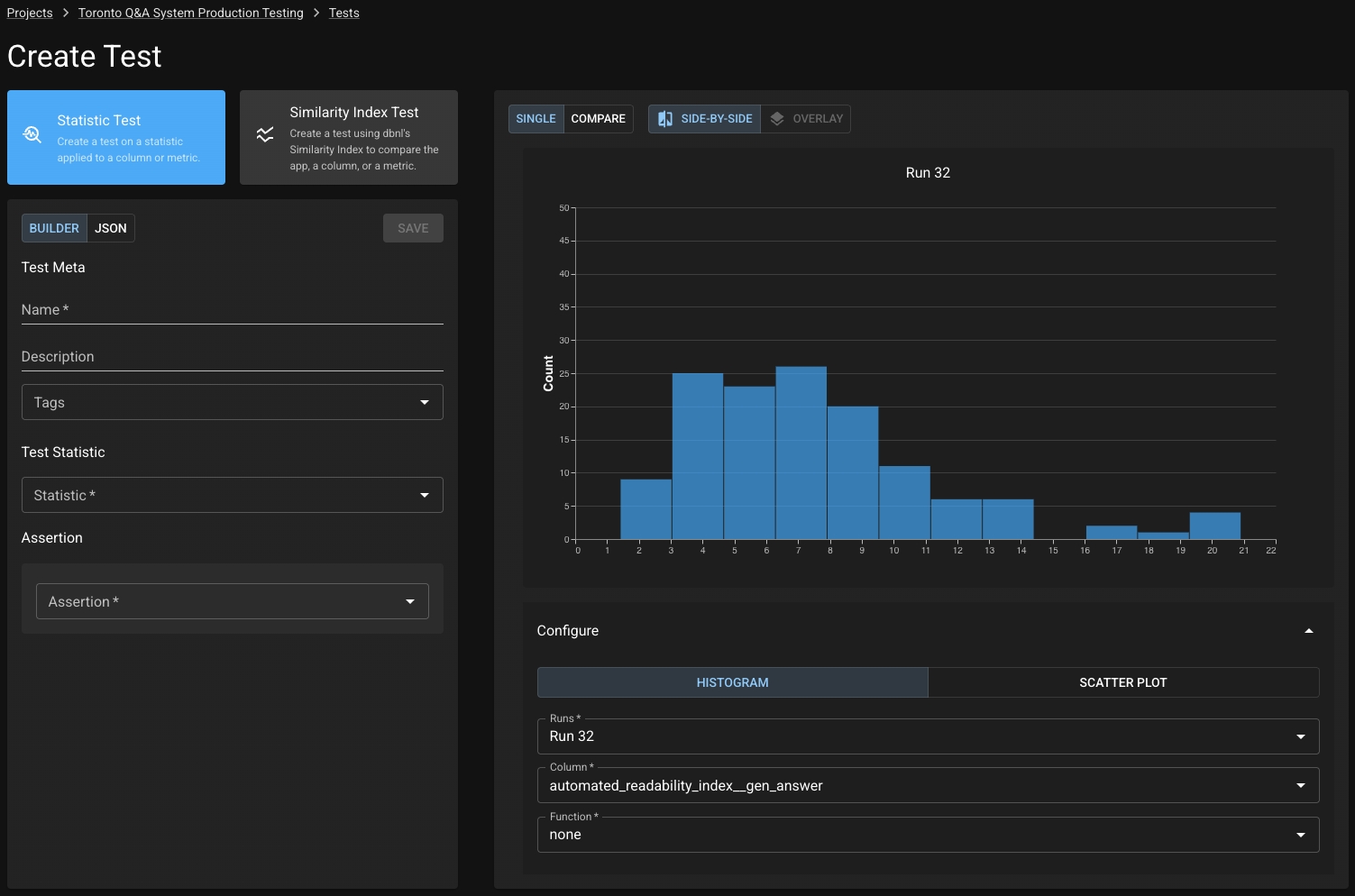

From your Project, click the "Test Configuration" tab.

At the top, click "Add Test" to open the test creation page.

On the left side, configure your test by choosing a statistic and assertion from the dropdown menus. You can use our builder or write a test spec in raw JSON (you can see some example test spec JSONs here). On the right, you can browse the data of recent Runs to help you decide which statistics and thresholds define acceptable behavior.
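One way to turn recent Run data into a threshold for an absolute-difference test is to look at how much the statistic has moved between consecutive Runs. The sketch below is a hypothetical heuristic, not how DBNL chooses or recommends thresholds; the function name and the 1.5 safety margin are arbitrary assumptions you would tune.

```python
def suggest_threshold(run_means, margin=1.5):
    """Hypothetical heuristic: largest run-to-run change seen so far, times a safety margin."""
    if len(run_means) < 2:
        raise ValueError("need the statistic from at least two Runs")
    changes = [abs(later - earlier) for earlier, later in zip(run_means, run_means[1:])]
    return max(changes) * margin


# Mean word_count from five recent Runs (made-up values)
recent_means = [51.0, 54.0, 49.0, 52.0, 50.0]
print(suggest_threshold(recent_means))  # 7.5
```

A threshold derived this way only reflects the variation in the Runs you have seen so far, so it is worth revisiting as more Runs accumulate.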

You can see a full list with descriptions of available statistics and assertions here.
