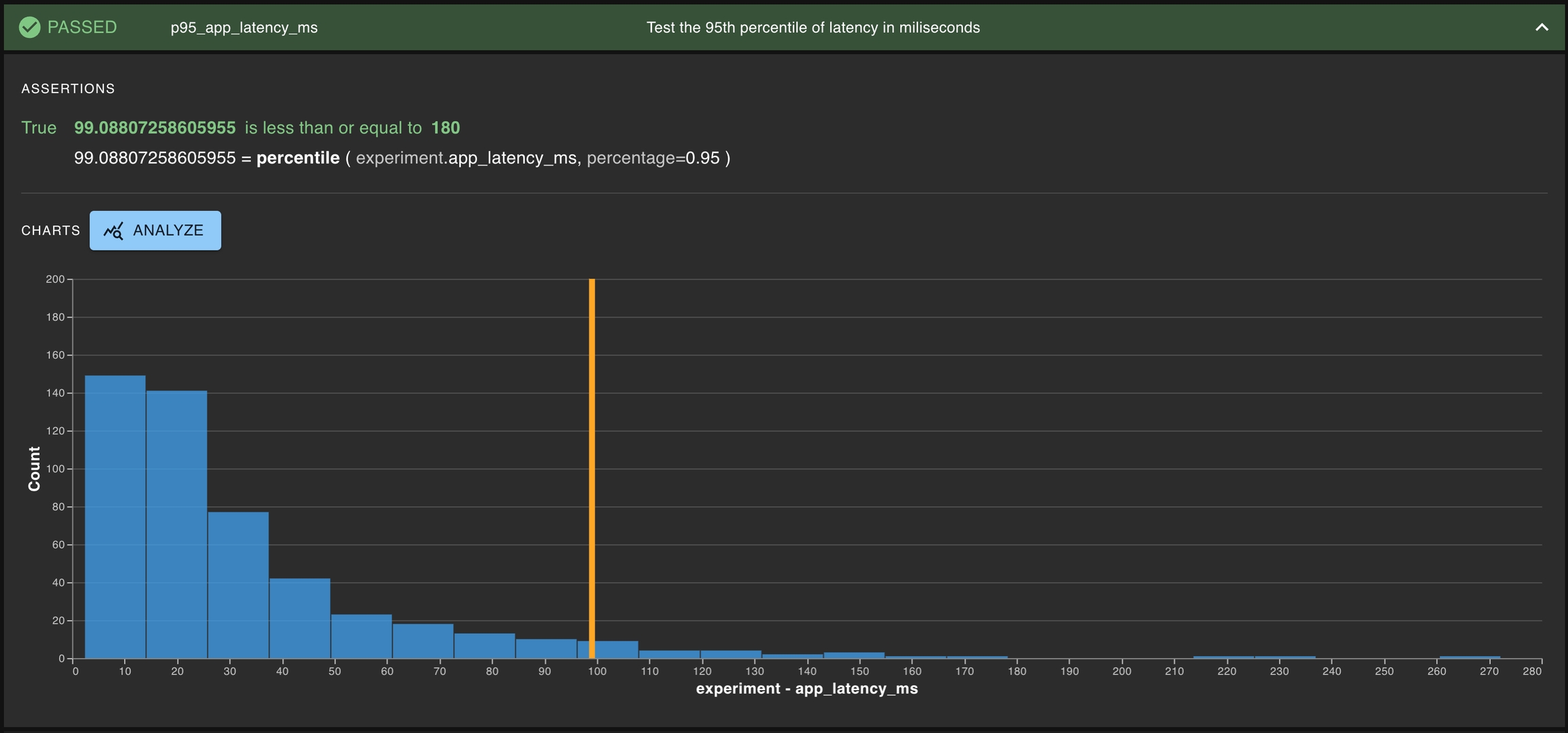

Test That a Given Distribution Has Certain Properties

A common type of test checks whether a single distribution has some property of interest. Generally, this means determining whether a statistic of that distribution, such as a mean or a percentile, exceeds some threshold. Examples include testing the toxicity of a given LLM or the latency of an entire AI-powered application.

This is especially common in development testing, where it is important to check whether a proposed app meets the minimum threshold for what is acceptable.
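
As a minimal sketch of such a threshold test, the snippet below checks whether the mean of a set of measurements is significantly greater than a threshold, using a one-sided z-test. The function name `mean_exceeds_threshold`, the sample values, and the significance level `alpha=0.05` are hypothetical choices for illustration, and the normal approximation is an assumption that is only reasonable for larger samples; a t-test or a percentile-based check may fit a real application better.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_exceeds_threshold(samples, threshold, alpha=0.05):
    """One-sided z-test of H0: mean <= threshold vs. H1: mean > threshold.

    Returns (exceeds, sample_mean, p_value), where `exceeds` is True when
    the sample mean is significantly greater than `threshold`.
    Assumes a normal approximation for the sample mean.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to estimate variance")

    sample_mean = mean(samples)
    std_err = stdev(samples) / sqrt(n)

    if std_err == 0:
        # All samples are identical, so compare the mean directly.
        exceeds = sample_mean > threshold
        return exceeds, sample_mean, 0.0 if exceeds else 1.0

    z = (sample_mean - threshold) / std_err
    # Upper-tail probability of observing a z at least this large under H0.
    p_value = 1.0 - NormalDist().cdf(z)
    return p_value < alpha, sample_mean, p_value


# Hypothetical latency measurements in seconds (illustrative values only).
latencies = [1.2, 1.3, 1.1, 1.25, 1.15, 1.3, 1.2, 1.1, 1.35, 1.25]
exceeds, avg, p = mean_exceeds_threshold(latencies, threshold=1.0)
print(f"mean={avg:.3f}s, p-value={p:.4f}, exceeds threshold: {exceeds}")
```

The same function can be reused for other metrics, such as per-response toxicity scores, by passing a different list of measurements and an appropriate threshold.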
