Metrics

What are Metrics?

Metrics are measurable properties that quantify specific characteristics of your data. Metrics can be user-defined: you provide a numeric column, computed from your source data, alongside your application data.

Alternatively, the Distributional SDK offers a comprehensive set of metrics for evaluating various aspects of text and LLM outputs. Computing metrics with Distributional's methods enables better data exploration and application stability monitoring.

The SDK documentation contains more details on metrics, including example usage.
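
As a minimal sketch of the user-defined case, the snippet below derives a numeric column from source data with pandas so that it sits alongside the application data. The column name `answer_char_count` and the choice of character length as the metric are illustrative assumptions, not part of the SDK.

```python
import pandas as pd

# Application data: one row per answer.
df = pd.DataFrame(
    {
        "id": [1, 2, 3],
        "answer": [
            "To be happy and fulfilled.",
            "It's a question of aerodynamics.",
            "Nineveh was the capital of Assyria.",
        ],
    }
)

# Hypothetical user-defined metric: answer length in characters.
# Any numeric column computed from the source data could play this role.
df["answer_char_count"] = df["answer"].str.len()
```

The DataFrame now carries the metric as an ordinary numeric column next to the original data, which is the shape the description above calls for.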

Using Metrics

The SDK provides convenient functions for computing metrics from your data and reporting the results to Distributional:

import dbnl
import dbnl.eval
import pandas as pd

# login to dbnl
dbnl.login()

project = dbnl.create_project(name="Metrics Project")

df = pd.DataFrame(
    {
        "id": [1, 2, 3],
        "question": [
            "What is the meaning of life?",
            "What is the airspeed velocity of an unladen swallow?",
            "What is the capital of Assyria?",
        ],
        "answer": [
            "To be happy and fulfilled.",
            "It's a question of aerodynamics.",
            "Nineveh was the capital of Assyria.",
        ],
        "expected_answer": [
            "42",
            "It's a question of aerodynamics.",
            "Nineveh was the capital of Assyria.",
        ],
    }
)

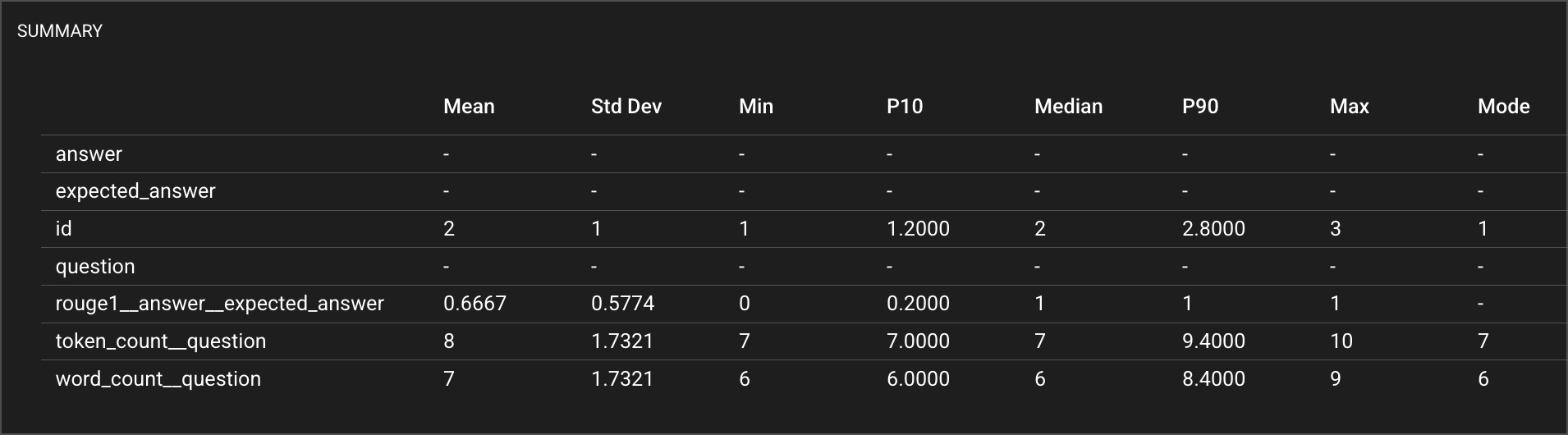

# Create individual metrics
metrics = [
    dbnl.eval.metrics.token_count("question"),
    dbnl.eval.metrics.word_count("question"),
    dbnl.eval.metrics.rouge1("answer", "expected_answer"),
]

# Compute metrics and report results to Distributional
run = dbnl.eval.report_run_with_results(
    project=project, column_data=df, metrics=metrics
)

See the SDK documentation for a more complete list and description of available metrics.
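
To give a sense of what a metric such as `rouge1` quantifies, here is a simplified, standard-library sketch of ROUGE-1 as unigram-overlap F1 between an answer and its expected answer. It is not the SDK's implementation: the function name `rouge1_f1` and the lowercase, whitespace-split tokenization are assumptions made for illustration, and the SDK's tokenization and scoring details may differ.

```python
from collections import Counter


def rouge1_f1(prediction: str, target: str) -> float:
    """Unigram-overlap F1 between two strings (simplified ROUGE-1)."""
    # Assumed tokenization: lowercase, then split on whitespace.
    pred_tokens = Counter(prediction.lower().split())
    target_tokens = Counter(target.lower().split())

    # Clipped overlap: each unigram counts at most as often as it
    # appears in both strings.
    overlap = sum((pred_tokens & target_tokens).values())
    if overlap == 0:
        return 0.0

    precision = overlap / sum(pred_tokens.values())
    recall = overlap / sum(target_tokens.values())
    return 2 * precision * recall / (precision + recall)


answers = [
    "To be happy and fulfilled.",
    "It's a question of aerodynamics.",
    "Nineveh was the capital of Assyria.",
]
expected_answers = [
    "42",
    "It's a question of aerodynamics.",
    "Nineveh was the capital of Assyria.",
]

# One numeric score per row, comparable to a metric column.
scores = [rouge1_f1(a, e) for a, e in zip(answers, expected_answers)]
```

With the example data, the first row shares no unigrams with its expected answer while the other two rows match exactly, so the scores separate the mismatched row from the matching ones.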

Convenience Functions

The SDK includes helper functions that create common groups of related metrics from a shared set of input columns, such as a prediction column and a target column.

import dbnl
import dbnl.eval
import pandas as pd

# login to DBNL
dbnl.login()

project = dbnl.create_project(name="Metrics Project")

df = pd.DataFrame(
    {
        "id": [1, 2, 3],
        "question": [
            "What is the meaning of life?",
            "What is the airspeed velocity of an unladen swallow?",
            "What is the capital of Assyria?",
        ],
        "answer": [
            "To be happy and fulfilled.",
            "It's a question of aerodynamics.",
            "Nineveh was the capital of Assyria.",
        ],
        "expected_answer": [
            "42",
            "It's a question of aerodynamics.",
            "Nineveh was the capital of Assyria.",
        ],
    }
)

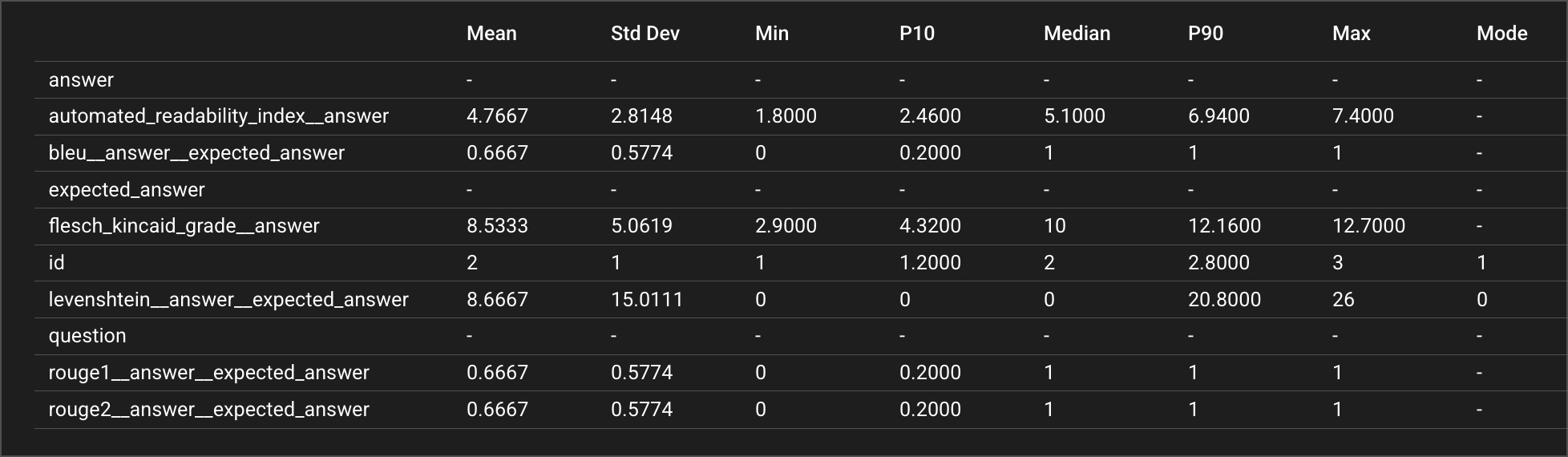

# Get standard text evaluation metrics
text_eval_metrics = dbnl.eval.metrics.text_metrics(
    prediction="answer", target="expected_answer"
)

# Get comprehensive QA evaluation metrics
qa_metrics = dbnl.eval.metrics.question_and_answer_metrics(
    prediction="answer",
    target="expected_answer",
    input="question",
)

# Compute metrics and report results to Distributional
run = dbnl.eval.report_run_with_results(
    project=project, column_data=df, metrics=(text_eval_metrics + qa_metrics)
)

See the SDK documentation for a more complete list and description of available functions.
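
The example above combines the two groups with `+`, which suggests that each helper returns a list of metrics. The sketch below illustrates that pattern in plain Python. `MetricSpec`, `text_metric_group`, `qa_metric_group`, and `compute` are hypothetical stand-ins rather than SDK classes or functions, and the metrics inside each group are chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MetricSpec:
    """Hypothetical metric: a name, its input columns, and a per-row function."""
    name: str
    columns: tuple[str, ...]
    fn: Callable[..., float]


def word_count(text: str) -> float:
    return float(len(text.split()))


def exact_match(prediction: str, target: str) -> float:
    return 1.0 if prediction == target else 0.0


def length_ratio(prediction: str, question: str) -> float:
    question_words = len(question.split())
    return len(prediction.split()) / question_words if question_words else 0.0


def text_metric_group(prediction: str, target: str) -> list[MetricSpec]:
    """Metrics that compare a prediction column with a target column."""
    return [
        MetricSpec(f"word_count__{prediction}", (prediction,), word_count),
        MetricSpec(f"exact_match__{prediction}__{target}", (prediction, target), exact_match),
    ]


def qa_metric_group(prediction: str, input_col: str) -> list[MetricSpec]:
    """Metrics that also look at the input (question) column."""
    return [
        MetricSpec(f"word_count__{input_col}", (input_col,), word_count),
        MetricSpec(f"length_ratio__{prediction}__{input_col}", (prediction, input_col), length_ratio),
    ]


def compute(rows: list[dict[str, str]], metrics: list[MetricSpec]) -> list[dict[str, float]]:
    """Evaluate every metric on every row: one numeric value per metric per row."""
    return [
        {m.name: m.fn(*(row[c] for c in m.columns)) for m in metrics}
        for row in rows
    ]


rows = [
    {
        "question": "What is the capital of Assyria?",
        "answer": "Nineveh was the capital of Assyria.",
        "expected_answer": "Nineveh was the capital of Assyria.",
    }
]

# Groups are plain lists, so they concatenate with `+`.
metrics = text_metric_group("answer", "expected_answer") + qa_metric_group("answer", "question")
results = compute(rows, metrics)
```

Because each group is built from column names alone, the same helper can be reused on any dataset that follows the same column layout.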
