Distributional Concepts

Understanding key concepts and their role relative to your app

Adaptive testing for AI applications requires more information than standard deterministic testing. This is because:

AI applications are multi-component systems where changes in one part can affect others in unexpected ways. For instance, a change in your vector database could affect your LLM's responses, or updates to a feature pipeline could impact your machine learning model's predictions.

AI applications are non-stationary, meaning their behavior changes over time even if you don't change the code. This happens because the world they interact with changes: new data comes in, language patterns evolve, and third-party models get updated. A test that passes today might fail tomorrow, not because of a bug, but because the underlying conditions have shifted.

AI applications are non-deterministic. Even with the exact same input, they might produce different outputs each time. Think of asking an LLM the same question twice: you might get two different, but equally valid, responses. As a result, traditional tests that expect an exact match are unreliable for these applications.
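As a minimal sketch of the non-determinism point, the snippet below uses a hypothetical `fake_llm` function as a stand-in for a real model. The function, prompt, and response templates are illustrative assumptions, not part of any real API. It compares an exact-match check against a property measured over many outputs.

```python
import random

def fake_llm(prompt, rng):
    # Hypothetical stand-in for a non-deterministic model:
    # every template is a valid answer, but the wording varies per call.
    templates = [
        "Paris.",
        "The capital of France is Paris.",
        "It's Paris.",
    ]
    return rng.choice(templates)

rng = random.Random(0)  # seeded only so the sketch is repeatable
prompt = "What is the capital of France?"
outputs = [fake_llm(prompt, rng) for _ in range(200)]

# Exact-match testing: fails whenever the wording differs.
exact_match_rate = sum(o == "Paris." for o in outputs) / len(outputs)

# Property measured across the whole set of outputs: stable.
contains_rate = sum("Paris" in o for o in outputs) / len(outputs)

print(f"exact match rate: {exact_match_rate:.2f}")
print(f"contains 'Paris': {contains_rate:.2f}")
```

The exact-match rate lands well below 1 even though every response is correct, while the property-based rate stays at 1. This is the motivation for measuring behavior over many outputs rather than asserting on a single one.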

To account for this, each time you want to measure the behavior of the AI application, you will need to:

Record outcomes at all of the app’s components, and

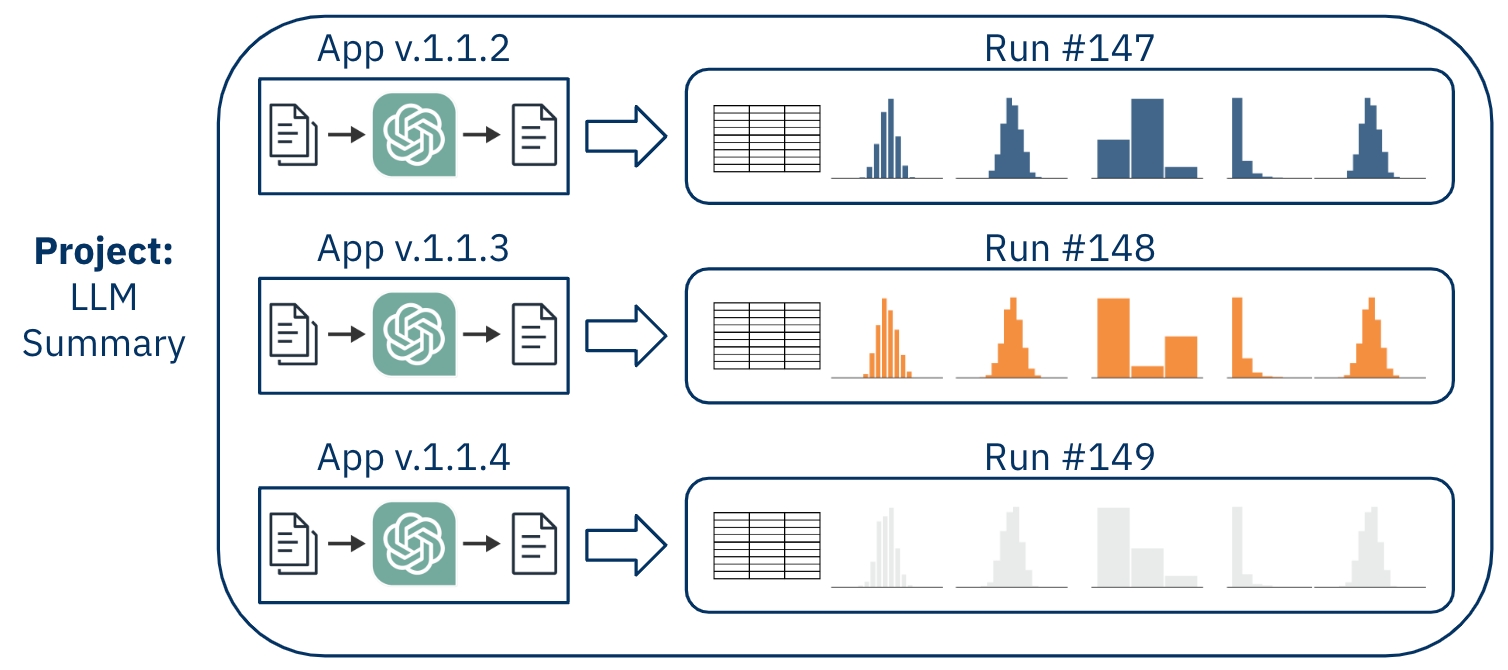

Push a distribution of inputs through the app to study behavior across the full spectrum of possible app usage.

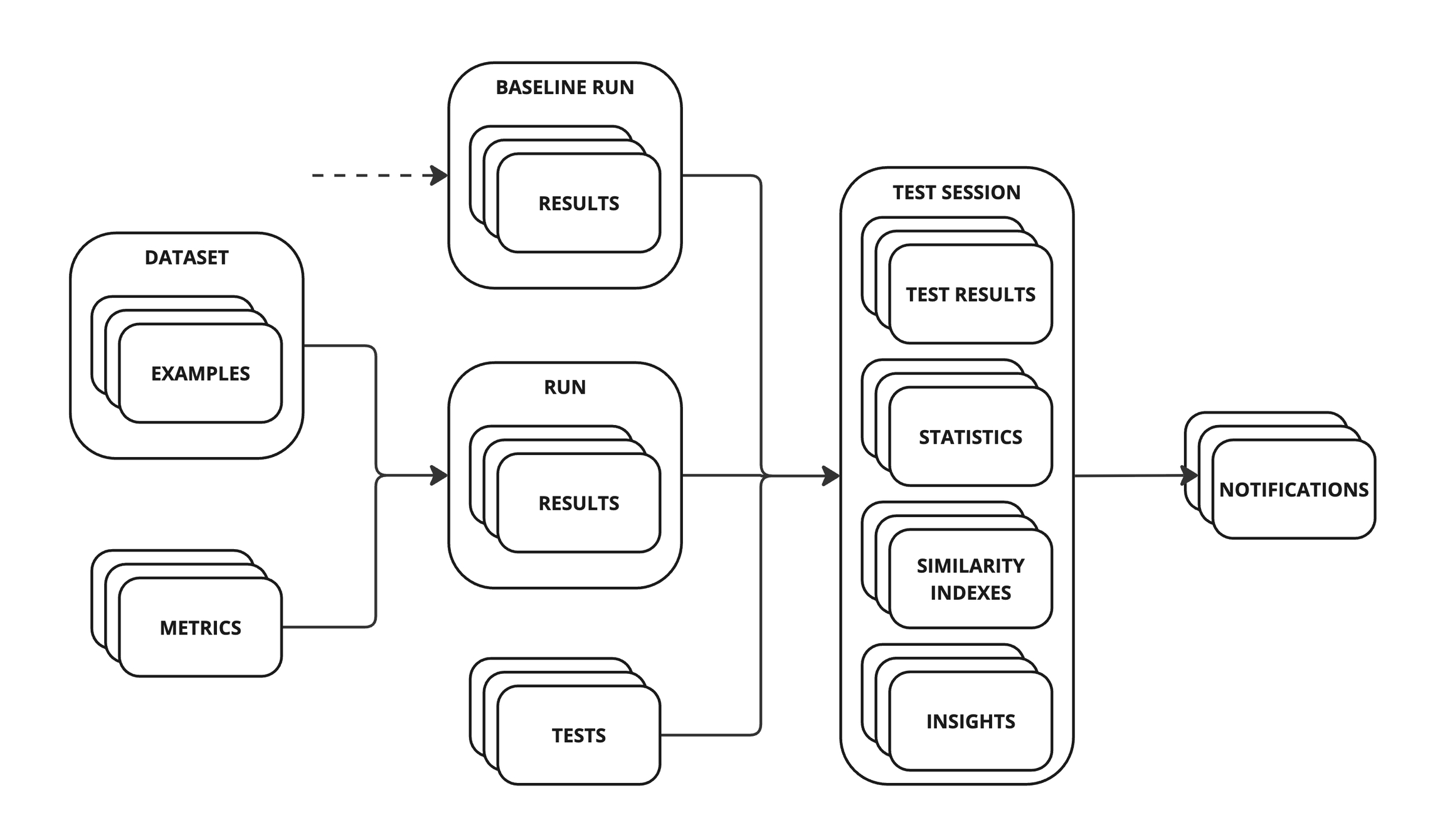
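The two steps above can be sketched in plain Python. The `retrieve` and `generate` functions here are hypothetical stand-ins for app components, and the recorded field names are illustrative assumptions rather than a prescribed schema.

```python
import random

def retrieve(query, rng):
    # Hypothetical retrieval component: returns a relevance score in [0, 1).
    return rng.random()

def generate(query, score, rng):
    # Hypothetical generation component: the answer length depends
    # loosely on the upstream retrieval score.
    n_words = 5 + int(score * 20) + rng.randint(0, 3)
    return "word " * n_words

def run_app_once(query, rng):
    """Run one app usage and record an outcome at every component."""
    score = retrieve(query, rng)
    answer = generate(query, score, rng)
    return {
        "input_length": len(query),
        "retrieval_score": score,
        "answer_word_count": len(answer.split()),
    }

rng = random.Random(42)  # seeded only so the sketch is repeatable

# A distribution of inputs rather than a single hand-picked example.
queries = [f"question number {i}" for i in range(100)]
records = [run_app_once(q, rng) for q in queries]

print(records[0])
```

Recording the intermediate `retrieval_score` alongside the final output makes it possible to see whether a shift in answers originates upstream in retrieval or in the generation step itself.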

The inputs, outputs, and outcomes associated with a single app usage are grouped in a Result, and each value in a Result is described by a Column. The group of Results used to measure app behavior is called a Run. To determine whether an app is behaving as expected, you create a Test, which involves statistical analysis on one or more Runs. When you apply your Tests to the Runs that you want to study, you create a Test Session, which is a permanent record of the behavior of an app at a given time.
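To make the relationships between these terms concrete, here is a minimal sketch in plain Python. The class names (`Run`, `KSTest`, `SessionRecord`), the choice of a two-sample Kolmogorov-Smirnov statistic, and the threshold are all illustrative assumptions, not the product's actual API or its statistical method.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

# A Result: one app usage, mapping each Column name to its value.
Result = Dict[str, float]

@dataclass
class Run:
    """A group of Results used to measure app behavior."""
    results: List[Result]

    def column(self, name: str) -> List[float]:
        return [r[name] for r in self.results]

def ks_statistic(a: List[float], b: List[float]) -> float:
    """Two-sample KS statistic: largest gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    points = sorted(set(a) | set(b))
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in points
    )

@dataclass
class KSTest:
    """A Test: statistical comparison of one Column across two Runs."""
    name: str
    column: str
    threshold: float  # hypothetical pass/fail cutoff

    def evaluate(self, baseline: Run, experiment: Run) -> bool:
        stat = ks_statistic(baseline.column(self.column),
                            experiment.column(self.column))
        return stat <= self.threshold

@dataclass
class SessionRecord:
    """A Test Session: a timestamped record of Test outcomes."""
    outcomes: Dict[str, bool]
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc))

def run_session(tests, baseline, experiment) -> SessionRecord:
    return SessionRecord(
        {t.name: t.evaluate(baseline, experiment) for t in tests})

# Illustrative data: latency in milliseconds for three Runs.
baseline = Run([{"latency_ms": v} for v in range(100)])
similar = Run([{"latency_ms": v} for v in range(1, 101)])
shifted = Run([{"latency_ms": v + 50} for v in range(100)])

tests = [KSTest("latency_is_stable", "latency_ms", threshold=0.2)]
ok_session = run_session(tests, baseline, similar)
bad_session = run_session(tests, baseline, shifted)
print(ok_session.outcomes, bad_session.outcomes)
```

In this sketch, a Test passes when the distribution of a Column in the experiment Run stays close to the baseline Run, which is why the shifted latency Run fails even though no single Result is "wrong" on its own.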
