Running Tests

You can run any tests you've created (or just the default App Similarity Index test) to investigate the behavior of your application.

Running Your Tests

When you run a Test Session, you are running your tests against a given Experiment Run.

Choose a Baseline Run

If you haven't already, read the documentation on setting a Baseline Run. Every method for running tests lets you choose a Baseline Run when you create the Test Session, but you can also set a default.

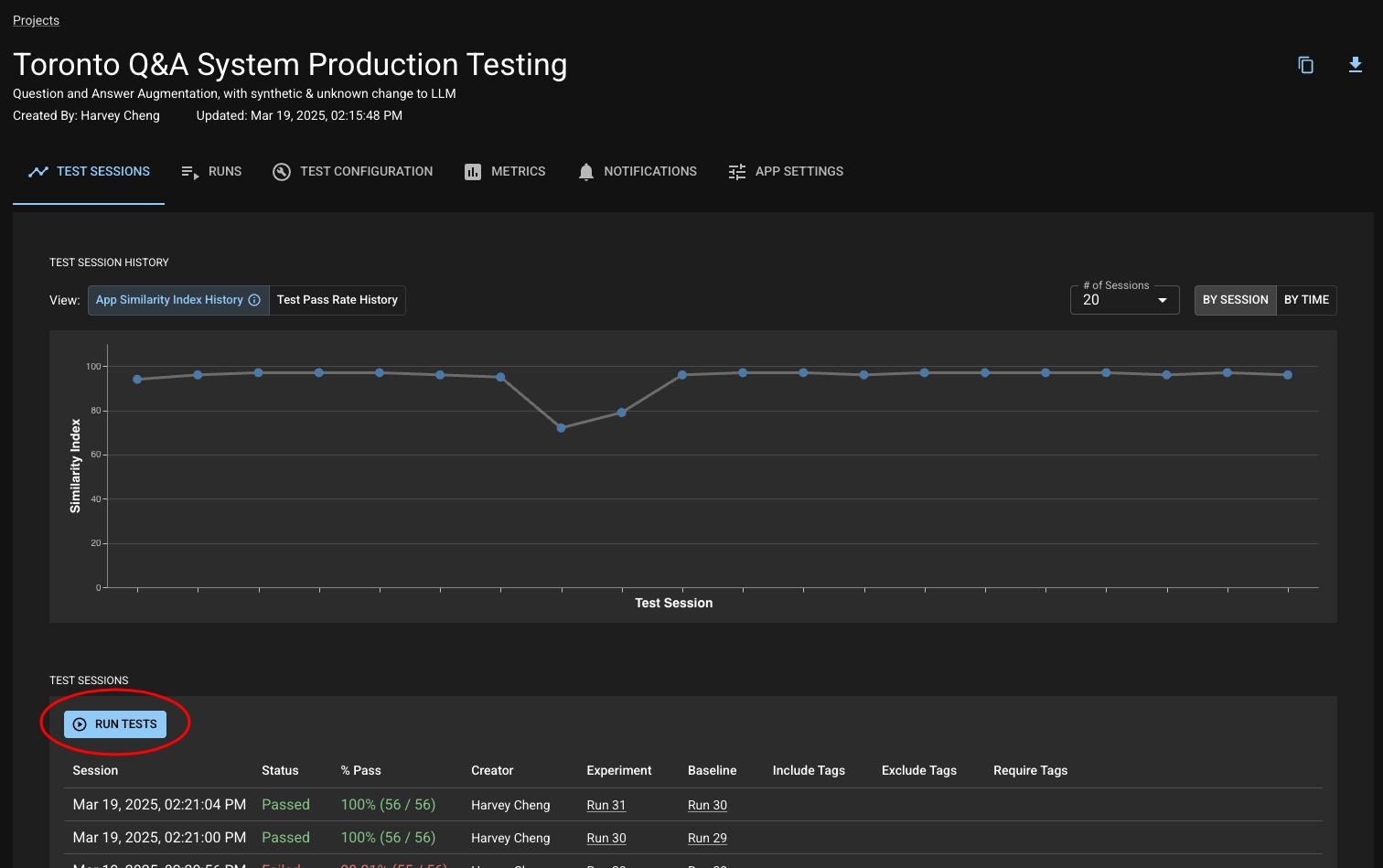

Create a Test Session

Tests run within the context of a Test Session, which is a collection of tests run against an Experiment Run with a Baseline Run. Creating a Test Session immediately runs its tests. You can create one via the UI or the SDK.

In the UI, you can run the tests associated with a Project by clicking the "Run Tests" button on your Project. The button opens a modal where you specify the Baseline and Experiment Runs, as well as the tags of the tests to include in or exclude from the Test Session.
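The tag filters in the modal can be pictured with a small, self-contained sketch. This is an illustrative model only, not the SDK: the `ProjectTest` class, the `select_tests` function, and the test and tag names are all hypothetical. It also assumes that a test is included when it carries at least one included tag and is dropped when it carries any excluded tag; the product's actual matching rules may differ.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProjectTest:
    """Hypothetical stand-in for a test defined on a Project."""
    name: str
    tags: frozenset = field(default_factory=frozenset)


def select_tests(tests, include_tags=None, exclude_tags=None):
    """Return the tests that would be part of a Test Session.

    Assumed semantics (not confirmed by the documentation):
    - a test with any excluded tag is dropped;
    - if include tags are given, a test needs at least one of them;
    - with no filters, every test is selected.
    """
    include = set(include_tags or [])
    exclude = set(exclude_tags or [])
    selected = []
    for test in tests:
        if exclude and test.tags & exclude:
            continue  # carries an excluded tag
        if include and not (test.tags & include):
            continue  # carries none of the included tags
        selected.append(test)
    return selected


# Hypothetical tests and tags, for illustration only.
tests = [
    ProjectTest("app_similarity_index", frozenset({"default"})),
    ProjectTest("latency_p95", frozenset({"performance"})),
    ProjectTest("toxicity_rate", frozenset({"safety", "slow"})),
]

chosen = select_tests(tests, include_tags=["default", "safety"], exclude_tags=["slow"])
print([t.name for t in chosen])
```

Under these assumptions, exclusion takes precedence over inclusion, so a test tagged both "safety" and "slow" is left out of the session.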

Continue on to Reviewing Tests to learn how to view and interpret the results of your Test Session.
