LLM Models

Distributional can call a third-party or self-hosted LLM on your behalf, with your credentials, to generate results or create metrics based on text data inputs.

DBNL supports the following LLM providers:

OpenAI

Azure OpenAI

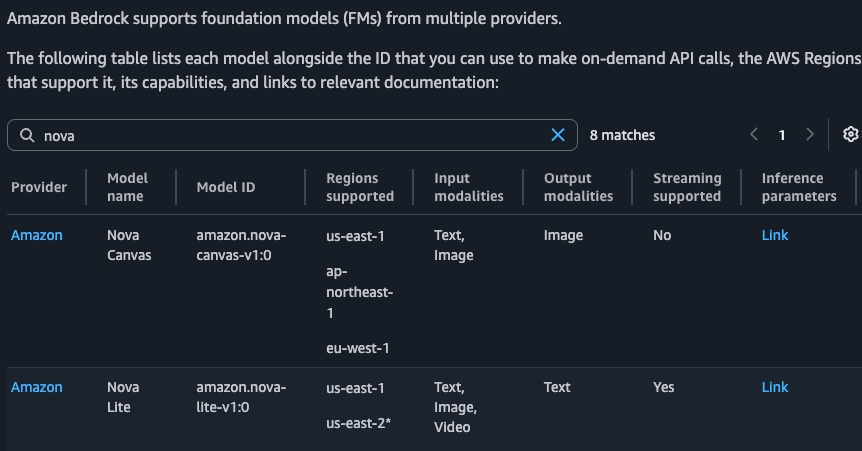

AWS Bedrock

AWS SageMaker

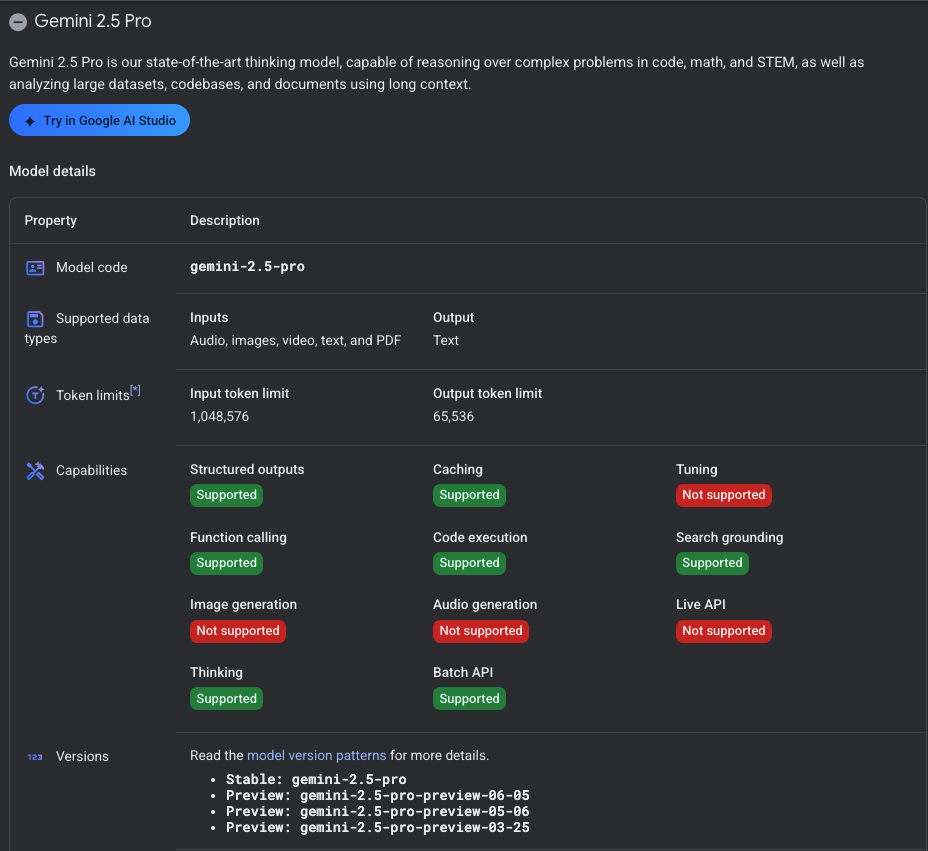

Google Gemini

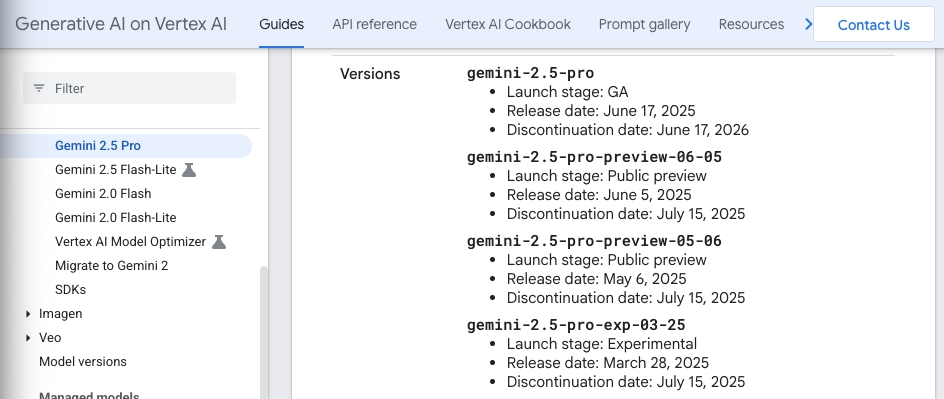

Google VertexAI

Providers

The following arguments are supported for the OpenAI provider.

api_key

API key to access OpenAI

base_url

[Optional] Base URL from which to access LLMs.

If not provided, Distributional calls OpenAI directly. If provided, it should be a valid URL that points to an OpenAI-compatible provider, but not to a specific model. For example:

http://my.test.server.example.com:8083/v1

Models

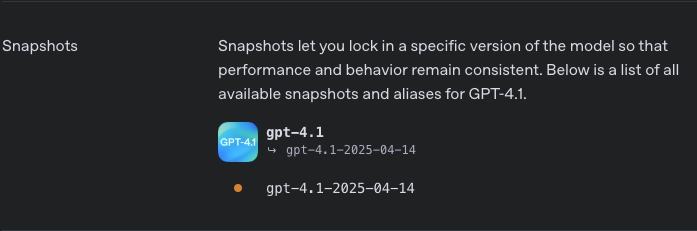

After setting your LLM provider details, you must set the model you want Distributional to access on your behalf. Each provider has its own set of available models. Distributional provides a Validate Model button so you can confirm that your model has been set up correctly.

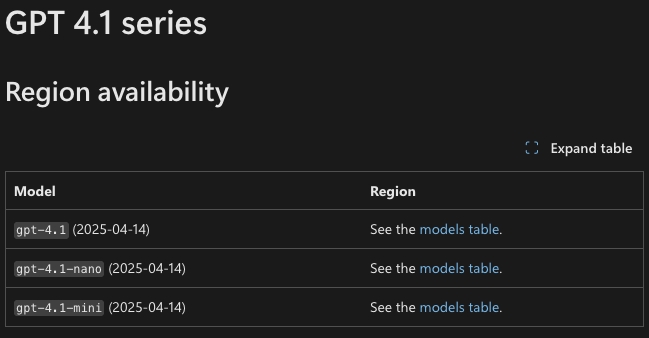
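If you want to sanity-check a base_url and model name yourself before entering them, the sketch below shows one way to do it against an OpenAI-compatible endpoint. It is not how the Validate Model button is implemented, which this page does not describe. The function names are hypothetical, and the default URL is an assumption based on OpenAI's public API. The sketch relies only on the OpenAI convention that a provider lists its models at `<base_url>/models` and returns them under a `data` array of objects with an `id` field.

```python
import json
import urllib.request
from typing import Optional
from urllib.parse import urlparse

# Assumption for this sketch: OpenAI's public API root is used when no base_url is given.
DEFAULT_BASE_URL = "https://api.openai.com/v1"


def models_endpoint(base_url: Optional[str] = None) -> str:
    """Build the model-listing URL for an OpenAI-compatible provider (hypothetical helper)."""
    url = (base_url or DEFAULT_BASE_URL).rstrip("/")
    parsed = urlparse(url)
    # The base URL must be an http(s) URL with a host; it names the provider, not a model.
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not a valid http(s) URL: {base_url!r}")
    return f"{url}/models"


def model_is_listed(model: str, listing: dict) -> bool:
    """Return True if `model` appears as an id in a parsed /models response body."""
    return any(entry.get("id") == model for entry in listing.get("data", []))


def fetch_listing(api_key: str, base_url: Optional[str] = None) -> dict:
    """Fetch the provider's model listing, sending the API key as a bearer token."""
    request = urllib.request.Request(
        models_endpoint(base_url),
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

A typical check would call `fetch_listing(api_key, base_url)` and pass the result to `model_is_listed` with the model name you plan to enter. A self-hosted provider may not expose a model listing at all, in which case the Validate Model button remains the authoritative test.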

See OpenAI's Model documentation to learn more about their available models.
