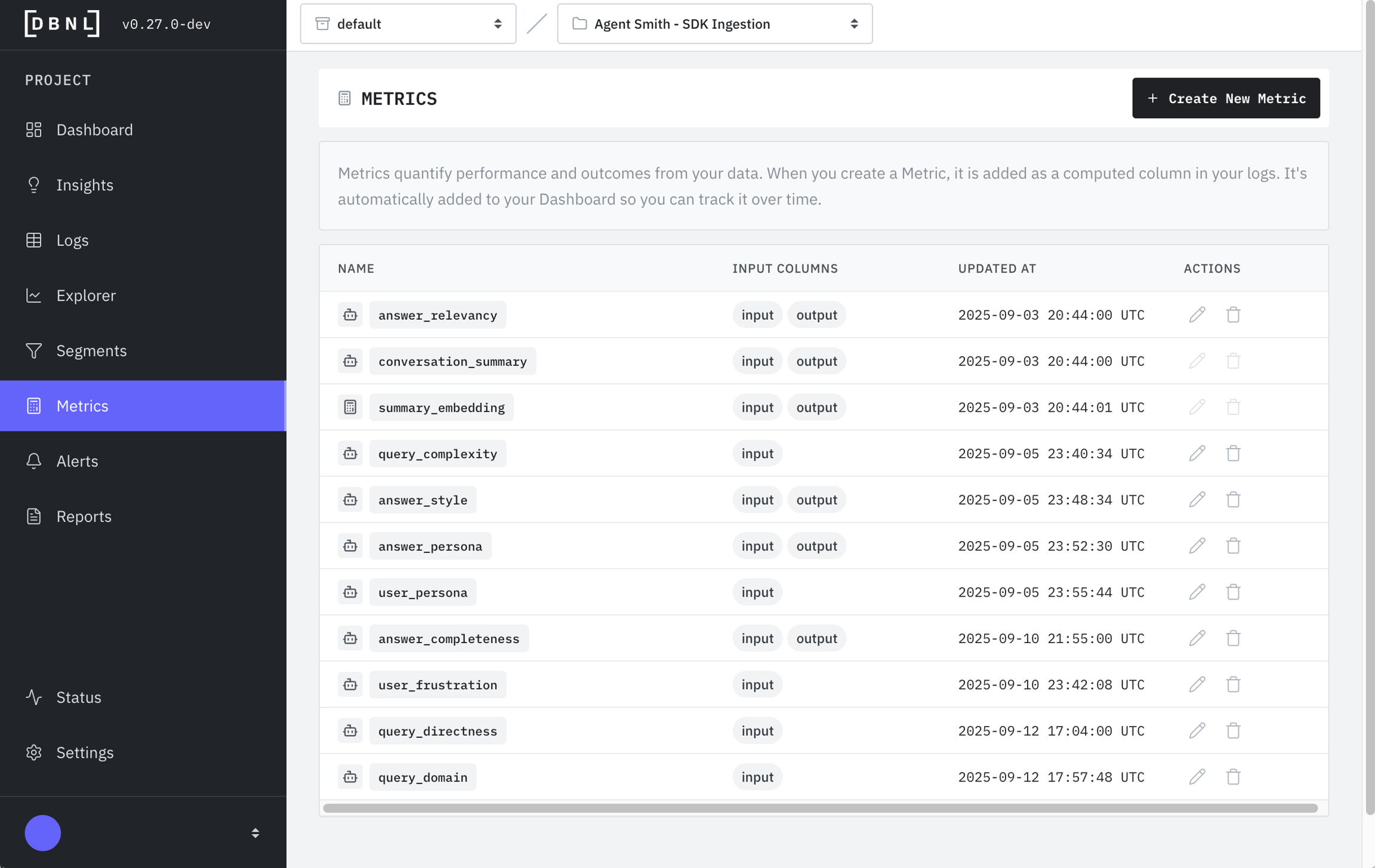

Metrics

Codify signals to track behavior that matters

A Metric is a mapping from Columns to meaningful values (numeric scores or categorical labels) representing cost, quality, performance, or other behavioral characteristics. Metrics are computed for every ingested log or trace as part of the DBNL Data Pipeline and appear in the Logs view, Explorer pages, and Metrics Dashboard.

DBNL comes with many built-in metrics and templates that can be customized. Fundamentally, Metrics are one of two types:

LLM-as-judge Metrics: Evals and judges that require an LLM to compute a score or classification based on a prompt.

Standard Metrics: Functions that can be computed using non-LLM methods like traditional Natural Language Processing (NLP) metrics, statistical operations, and other common mapping functions.

Default Metrics

Every Project contains the following metrics by default, computed using the required input and output fields of the DBNL Semantic Convention and the default Model Connection for the Project:

answer_relevancy: Determines if the input is relevant to the output. See template.

user_frustration: Assesses the level of frustration expressed in the input based on tone, word choice, and other properties. See template.

topic: Classifies the conversation into a topic based on the input and output. This Metric is created after topics are automatically generated from the first 7 days of ingested data. Topics can be manually adjusted by editing the template.

conversation_summary (immutable): A summary of the input and output, used as part of topic generation.

summary_embedding (immutable): An embedding of the conversation_summary, used as part of topic generation.

Creating a Metric

Metrics can be created by clicking on the "+ Create New Metric" button on the Metrics page.

When to Create a Metric

Create custom metrics when you need to:

Track specific business KPIs: Cost per conversation, resolution rate, escalation frequency

Monitor quality signals: Response accuracy, hallucination detection, safety violations

Measure performance: Response time, token efficiency, context utilization

Validate against requirements: Brand tone compliance, length constraints, format adherence

Debug recurring issues: Track patterns identified in Insights or Logs exploration

Good metrics are:

Actionable: The metric should inform decisions or trigger alerts

Measurable: Clear numeric or categorical output for every log

Relevant: Tied to product quality, user experience, or business outcomes

Consistent: Produces reliable results across similar inputs

When to Use Standard vs LLM-as-Judge Metrics

Use Standard Metrics when: You need fast, deterministic calculations (word counts, text length, keyword matching, readability scores)

Use LLM-as-Judge Metrics when: You need semantic understanding (relevance, tone, quality, groundedness)

Standard Metrics are faster and cheaper to compute, so prefer them when possible.

LLM-as-Judge Metrics

LLM-as-Judge Metrics can be customized from the built-in LLM-as-Judge Metric Templates. Each of these Metrics is one of two types:

Classifier Metric: Outputs a categorical value equal to one of a predefined set of classes. Example: llm_answer_groundedness.

Scorer Metric: Outputs an integer in the range [1, 2, 3, 4, 5]. Example: llm_text_frustration.
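To make the difference between the two output types concrete, here is a minimal Python sketch of how a raw judge reply could be checked against each contract. The helpers `parse_classifier` and `parse_scorer` and the class names `grounded`/`ungrounded` are hypothetical illustrations; DBNL's own handling of judge responses is not described here.

```python
from typing import Iterable, Optional

# Hypothetical helpers illustrating the two LLM-as-Judge output types.
# This is not DBNL's parser; it only shows what a valid output looks like.

SCORER_RANGE = range(1, 6)  # Scorer Metrics output an integer in [1, 2, 3, 4, 5]


def parse_classifier(raw: str, classes: Iterable[str]) -> Optional[str]:
    """Return the matching class label, or None if the reply is not in the predefined set."""
    allowed = {c.lower(): c for c in classes}
    return allowed.get(raw.strip().lower())


def parse_scorer(raw: str) -> Optional[int]:
    """Return an integer score from 1 to 5, or None if the reply is not a valid score."""
    try:
        score = int(raw.strip())
    except ValueError:
        return None  # non-numeric replies such as "four" or "4.5"
    return score if score in SCORER_RANGE else None
```

For example, `parse_classifier(" Grounded ", ["grounded", "ungrounded"])` returns `"grounded"`, while `parse_scorer("7")` returns `None` because 7 falls outside the 1 to 5 scale.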

Standard Metrics

Standard Metrics are functions that can be computed using non-LLM methods. They can be built using the Functions available in the DBNL Query Language.

Creating Standard Metrics

Standard Metrics use query language expressions to compute values from your log columns. Here are common examples:

Example 1: Calculate Response Length

Track the word count of AI responses:

Metric Name: response_word_count

Type: Standard Metric

Formula:

word_count({RUN}.output)
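A rough local equivalent can help sanity-check expected values before creating the metric. This Python sketch assumes `word_count` counts whitespace-separated tokens; DBNL's actual tokenization (for example, how it treats punctuation) may differ.

```python
from typing import Optional


def word_count(text: Optional[str]) -> Optional[int]:
    """Approximate word_count({RUN}.output) by counting whitespace-separated tokens.

    Assumption: DBNL's word_count may tokenize differently.
    """
    if text is None:
        return None  # propagate a missing value instead of reporting 0 words
    return len(text.split())  # split() with no argument collapses runs of whitespace
```

For instance, `word_count("The capital of France is Paris.")` returns 6.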

Example 2: Detect Refusal Keywords

Identify when the AI refuses to answer:

Metric Name: contains_refusal

Type: Standard Metric

Formula:

or(or(contains(lower({RUN}.output), "sorry"), contains(lower({RUN}.output), "cannot")), contains(lower({RUN}.output), "unable"))
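The nested `or(contains(lower(...)))` formula above is a case-insensitive substring check against three keywords. A Python sketch of the same logic (the function name and keyword tuple are illustrative, not part of DBNL) makes it easy to test sample outputs locally:

```python
REFUSAL_KEYWORDS = ("sorry", "cannot", "unable")


def contains_refusal(output: str) -> bool:
    """Mirror of or(or(contains(lower(x), "sorry"), ...)): True if any keyword appears."""
    lowered = output.lower()  # same role as lower({RUN}.output)
    return any(keyword in lowered for keyword in REFUSAL_KEYWORDS)
```

Substring matching is cheap but coarse: `contains_refusal("You cannot miss the museum.")` returns `True` even though nothing was refused, and "I can't help with that" is only caught here because it also contains "sorry" or not at all. Treat this metric as a heuristic, or switch to an LLM-as-Judge Metric when precision matters.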

Example 3: Calculate Input Complexity

Measure how complex user prompts are:

Metric Name: input_reading_level

Type: Standard Metric

Formula:

flesch_kincaid_grade({RUN}.input)
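The Flesch-Kincaid grade level is defined as 0.39 × (words / sentences) + 11.8 × (syllables / words) − 15.59. The Python sketch below implements that formula with a naive vowel-group syllable counter and punctuation-based sentence splitting. These counting rules are assumptions, so results will not exactly match DBNL's `flesch_kincaid_grade`.

```python
import re


def count_syllables(word: str) -> int:
    """Naive heuristic: count runs of consecutive vowels, with at least 1 per word."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_kincaid_grade(text: str) -> float:
    """0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59"""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        raise ValueError("text contains no words")
    # Treat each run of ., ! or ? as a sentence boundary; assume at least one sentence.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_sentences = max(1, len(sentences))
    n_syllables = sum(count_syllables(w) for w in words)
    return 0.39 * (len(words) / n_sentences) + 11.8 * (n_syllables / len(words)) - 15.59
```

Very simple text can score below zero: "The cat sat on the mat." has 6 one-syllable words in one sentence, giving 0.39 × 6 + 11.8 × 1 − 15.59 = −1.45.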

Example 4: Detect Question Marks

Check if input is a question:

Metric Name: is_question

Type: Standard Metric

Formula:

contains({RUN}.input, "?")

Example 5: Compare String Similarity

Measure how similar input and output are (useful for detecting parroting):

Metric Name: input_output_similarity

Type: Standard Metric

Formula:

subtract(1.0, divide(levenshtein({RUN}.input, {RUN}.output), max(len({RUN}.input), len({RUN}.output))))
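The formula normalizes Levenshtein edit distance by the longer string's length, so 1.0 means identical and 0.0 means every character differs. The Python sketch below reproduces that calculation with a standard dynamic-programming edit distance. One design choice is an assumption of this sketch: when both strings are empty, the formula as written would divide by zero, so the sketch returns 1.0 instead.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit-cost insertions, deletions, and substitutions."""
    previous = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        current = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute (free when characters match)
            ))
        previous = current
    return previous[-1]


def input_output_similarity(inp: str, out: str) -> float:
    """1 - levenshtein(inp, out) / max(len(inp), len(out))"""
    longest = max(len(inp), len(out))
    if longest == 0:
        return 1.0  # guard: two empty strings are treated as identical, avoiding 0 / 0
    return 1.0 - levenshtein(inp, out) / longest
```

For example, `levenshtein("kitten", "sitting")` is 3, so the similarity is 1 − 3/7 ≈ 0.57. Values close to 1.0 suggest the model is repeating the input back.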

Troubleshooting Metrics

Metric Not Appearing in Logs or Dashboard

Possible causes:

The metric was created after the logs were ingested: metrics are only computed for data ingested after the metric is created

The pipeline run failed during the Enrich step: check the Status page

The metric references a column that doesn't exist in your data

Solution: Check Status page for errors, verify column names, and wait for the next pipeline run.

LLM-as-Judge Metric Returns Unexpected Values

Possible causes:

The Model Connection is using a different model than expected

The evaluation prompt is ambiguous or unclear

The column placeholders (e.g., {input}, {output}) are incorrect

Solution: Test your Model Connection using the "Validate" button, review example logs to check if columns have expected values, and refine the evaluation prompt for clarity.
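A quick way to catch incorrect placeholders is to list every `{name}` in the evaluation prompt and compare it with your column names. This Python sketch assumes placeholders use single-brace syntax like `{input}` (as in the example above), which Python's `string.Formatter.parse` can extract; prompts containing literal braces (for example, inline JSON) would need those braces doubled for this check to work. The helper names are hypothetical.

```python
from string import Formatter


def find_placeholders(prompt: str) -> set:
    """Collect {name} placeholders; Formatter.parse yields (literal, field, spec, conversion)."""
    return {field for _, field, _, _ in Formatter().parse(prompt) if field}


def missing_columns(prompt: str, columns: set) -> set:
    """Placeholders in the prompt that do not match any known column name."""
    return find_placeholders(prompt) - columns
```

For example, a prompt containing the typo `{ouptut}` checked against the columns `{"input", "output"}` reports `{"ouptut"}` as missing.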

Standard Metric Formula Errors

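Common errors include invalid function syntax, column names that do not exactly match your data, and null or zero values passed to functions such as divide.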

Solution: Use the Query Language Functions reference to verify syntax, check column names match your data exactly, and add null/zero checks with conditionals.

Metric Computation is Slow

Possible causes:

LLM-as-Judge metrics are inherently slower because they require a Model Connection call for each log

Your Model Connection has high latency or rate limits

Large log volume

Solution: Use Standard Metrics where possible, consider a faster Model Connection (like local NVIDIA NIM), or increase pipeline timeout settings.
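Because each LLM-as-Judge metric needs one Model Connection call per log, a back-of-the-envelope lower bound on computation time is (logs × LLM-as-Judge metrics) / rate limit. The Python sketch below assumes the rate limit is the only bottleneck and ignores per-request latency and retries; the function name and the numbers in the example are hypothetical.

```python
import math


def min_judge_minutes(num_logs: int, num_llm_metrics: int, requests_per_minute: int) -> int:
    """Lower bound on minutes to score all logs, assuming one call per log per judge metric."""
    if requests_per_minute <= 0:
        raise ValueError("requests_per_minute must be positive")
    total_calls = num_logs * num_llm_metrics
    return math.ceil(total_calls / requests_per_minute)
```

For example, 10,000 logs with 3 LLM-as-Judge metrics at 600 requests per minute need at least 30,000 / 600 = 50 minutes, while replacing one of those judges with a Standard Metric drops the bound to about 34 minutes.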

Metric Values Are All Null

Possible causes:

Required columns are missing from your logs

Formula syntax error causing computation to fail silently

Model Connection is unreachable or returning errors

Solution: Check logs to verify required columns exist, test formula on a small subset, validate Model Connection, and check Status page for pipeline errors.
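To check whether required columns exist, one option is to compute the fraction of sampled logs where each column is missing or null. This Python sketch assumes you have a sample of logs as a list of dictionaries (a hypothetical export format, not a documented DBNL API). A rate of 1.0 means the column is absent or null in every row, which would make any metric that depends on it null as well.

```python
from typing import Any, Dict, Iterable, List, Mapping


def null_rates(rows: Iterable[Mapping[str, Any]], required: Iterable[str]) -> Dict[str, float]:
    """Fraction of rows in which each required column is missing or None."""
    columns: List[str] = list(required)
    counts = {col: 0 for col in columns}
    total = 0
    for row in rows:
        total += 1
        for col in columns:
            if row.get(col) is None:  # covers both an absent key and an explicit None
                counts[col] += 1
    if total == 0:
        return {col: 1.0 for col in columns}  # no rows sampled: treat everything as missing
    return {col: counts[col] / total for col in columns}
```

For example, if one of three sampled rows has `output` set to `None` and another lacks the key entirely, `null_rates(rows, ["input", "output"])` reports `input` at 0.0 and `output` at 2/3.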

Need more help? Visit distributional.com/contact and include your metric definition and any error messages from the Status page.
