Trading Strategy

The following tutorial will help you understand the basics of how to continuously test a multi-component system involving components owned by third parties.

The data files required for this tutorial are available in the following file.

Trading Strategy Introduction

In this tutorial, we use a basic asset trading strategy to illustrate a small multi-component system. This trading strategy makes trading decisions based on tweets.

How the Trading Strategy Works

Two separate components analyze each tweet: one scores its sentiment and the other extracts the entities it mentions. A third component then recommends trades for those entities based on the tweet's sentiment. If the positive sentiment of a tweet is at least 0.7, a set of buy trades is recommended; otherwise, a set of sell trades is recommended.

How to Test the Trading Strategy

The test strategy compares the current behavior of the Trading System with its expected baseline behavior. To test the system, we pass a fixed, representative dataset of tweets through the whole trading system and then compare its behavior, at a granular, distributional level, with a previously recorded baseline run on the same data.

In this tutorial, we will use Distributional's testing platform to run the tests. To do so, we will define a run config, define the tests to run, and write code that compiles the results and sends them to dbnl.

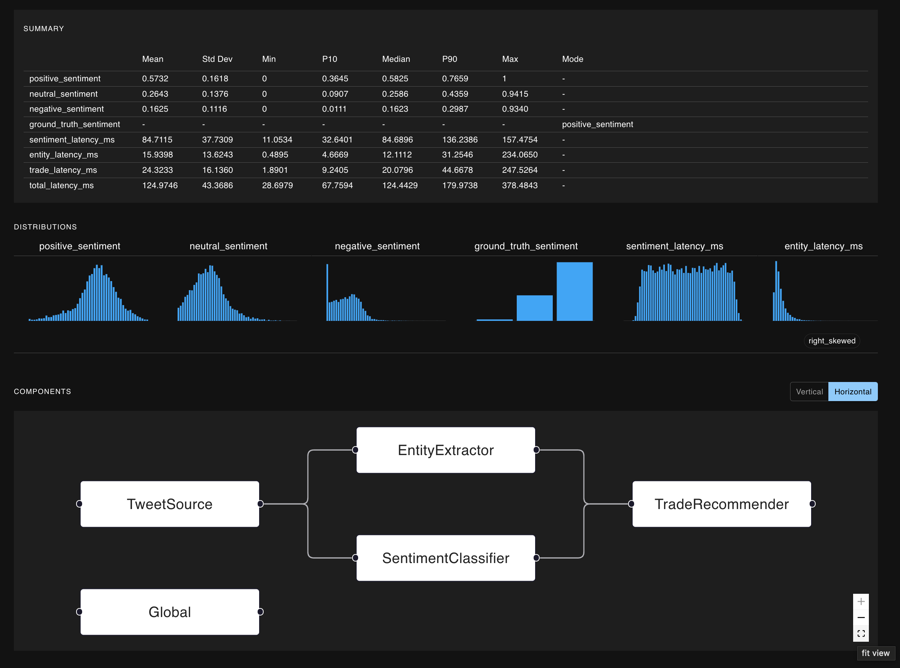

Trading Strategy Composition

The trading strategy is composed of four components: TweetSource, SentimentClassifier, EntityExtractor, and TradeRecommender. Some of these components depend on the outputs of others.

Directed Acyclic Graph

The dependencies between the components of the Trading Strategy form a directed acyclic graph (DAG): TweetSource feeds both the SentimentClassifier and the EntityExtractor, and both of those feed the TradeRecommender. The Global component is a placeholder representing information that is shared (or derived) by more than one component.

Component Definitions

For each component, we describe its purpose, its associated columns and artifacts, which team owns it, and whether it is stochastic.

Component: TweetSource

This component represents the source data used by all of the tests. This benchmark data does not change between runs and serves as the input to the SentimentClassifier and EntityExtractor components in our tests.

Columns:

- tweet_id: Unique identifier for each tweet.
- tweet_content: Tweets related to financial markets.

Component: SentimentClassifier

This component is responsible for analyzing the sentiment of the tweets. Given a tweet, it outputs a sentiment probability vector with three elements (positive, neutral, and negative) that sum to 1. The SentimentClassifier is owned by a separate team and may be stochastic.

Columns:

- positive_sentiment: Measure of positive sentiment, value between 0 and 1.
- neutral_sentiment: Measure of neutral sentiment, value between 0 and 1.
- negative_sentiment: Measure of negative sentiment, value between 0 and 1.
- ground_truth_sentiment: Ground truth sentiment category for the tweet.
- sentiment_latency_ms: Classifier inference time (in milliseconds).
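Because the three sentiment probabilities should each lie between 0 and 1 and sum to 1, a per-row sanity check can catch malformed classifier output before it is submitted. This is a minimal sketch; `check_sentiment_row` and its tolerance are our own illustrative choices, not part of dbnl.

```python
import math

SENTIMENT_COLUMNS = ("positive_sentiment", "neutral_sentiment", "negative_sentiment")


def check_sentiment_row(row: dict, tol: float = 1e-6) -> bool:
    """Return True if the row's sentiment probabilities form a valid distribution.

    Hypothetical helper: each probability must lie in [0, 1] and the three
    must sum to 1 within a small floating-point tolerance.
    """
    probs = [row[col] for col in SENTIMENT_COLUMNS]
    if any(p < 0.0 or p > 1.0 for p in probs):
        return False
    return math.isclose(sum(probs), 1.0, abs_tol=tol)


print(check_sentiment_row(
    {"positive_sentiment": 0.7, "neutral_sentiment": 0.2, "negative_sentiment": 0.1}
))
```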

Component: EntityExtractor

This component is responsible for extracting entities from the tweets. Given a tweet, it will output a list of entities. The EntityExtractor is owned by a separate team and may be stochastic.

Columns:

- entities: List of entities extracted from the tweet.
- entity_latency_ms: Time taken by the entity extractor to process the tweet.

Component: TradeRecommender

This component is responsible for recommending trades based on the sentiment and entities of the tweets. Given the sentiment vector from the SentimentClassifier and the list of entities from the EntityExtractor, it outputs a list of trades. The TradeRecommender is owned by a separate team. It applies the trading strategy deterministically, but its output may still vary between runs because its upstream components are stochastic.

Columns:

- buy_rec: List of company ticker symbols to buy.
- sell_rec: List of company ticker symbols to sell.
- trade_latency_ms: Time taken by the trading model to process the input and make a decision.

Component: Global

This component is a placeholder to represent the information that is shared (or derived) by more than one component. In this case, it represents the total time taken for the system to infer a trading recommendation from a tweet.

Columns:

total_latency_ms: Total time taken for the system to infer a trading recommendation from a tweet.

Creating data and sending it to dbnl

Run configuration

The run configuration defines the columns of data that are associated with the components of the Trading Strategy (listed above), as well as the DAG of the components. The run_config.json file in this tutorial defines the run configuration for the Trading Strategy.

Columns

The columns are defined in the columns field of the run configuration. Consider the columns associated with the EntityExtractor component. Each column is associated with a specific component, and column types are not limited to numbers: the entities column, for example, is of type list. Also note that the data we submit includes application data, such as entity_latency_ms.
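The run_config.json file itself is not reproduced here. As a rough illustration only, the EntityExtractor column entries might be built and serialized as follows. The keys `name`, `type`, `component`, and `description`, and the type strings, are our assumptions about the schema rather than the documented dbnl format, so check the dbnl run-configuration reference before relying on them.

```python
import json

# Hypothetical column entries for the EntityExtractor component.
# Key names and type strings are assumptions, not the confirmed dbnl schema.
entity_extractor_columns = [
    {
        "name": "entities",
        "type": "list",  # a non-numerical column type
        "component": "EntityExtractor",
        "description": "List of entities extracted from the tweet.",
    },
    {
        "name": "entity_latency_ms",
        "type": "float",  # application data rather than model output
        "component": "EntityExtractor",
        "description": "Time taken by the entity extractor to process the tweet.",
    },
]

# Place the entries under a top-level "columns" field and serialize to JSON.
run_config_fragment = {"columns": entity_extractor_columns}
print(json.dumps(run_config_fragment, indent=2))
```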

Components DAG

The components DAG is a dictionary that maps each component name to the list of components that depend on it.
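As a sketch, the dependencies of this tutorial's components can be written in that dictionary form. The edges are inferred from the component descriptions (TweetSource feeds both classifiers, and both feed the TradeRecommender); the Global component is omitted because its edges are not spelled out. `topological_order` is a hypothetical helper, not part of dbnl: it uses Kahn's algorithm to confirm the graph is acyclic and to list components in dependency order.

```python
from collections import deque

# Each key maps to the components that depend on it (its downstream components).
components_dag = {
    "TweetSource": ["SentimentClassifier", "EntityExtractor"],
    "SentimentClassifier": ["TradeRecommender"],
    "EntityExtractor": ["TradeRecommender"],
    "TradeRecommender": [],
}


def topological_order(dag: dict) -> list:
    """Return components in dependency order, or raise ValueError on a cycle."""
    # Count how many upstream components each node depends on.
    in_degree = {node: 0 for node in dag}
    for children in dag.values():
        for child in children:
            in_degree[child] = in_degree.get(child, 0) + 1

    # Kahn's algorithm: repeatedly take a node with no remaining upstream edges.
    queue = deque(node for node, deg in in_degree.items() if deg == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in dag.get(node, []):
            in_degree[child] -= 1
            if in_degree[child] == 0:
                queue.append(child)

    if len(order) != len(in_degree):
        raise ValueError("components DAG contains a cycle")
    return order


print(topological_order(components_dag))
```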

Prepare results for dbnl

In this tutorial, we have prepared a number of parquet files with examples of the data generated by testing the Trading Strategy. In practice, you would create these files yourself, so we cover how to do that now.

Specifically, we will sketch a hypothetical environment, pass the tweets through the system, and track each of the columns associated with the system's components. We will then compile these columns into a parquet file.

In subsequent sections, we will show how to send these parquet files to dbnl and eventually run some tests.

Environment

We'll assume that we can access Snowflake to retrieve the tweets and that the SentimentClassifier and EntityExtractor are external services we call. We'll implement the TradeRecommender and orchestrate the whole process, logging important steps. Finally, we'll save the results as a parquet file.

Steps

Step 1: Simulate Tweets with Ground Truth Sentiment stored in Snowflake

We will simulate fetching tweets from Snowflake. The fetch_tweets function will return a list of tweets with the columns tweet_id, tweet_content, and ground_truth_sentiment.
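Since the Snowflake query is not shown, this minimal stand-in for `fetch_tweets` returns an in-memory list of rows with the three columns named above. The sample tweets and labels are invented placeholders, not tutorial data; in a real setup this function would query Snowflake instead.

```python
from typing import Dict, List


def fetch_tweets() -> List[Dict[str, object]]:
    """Simulate fetching tweets from Snowflake.

    Returns rows with tweet_id, tweet_content, and ground_truth_sentiment.
    The rows are made-up placeholders for illustration only.
    """
    return [
        {"tweet_id": 1, "tweet_content": "Strong quarter for AAPL, beating estimates.",
         "ground_truth_sentiment": "positive"},
        {"tweet_id": 2, "tweet_content": "TSLA recalls vehicles over safety concerns.",
         "ground_truth_sentiment": "negative"},
        {"tweet_id": 3, "tweet_content": "MSFT shares flat ahead of earnings.",
         "ground_truth_sentiment": "neutral"},
    ]


tweets = fetch_tweets()
print(len(tweets), sorted(tweets[0]))
```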

Step 2: Define Component Functions

We'll define a function for each component in the process. Two of these functions call the sentiment classifier and entity extractor services; the third implements the trade recommender. In practice, you would likely call a service for every component.

Component Function: Get Sentiment
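Here, `get_sentiment` is a hypothetical stand-in for the call to the SentimentClassifier team's service. Because that service's API is not described, this sketch scores sentiment with a toy keyword count instead of a real model. What matters is the shape of the output: three probabilities that sum to 1, plus `sentiment_latency_ms` measured around the call.

```python
import time
from typing import Dict

# Toy keyword lists standing in for a real model (illustrative assumption).
POSITIVE_WORDS = {"beat", "beating", "strong", "surge", "growth"}
NEGATIVE_WORDS = {"recall", "recalls", "loss", "drop", "lawsuit"}


def get_sentiment(tweet_content: str) -> Dict[str, float]:
    """Stand-in for the external SentimentClassifier service.

    Returns a positive/neutral/negative probability vector that sums to 1,
    plus the measured inference latency in milliseconds.
    """
    start = time.perf_counter()
    words = {w.strip(".,!?$").lower() for w in tweet_content.split()}
    pos = len(words & POSITIVE_WORDS)
    neg = len(words & NEGATIVE_WORDS)
    # Every class starts at 1.0 so no probability is ever exactly zero.
    scores = {"positive": pos + 1.0, "neutral": 1.0, "negative": neg + 1.0}
    total = sum(scores.values())
    latency_ms = (time.perf_counter() - start) * 1000.0
    return {
        "positive_sentiment": scores["positive"] / total,
        "neutral_sentiment": scores["neutral"] / total,
        "negative_sentiment": scores["negative"] / total,
        "sentiment_latency_ms": latency_ms,
    }
```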

Component Function: Get Entities
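Similarly, `get_entities` is a hypothetical stand-in for the EntityExtractor service. This toy version recognizes a small, made-up set of ticker symbols and company names, and it assumes entities are returned as ticker symbols so that the TradeRecommender can use them directly.

```python
import re
import time
from typing import Dict, List, Union

# Hypothetical universe of tickers and company names the toy extractor knows.
KNOWN_TICKERS = {"AAPL", "MSFT", "TSLA", "AMZN", "GOOG"}
COMPANY_NAMES = {"apple": "AAPL", "microsoft": "MSFT", "tesla": "TSLA", "amazon": "AMZN"}


def get_entities(tweet_content: str) -> Dict[str, Union[List[str], float]]:
    """Stand-in for the external EntityExtractor service.

    Returns the de-duplicated tickers mentioned in the tweet, in order of
    first appearance, and the measured latency in milliseconds.
    """
    start = time.perf_counter()
    entities: List[str] = []
    for token in re.findall(r"[A-Za-z]+", tweet_content):
        # Accept an exact ticker match, otherwise try a company-name lookup.
        ticker = token if token in KNOWN_TICKERS else COMPANY_NAMES.get(token.lower())
        if ticker and ticker not in entities:
            entities.append(ticker)
    latency_ms = (time.perf_counter() - start) * 1000.0
    return {"entities": entities, "entity_latency_ms": latency_ms}
```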

Component Function: Recommend Trades
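The TradeRecommender applies the rule from "How the Trading Strategy Works": buy if the tweet's positive sentiment is at least 0.7, otherwise sell. This sketch assumes every extracted entity is a ticker symbol and receives the same recommendation, which is our reading of the strategy; `recommend_trades` is our own name.

```python
import time
from typing import Dict, List, Union

BUY_THRESHOLD = 0.7  # buy when positive sentiment is at least this value


def recommend_trades(sentiment: Dict[str, float], entities: List[str]) -> Dict[str, Union[List[str], float]]:
    """Recommend buys or sells for a tweet's entities from its sentiment vector."""
    start = time.perf_counter()
    if sentiment["positive_sentiment"] >= BUY_THRESHOLD:
        buy_rec, sell_rec = list(entities), []
    else:
        buy_rec, sell_rec = [], list(entities)
    latency_ms = (time.perf_counter() - start) * 1000.0
    return {"buy_rec": buy_rec, "sell_rec": sell_rec, "trade_latency_ms": latency_ms}
```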

Step 3: Implement the Orchestration

We'll implement the main function that calls each component, logs important information, and compiles the results.
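A minimal orchestration sketch follows. `run_pipeline` is a hypothetical name, and it takes the three component functions as arguments so it works with any implementation that returns the column dictionaries described above. Here total_latency_ms is measured as wall-clock time around each tweet's full pass, which is one reasonable interpretation of the Global column.

```python
import logging
import time
from typing import Callable, Dict, Iterable, List

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("trading_pipeline")


def run_pipeline(
    tweets: Iterable[Dict],
    get_sentiment: Callable[[str], Dict],
    get_entities: Callable[[str], Dict],
    recommend_trades: Callable[[Dict, List[str]], Dict],
) -> List[Dict]:
    """Pass each tweet through every component and return one result row per tweet."""
    rows = []
    for tweet in tweets:
        start = time.perf_counter()
        sentiment = get_sentiment(tweet["tweet_content"])
        extraction = get_entities(tweet["tweet_content"])
        trades = recommend_trades(sentiment, extraction["entities"])
        total_latency_ms = (time.perf_counter() - start) * 1000.0

        # Merge TweetSource columns with each component's output columns.
        row = {**tweet, **sentiment, **extraction, **trades,
               "total_latency_ms": total_latency_ms}
        rows.append(row)
        logger.info("tweet %s: %d entities, buy=%s, sell=%s",
                    tweet["tweet_id"], len(extraction["entities"]),
                    trades["buy_rec"], trades["sell_rec"])
    return rows
```

Passing the components in as arguments keeps the orchestration independent of how each one is implemented, so local stubs and real service clients can be swapped freely.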

Step 4: Save Results as a Parquet File

We'll save the final results in a parquet file.

Test Results Creation

We have now shown how to simulate the trading environment by passing the tweets through the trading system, tracking each of the columns that are associated with the components of the system, and compiling the results into a parquet file.

Send baseline run to dbnl

There are 10 parquet files associated with this tutorial. The first file is the baseline run, against which the other runs are compared.

In this section, we will show how to send the parquet file to dbnl. We will use the dbnl SDK with Python 3.8 or later.

Create a Project, a Run Config, and Submit the Baseline Run

Create a Project

Create a Run Configuration

Load the Baseline Run Results

We have organized the files such that the first alphabetically is the baseline.

We can load this data into a pandas DataFrame.
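A minimal sketch of both steps follows. `split_baseline` and `load_run` are hypothetical helpers, and the directory name in the commented example is a placeholder for wherever you saved the tutorial files.

```python
from pathlib import Path
from typing import List, Tuple


def split_baseline(directory: str) -> Tuple[Path, List[Path]]:
    """Return (baseline_file, other_run_files), relying on alphabetical order."""
    files = sorted(Path(directory).glob("*.parquet"))
    if not files:
        raise FileNotFoundError(f"no parquet files found in {directory}")
    return files[0], files[1:]


def load_run(path: Path):
    """Load one run's results into a pandas DataFrame."""
    import pandas as pd  # imported lazily so split_baseline works without pandas

    return pd.read_parquet(path)


# Example (assumes the tutorial files were saved in a local "data" directory):
# baseline_path, other_paths = split_baseline("data")
# baseline_df = load_run(baseline_path)
```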

Create a new run and submit the baseline test results.

View the DAG of the components and the columns associated with each component

Consider spending some time exploring the Baseline Run. Notice that when you click a component, only the columns associated with that component are displayed.

Set this Run as the Baseline

We can set the run we just created as the baseline for all future tests. Then, whenever a test references the baseline, future runs will automatically be compared to this run.

This action can also be taken at the Test Configuration page.

Designing and executing continuous testing

Once your data is in Distributional, you can conduct continuous integration testing by creating tests that check whether the app's current behavior is consistent with the baseline defined earlier.

Create tests

In this section, we will create tests that compare the baseline run to the other runs. Rather than creating the tests in the web UI, we will create them with the dbnl SDK, although the web UI remains useful for iterating on test design based on insights from dbnl runs. We have prepared a test configuration file that defines the tests we will create.

Test Configuration

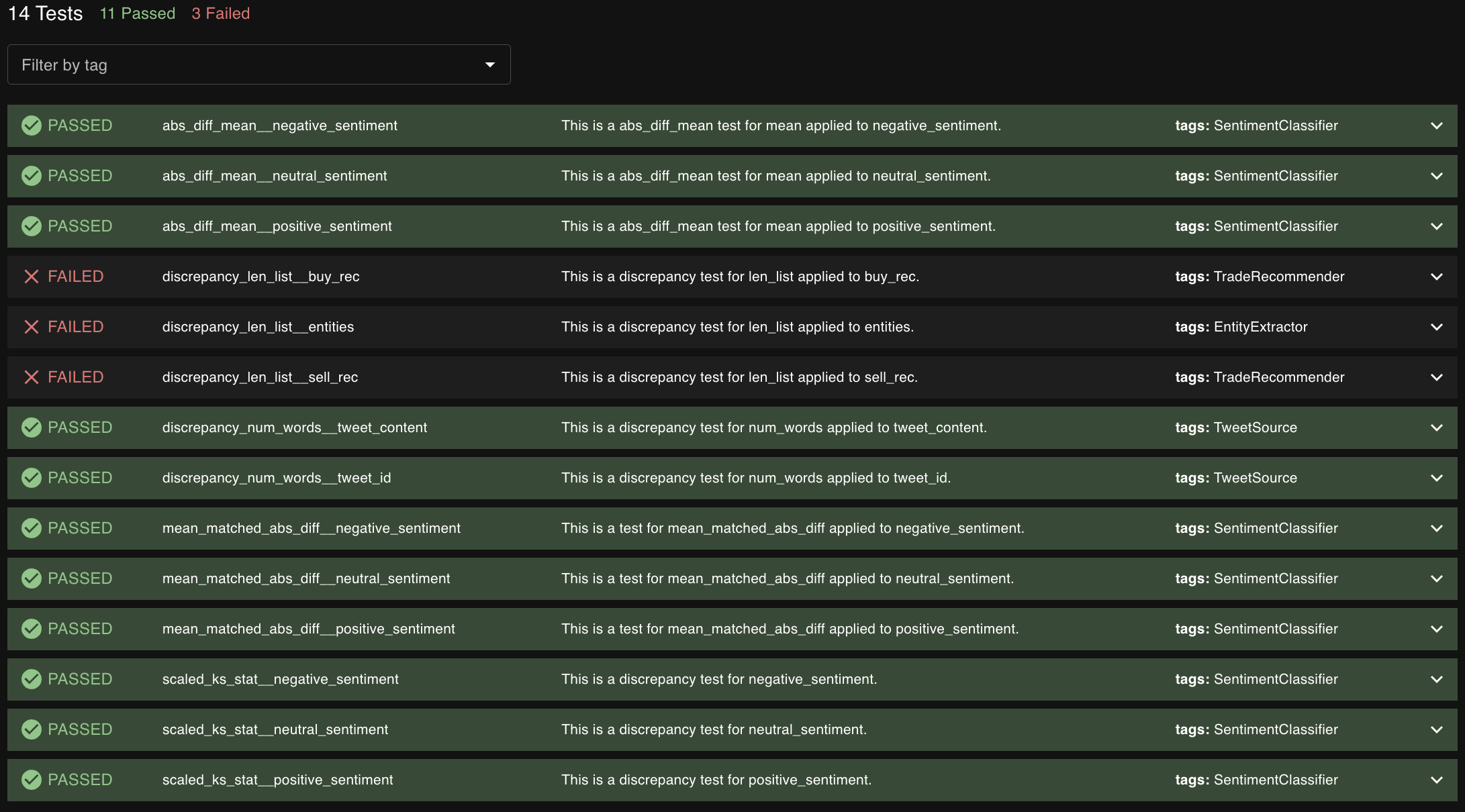

There are 14 tests in total and we show one below.

Example Test Payload (Soon to be deprecated schema)

Below is an example of a test payload that references the baseline run. This payload will eventually need to be populated with the proper project ID and the IDs of the created tags. For now, we demonstrate the syntax for creating a test (the test itself is described in the Sentiment Classifier section below).

What is being Tested?

These are all consistency tests: they check that the system behaves consistently across runs. They do not measure the system's performance against ground truth data; instead, they compare the system against itself at an earlier point in time. Because the input data is held constant, a change in behavior can be attributed to the system rather than to the data.

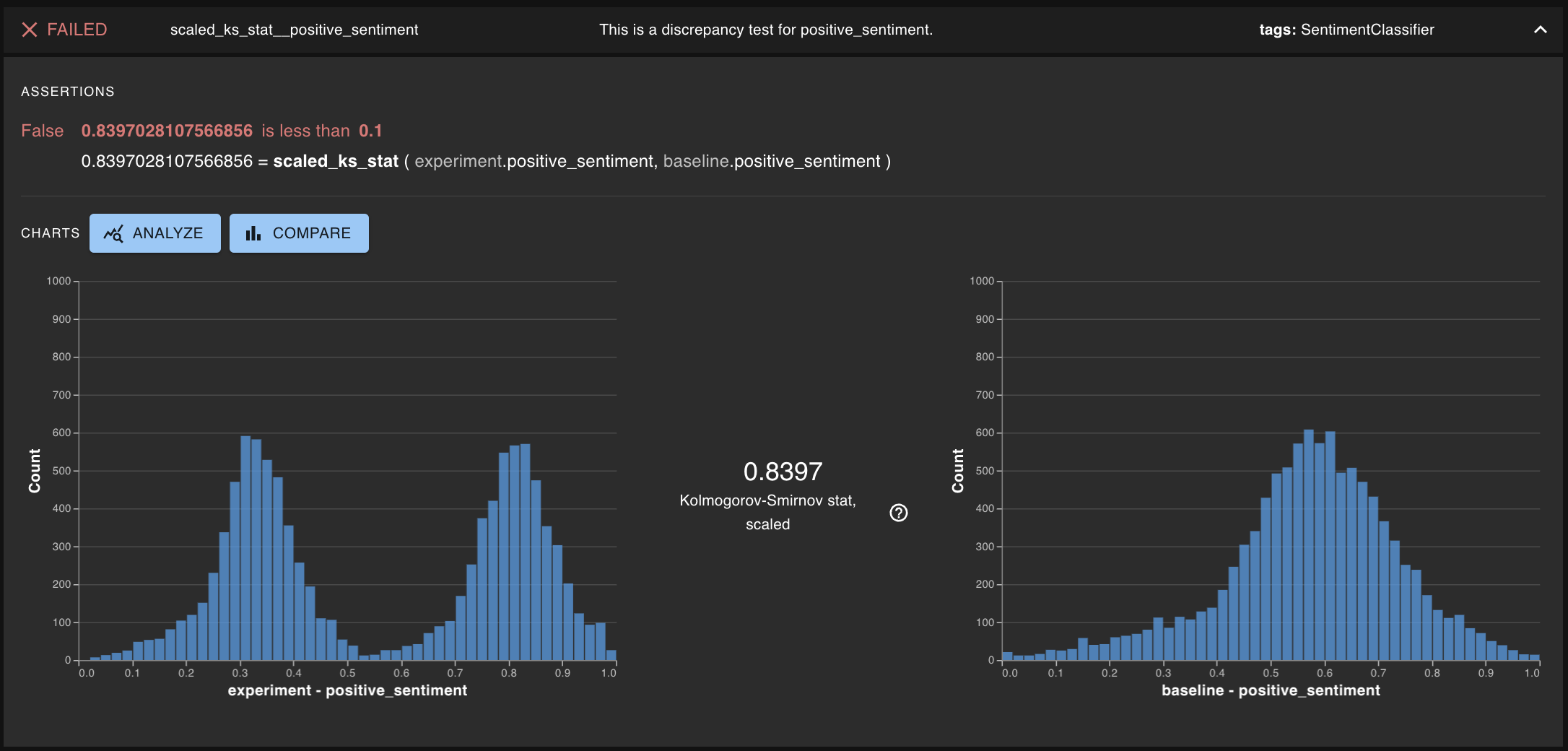

Sentiment Classifier

We write tests on the consistency of the distributions of the sentiment classifier's probabilities. These tests return a scaled version of the Kolmogorov-Smirnov statistic, and we set a threshold on how large this statistic can be before the test fails. Each test compares against the corresponding distribution from the baseline run.
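For intuition, the sketch below computes the raw two-sample Kolmogorov-Smirnov statistic, the largest vertical gap between two empirical CDFs, which ranges from 0 (identical samples) to 1 (no overlap). dbnl reports a scaled version whose scaling is not described here, so this does not reproduce dbnl's numbers; the threshold and sample values are made up.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(baseline: Sequence[float], current: Sequence[float]) -> float:
    """Raw two-sample KS statistic: the maximum gap between the two empirical CDFs."""
    a, b = sorted(baseline), sorted(current)
    n, m = len(a), len(b)
    d = 0.0
    # The ECDFs only change at observed values, so checking those points suffices.
    for x in a + b:
        cdf_a = bisect_right(a, x) / n
        cdf_b = bisect_right(b, x) / m
        d = max(d, abs(cdf_a - cdf_b))
    return d


THRESHOLD = 0.2  # hypothetical threshold for illustration

baseline_pos = [0.10, 0.20, 0.30, 0.40, 0.50]
current_pos = [0.60, 0.70, 0.80, 0.90, 0.95]
stat = ks_statistic(baseline_pos, current_pos)
print(f"KS = {stat:.2f}, passed = {stat <= THRESHOLD}")
```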

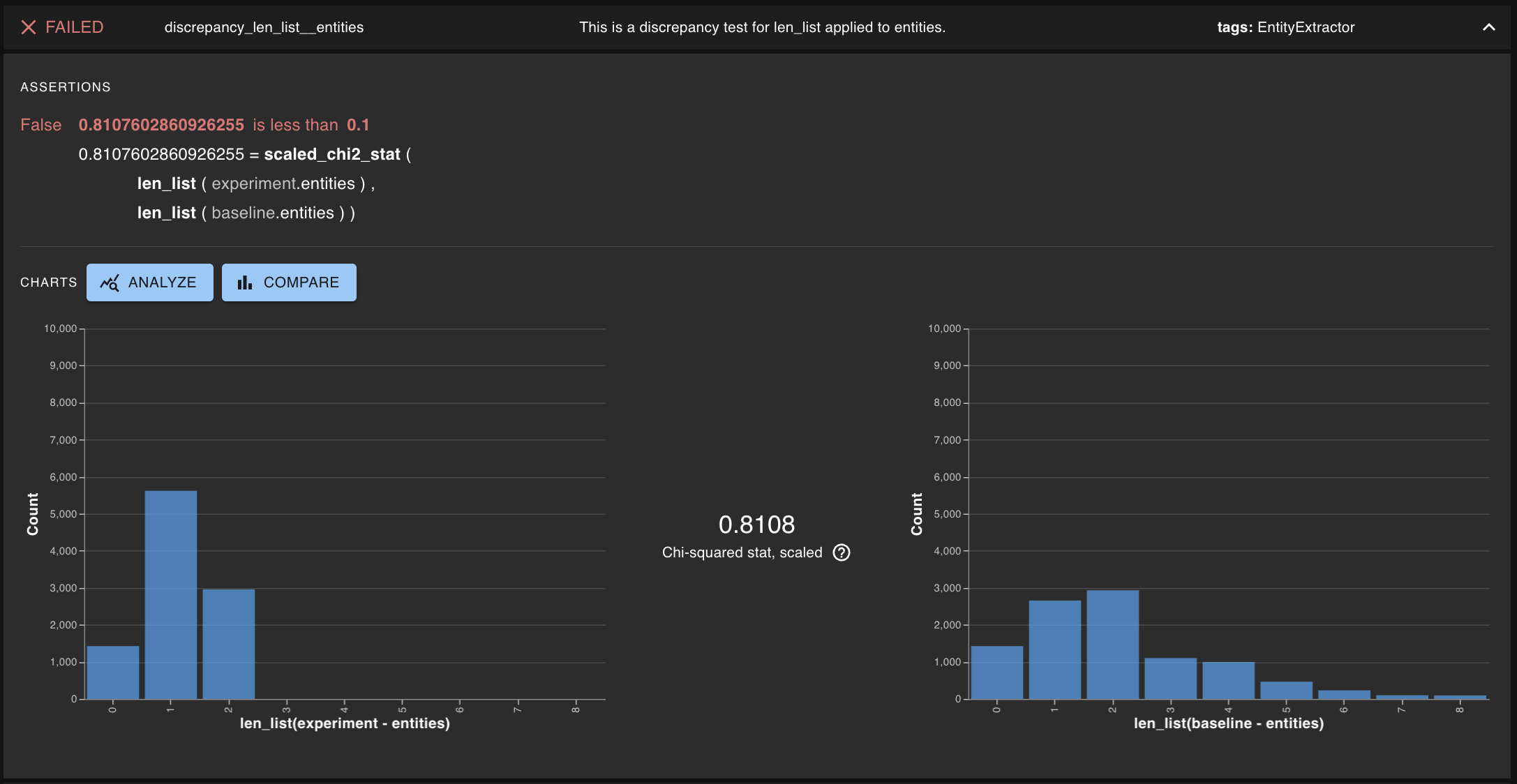

Entity Extractor

We write tests on the consistency of the distribution of the number of entities extracted. These tests return a scaled version of the chi-squared statistic. Similarly, we set a threshold on how large this statistic can be before the test fails. Each test compares against the corresponding distribution from the baseline run.
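As an illustration, the sketch below computes the Pearson chi-squared statistic for homogeneity, treating each distinct per-tweet entity count as a category and pooling both runs to get expected frequencies. As with the KS sketch, dbnl's scaling is not reproduced, and the sample counts are invented.

```python
from collections import Counter
from typing import Sequence


def chi_squared_statistic(baseline: Sequence[int], current: Sequence[int]) -> float:
    """Pearson chi-squared statistic comparing two samples of entity counts."""
    counts_a, counts_b = Counter(baseline), Counter(current)
    n_a, n_b = len(baseline), len(current)
    total = n_a + n_b
    stat = 0.0
    for category in set(counts_a) | set(counts_b):
        # Expected frequencies assume both runs share the pooled distribution.
        pooled = counts_a[category] + counts_b[category]
        expected_a = n_a * pooled / total
        expected_b = n_b * pooled / total
        stat += (counts_a[category] - expected_a) ** 2 / expected_a
        stat += (counts_b[category] - expected_b) ** 2 / expected_b
    return stat


print(chi_squared_statistic([1, 1, 2, 2, 3], [1, 1, 2, 2, 3]))
print(chi_squared_statistic([1, 1, 1, 1], [3, 3, 3, 3]))
```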

Purpose

By running these tests on every relevant component of the system, we can isolate the components responsible when the system's behavior changes. Superimposing the test results onto the DAG reveals the path of a failure and the components where it originates.
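The reasoning applied by hand in the Run 04 and Run 07 walkthroughs can be sketched as a simple rule: among the components with failing tests, the most likely sources are those with no failing component upstream. `likely_root_causes` is a hypothetical helper, not a dbnl feature; it uses the components DAG format described earlier, with edges inferred from the component descriptions.

```python
from typing import Dict, List, Set


def likely_root_causes(dag: Dict[str, List[str]], failed: Set[str]) -> List[str]:
    """Return failing components that have no failing component upstream."""
    # Invert component -> dependents into component -> direct upstream parents.
    parents: Dict[str, Set[str]] = {node: set() for node in dag}
    for node, children in dag.items():
        for child in children:
            parents.setdefault(child, set()).add(node)

    def has_failed_ancestor(node: str) -> bool:
        stack, seen = list(parents.get(node, ())), set()
        while stack:
            up = stack.pop()
            if up in seen:
                continue
            seen.add(up)
            if up in failed:
                return True
            stack.extend(parents.get(up, ()))
        return False

    return sorted(c for c in failed if not has_failed_ancestor(c))


dag = {
    "TweetSource": ["SentimentClassifier", "EntityExtractor"],
    "SentimentClassifier": ["TradeRecommender"],
    "EntityExtractor": ["TradeRecommender"],
    "TradeRecommender": [],
}
print(likely_root_causes(dag, {"SentimentClassifier", "TradeRecommender"}))
```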

A Note On Thresholds

The thresholds are set by the user, and choosing them well takes experience observing how the system behaves. Distributional has Forward-Deployed Engineers who are experienced in setting these thresholds, and automating this process is on our roadmap.

Submit Tests to DBNL

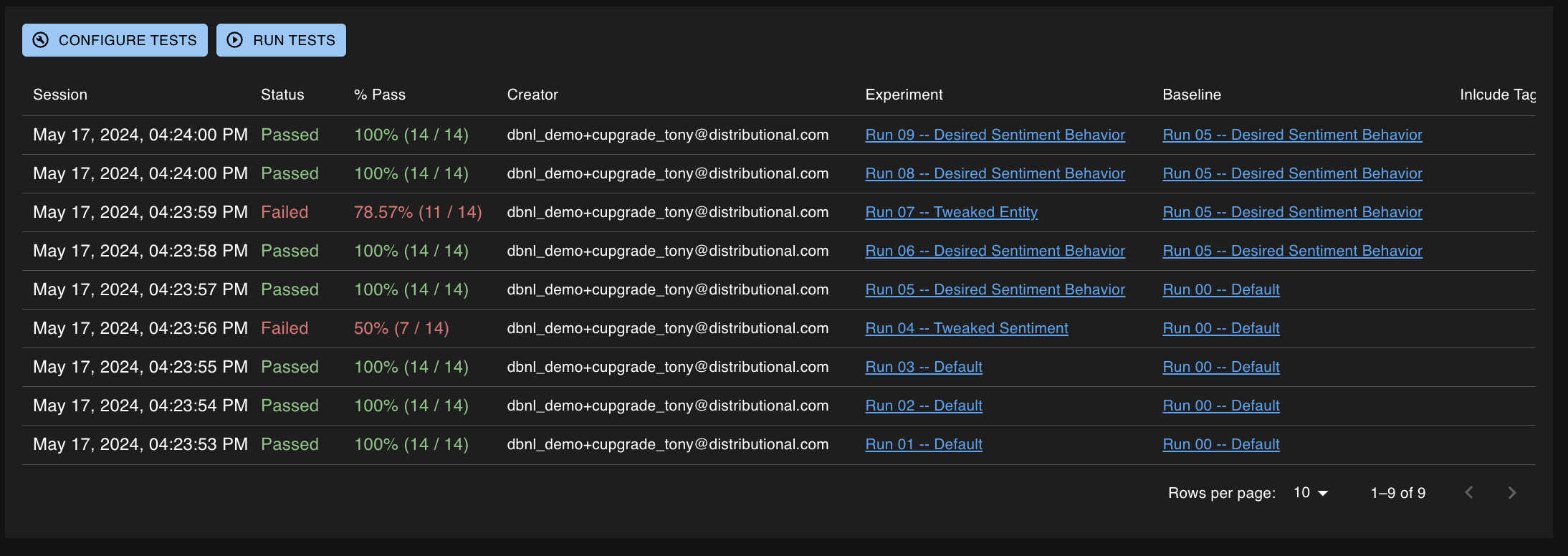

Exploration of Test Results

This tutorial provides 10 total runs reflecting the continuous testing of the asset trading strategy described above. You can use the code provided to submit these in chronological order, or your dedicated DBNL customer engineer can have these automatically loaded into your account. After that has taken place, if the baseline has been appropriately set, your test sessions will be automatically populated.

When a test session's status is Passed, no individual test assertions failed. When its status is Failed, at least one test assertion was violated; these sessions warrant further investigation, as described below.

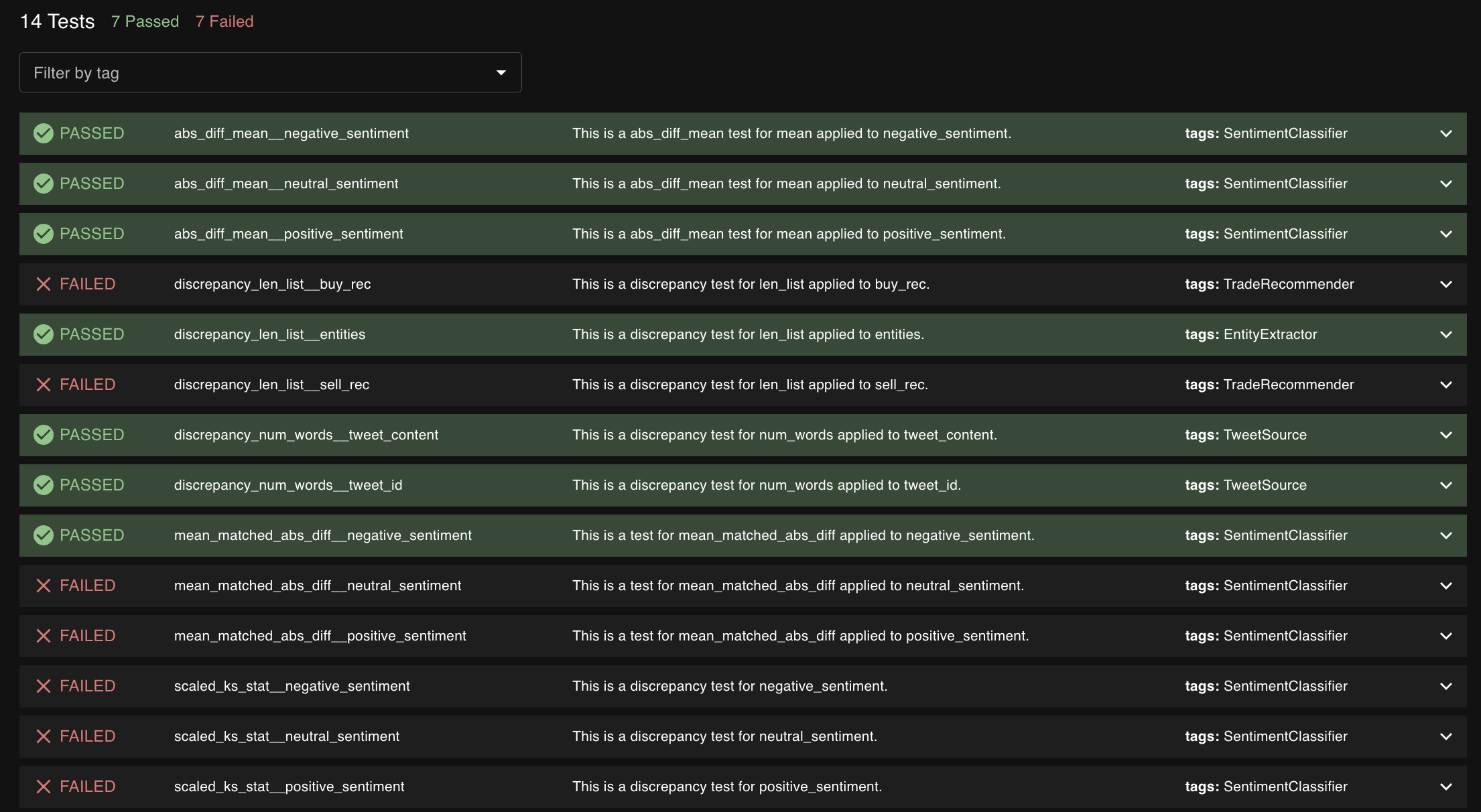

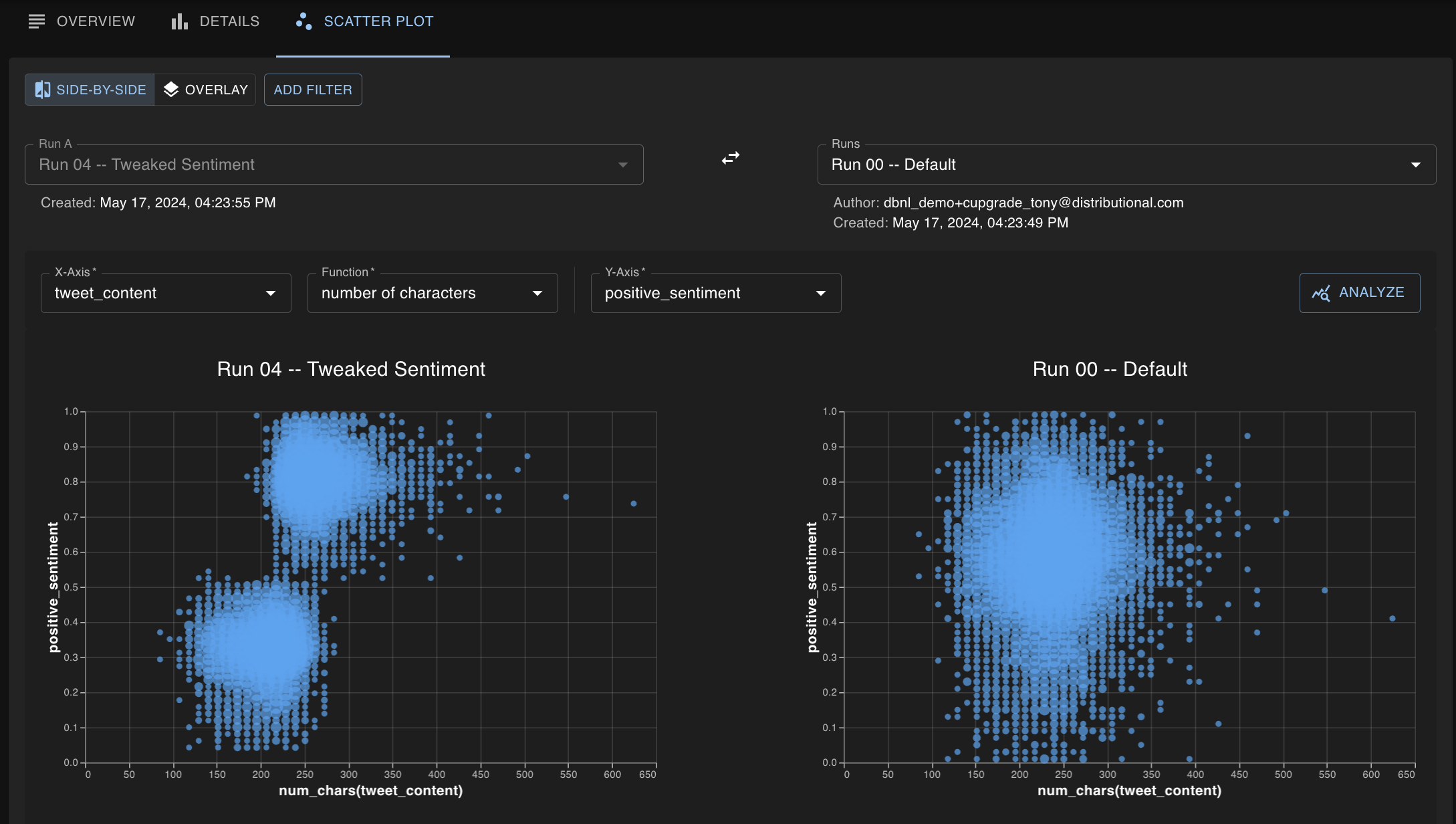

Run 04 - Loss of consistency in sentiment classifier

When we click into the first failed test session, the one associated with Run 04, we can analyze the failures and determine their root cause.

As we can see from the tags present on the failures, the TradeRecommender and SentimentClassifier components both seem to encounter failures. Referring back to the run detail page where the Components DAG structure is present, we can see that the SentimentClassifier is upstream from the TradeRecommender. This leads us to believe that the SentimentClassifier is the more likely source of issues and worth investigating first.

Within this test session, we can expand one of the failed assertions, which shows severely different sentiment behavior, well beyond the threshold for this KS test. Given that the TweetSource tests passed, we know the data has not changed. To learn how the SentimentClassifier's behavior is changing, we can visit the linked Compare page.

From the Compare page, we can explore how the columns of the run may explain such aberrant app behavior. In particular, we can use the raw text in tweet_content to analyze properties that DBNL can derive, including the length of the tweet. This analysis reveals a rather bizarre change in behavior: long tweets are scored very positively and short tweets very negatively.

Using this information, our trading team alerted the sentiment classifier team to the large change in behavior, which led them to find and fix a bug in their system. After the fix, Run 05 was created to confirm that the behavior had returned to normal, and Run 05 then became the new baseline.
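To make the idea concrete, the sketch below derives tweet length and averages positive_sentiment per length bucket, so a baseline and a current run can be compared side by side. This is a hand-rolled approximation, not how dbnl derives properties on the Compare page; `mean_sentiment_by_length`, the bucket edges, and the sample rows are all hypothetical.

```python
from statistics import mean
from typing import Dict, List, Sequence


def mean_sentiment_by_length(rows: Sequence[Dict], edges: Sequence[int] = (80, 160)) -> Dict[str, float]:
    """Average positive_sentiment per tweet-length bucket (length in characters)."""
    buckets: Dict[str, List[float]] = {}
    for row in rows:
        length = len(row["tweet_content"])
        # Label each tweet by the first edge its length falls below.
        label = next((f"<{e}" for e in edges if length < e), f">={edges[-1]}")
        buckets.setdefault(label, []).append(row["positive_sentiment"])
    return {label: mean(values) for label, values in buckets.items()}


baseline = [{"tweet_content": "short", "positive_sentiment": 0.5},
            {"tweet_content": "x" * 200, "positive_sentiment": 0.5}]
current = [{"tweet_content": "short", "positive_sentiment": 0.05},
           {"tweet_content": "x" * 200, "positive_sentiment": 0.95}]
print(mean_sentiment_by_length(baseline))
print(mean_sentiment_by_length(current))
```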

Run 07 - Loss of consistency in entity extractor

Again, Distributional's continuous testing catches failing behavior, this time emerging in Run 07. As before, we open the test session, but here the failed test assertions lead to a different conclusion.

The TradeRecommender tests are again failing, but this time the EntityExtractor test fails while the SentimentClassifier and TweetSource tests all pass. Because every component is tested, we can narrow the search: the EntityExtractor is the most likely source of the problem, since it is the only failing component upstream of the TradeRecommender.

Expanding the test assertion associated with the EntityExtractor shows that the number of entities being extracted has suddenly changed dramatically. If this change is unexpected, it represents a major shift in behavior that warrants further investigation.
