LLM Models

Distributional can call a third-party or self-hosted LLM on your behalf, with your credentials, to generate results or create metrics based on text data inputs.

DBNL supports the following LLM providers:

OpenAI

Azure OpenAI

AWS Bedrock

AWS SageMaker

Google Gemini

Google Vertex AI

Providers

The following arguments are supported for the OpenAI provider.

Any OpenAI-compatible endpoint (such as a self-hosted LLM in your environment) can be accessed this way.

api_key

API key to access OpenAI

base_url

[Optional] Base URL used to access LLMs.

If not provided, Distributional calls OpenAI directly. If provided, it should be a valid URL that points to an OpenAI-compatible provider, not to a specific model. For example:

The following arguments are supported for the Azure OpenAI provider.

api_key

API key to access an Azure-hosted OpenAI-compatible model

azure_endpoint

Location of where models are hosted. For example:

api_version

Version of the Azure OpenAI REST API to use. As of 2025-07-01, Distributional uses only the "Data plane - inference" REST API. See the official Azure OpenAI documentation for further details.

The following arguments are supported for the AWS Bedrock provider.

aws_access_key_id

AWS Access Key ID. Must start with AKIA.

Note: session keys are not supported as they expire too quickly and Distributional expects long-lived keys, as the LLM will be called on all future Runs.

aws_secret_access_key

AWS Secret Key

aws_region

[Optional] AWS Region where the AWS Bedrock Model is available

aws_bedrock_runtime_endpoint

[Optional] Endpoint to interact with AWS Bedrock Runtime API.

Example: bedrock-runtime.us-east-1.amazonaws.com.
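The AKIA requirement can be checked locally before the credentials are submitted. The following is a minimal sketch, not part of Distributional's API: the helper name `is_long_lived_access_key` is hypothetical, and it relies on the AWS convention that long-term access key IDs begin with AKIA while temporary session key IDs begin with ASIA. The sample key is the placeholder used in AWS's own documentation.

```python
def is_long_lived_access_key(access_key_id: str) -> bool:
    """Return True if the key ID looks like a long-term AWS access key.

    Hypothetical helper for illustration only. AWS prefixes long-term
    access key IDs with "AKIA" and temporary (session) key IDs with "ASIA".
    """
    return access_key_id.strip().startswith("AKIA")


# Placeholder key IDs, not real credentials.
print(is_long_lived_access_key("AKIAIOSFODNN7EXAMPLE"))  # long-term key
print(is_long_lived_access_key("ASIAIOSFODNN7EXAMPLE"))  # session key
```

A prefix check only catches the wrong kind of key; it does not confirm that the key is valid or has permission to invoke the model.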

The following arguments are supported for the AWS SageMaker provider.

aws_access_key_id

AWS Access Key ID. Must start with AKIA. Session keys are not supported as they expire too quickly and Distributional expects long-lived keys, as the LLM will be called on all future Runs.

aws_secret_access_key

AWS Secret Key

aws_region

AWS Region where the AWS SageMaker Endpoint is available

endpoint_name

[Optional] Name of AWS Endpoint that hosts the Model. Can be left blank if the endpoint can be derived from the Model alone.

The following arguments are supported for the Google Gemini provider.

api_key

API key to access Google Gemini API

base_url

[Optional] Gemini API Base URL. If provided, should be a valid URL that points to a provider, but not a specific model. For example:

The following arguments are supported for the Google Vertex AI provider.

google_application_credentials_json

JSON string containing credentials for a service account.

region

[Optional] GCP Region for Vertex AI.

gcp_project_id

[Optional] GCP Project ID for Vertex AI. If not provided, Distributional will try to infer from the provided credentials.

vertex_ai_endpoint

[Optional] Vertex AI Endpoint override, if desired.
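To see why gcp_project_id can often be omitted, note that a standard GCP service-account key file includes a `project_id` field. The sketch below shows one way that field could be read from the JSON string. It is an illustration under that assumption, not Distributional's actual inference logic, and the function name `infer_gcp_project_id` is hypothetical.

```python
import json
from typing import Optional


def infer_gcp_project_id(credentials_json: str) -> Optional[str]:
    """Read the project ID from a service-account key string, if present.

    Hypothetical helper for illustration only. Returns None when the
    string is not a JSON object or has no usable "project_id" field.
    """
    try:
        credentials = json.loads(credentials_json)
    except json.JSONDecodeError:
        return None
    if not isinstance(credentials, dict):
        return None
    project_id = credentials.get("project_id")
    if isinstance(project_id, str) and project_id:
        return project_id
    return None


# Placeholder values, not real credentials.
example = json.dumps({"type": "service_account", "project_id": "my-project"})
print(infer_gcp_project_id(example))  # prints "my-project"
```

If the helper returns None for your credentials, setting gcp_project_id explicitly is the safer choice.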

Models

After setting your LLM Provider details, you must set the model you want Distributional to access on your behalf. Each provider has its own set of available models. Distributional provides a Validate Model button so you can confirm that your model has been set up correctly.

See OpenAI's Model documentation to learn more about their available models.

Model names must be provided exactly as the OpenAI API requires.

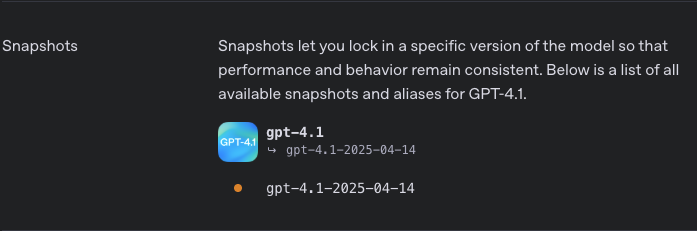

For example, the GPT-4.1 Model lists the appropriately-formatted names. Validation would fail for "GPT-4.1" but succeed for gpt-4.1 or gpt-4.1-2025-04-14.

See Azure AI Foundry's documentation to learn more about their available models.

Model names must be provided exactly as the Azure OpenAI Models REST API requires.

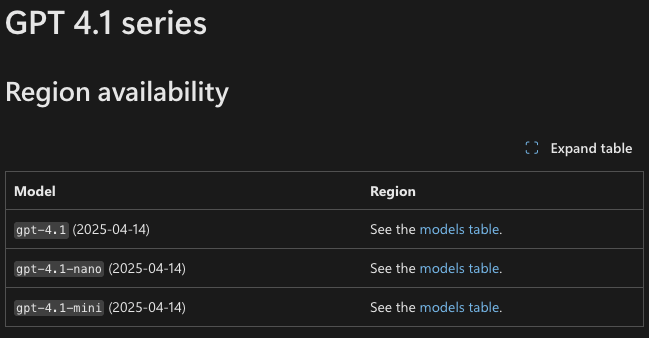

For example, the GPT-4.1 series lists the appropriately-formatted model names. Validation would fail for "GPT-4.1" but succeed for gpt-4.1 or gpt-4.1-nano.

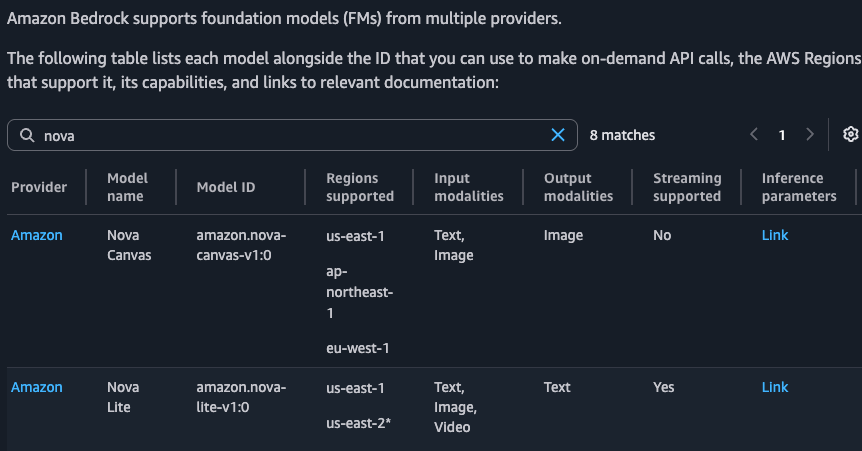

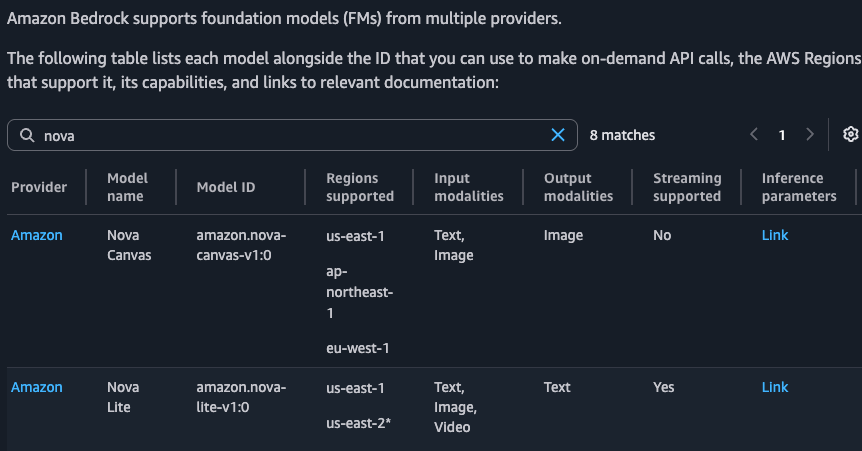

See AWS Bedrock's Model documentation to learn more about their available models.

Models must be provided exactly as the AWS Bedrock API requires.

For example, the table at the above documentation link lists the appropriately-formatted Model IDs. Validation would fail for "Nova Lite" but succeed for amazon.nova-lite-v1:0.

See AWS SageMaker's Model documentation to learn more about their available models.

Models must be provided exactly as the AWS SageMaker AI Studio API requires.

For example, picking a model from AWS SageMaker JumpStart, the model name "Falcon 7B Instruct BF16" will fail validation, but it will succeed with huggingface-llm-falcon-7b-instruct-bf16.

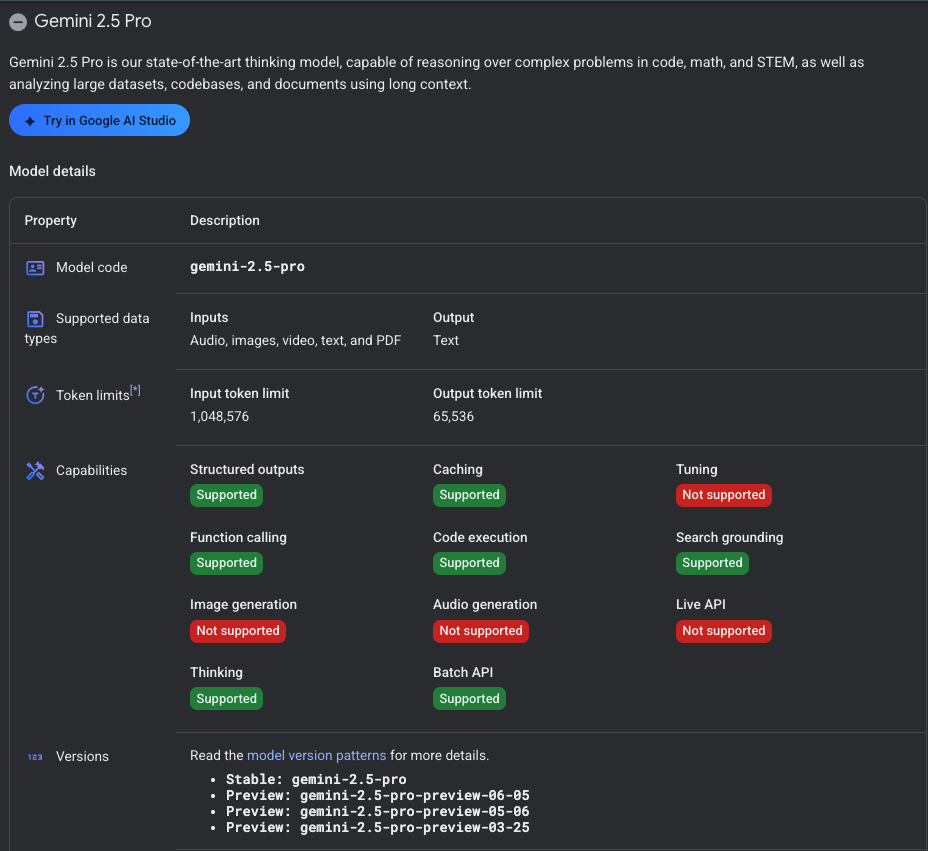

See Google Gemini's documentation to learn more about their available models.

Models must be provided exactly as the Google Gemini API requires.

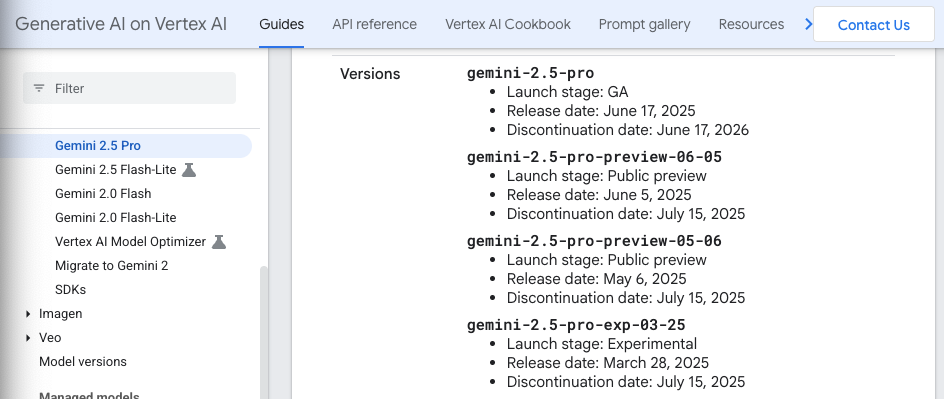

For example, the Google Gemini 2.5 Pro page lists the appropriately formatted model codes. Validation would fail for "Gemini 2.5 Pro", but succeed with gemini-2.5-pro or gemini-2.5-pro-preview-06-05.

See Google Vertex AI's documentation to learn more about their available models.

Models must be provided exactly as the Google Vertex AI API requires.

For example, the Google Gemini 2.5 Pro page lists the appropriately formatted model IDs. Validation would fail for "Gemini 2.5 Pro", but succeed with gemini-2.5-pro or gemini-2.5-pro-preview-06-05.
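Across the providers above, the failing examples are display names (capital letters, spaces) and the passing examples are lowercase identifiers. A rough local check for that pattern might look like the sketch below. The helper `looks_like_model_id` and its pattern are assumptions drawn only from the examples on this page; some providers may accept identifiers that this heuristic rejects, so the Validate Model button remains the authoritative test.

```python
import re

# Heuristic only: the passing examples on this page are lowercase and use
# letters, digits, dots, hyphens, underscores, and colons, with no spaces.
_MODEL_ID_PATTERN = re.compile(r"[a-z0-9][a-z0-9._:-]*")


def looks_like_model_id(name: str) -> bool:
    """Return True if the name resembles an API model ID, not a display name."""
    return _MODEL_ID_PATTERN.fullmatch(name) is not None


for candidate in ["GPT-4.1", "gpt-4.1", "Nova Lite", "amazon.nova-lite-v1:0"]:
    print(candidate, looks_like_model_id(candidate))
```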
