Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Getting Access

Get started with dbnl

This guide walks you through using the Distributional SDK to create your first project, submit two runs and create a test session to compare the behavior of your AI application over time.

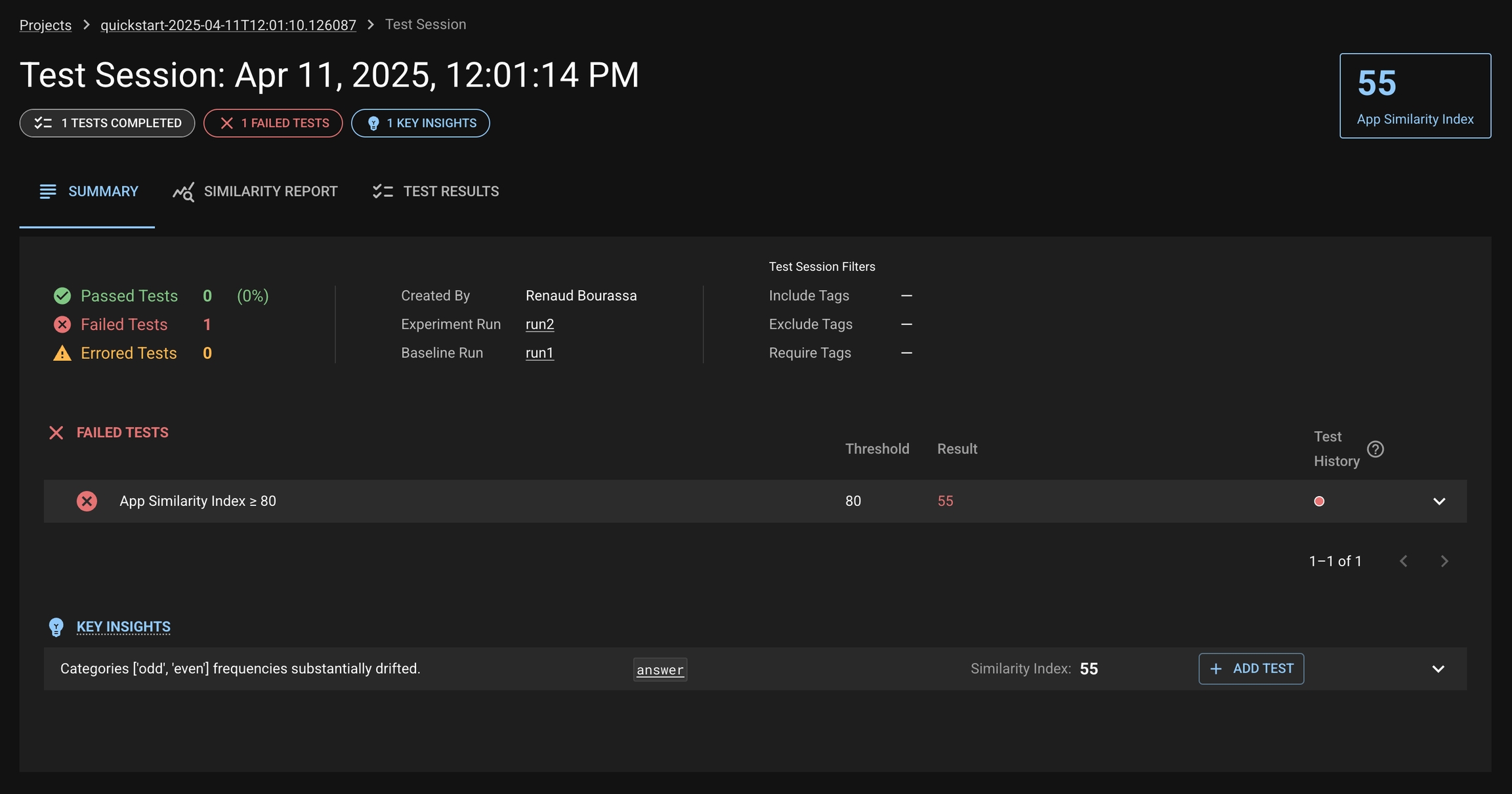

Congratulations! You ran your first Test Session. You can see the results of the Test Session by navigating to your project in the dbnl app and selecting your test session from the test session table.

By default, a similarity index test is added that tests whether your application has changed between the baseline and experiment run.

Distributional's adaptive testing platform

Distributional is an adaptive testing platform purpose-built for AI applications. It enables you to test AI application data at scale to define, understand, and improve your definition of AI behavior to ensure consistency and stability over time.

Define Desired Behavior Automatically create a behavioral fingerprint from the app’s runtime logs and any existing development metrics, and generate associated tests to detect changes in that behavior over time.

Adaptive testing requires a very different approach than traditional software testing. The goal of adaptive testing is to enable teams to define a steady baseline state for any AI application, and through testing, confirm that it maintains steady state, and where it deviates, figure out what needs to evolve or be fixed to reach steady state once again. This process needs to be discoverable, logged, organized, consistent, integrated and scalable.

Testing AI applications needs to be fundamentally reimagined to include statistical tests on distributions of quantities to detect meaningful shifts that warrant deeper investigation.

Distributions > Summary Statistics: Instead of only looking at summary statistics (e.g. mean, median, P90), we need to analyze distributions of metrics, over time. This accounts for the inherent variability in AI systems while maintaining statistical rigor.

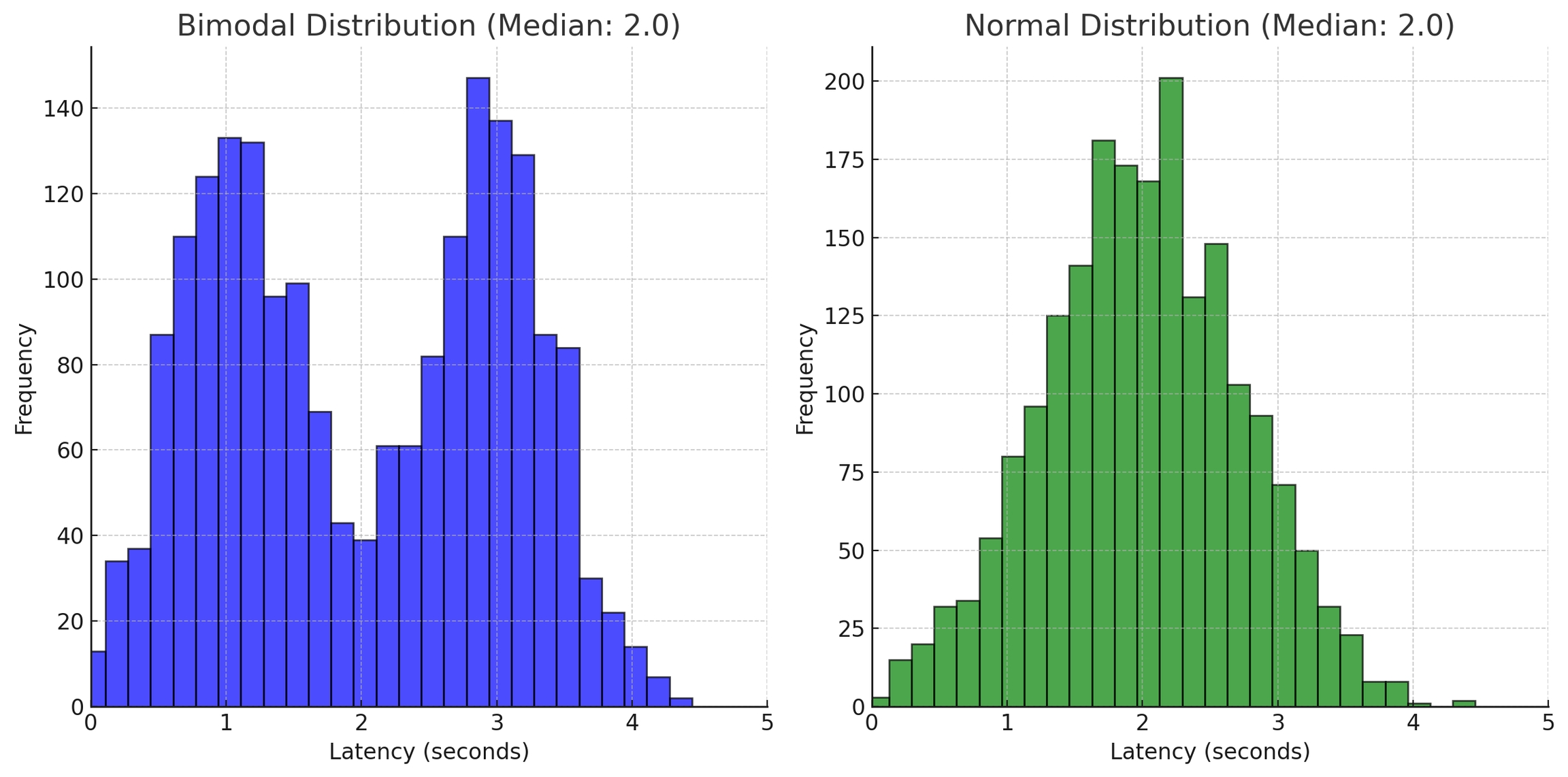

Why is this useful? Imagine you have an application that contains an LLM and you want to make sure that the latency of the LLM remains low and consistent across different types of queries. With a traditional monitoring tool, you might be able to easily monitor P90 and P50 values for latency. P50 represents the latency value below which 50% of the requests fall and will give you a sense of the typical (median) response time that users can expect from the system. However, the P50 value for a normal distribution and bimodal distribution can be the same value, even though the shape of the distribution is meaningfully different. This can hide significant (usage-based or system-based) changes in the application that affect the distribution of the latency scores. If you don’t examine the distribution, these changes go unseen.

Consider a scenario where the distribution of LLM latency started with a normal distribution, but due to changes in a third-party data API that your app uses to inform the response of the LLM, the latency distribution becomes bimodal, though with the same median (and P90 values) as before. What could cause this? Here’s a practical example of how something like this could happen. The engineering team of the data API organization made an optimization to their API which allows them to return faster responses for a specific subset of high value queries, and routes the remainder of the API calls to a different server which has a slower response rate.

The effect that this has on your application is that now half of your users are experiencing an improvement in latency, and now a large number of users are experiencing “too much” latency and there’s an inconsistent performance experience among users. Solutions to this particular example include modifying the prompt, switching the data provider to a different source, format the information that you send to the API differently or a number of other engineering solutions. If you are not concerned about the shift and can accept the new steady state of the application, you can also choose to not make changes and declare a new acceptable baseline for the latency P50 value.

Installing the Python SDK and Accessing Distributional UI

To install the latest stable release of the dbnl package:

To install a specific version (e.g., version 0.22.0):

eval ExtraThe dbnl.eval extra includes additional features and requires an external spaCy model.

To install the required en_core_web_sm pretrained English-language NLP model model for spaCy:

dbnl with the eval ExtraTo install dbnl with evaluation extras:

If you need a specific version with evaluation extras (e.g., version 0.22.0):

We recommend setting your API token as an environment variable, see below.

DBNL has three reserved environment variables that it reads in before execution.

To check your SDK version:

To check your API server version:

Logging into the web app

Clicking the hamburger menu (☰) on the top-left corner

Viewing the version number listed in the footer

Want access to the Distributional platform? . We’ll guide you through the process and ensure you have everything you need to get started.

While we offer SaaS and a for testing purposes, neither are suitable for a production environment. We recommend our option if you plan on deploying the dbnl platform directly in your cloud or on-premise environment.

Create a for your own AI application.

Upload your own data as to your Project.

Define to augment your Runs with novel quantities.

Add more to ensure your application behaves as expected.

Learn more about .

Use to be alerted when tests fail.

For access to the Distributional platform, .

Understand Changes in Behavior Get alerted when there are , understand what is changing, and pinpoint at any level of depth what is causing the change to quickly take appropriate action.

Improve Based on Changes Easily add, remove, or recalibrate over time so you always have a dynamic representation of desired state that you can use to test new models, roll out new upgrades, or accelerate new app development.

Distributional’s platform is designed to easily with your existing infrastructure, including data stores, orchestrators, alerting tools, and AI platforms. If you are already using a model evaluation framework as part of app development, those can be used as an input to further define behavior in Distributional.

Ready to start using Distributional? Head straight to our to get set up on the platform and start testing your AI application.

The dbnl SDK supports . You can install the latest release of the SDK with the following command on Linux or macOS, install a specific release, and install :

You should have already received an invite email from the Distributional team to create your account. If that is not the case, please reach out to your Distributional contact. You can access and/or generate your token at (which will prompt you to login if you are not already).

DBNL has three available deployment types, SaaS, , and .

DBNL_API_TOKEN

The API token used to authenticate your dbnl account.

DBNL_API_URL

The base url of the Distributional API. For SaaS users, set this variable to api.dbnl.com. For other users, please contact your sys admin.

DBNL_APP_URL

An optional base url of the Distributional app. If this variable is not set, the app url is inferred from the DBNL_API_URL variable. For on-prem users, please contact your sys admin if you cannot reach the Distributional UI.

Metrics are measurable properties that help quantify specific characteristics of your data. Metrics can be user-defined, by providing a numeric column computed from your source data alongside your application data.

Alternatively, the Distributional SDK offers a comprehensive set of metrics for evaluating various aspects of text and LLM outputs. Using Distributional's methods for computing metrics will enable better data-exploration and application stability monitoring capabilities.

The SDK provides convenient functions for computing metrics from your data and reporting the results to Distributional:

The SDK includes helper functions for creating common groups of related metrics based on consistent inputs.

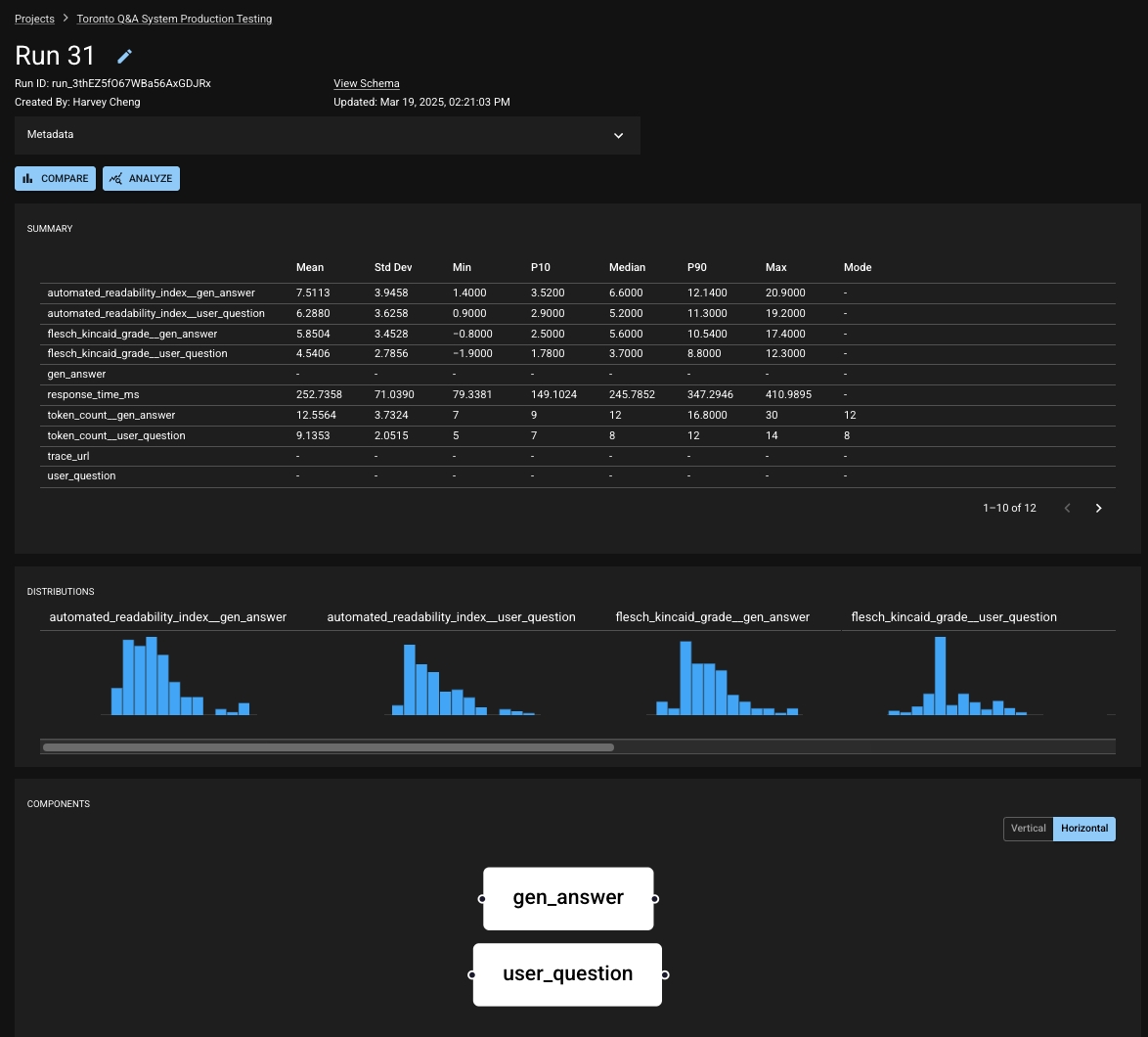

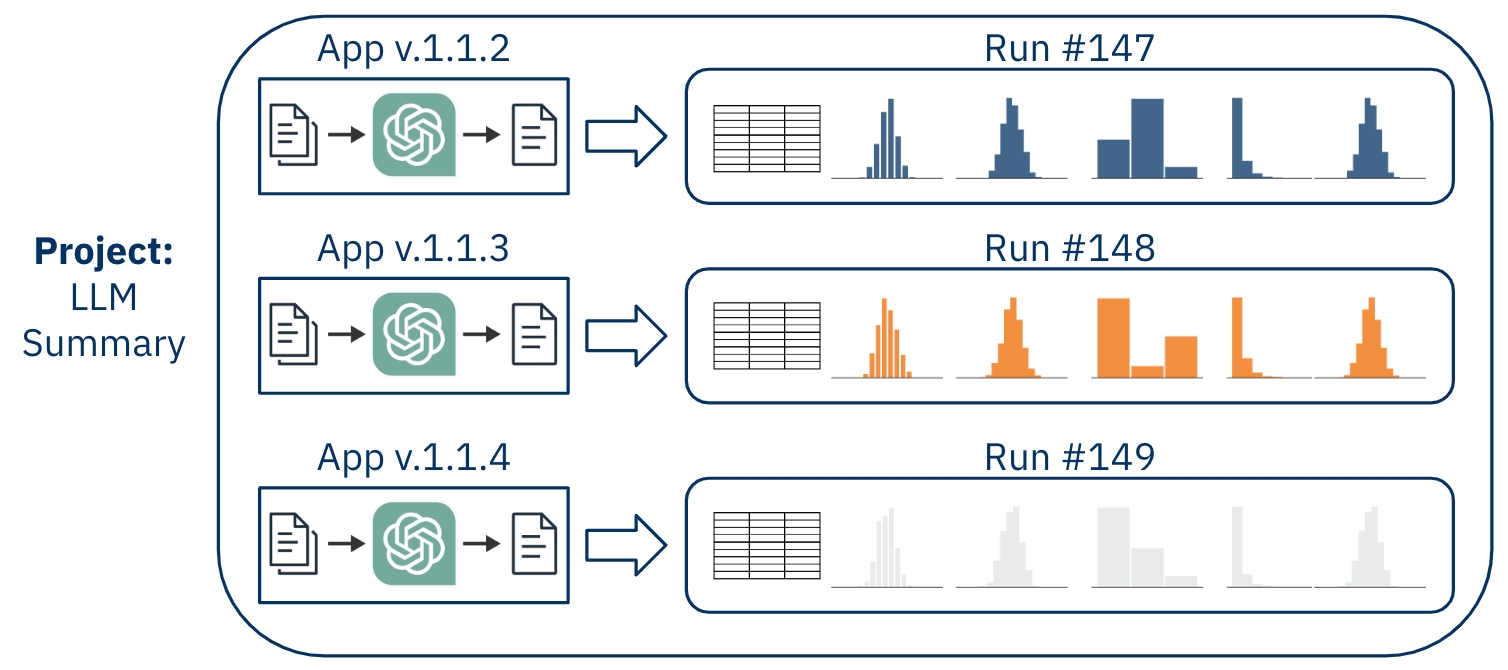

The Run is the core object for recording an application's behavior; when you upload a dataset from usage of your app, it takes the shape of a Run. As such, you can think of a Run as the results from a batch of uses or from a standard example set of uses of your application. When exploring or testing your app's behavior, you will look at the Run in dbnl either in isolation or in comparison to another Run.

A Run contains the following:

a table of results where each row holds the data related to a single app usage (e.g. a single input and output along related metadata),

a set of Run-level values, also known as scalars,

structural information about the components of the app and how they relate, and

user-defined metadata for remembering the context of a run.

Your Project will contain many Runs. As you report Runs into your Project, dbnl will build a picture of how your application is behaving, and you will utilize tests to verify that its behavior is appropriate and consistent. Some common usage patterns would be reporting a Run daily for regular checkpoints or reporting a Run each time you deploy a change to your application.

The structure of a Run is defined by its schema. This informs dbnl about what information will be stored in each result (the columns), what Run-level data will be reported (the scalars), and how the application is organized (the components).

The contains more details on Metrics including some example usage.

See the for a more complete list and description of available metrics.

See the for a more complete list and description of available functions.

Generally, you will use our to report Runs. The data associated with each Run is passed to dbnl as pandas dataframes.

A component is a mechanism for grouping columns based on their role within the app. You can also define an index in your schema to tell dbnl a unique identifier for the rows in your Run results. For more information, see the section on the .

Throughout our application and documentation, you'll often encounter the terms "baseline" and "experiment". These concepts are specifically related to running tests in dbnl. The Baseline Run defines the comparison point when running a test; the Experiment Run is the Run which is being tested against that comparison point. For more information, see the sections on and .

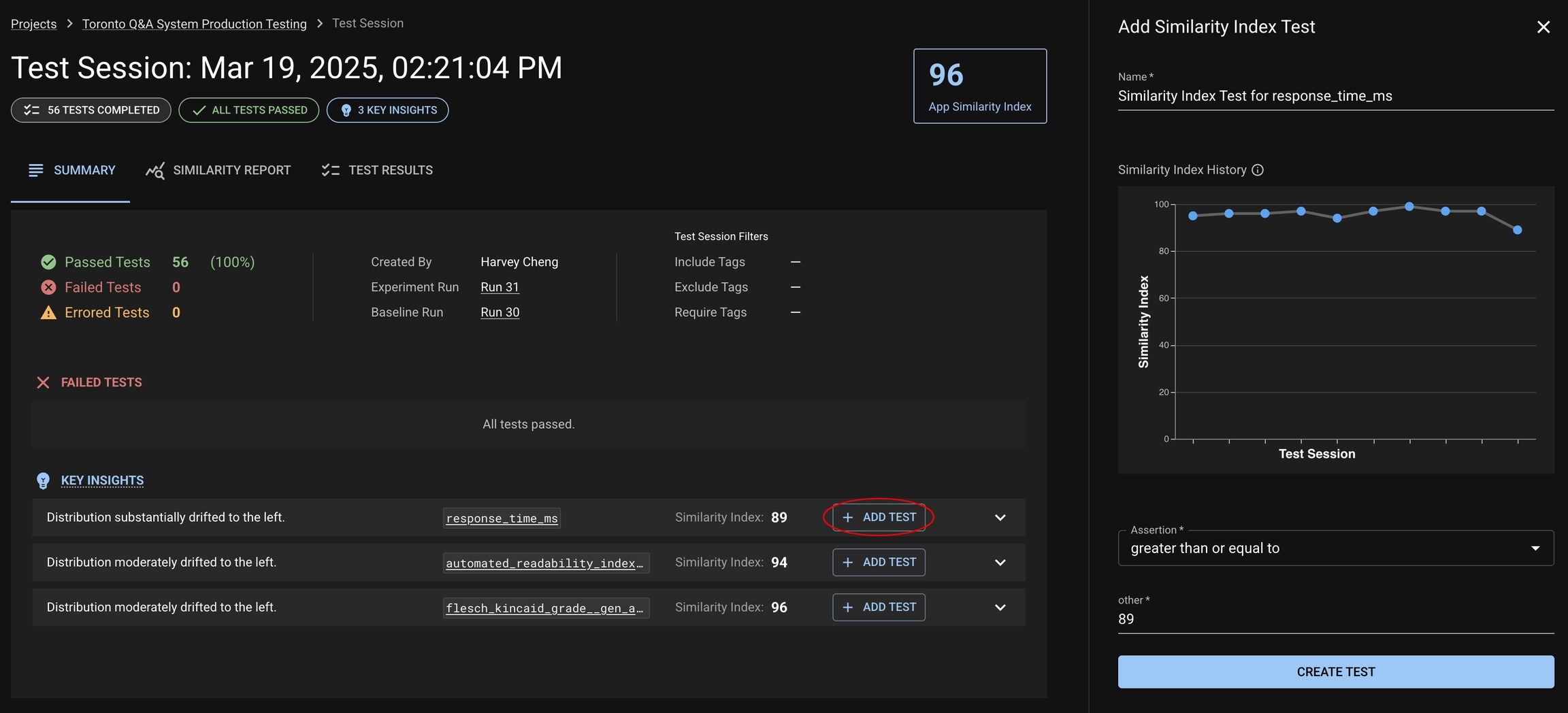

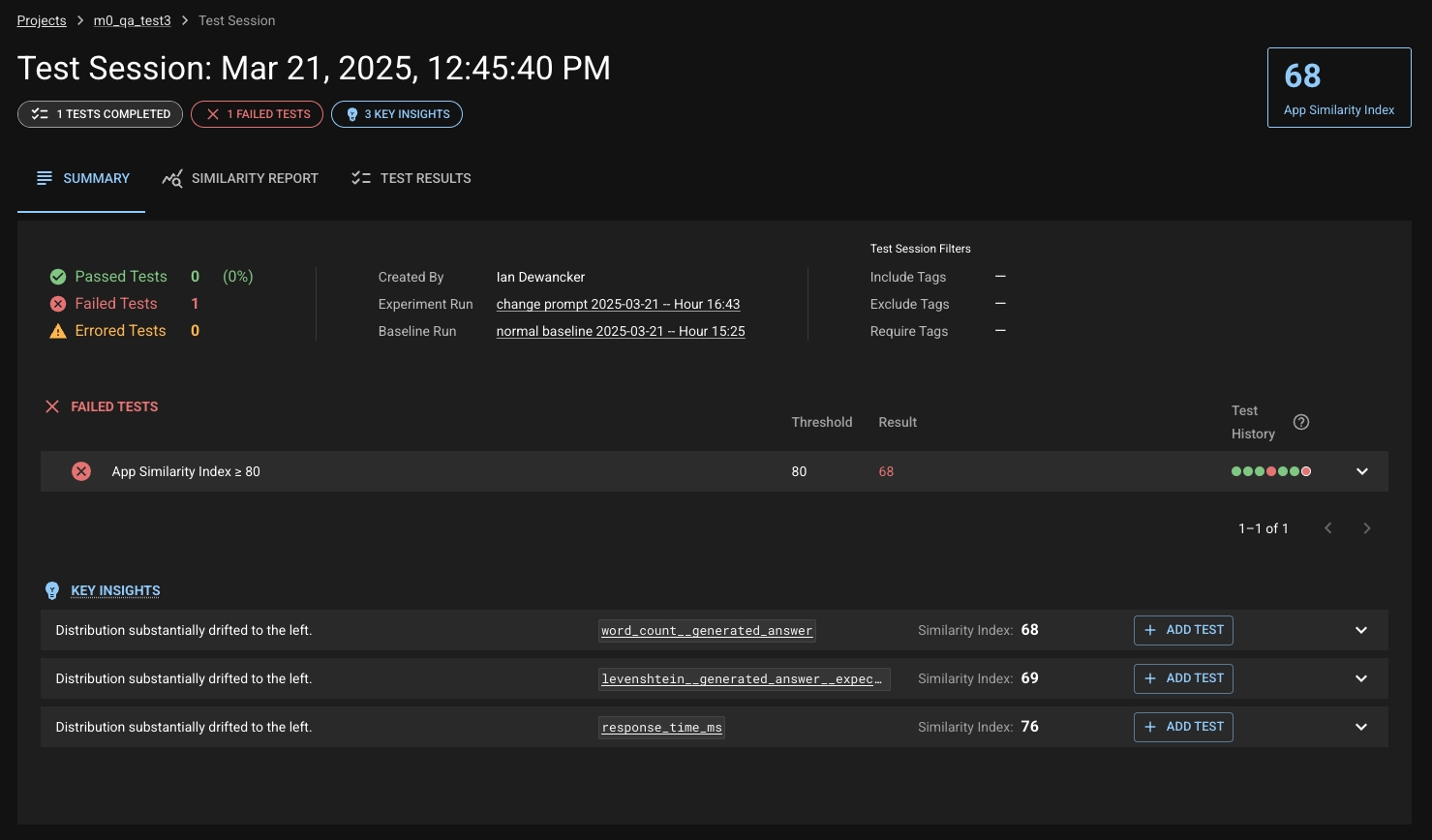

Similarity Index is a single number between 0 and 100 that quantifies how much your application’s behavior has changed between two runs – a Baseline and an Experiment run. It is Distributional’s core signal for measuring application drift, automatically calculated and available in every Test Session.

A lower score indicates a greater behavioral change in your AI application. Each Similarity Index has accompanying Key Insights with a description to help users understand and act on the behavioral drift that Distributional has detected.

Test Session Summary Page — App-level Similarity Index, results of failed tests, and Key Insights

Similarity Report Tab — Breakdown of Similarity Indexes by column and metric

Column Details View — Histograms and statistical comparison for specific metrics

Tests View — History of Similarity Index-based test pass/fail over time

When model behavior changes, you need:

A clear signal that drift occurred

An explanation of what changed

A workflow to debug, test, and act

Similarity Index + Key Insights provides all three.

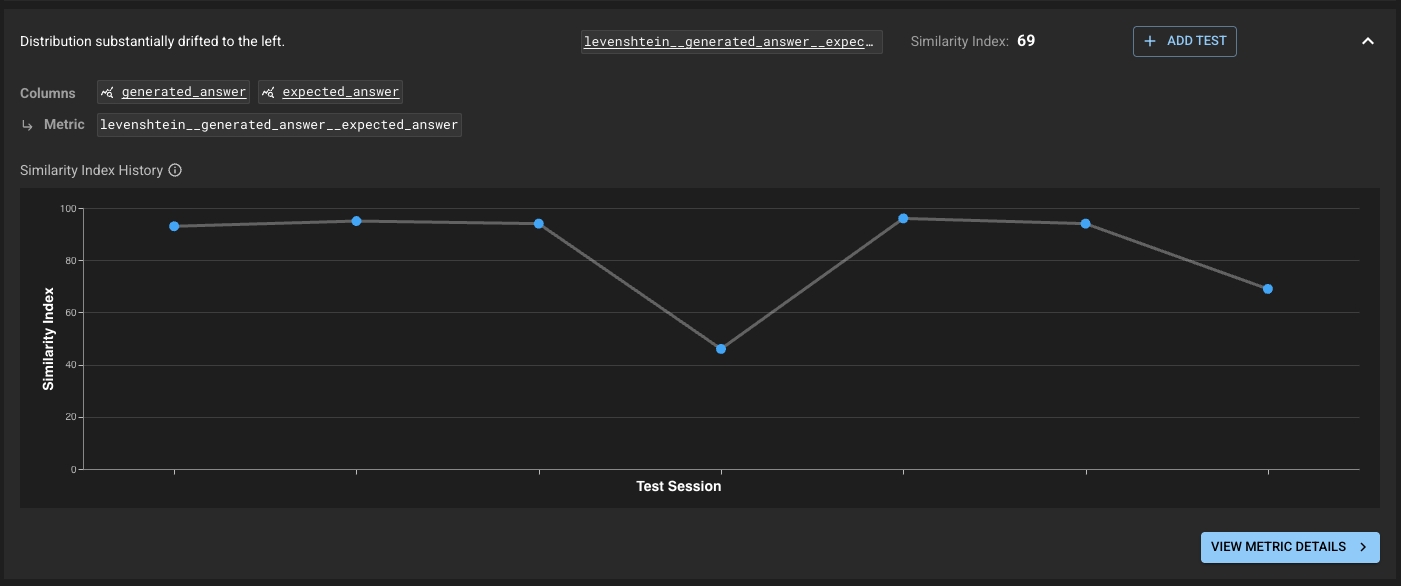

An app’s Similarity Index drops from 93 → 46

Key Insight: “Answer similarity has decreased sharply”

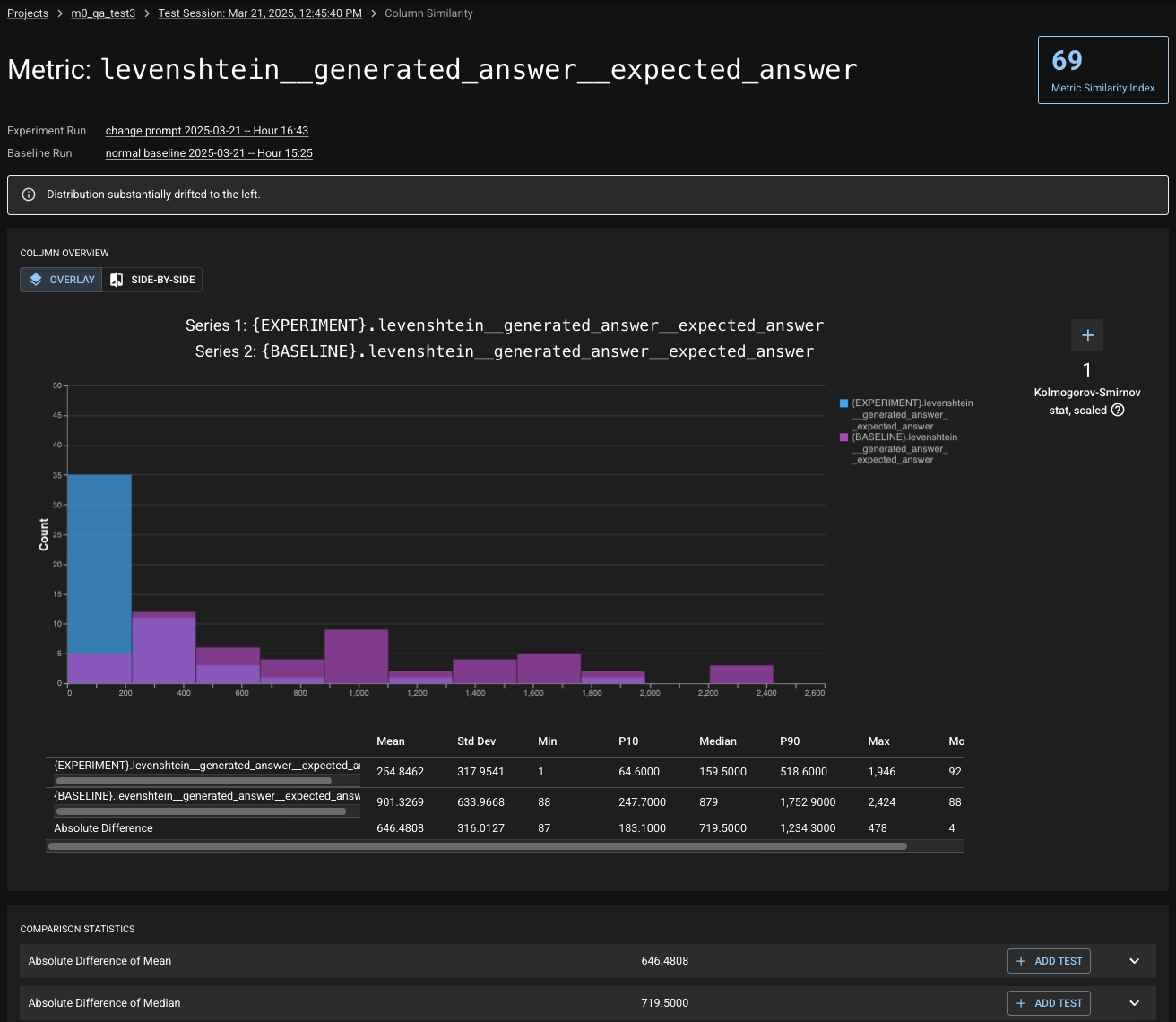

Metric: levenshtein__generated_answer__expected_answer

Result: Investigate histograms, set test thresholds, adjust model

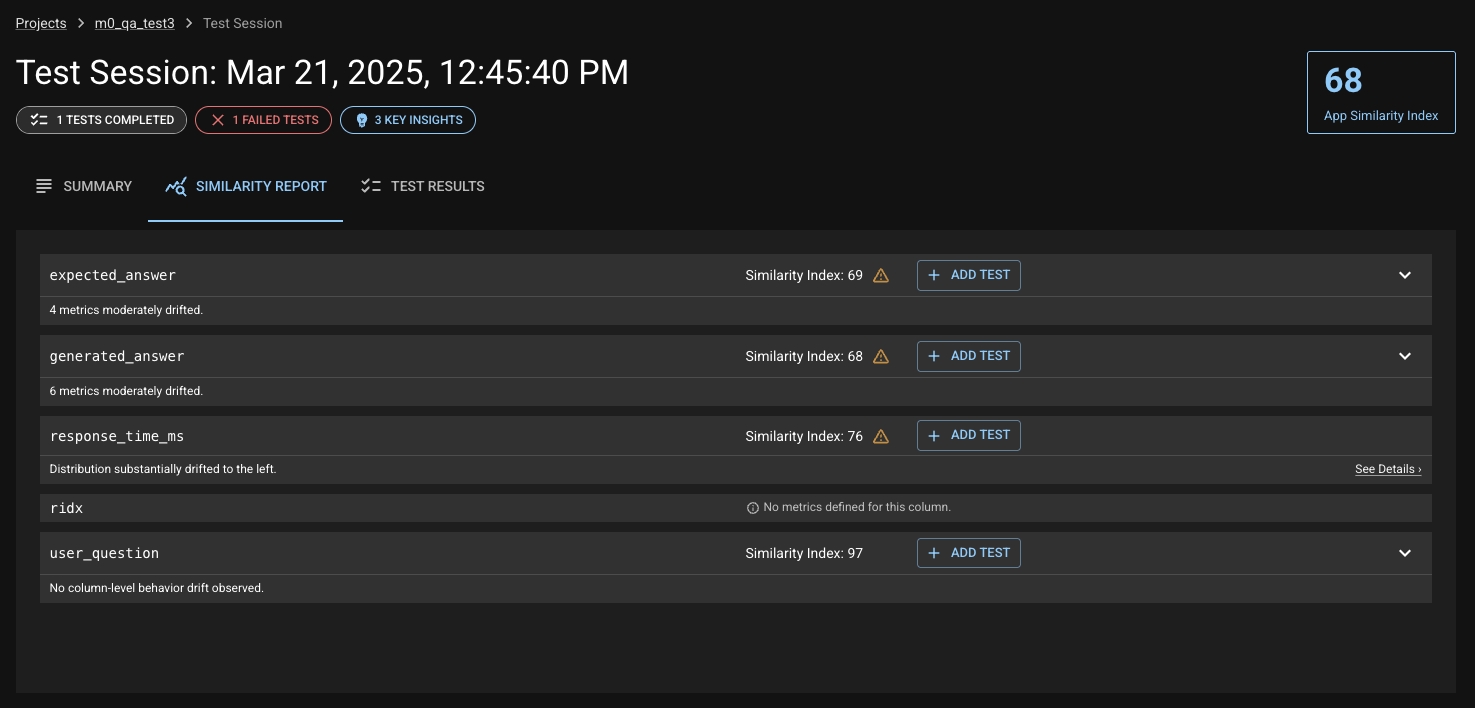

Similarity Index operates at three levels:

Application Level — Aggregates all lower-level scores

Column Level — Individual column-level drift

Metric Level — Fine-grained metric change (e.g., readability, latency, BLEU score)

Each level rolls up into the one above it. You can sort by Similarity Index to find the most impacted parts of your app.

By default, a new DBNL project comes with an Application-level Similarity Index test:

Threshold: ≥ 80

Failure: Indicates meaningful application behavior change

In the UI:

Passed tests are shown in green

Failed tests are shown in red with diagnostic details

All past test runs can be reviewed in the test history.

Key Insights are human-readable interpretations of Similarity Index changes. They answer:

“What changed, and does it matter?”

Each Key Insight includes:

A plain-language summary: “Distribution substantially drifted to the right”

The associated column/metric

The Similarity Index for that metric

Option to add a test on the spot

Distribution substantially drifted to the right.

→ Metric: levenshtein__generated_answer__expected_answer

→ Similarity Index: 46

→ Add Test

Insights are prioritized and ordered by impact, helping you triage quickly.

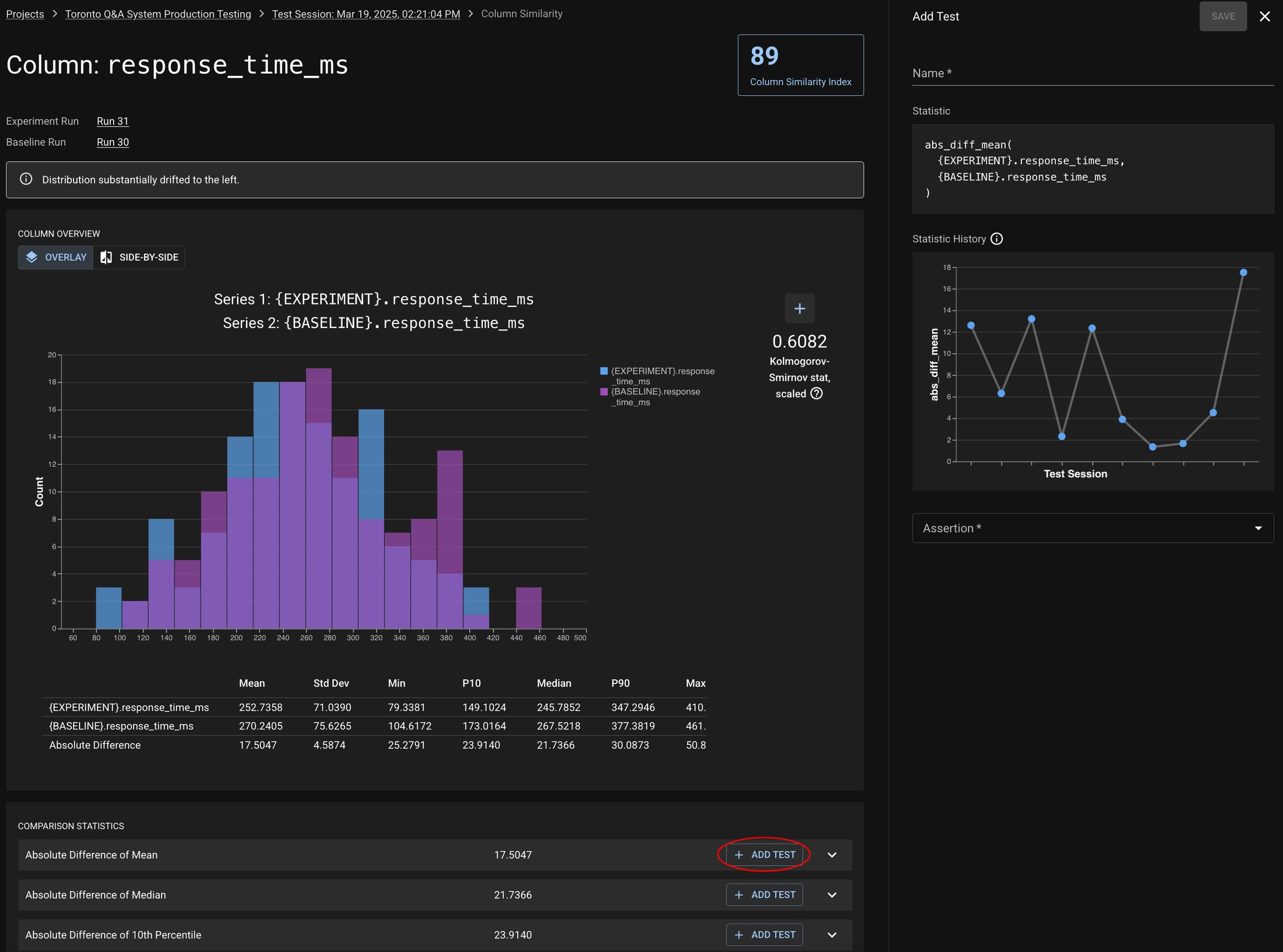

Clicking into a Key Insight opens a detailed view:

Histogram overlays for experiment vs. baseline

Summary statistics (mean, median, percentile, std dev)

Absolute difference of statistics between runs

Links to add similarity or statistical tests on specific metrics

This helps pinpoint whether drift was due to longer answers, slower responses, or changes in generation fidelity.

Run a test session

Similarity Index < 80 → test fails

Review top-level Key Insights

Click into a metric (e.g., levenshtein__generated_answer__expected_answer)

View distribution shift and statistical breakdown

Add targeted test thresholds to monitor ongoing behavior

Adjust model, prompt, or infrastructure as needed

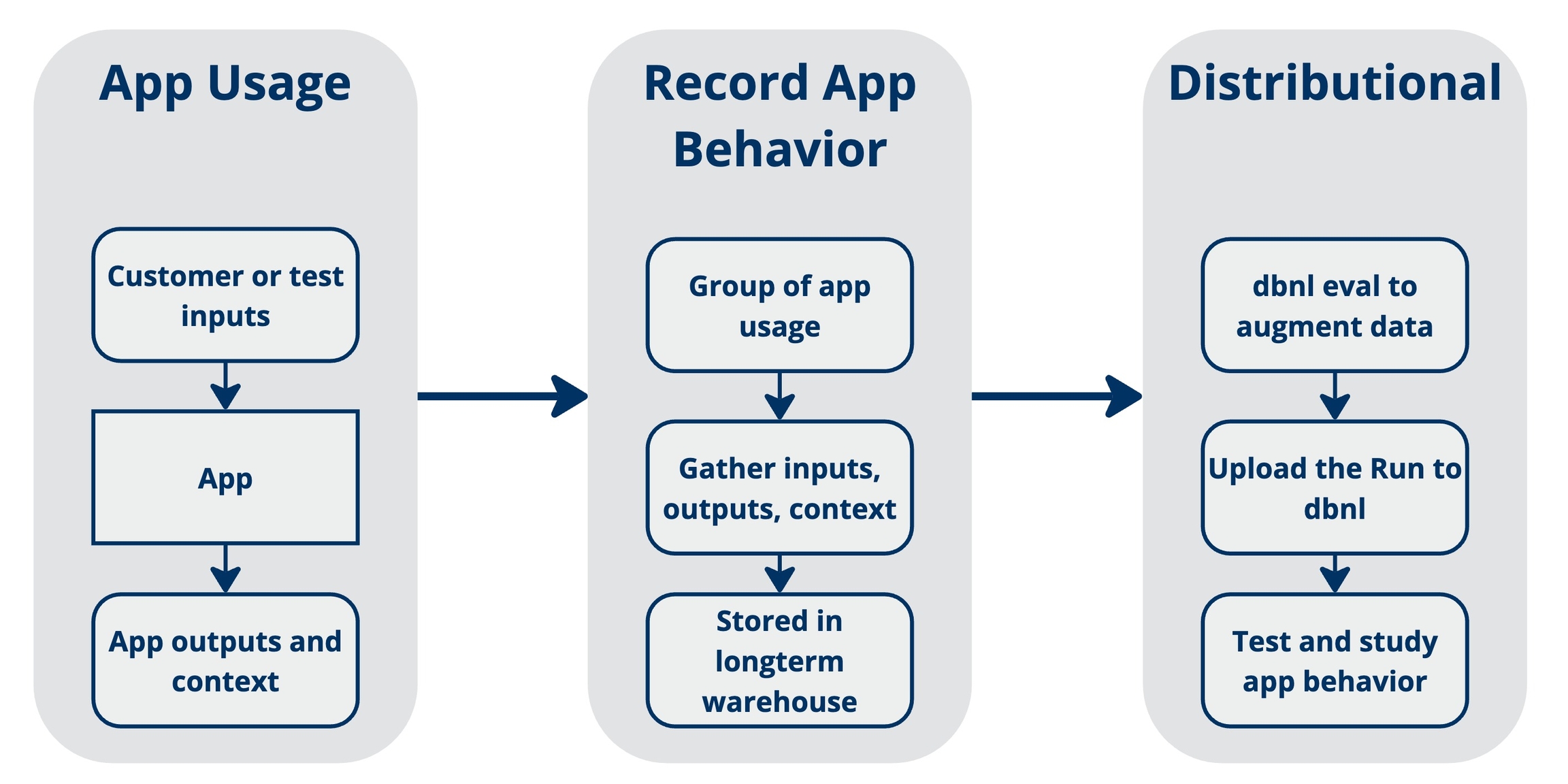

Your data + DBNL testing == insights about your app's behavior

Distributional uses data generated by your AI-powered app to study its behavior and alert you to valuable insights or worrisome trends. The diagram below gives a quick summary of this process:

Each app usage involves input(s), the resulting output(s), and context about that usage

Example: Input is a question from a user; Output is your app’s answer to that question; Context is the time/day that the question was asked.

As the app is used, you record and store the usage in a data store for later review

Example: At 2am every morning, an Airflow job parses all of the previous day’s app usages and sends that info to a data store.

When data is moved to your data store, it is also submitted to DBNL for testing.

Example: The 2am Airflow job is amended to include data augmentation by DBNL Eval and uploading of the resulting Run to trigger automatic app testing.

A Run usually contains many (e.g., dozens or hundreds) rows of inputs + outputs + context, where each row was generated by an app usage. Our insights are statistically derived from the distributions estimated by these rows.

You can read more about the DBNL specific terms . Simply stated, a Run contains all of the data which DBNL will use to test the behavior of your app – insights about your app’s behavior will be derived from this data.

is our library that provides access to common, well-tested GenAI evaluation metrics. You can use DBNL Eval to augment data in your app, such as the inputs and outputs. You are also able to bring your own eval metrics and use them in conjunction with DBNL Eval or standalone. Doing so produces a broader range of tests that can be run, and it allows the platform to produce more powerful insights.

Discover how dbnl manages user permissions through a layered system of organization and namespace roles—like org admin, org reader, namespace admin, writer, and reader.

A user is an individual who can log into a dbnl organization.

Permissions are settings that control access to operations on resources within a dbnl organization. Permissions are made up of two components.

Resource: Defines which resource is being controlled by this permission (e.g. projects, users).

Verb: Defines which operations are being controlled by this permission (e.g. read, write).

For example, the projects.read permission controls access to the read operations on the projects resource. It is required to be able to list and view projects.

A role consists in a set of permissions. Assigning a role to a user gives the user all the permissions associated with the role.

Roles can be assigned at the organization or namespace level. Assigning roles at the namespace level allows for giving users granular access to projects and their related data.

An org role is a role that can be assigned to a user within an organization. Org role permissions apply to resources across all namespaces.

There are two default org roles defined in every organization.

The org admin role has read and write permissions for all org level resources making it possible to perform organization management operations such as creating namespaces and assigning users roles.

The org reader role has read-only permissions to org level resources making it possible to navigate the organization by listing users and namespaces.

To assign a user an org role, go to ☰ > Settings > Admin > Users, scroll to the relevant user and select the an org role from the dropdown in the Org Role column.

A namespace role is a role that can be assigned to a user within a namespace. Namespace role permissions only apply to resources defined within the namespace in which the role is assigned.

There are three default namespace roles defined in every organization.

The namespace admin role has read and write permissions for all namespace level resources within a namespace making it possible to perform namespace management operations such as assigning users roles within a namespace.

The namespace admin role has read and write permissions for all namespace level resources within a namespace except for those resources and operations related to namespace management such as namespace role assignments.

The namespace reader role has read-only permissions for all namespace level resources within a namespace.

This is an experimental role that is available through the API, but is not currently fully supported in the UI.

To assign a user a namespace role within a namespace, go to ☰ > Settings > Admin > Namespaces, scroll and click on the relevant namespace and then click + Add User.

Instructions for self-hosted deployment options

There are two main options to deploy the dbnl platform as a self-hosted deployment:

Helm chart: The dbnl platform can be deployed using a Helm chart to existing infrastructure provisioned by the customer.

Terraform module: The dbnl platform can be deployed using a Terraform module on infrastructure provisioned by the module alongside the platform. This is options is supported on AWS and GCP.

Which option to choose depends on your situation. The Helm chart provides maximum flexibility, allowing users to provision their infrastructure using their own processes, while the Terraform module provides maximum simplicity, reducing the installation to single Terraform command.

Understanding key concepts and their role relative to your app

Adaptive testing for AI applications requires more information than standard deterministic testing. This is because:

AI applications are multi-component systems where changes in one part can affect others in unexpected ways. For instance, a change in your vector database could affect your LLM's responses, or updates to a feature pipeline could impact your machine learning model's predictions.

AI applications are non-stationary, meaning their behavior changes over time even if you don't change the code. This happens because the world they interact with changes - new data comes in, language patterns evolve, and third-party models get updated. A test that passes today might fail tomorrow, not because of a bug, but because the underlying conditions have shifted.

AI applications are non-deterministic. Even with the exact same input, they might produce different outputs each time. Think of asking an LLM the same question twice - you might get two different, but equally valid, responses. This makes it impossible to write traditional tests that expect exact matches.

To account for this, each time you want to measure the behavior of the AI application, you will need to:

Record outcomes at all of the app’s components, and

Push a distribution of inputs through the app to study behavior across the full spectrum of possible app usage.

The inputs, outputs, and outcomes associated with a single app usage are grouped in a Result, with each value in a result described as a Column. The group of results that are used to measure app behavior is called a Run. To determine if an app is behaving as expected, you create a Test, which involves statistical analysis on one or more runs. When you apply your tests to the runs that you want to study, you create a Test Session, which is a permanent record of the behavior of an app at a given time.

Tokens are used for programmatic access to the dbnl platform.

A personal access token is a token that can be used for programmatic access to the dbnl platform through the SDK.

Tokens are not revocable at this time. Please remember to keep your tokens safe.

Token permissions are resolved at use time, not creation time. As such, changing the user permissions after creating a personal access token will change the permissions of the personal access token.

To create a new personal access token, go to ☰ > Personal Access Tokens and click Create Token.

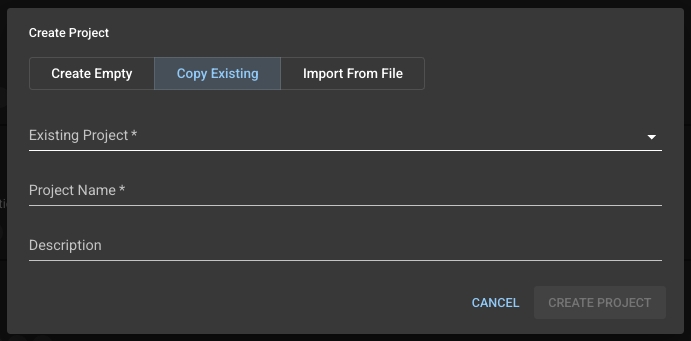

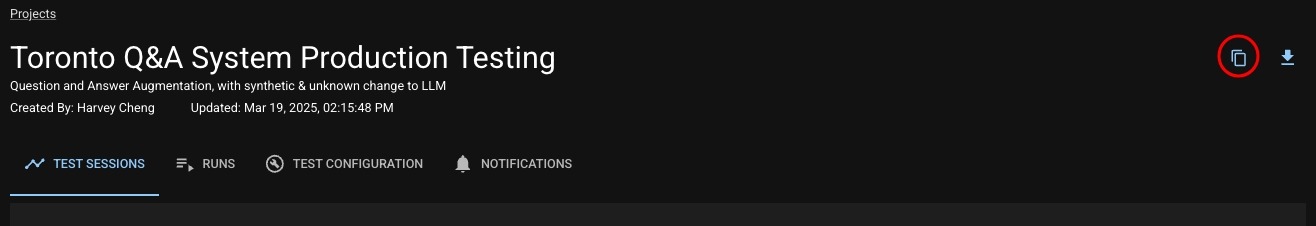

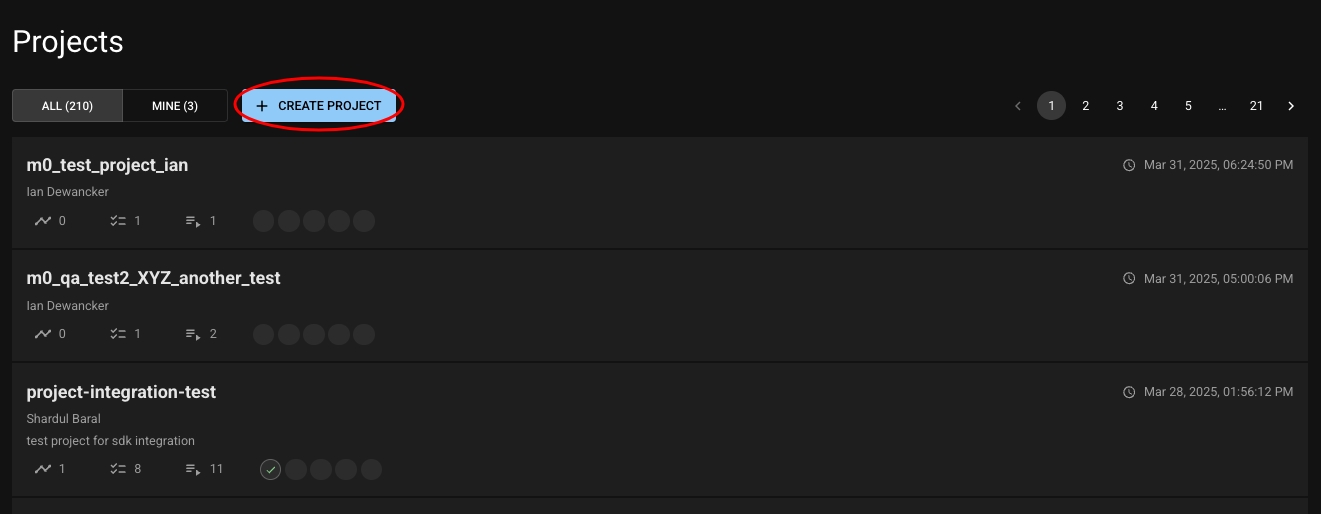

You can create a Project either via the UI or the SDK:

Simply click the "Create Project" button from the Project list view.

You can quickly copy an existing Project to get up and running with a new one. This will copy the following items into your new Project:

Test specifications

Test tags

Notification rules

There are a couple of ways to copy a Project.

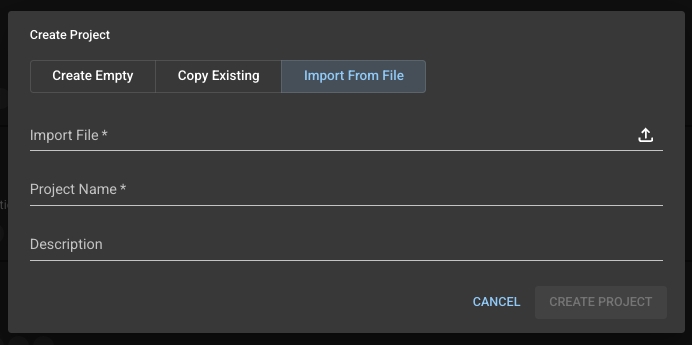

Any Project can be exported to a JSON file; that JSON file can then be adjusted to your liking and imported as a new Project. This is doable both via the UI and the SDK:

To export a Project, simply click the download icon on the Project page, in the header.

This will download the Project's JSON to your computer. There is an example JSON in the expandable section below.

Once you have a Project JSON, you can edit it as you'd like, and then import it by clicking the "Create Project" button on the Project list and then clicking the "Import from File" tab.

Fill out the name and description, click "Create Project", and you're all set!

Exporting and importing a Project is done easily via the SDK functions export_project_as_json and import_project_from_json.

You can also just directly copy a given Project. Again, this can be done via the UI or the SDK:

There are two ways to copy a Project from the UI:

In the Project list, after you click "Create Project", you can navigate to the "Copy Existing" tab and choose a Project from the dropdown.

While viewing a Project, you can click the copy icon in the header to copy it to a new Project.

Copying a Project is done easily via the SDK function copy_project.

The full process of reporting a Run ultimately breaks down into three steps:

Creating the Run, which includes defining its structure and any relevant metadata

Reporting the results of the Run, which include columnar data and scalars

Closing the Run to mark it as complete once reporting is finished

The important parts of creating a run are providing identifying information — in the form of a name and metadata — and defining the structure of the data you'll be reporting to it. As mentioned in the previous section, this structure is called the Run Schema.

A Run schema defines four aspects of the Run's structure:

Columns (the data each row in your results will contain)

Scalars (any Run-level data you want to report)

Index (which column or columns uniquely identify rows in your results)

Components (functional groups to organize the reported results in the form of a graph)

Columns are the only required part of a schema and are core to reporting Runs, as they define the shape your results will take. You report your column schema as a list of objects, which contain the following fields:

name: The name of the column

description: A descriptive blurb about what the column is

component: Which part of your application the column belongs to (see Components below)

Using the index field within the schema, you have the ability to designate Unique Identifiers – specific columns which uniquely identify matching results between Runs. Adding this information facilitates more direct comparisons when testing your application's behavior and makes it easier to explore your data.

Once you've defined the structure of your run, you can upload data to dbnl to report the results of that run. As mentioned above, there are two kinds of results from your run:

The row-level column results (these each represent the data of a single "usage" of your application)

The Run-level scalar results (these represent data that apply to all usages in your Run as a whole)

dbnl expects you to upload your results data in the form of a pandas DataFrame. Note that scalars can be uploaded as a single-row DataFrame or as a dictionary of values.

Now that you understand each step, you can easily integrate all of this into your codebase with a few simple function calls via our SDK:

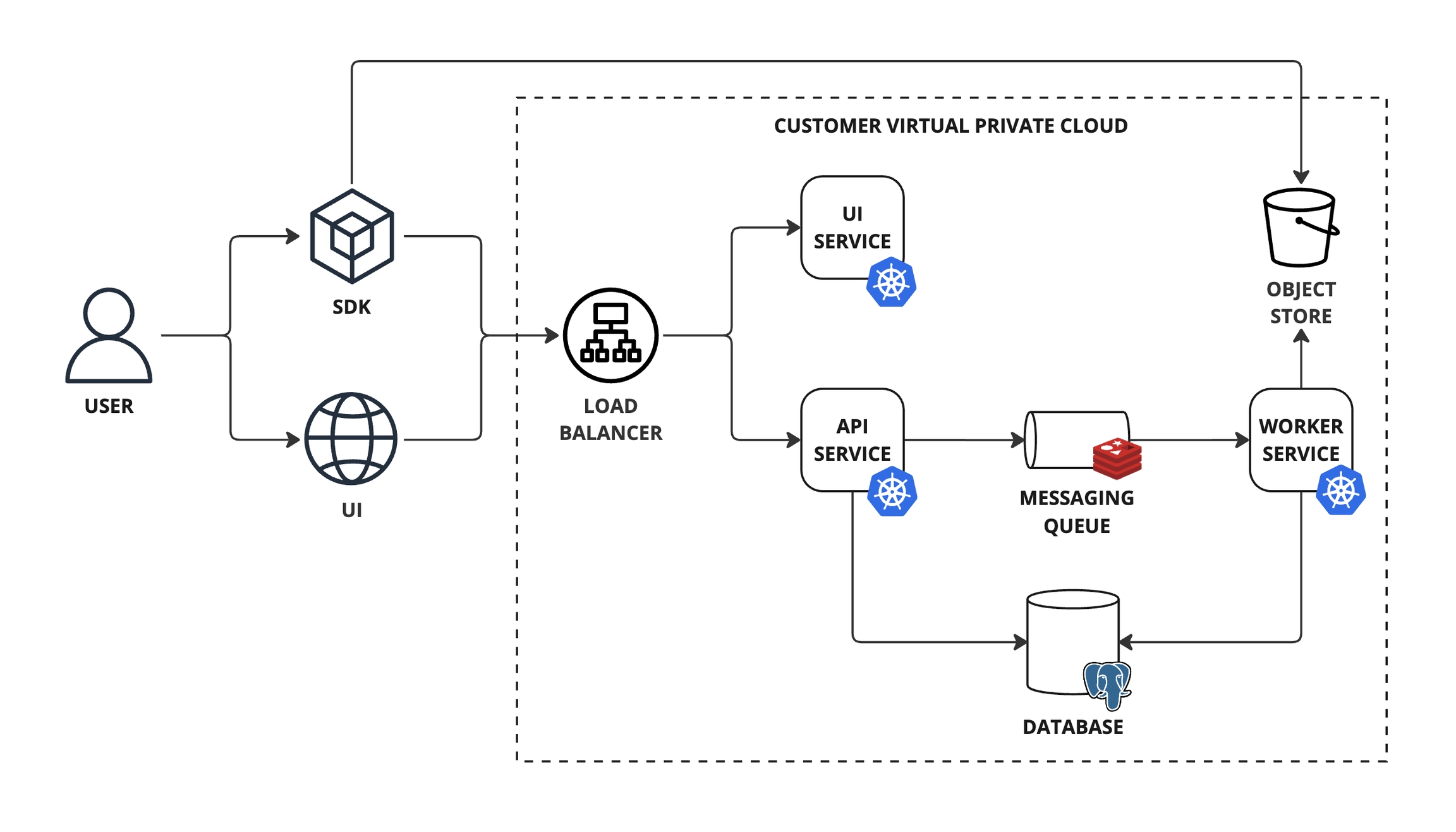

An overview of the architecture for the dbnl platform

The dbnl platform architecture consists of a set of services packaged as Docker images and a set of standard infrastructure components.

The dbnl platform requires the following infrastructure:

A Kubernetes cluster to host the dbnl platform services.

A PostgreSQL database to store metadata.

An object store bucket to store raw data.

A Redis database to serve as a messaging queue.

A load balancer to route traffic to the API or UI service.

The dbnl platform consists in three core services:

The API service (api-srv) serves the dbnl API and orchestrates work across the dbnl platform.

The worker service (worker-srv) processes async jobs scheduled by the API service.

The UI service (ui-srv) serves the dbnl UI assets.

A personal access token has the same permissions as the user that created it. See for more details about permissions.

Personal access tokens are implemented using and are not persisted. Tokens cannot be recovered if lost and a new token will need to be created.

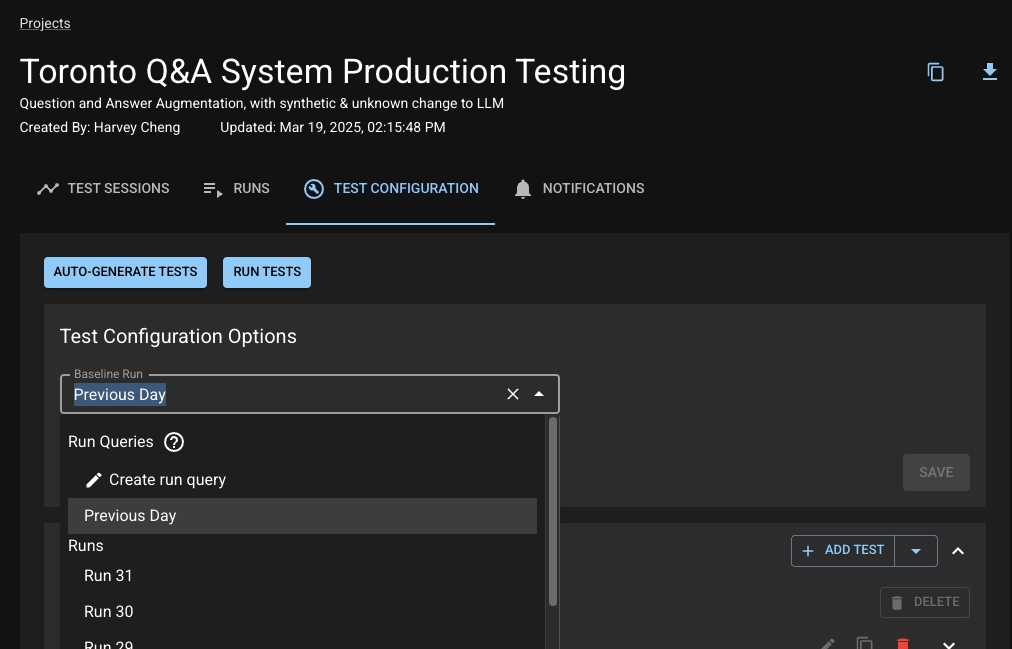

The "Baseline Run" is a core concept in dbnl that, appropriately, refers to the Run used as a baseline when executing a Test Session. Conversely, the Run being tested is called the "Experiment Run". Any that compare statistics will test the values in the experiment relative to the baseline.

Depending on your use case, you may want to make your Baseline Run dynamic. You can use a Run Query for this. Currently, dbnl supports setting a Run Query that looks back a number of previous runs. For example, in a production testing use case, you may want to use the previous Run as the baseline for each Test Session, so you'd create a Run Query that looks back 1 run. See the UI example in the section for information on how to create a Run Query. You can also create a Run Query .

You can choose a Baseline Run at the time of Test Session creation. If you do not provide one, dbnl will use your Project's default Baseline Run. See for more information.

From your Project, click the "Test Configuration" tab. Choose a Run or Run Query from the Baseline Run dropdown.

You can set a Run as baseline via the set_run_as_baseline or set_run_query_as_baseline functions.

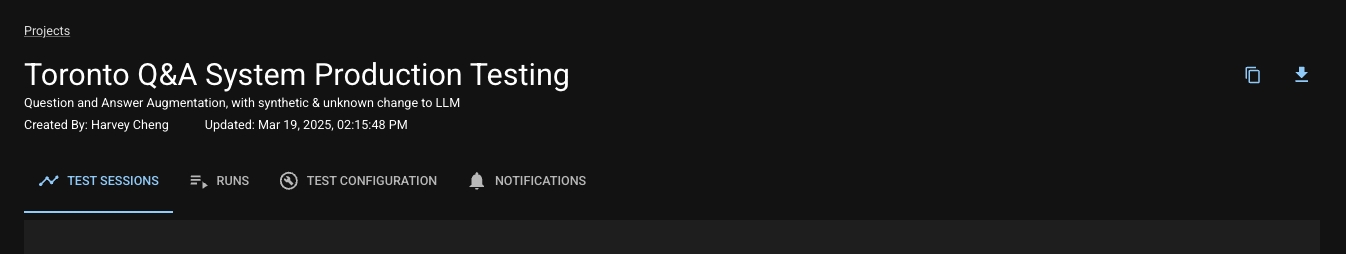

Projects are the main organizational tool in dbnl. Each Project lives within a in your and is accessible by everyone in that Namespace. Generally, you'll create one Project for every AI application that you'd like to test with dbnl. The list of Projects available to you is the default landing page when browsing to the dbnl UI.

Your Project will contain all of your app's — a collection of results from your app — and all of the that you've defined to monitor the behavior of your app. It also has a name and various configurable properties like a and .

Creating a project with the SDK can be done easily with the function.

Each of these steps can be done separately via our , but it can also be done conveniently with a single SDK function call: dbnl.report_run_with_results, which is recommended. See below.

We also have an eval library available that lets you generate useful metrics on your columns and report them to dbnl alongside the Run results. Check out for more information.

In older versions of dbnl, the job of the schema was done by something called the "Run Config". The Run Config has been fully deprecated, and you should check the and update any code you have.

type: The type of the column, e.g. int. For a list of available types, see

Scalars represent any data that live at the Run level; that is, the represent single data points that apply to your entire Run. For example, you may want to calculate an for the entirety of a result set for your model. The scalar schema is also a list of objects, and takes on the same fields as the column schema above.

Components are defined within the components_dag field of the schema. This defines the topological structure of your app as a . Using this, you can tell dbnl which part of your application different columns correspond to, enabling a more granular understanding of your app's behavior.

You can learn more about creating a Run schema in the SDK reference for . There is also a , but we recommend the method shown in the .

Check out the section on to see how dbnl can supplement your results with more useful data.

There are functions to upload and in the SDK, but, again, we recommend the method in the !

Once you're finished uploading results to dbnl for your Run, the run should be closed, to mark it as ready to be used in Test Sessions. Note that reporting results to a Run will overwrite any existing results, and, once closed, the Run can no longer have results uploaded. If you need to close a Run, there is an for it, or you can close an open Run from its page on the UI.

Tests are the key tool within dbnl for asserting the behavior and consistency of Runs. Possible goals during testing can include:

Asserting that your application, holistically or for a chosen column, behaves consistently compared to a baseline.

Asserting that a chosen column meets its minimum desired behavior (e.g., inference throughput);

Asserting that a chosen column has a distribution that roughly matches a baseline reference;

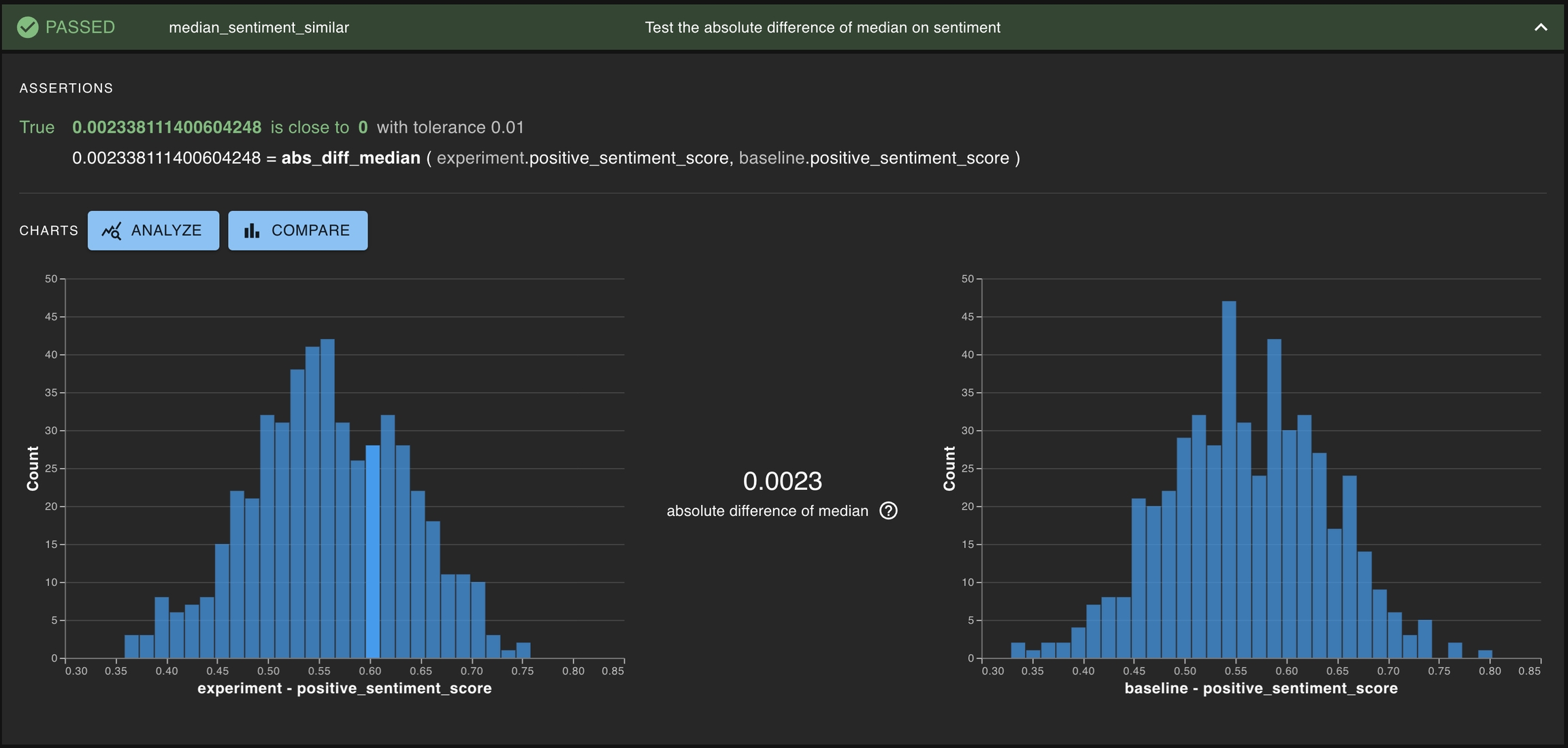

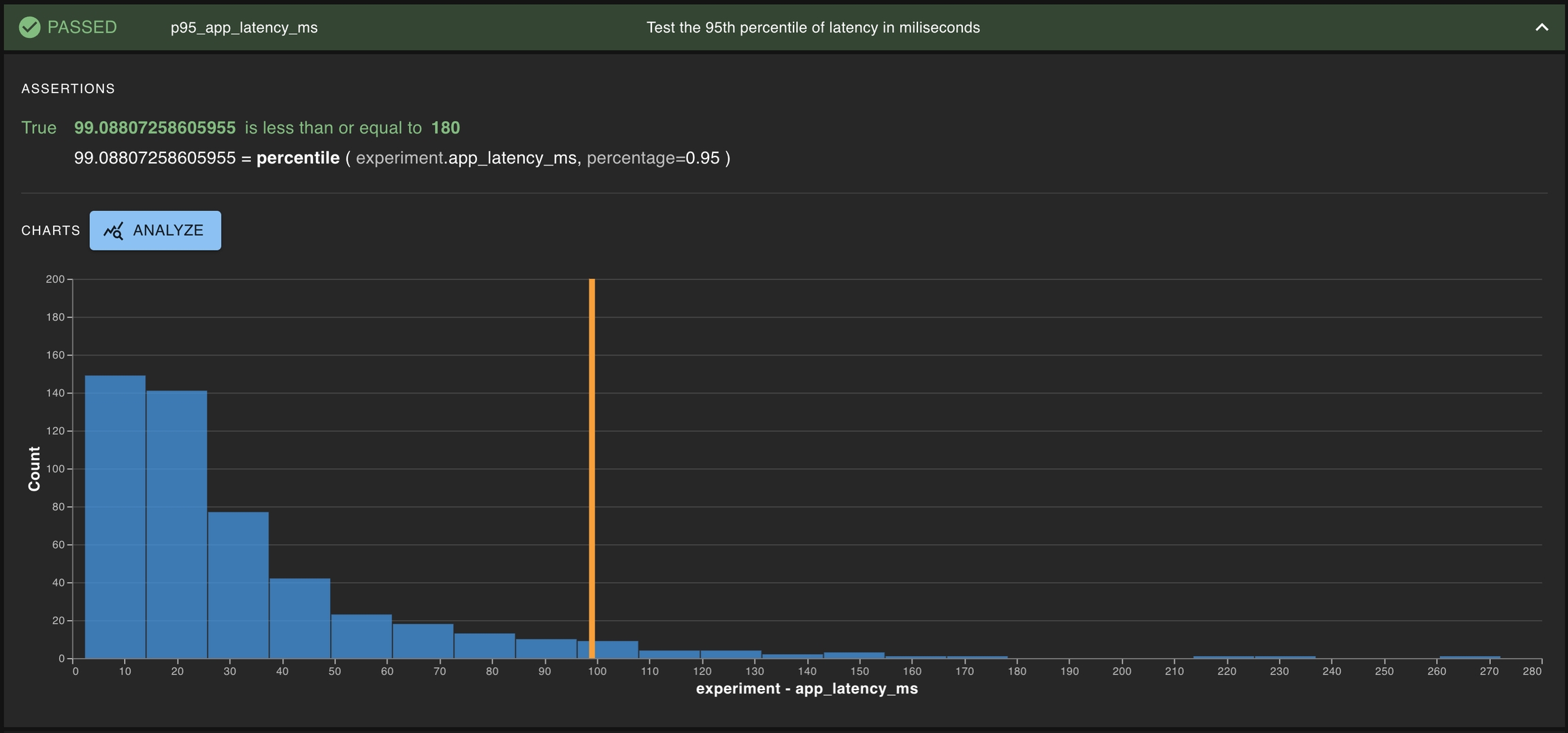

At a high level, a Test is a statistic and an assertion. Generally, the statistic aggregates the data in a column or columns, and the assertion tests some truth about that aggregation. This assertion may check the values from a single Run, or it may check how the values in a Run have changed compared to a baseline. Some basic examples:

Assert the 95th percentile of app_latency_ms is less than or equal to 180

Assert the absolute difference of median of positive_sentiment_score against the baseline is close to 0

In the next sections, we will explore the objects required for testing alongside the methods for creating tests, running tests, reviewing/analyzing tests, and some best practices.

Terraform module installation instructions

The Terraform module option provides maximum simplicity. It provisions all the required infrastructure and permissions in your cloud provider of choice before deploying the dbnl platform Helm chart, removing the need to provision any infrastructure or permission separately.

The following prerequisite steps are required before starting the Terraform module installation.

To configure the Terraform module, you will need:

A domain name to host the dbnl platform (e.g. dbnl.example.com).

An RSA key pair can be generated with:

On the environment from which you are planning to install the module, you will need to:

At a minimum, the user performing the installation needs to be able to provision the following infrastructure:

Soon.

The steps to install the Terraform module using the Terraform CLI are as follows:

Create a dbnl folder and change to it.

Create a modules folder and copy the terraform module to it.

Create a variables.tf file.

Create a main.tf file.

Create a dbnl.tfvars file.

Initialize the Terraform module.

Apply the Terraform module.

Soon.

For more details on all the installation options, see the Terraform module README file and examples folder.

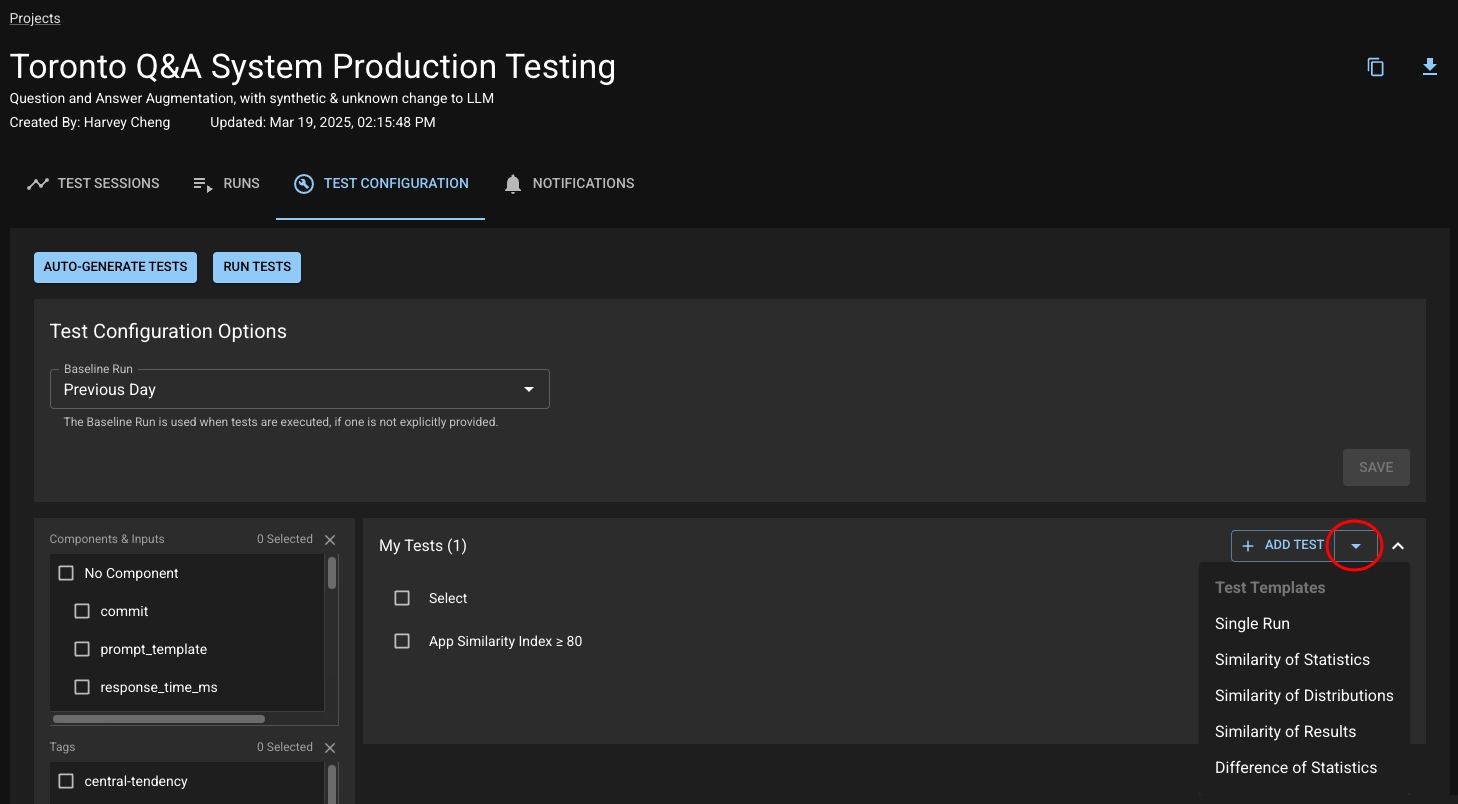

As you become more familiar with the behavior of your application, you may want to build on the default App Similarity Index test with tests that you define yourself. Let's walk through that process.

As you browse the dbnl UI, you will see "+" icons or "+ Add Test" buttons appear. These provide context-aware shortcuts for easily creating relevant tests.

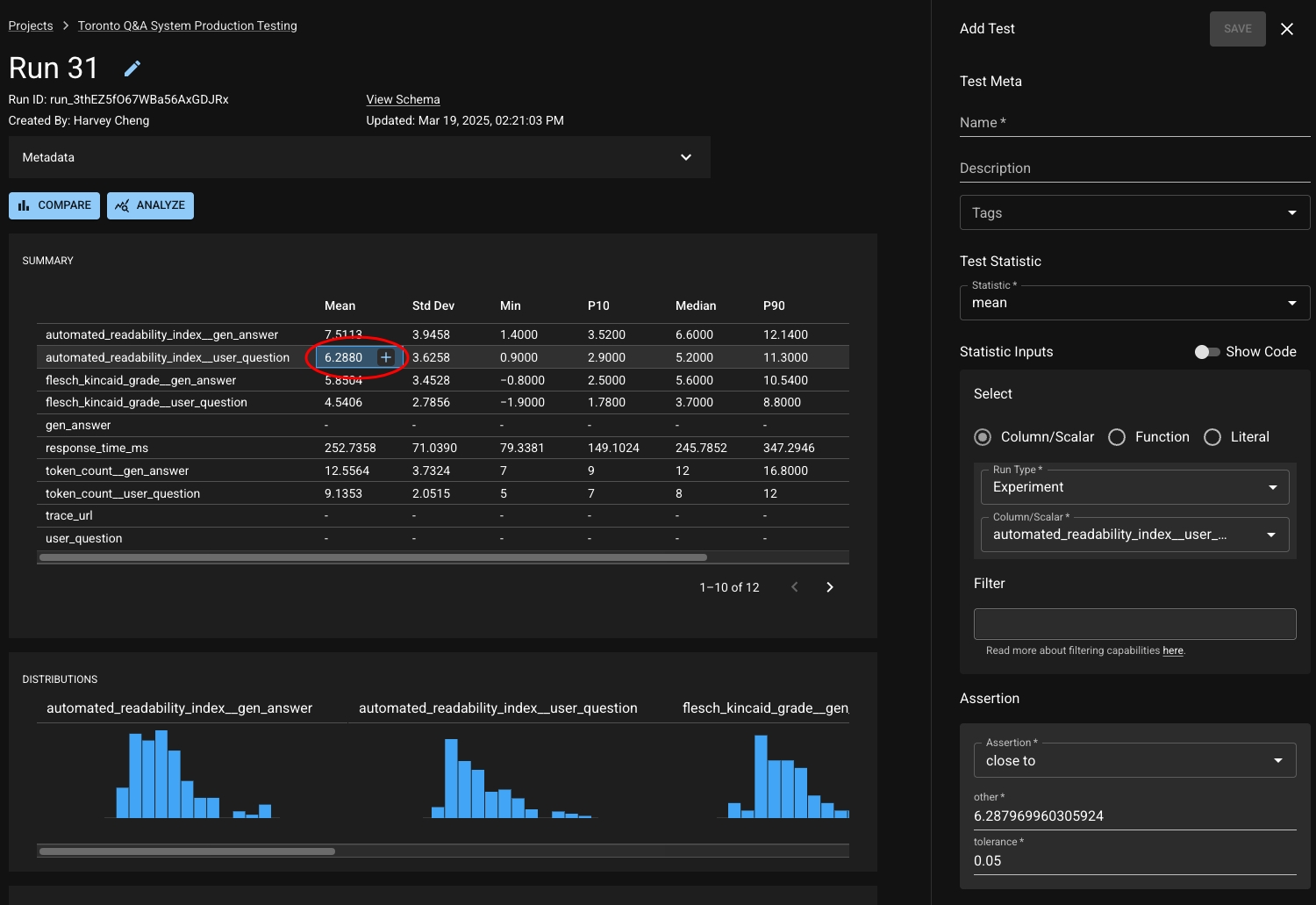

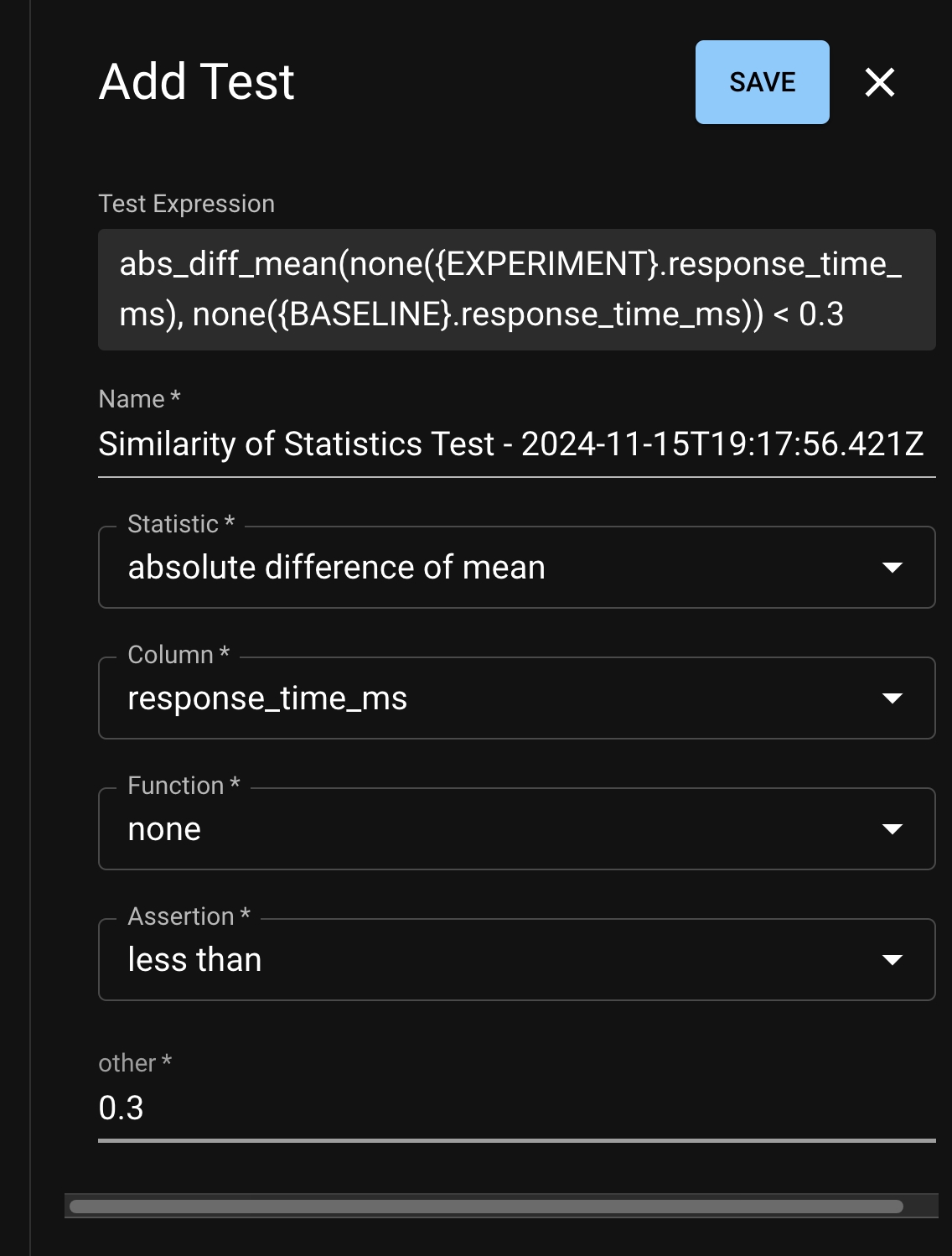

At each of these locations, a test creation drawer will open on the right side of the page with several of the fields pre-populated based on the context of the button, alongside a history of the statistic, if relevant. Here are some of the best places to look for dbnl-assisted test creation:

When inspecting the details of a column or metric from a Test Session, there are several "Add Test" buttons provided to allow you to quickly create a test on a relevant statistic. The Statistic History graph can help guide you on choosing a threshold.

When viewing a Run, each entry in the summary statistics table can be used to seed creation of a test for that chosen statistic.

These shortcuts appear in several other places in the UI as well when you are inspecting your Runs and Test Sessions; keep an eye out for the "+"!

Test templates are macros for basic test patterns recommended by Distributional. It allows the user to quickly create tests from a builder in the UI. Distributional provides five classes of test templates:

From the Test Configuration tab on your Project, click the dropdown next to "Add Test".

Select from one of the five options. A Test Creation drawer will appear and the user can edit the statistic, column, and assertion that they desire. Note that each Test Template has a limited set of statistics that it supports.

If you have a good idea of what you want to test or just want to explore, you can create tests manually from either the UI or via the Python SDK.

Let's say you are building an Q&A chatbot, and you have a column for the length of your bot's responses, word_count. Perhaps you want to ensure that your bot never outputs more than 100 words; in that case, you'd choose:

The statistic max,

The assertion less than or equal to ,

and the threshold 100.

But what if you're not opinionated about the specific length? You just want to ensure that your app is behaving consistently as it runs and doesn't suddenly start being unusually wordy or terse. dbnl makes it easy to test that as well; you might go with:

The statistic absolute difference of mean,

The assertion less than,

and the threshold 20.

Now you're ready to go and create that test, either via the UI or the SDK:

From your Project, click the "Test Configuration" tab.

Next to the "My Tests" header, you can click "Add Test" to open the test creation page, which will enable you to define your test through the dropdown menu on the left side of the window.

By default, your Project will be pre-populated with a test for the first goal above. This is the "App " test which gives you a quick understanding of whether your application's behavior has significantly deviated from a selected baseline.

Terraform modules are available for AWS and GCP. For access to the Terraform module for your cloud provider of choice and to get registry credentials, .

A set of dbnl registry credentials to pull the (e.g. Docker images, Helm charts).

An RSA key pair to sign the .

Install

Install

Install

(IAM)

(VPC)

(EKS)

(ACM)

(ALB)

The Terraform module can be installed using . We recommend using a to manage the Terraform state.

The dbnl platform uses or OIDC for authentication. OIDC providers that are known to work with dbnl include:

Follow the to create a new SPA (single page application).

In Settings > Application URIs, add the dbnl deployment domain to the list of Allowed Callback URLs (e.g. dbnl.mydomain.com).

Navigate to Settings > Basic Information and copy the Client ID as the OIDC clientId option.

Navigate to Settings > Basic Information and copy the Domain and prepend with https:// to use as the OIDC issuer option (e.g. https://my-app.us.auth0.com/).

Follow the to create a custom API.

Use your dbnl deployment domain as the Identifier (e.g. dbnl.mydomain.com).

Navigate to Settings > General Settings and copy the Identifier as the OIDC audience option.

Set the OIDC scopes option to "openid profile email".

Follow the to create a new SPA (single page application) and enable OIDC.

Add the dbnl deployment domain as the callback URL (e.g. dbnl.mydomain.com).

[Optional] Follow the to restrict access to certain users.

Navigate to App Registrations > (Application) > Manage > API permissions and add the Microsoft Graph email, openid and profile permissions to the application.

Navigate to App Registrations > (Application) > Manage > Manifest and set access token version to 2.0 with "accessTokenAcceptedVersion": 2 .

Navigate to App Registrations > (Application) > Manage > Token configuration > Add optional claim > Access > email to add the email optional claim to the access token type.

Navigate to App Registrations > (Application) and copy the Application (client) ID (APP_ID) to be used as the OIDC clientId and OIDC audience options.

Set the OIDC issuer option to https://login.microsoftonline.com/{APP_ID}/v2.0 .

Set the OIDC scopes option to "openid email profile {APP_ID}/.default".

Set the Sign-in redirect URIs to your dbnl domain (e.g. dbnl.mydomain.com)

Navigate to General > Client Credentials and copy the Client ID to be used as the OIDC clientId option.

Navigate to Sign on > OpenID Connect ID Token and copy the Issuer URL to be used as the OIDC issuer and OIDC audience options.

Set the OIDC scopes option to "openid email profile" .

The first step in coming up with a test is determining what behavior you're interested in. As described in , each Run of your application reports its behavior via its results, which are organized into columns (and scalars). Once you've identified the column or scalar you'd like to test on, then you need to determine what you'd like to apply to it and the you'd like to make on that statistic.

This might seem like a lot, but dbnl has your back! While you can define tests , dbnl has several ways of helping you identify what columns you might be interested in and letting you quickly define tests on them.

When creating a test, you can specify tags to apply to it. You can use these tags to filter which tests you want to include or exclude later when . Some of the test creation shortcuts on the UI do not currently allow specifying tests, but you can edit the test and add tags after the fact.

When you're looking at a Test Session, dbnl will provide insights about which columns or metrics have have demonstrated the most drift. These are great candidates to define tests on if you want to be specificially alerted about their behavior. You can click the "Add Test" button to create a test on the of the relevant column. The Similarity Index history graph can help guide you on choosing a threshold.

: These are parametric statistics of a column.

: These test if the absolute difference of a statistic of a column between two runs is less than a threshold.

: These test if the column from two different runs are similarly distributed is using a nonparametric statistic.

: These are tests on the row-wise absolute difference of result

: These tests the signed difference of a statistic of a column between two runs

When creating a test manually, you can also specify filters to apply the test only to specific rows within your Runs. Check out for more information.

On the left side you can configure your test by choosing a statistic and assertion. Note that you can use our builder or build a test spec with raw JSON (you can see some example test spec JSONs ). On the right, you can browse the data of recent Runs to help you figure out what statistics and thresholds are appropriate to define acceptable behavior.

Tests can be using the python SDK. Users must provide a JSON dictionary that adheres to the dbnl Test Spec, which is described in the previous link and has an example provided below.

You can see a full list with descriptions of available statistics and assertions .

Follow the to create a new SPA (single page application) and enable OIDC.

List of networking requirements

The dbnl platform needs to be hosted on a domain or subdomain (e.g. dbnl-example.com or dbnl.example.com). It cannot be hosted on a subpath.

It is recommended that the dbnl platform be served over HTTPS. Support for SSL termination at the load balancer is included.

Currently, the dbnl platform cannot run in an air-gapped environment and requires a few URLs to be accessible via egress.

Artifacts Registry

Required to fetch the dbnl paltform artifacts such as the Helm chart and Docker images.

https://us-docker.pkg.dev/dbnlai/

Object Store

Required for services to access the object store.

https://{BUCKET}.s3.amazonaws.com/ (if using S3)

https://storage.googleapis.com/{BUCKET} (if using GCS)

OIDC

Required to validate OIDC tokens.

https://login.microsoftonline.com/{APP_ID}/v2.0/ (if using Microsoft EntraID)

https://{ACCOUNT}.okta.com/ (if using Okta)

Integrations

Required to use some integrations.

https://events.pagerduty.com/v2/enqueue (if using PagerDuty)

https://hooks.slack.com/services/ (if using Slack)

Returns the absolute value of the input.

Syntax

Adds the two inputs.

Syntax

Logical and operation of two or more boolean columns.

Syntax

Returns the ARI (Automated Readability Index) which outputs a number that approximates the grade level needed to comprehend the text. For example if the ARI is 6.5, then the grade level to comprehend the text is 6th to 7th grade.

Syntax

Computes the BLEU score between two columns.

Syntax

Returns the number of characters in a text column.

Syntax

Aliases

num_chars

Divides the two inputs.

Syntax

Computes the element-wise equal to comparison of two columns.

Syntax

Aliases

eq

Filters a column using another column as a mask.

Syntax

Returns the Flesch-Kincaid Grade of the given text. This is a grade formula in that a score of 9.3 means that a ninth grader would be able to read the document.

Syntax

Computes the element-wise greater than comparison of two columns. input1 > input2

Syntax

Aliases

gt

Computes the element-wise greater than or equal to comparison of two columns. input1 >= input2

Syntax

Aliases

gte

Returns true if the input string is valid json.

Syntax

Computes the element-wise less than comparison of two columns. input1 < input2

Syntax

Aliases

lt

Computes the element-wise less than or equal to comparison of two columns. input1 <= input2

Syntax

Aliases

lte

Returns Damerau-Levenshtein distance between two strings.

Syntax

Returns True if the list has duplicated items.

Syntax

Returns the length of lists in a list column.

Syntax

Most common item in list.

Syntax

Multiplies the two inputs.

Syntax

Returns the negation of the input.

Syntax

Logical not operation of a boolean column.

Syntax

Computes the element-wise not equal to comparison of two columns.

Syntax

Aliases

neq

Logical or operation of two or more boolean columns.

Syntax

Returns the rouge1 score between two columns.

Syntax

Returns the rouge2 score between two columns.

Syntax

Returns the rougeL score between two columns.

Syntax

Returns the rougeLsum score between two columns.

Syntax

Returns the number of sentences in a text column.

Syntax

Aliases

num_sentences

Subtracts the two inputs.

Syntax

Returns the number of tokens in a text column.

Syntax

Returns the number of words in a text column.

Syntax

Aliases

num_words

Instructions for managing a dbnl Sandbox deployment.

The dbnl sandbox deployment bundles all of the dbnl services and dependencies into a single self-contained Docker container. This container replicates a full scale dbnl deployment by creating a Kubernetes cluster in the container and using Helm to deploy the dbnl platform and its dependencies (postgresql, redis and minio).

The sandbox deployment is not suitable for production environments.

The sandbox container needs access to the following two registries to pull the containers for the dbnl platform and its dependencies.

us-docker.pkg.dev

docker.io

The sandbox container needs sufficient memory and disk space to schedule the k3d cluster and the containers for the dbnl platform and its dependencies.

Although the sandbox image can be deployed manually using Docker, we recommend using the dbnl CLI to manage the sandbox container. For more details on the sandbox CLI options, run:

To start the dbnl Sandbox, run:

This will start the sandbox in a Docker container named dbnl-sandbox. It will also create a Docker volume of the same name to persist data beyond the lifetime of the sandbox container.

To stop the dbnl sandbox, run:

This will stop and remove the sandbox container. It does not remove the Docker volume and the next time the sandbox is started, it will remount the existing volume, persisting the data beyond the lifetime of the Sandbox container.

To get the status of the dbnl sandbox, run:

To tail the dbnl sandbox logs, run:

To execute a command in the dbnl sandbox, run:

This will execute COMMAND within the dbnl sandbox container. This is a useful tool for debugging the state of the containers running within the sandbox containers. For example:

To get a list of all Kubernetes resources, run:

To get the logs for a particular pod, run:

This is an irreversible action. All the sandbox data will be lost forever.

To delete the sandbox data, run:

The sandbox deployment uses username and password authentication with a single user. The user credentials are:

Username: admin

Password: password

The sandbox persists data in a Docker volume named dbnl-sandbox. This volume is persisted even if the sandbox is stopped, making it possible to later resume the sandbox without losing data.

If deploying and hosting the sandbox on a remote host, such as on EC2 or Compute Engine, the sandbox --base-url option needs to be set on start.

For example, if hosting the sandbox on http://example.com:8080, the sandbox needs to be started with:

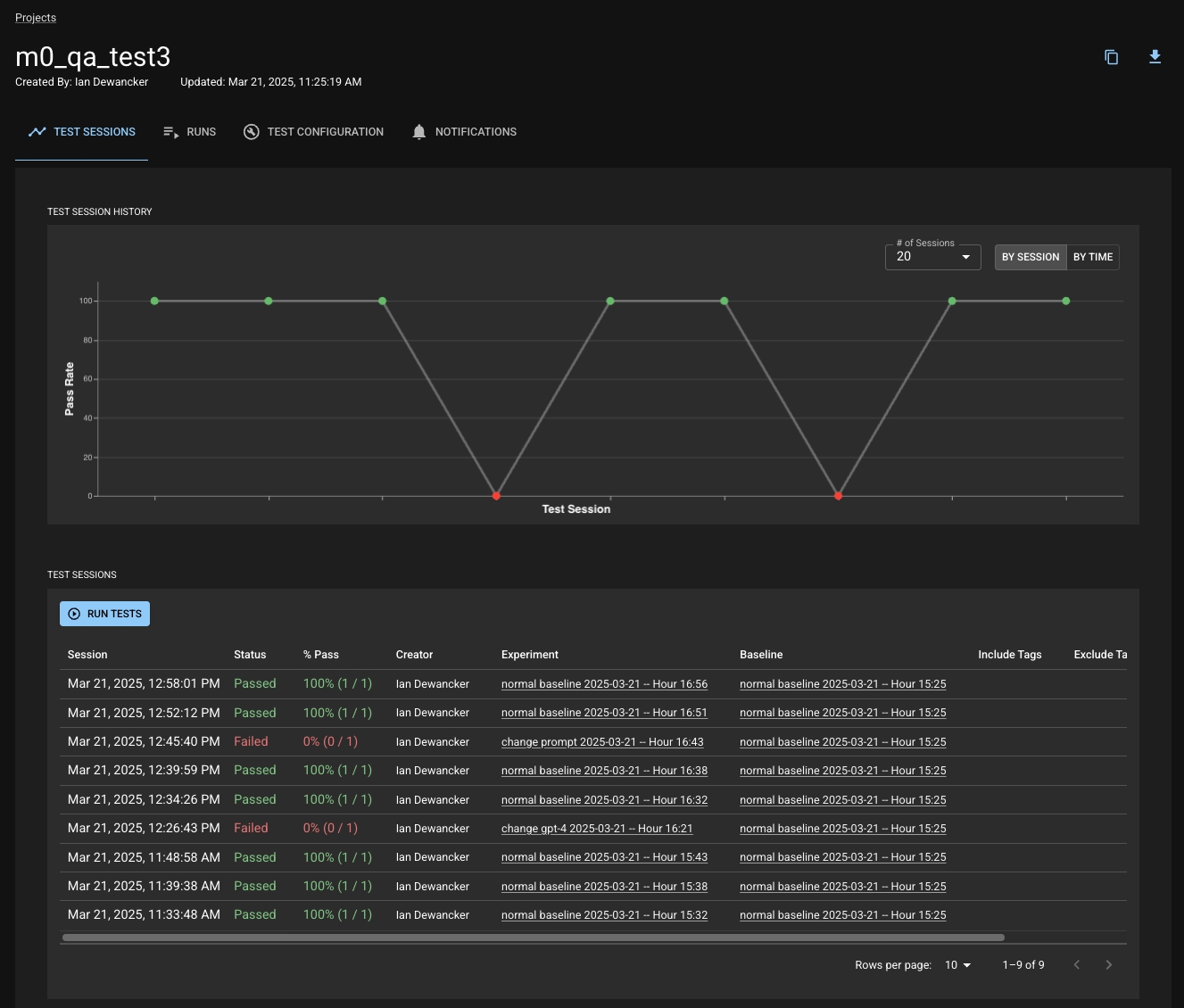

The Test Sessions section in your Project is a record of all the Test Sessions you've created. You can view a line chart of the pass rate of your Test Sessions over time or view a table with each row representing a Test Session You can click on a point in the chart or a row in the table to navigate to the corresponding Test Session's detail page to dig into what happened within that session.

When you first open a Test Session's page, you will land on the Summary tab. This tab provides you with summary information about the session such as the App Similarity Index, which tests have failed, and key insights about the session. There are also tabs to see the Similarity Report (more information below) or to view all the test results within the session.

On the Summary tab, you'll notice a list of key insights that dbnl has discovered about your Test Session. The key insights will tell you at a glance which columns or metrics have had the most significant change in your Experiment Run when compared to the baseline. If you are particularly interested in the column or metric going forward, you can quickly add a test for its Similarity Index.

Expanding one of these will allow you to view some additional information such as a history of the Similarity Index for the related column or metric; if you are viewing a metric, it will also tell you the lineage of which columns the metric is derived from.

The Similarity Report gives you an overview of all the columns your Experiment Run, providing the relevant Similarity Indexes, the ability to quickly create tests from them, and the option to deep-dive into a column. Expanding one of the rows for a column for show you all the metrics calculated for that column, with their own respective Similarity Indexes and details.

If you click on the "See Details" link on any of these rows (or from the Key Insights view), you'll be taken to a view that lets you explore the respective column or metric in detail.

From this view, you can easily compare the changes in the column/metric with graphs and summary statistics. Expanding of the comparison statistics will give you even more information to dig into! Click "Add Test" to quickly create a test on the related statistic.

You can run any tests you've created (or just the default App Similarity Index test) to investigate the behavior of your application.

When you run a Test Session, you are running your tests against a given Experiment Run.

Tests are run within the context of a Test Session, which is effectively just a collection of tests run against an Experiment Run with a Baseline Run. You can create a Test Session, which will immediately run the tests, via the UI or the SDK:

absolute difference of max

-

absolute difference of mean

-

absolute difference of median

-

absolute difference of min

-

absolute difference of percentile

Requires percentage as a parameter.

absolute difference of standard deviation

-

absolute difference of sum

-

Category Rank Discrepancy

Computes the absolute difference in the proportion of the specified category between the experiment and baseline runs. The category is specified by its rank in the baseline run.

Requires rankas a parameter: can be one of [most_common, second_most_common, not_top_two].

Chi-squared stat, scaled

Kolmogorov-Smirnov stat, scaled

max

-

mean

-

median

-

min

-

mode

-

Null Count

Computes the number of None values in a column.

Null Percentage

Computes the fraction of None values in a column.

percentile

Requires percentage as a parameter.

scalar

signed difference of max

-

signed difference of mean

-

signed difference of median

-

signed difference of min

-

signed difference of percentile

Requires percentage as a parameter.

signed difference of standard deviation

-

signed difference of sum

-

standard deviation

-

sum

-

between

between or equal to

close to

equal to

greater than

greater than or equal to

less than

less than or equal to

not equal to

outside

outside or equal to

An overview of the dbnl Query Language

The dbnl Query Language is a SQL-like language that allows for querying data in runs for the purpose of drawing visualizations, defining metrics or evaluating tests.

An expression is a combination of literals, values, operators, and functions. Expressions can evaluate to scalar or columnar values depending on their types and inputs. There are three types of expressions that can be composed into arbitrarily complex expressions.

Literal expressions are constant-valued expressions.

Column and scalar expressions are references to columns or scalar values in a run. They use dot-notation to reference a column or scalar within a run.

For example, a column named score in a run with id run_1234 can be referenced with the expression:

Function expressions are functions evaluated over zero or more other expressions. They make it possible to compose simple expressions into arbitrarily complex expressions.

For example, the word_count function can be used to compute the word count of the text column in a run with id run_1234 with the expression:

Operators are aliases for function expressions that enhance readability and ease of use. Operator precedence is the same as that of most SQL dialect.

Arithmetic operators

Arithmetic operators provide support for basic arithmetic operations.

Comparison operators

Comparison operators provide support for common comparison operations.

Logical operators

Logical operators provide support for boolean comparisons.

The dbnl Query Language follows the null semantics of most SQL dialect. With a few exception, when a null value is used as an input to a function or operator, the result is null.

One exception to this is boolean functions and operators where ternary logic is used similar to most SQL dialects.

Install .

Install , the dbnl CLI and Python SDK.

Within the sandbox container, is used in conjunction with to schedule the containers for the dbnl platform and its dependencies.

The dbnl sandbox image and the dbnl platform images are stored in a private registry. For access, .

Once ready, the dbnl UI will be accessible at .

To use the dbnl Sandbox, set your API URL to , either through or through the .

This will tail the logs from the container. This does not include the logs from the services that run on the Kubernetes cluster within the container. For this, you will need to use the .

Once you've , you can check it out in the UI!

Across the Test Session page, you will see Similarity Indexes at both an "App" level as well as on each of your columns and metrics. This is a special summary score that dbnl calculates for you to help you quickly and easily understand how much your app has changed between the Experiment and Baseline Runs within the session, both holistically and at a granular level. You can define tests on any of the indexes — at the app level or on a specific metric or column. For more information, see the section "".

If you haven't already, take a look at the documentation on . All the methods for running a test will allow you to choose a Baseline Run at the time of Test Session creation, but you can also .

You can choose to run the tests associated with a Project by clicking on the "Run Tests" button on your Project. This button will open up a modal that allows you to specify the Baseline and Experiment Runs, as well as the tags of the tests you would like to include or exclude from the test session.

Tests can be run via the SDK function . Most likely, you will want to create a Test Session shortly after you've reported and closed a Run. See for more information.

Continue onto for how to look at and interpret the results from your Test Session.

Computes a scaled and normalized statistic between two nominal distributions.

Computes a scaled and normalized statistic between two ordinal distributions.

Special function for using in tests. Returns the input as a scalar value if it is a scalar and returns an error otherwise.

Below is a basic working example that highlights the SDK workflow. If you have not yet installed the SDK, follow .

boolean

true

int

42

float

1.0

string

'hello world'

-a

negate(a)

Negate an input.

a * b

multiply(a, b)

Multiply two inputs.

a / b

divide(a, b)

Divide two inputs.

a + b

add(a, b)

Add two inputs.

a - b

subtract(a, b)

Subtract two inputs.

a = b

eq(a, b)

Equal to.

a != b

neq(a, b)

Not equal to.

a < b

lt(a, b)

Less than.

a <= b

lte(a, b)

Less than or equal to.

a > b

gt(a, b)

Greater than.

a >= b

gte(a, b)

Greater than or equal to

not b

not(a, b)

Logical not of input.

a and b

and(a, b)

Logical and of two inputs.

a or b

or(a, b)

Logical or of two inputs.

4 > null

null

null = null

null

null + 2

null

word_count(null)

null

true

null

true

null

false

false

null

null

false

true

null

true

true

null

null

null

false

null

false

null

null

null

null

null

null

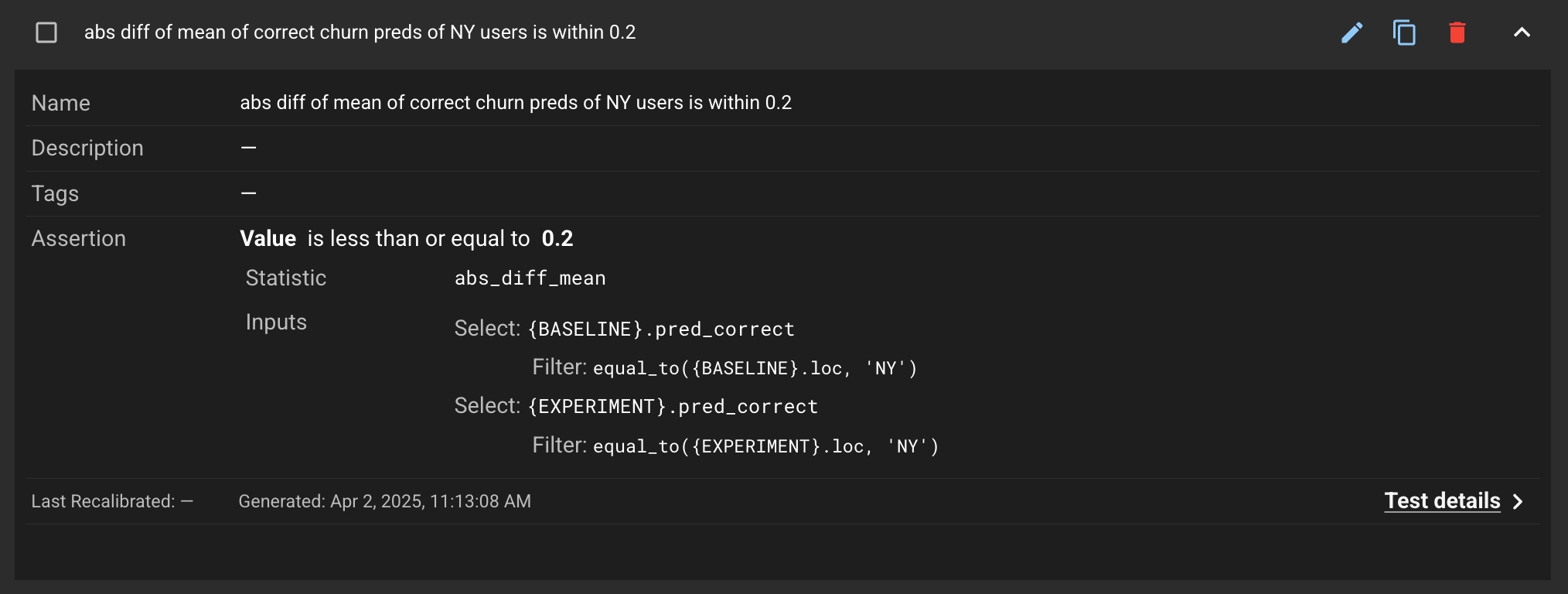

Filters can be used to specify a sub-selection of rows in Runs you would like to be tested.

For example, you might want to create a test that asserts that the absolute difference of means of the correct churn predictions is <= 0.2 between Baseline and Experiment Runs, only for rows where the loc column is NY.

Once you've used one of the methods above, you can now see the new test in the Test Configuration tab of your Project.

When a Test Session is created, this test will use the defined filters to sub-select for the rows that have the loc column equal to NY.

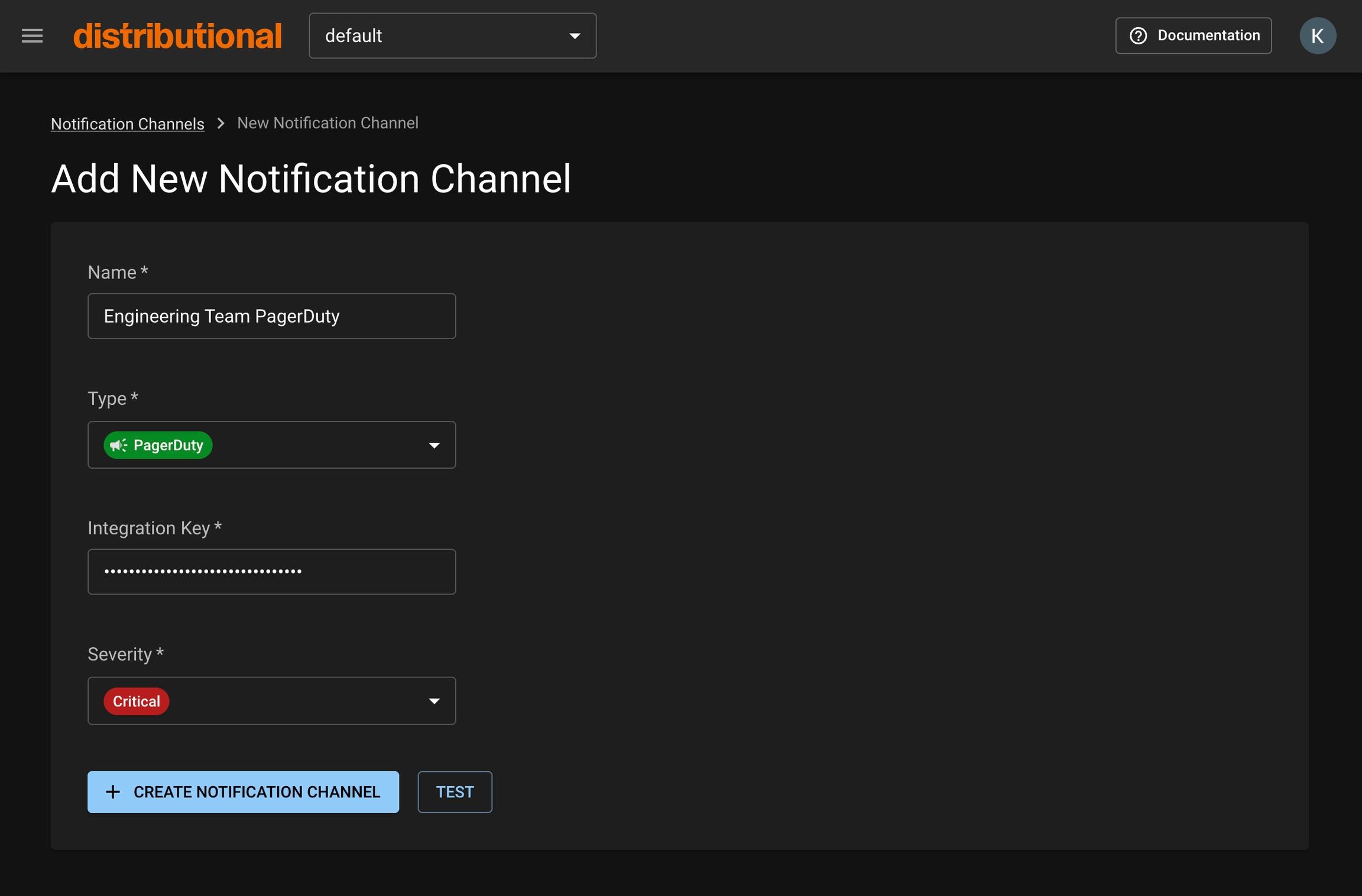

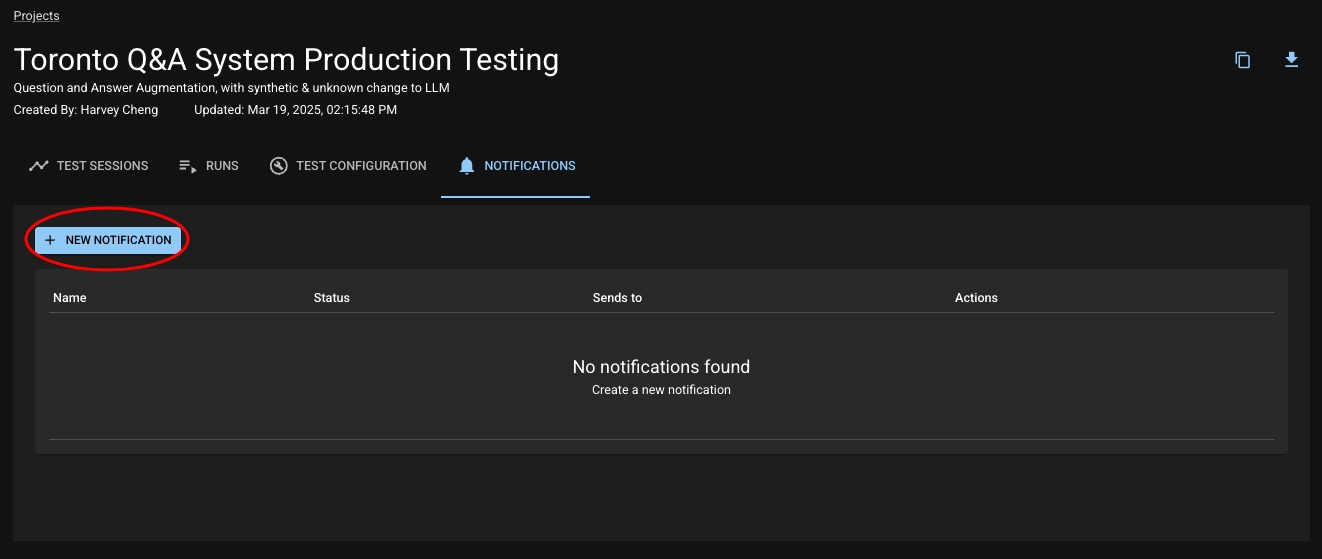

Notifications provide a way for users to be automatically notified about critical test events (e.g., failures or completions) via third-party tools like PagerDuty and Slack.

With Notifications you can:

Add critical test failure alerting to your organization’s on-call

Create custom notifications for specific feature tests

Stay informed when a new test session has started

A Notification is composed of two major elements:

The Notification Channel — this contains the metadata for how and where a Notification will be sent

The Notification Criteria — this defines the rules for when a Notification will be generated

Before setting up a Notification in your project, you must have a Notification Channel set up in your Namespace. A Notification Channel describes who will be notified and how. A Notification Channel in a Namespace can be used by Notifications across all Projects belonging to that Namespace.

In your desired Namespace, choose Notification Channels in the menu sidebar. Note: you must be a Namespace admin in order to do this.

Click the New Notification Channel button to navigate to the creation form.

Fill out the appropriate fields.

Optional: If you’d like to test that your Notification Channel is set up correctly, click the Test button. If it is correctly set up, you should receive a notification through the integration you’ve selected.

Click the Create Notification Channel button. Your channel will now be available when setting up your Notification.

Note: More coming up in the product roadmap!

Navigate to your Project and click the Notifications tab.

Click the "New Notification" button to navigate to the creation form.

Click the "Create Notification" button. Your Notification will now notify you when your specified criteria are met!

Trigger Event

The trigger event describes when your Notification is initiated. Trigger events are based on Test Session outcomes.

Tags Filtering

Filtering by Tags allows you to define which tests in the Test Session you care to be notified about.

There are three types of Tags filters you can provide:

Include: Must have ANY of the selected

Exclude: Must not have ANY of the selected

Require: Must have ALL of the selected

When multiple types are provided, all filters are combined using ‘AND’ logic, meaning all conditions must be met simultaneously.

Note: This field only pertains to the ‘Test Session Failed’ trigger event

Condition

The condition describes the threshold at which you care to be notified. If the condition is met, your Notification will be sent.

Note: This field only pertains to the ‘Test Session Failed’ trigger event

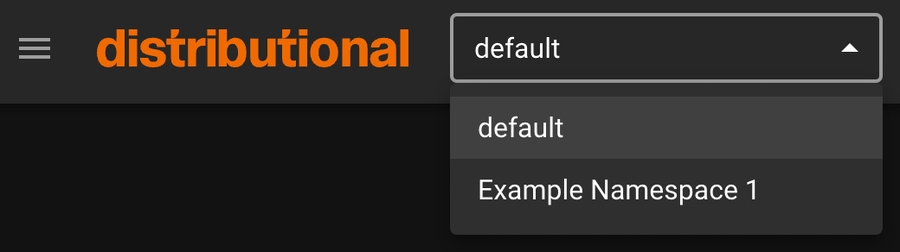

Resources in the dbnl platform are organized using organizations and namespaces.

An organization, or org for short, corresponds to a dbnl deployment.

Some resources, such as users, are defined at the organization level. Those resources are sometimes referred to as organization resources or org resources.

A namespace is a unit of isolation within a dbnl organization.

Most resources, including projects and their related resources, are defined at the namespace level. Resources defined within a namespace are only accessible within that namespace providing isolation between namespaces.

All organizations include a namespace named default. This namespace cannot be modified or deleted.

To switch namespace, use the namespace switcher in the navigation bar.

To create a namespace, go to ☰ > Settings > Admin > Namespaces and click the + Create Namespace button.

The following section introduces the concepts used to control access to the dbnl platform.

An overview of the self-hosted deployment options

The self-hosted deployment option allows you to deploy the dbnl platform directly in your cloud or on-premise environment.

Navigate to the and create the test with the filter specified on the baseline and experiment run.

Filter for the baseline Run:

Filter for the experiment Run:

In adding your Notification Channel, you will be able to select which you'd like to be notified through.

Set your Notification’s name, , and Notification Channels.

See .

You can create a test with filters in the SDK via the function:

Gets the unconnected components DAG from a list of column schemas. If there are no components, returns None. The default components dag is of the form {

“component1”: [], “component2”: [], …}

Parameters:column_schemas – list of column schemas

Returns: dictionary of components DAG or None

Create a TestSessionInput object from a Run or a RunQuery. Useful for creating TestSessions right after closing a Run.

Parameters:

run – The Run to create the TestSessionInput from

run_query – The RunQuery to create the TestSessionInput from

run_alias – Alias for the Run, must be ‘EXPERIMENT’ or ‘BASELINE’, defaults to “EXPERIMENT”

Raises:DBNLInputValidationError – If both run and run_query are None

Returns: TestSessionInput object

Helm chart installation instructions

The Helm chart option separates the infrastructure and permission provisioning process from the dbnl platform deployment process, allowing you to manage the infrastructure, permissions and Helm chart using their existing processes.

The following prerequisite steps are required before starting the Helm chart installation.

To successfully deploy the dbnl Helm chart, you will need the following infrastructure:

To configure the dbnl Helm chart, you will need:

A hostname to host the dbnl platform (e.g. dbnl.example.com).

A set of dbnl registry credentials to pull the dbnl artifacts (e.g. Docker images, Helm chart).

An RSA key pair can be generated with:

To install the dbnl Helm chart, you will need:

For the services deployed by the Helm chart to work as expected, they will need the following permissions and network accesses:

api-srv

Network access to the database.

Network access to the Redis database.

Permission to read, write and generate pre-signed URLs on the object store bucket.

worker-srv

Network access to the database.

Network access to the Redis database.

Permission to read and write to the object store bucket.

The steps to install the Helm chart using the Helm CLI are as follows:

Create an image pull secret with the your dbnl registry credentials.

Create a minimal values.yaml file.

Log into the dbnl Helm registry.

Install the Helm chart.

For more details on all the installation options, see the Helm chart README and values.yaml files. The chart can be inspected with:

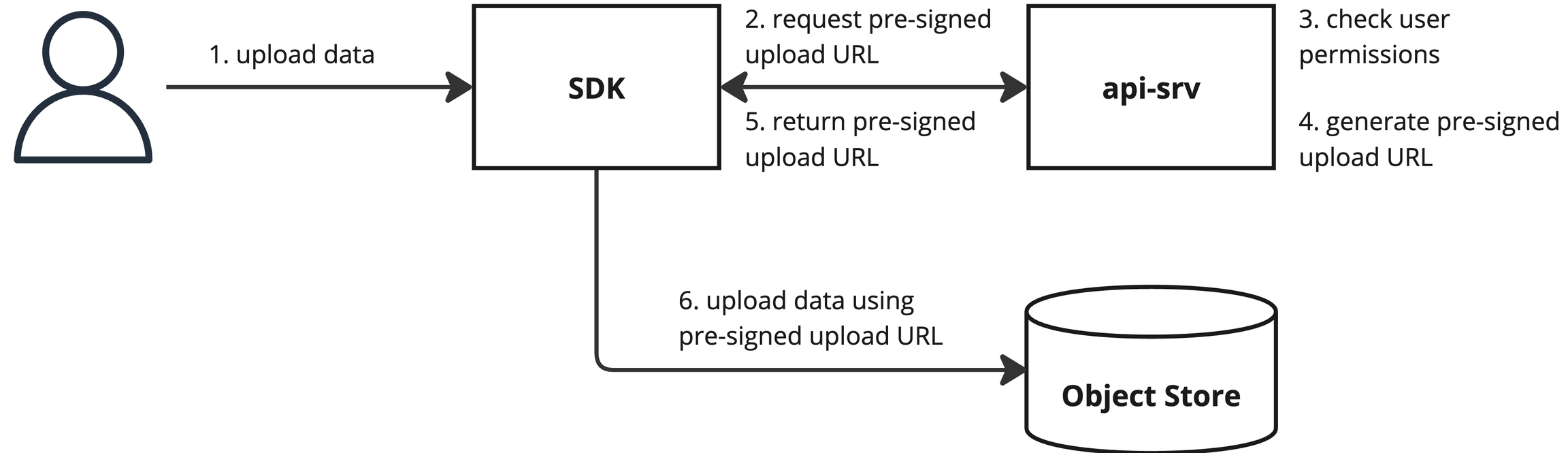

An overview of data access controls.

Data for a run is split between the object store (e.g. S3, GCS) and the database.

Metadata (e.g. name, schema) and aggregate data (e.g. summary statistics, histograms) are stored in the database.

Raw data is stored in the object store.

Database access is always done through the API with the API enforcing access controls to ensure users only access data for which they have permission.

When uploading or downloading data for a run, the SDK first sends a request for a pre-signed upload or download URL to the API. The API enforces access controls, returning an error if the user is missing the necessary permissions. Otherwise, it returns a pre-signed URL which the SDK then uses to upload or download the data.

Returns the column schema for the metric to be used in a run config.

Returns: _description_

Returns the description of the metric.

Returns: Description of the metric.

Evaluates the metric over the provided dataframe.

Parameters:df – Input data from which to compute metric.

Returns: Metric values.

Returns the expression representing the metric (e.g. rouge1(prediction, target)).

Returns: Metric expression.

If true, larger values are assumed to be directionally better than smaller once. If false, smaller values are assumged to be directionally better than larger one. If None, assumes nothing.

Returns: True if greater is better, False if smaller is better, otherwise None.

Returns the input column names required to compute the metric. :return: Input column names.

Returns the metric name (e.g. rouge1). :return: Metric name.

Returns the fully qualified name of the metric (e.g. rouge1__prediction__target).

Returns: Metric name.

Returns the column schema for the metric to be used in a run config.

Returns: _description_

Returns the type of the metric (e.g. float)

Returns: Metric type.

An enumeration.

Computes the accuracy of the answer by evaluating the accuracy score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_accuracy available in dbnl.eval.metrics.prompts.

Parameters:

input – input column name

context – context column name

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: accuracy metric

Returns answer correctness metric.

This metric is generated by an LLM using a specific specific prompt named llm_answer_correctness available in dbnl.eval.metrics.prompts.

Parameters:

input – input column name

prediction – prediction column name

target – target column name

eval_llm_client – eval_llm_client

Returns: answer correctness metric

Returns answer similarity metric.

This metric is generated by an LLM using a specific specific prompt named llm_answer_similarity available in dbnl.eval.metrics.prompts.

Parameters:

input – input column name

prediction – prediction column name

target – target column name

eval_llm_client – eval_llm_client

Returns: answer similarity metric

Computes the coherence of the answer by evaluating the coherence score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_coherence available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: coherence metric

Computes the commital of the answer by evaluating the commital score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_commital available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: commital metric

Computes the completeness of the answer by evaluating the completeness score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_completeness available in dbnl.eval.metrics.prompts.

Parameters:

input – input column name

prediction – prediction column

eval_llm_client – eval_llm_client

Returns: completeness metric

Computes the contextual relevance of the answer by evaluating the contextual relevance score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_contextual_relevance available in dbnl.eval.metrics.prompts.

Parameters:

input – input column name

context – context column name

eval_llm_client – eval_llm_client

Returns: contextual relevance metric

Returns faithfulness metric.

This metric is generated by an LLM using a specific specific prompt named llm_faithfulness available in dbnl.eval.metrics.prompts.

Parameters:

input – input column name

context – context column name

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: faithfulness metric

Computes the grammar accuracy of the answer by evaluating the grammar accuracy score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_grammar_accuracy available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: grammar accuracy metric

Returns a set of metrics which evaluate the quality of the generated answer. This does not include metrics that require a ground truth.

Parameters:

input – input column name (i.e. question)

prediction – prediction column name (i.e. generated answer)

context – context column name (i.e. document or set of documents retrieved)

eval_llm_client – eval_llm_client

Returns: list of metrics

Computes the originality of the answer by evaluating the originality score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_originality available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: originality metric

Returns relevance metric with context.

This metric is generated by an LLM using a specific specific prompt named llm_relevance available in dbnl.eval.metrics.prompts.

Parameters:

input – input column name

context – context column name

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: answer relevance metric with context

Returns a list of metrics relevant for a question and answer task.

Parameters:

prediction – prediction column name (i.e. generated answer)

eval_llm_client – eval_llm_client

Returns: list of metrics

Computes the reading complexity of the answer by evaluating the reading complexity score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_reading_complexity available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: reading complexity metric

Computes the sentiment of the answer by evaluating the sentiment assessment score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_sentiment_assessment available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: sentiment assessment metric

Computes the text fluency of the answer by evaluating the perplexity of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_text_fluency available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: text fluency metric

Computes the toxicity of the answer by evaluating the toxicity score of the answer using a language model.

This metric is generated by an LLM using a specific specific prompt named llm_text_toxicity available in dbnl.eval.metrics.prompts.

Parameters:

prediction – prediction column name

eval_llm_client – eval_llm_client

Returns: toxicity metric

Returns the Automated Readability Index metric for the text_col_name column.

Calculates the Automated Readability Index (ARI) for a given text. ARI is a readability metric that estimates the U.S. school grade level necessary to understand the text, based on the number of characters per word and words per sentence.

Parameters:text_col_name – text column name

Returns: automated_readability_index metric

Returns the bleu metric between the prediction and target columns.

The BLEU score is a metric for evaluating a generated sentence to a reference sentence. The BLEU score is a number between 0 and 1, where 1 means that the generated sentence is identical to the reference sentence.

Parameters:

prediction – prediction column name

target – target column name

Returns: bleu metric

Returns the character count metric for the text_col_name column.

Parameters:text_col_name – text column name

Returns: character_count metric

Returns the context hit metric.

This boolean-valued metric is used to evaluate whether the ground truth document is present in the list of retrieved documents. The context hit metric is 1 if the ground truth document is present in the list of retrieved documents, and 0 otherwise.

Parameters:

ground_truth_document_id – ground_truth_document_id column name

retrieved_document_ids – retrieved_document_ids column name

Returns: context hit metric

Returns a set of metrics relevant for a question and answer task.

Parameters:text_col_name – text column name

Returns: list of metrics

Returns the Flesch-Kincaid Grade metric for the text_col_name column.

Calculates the Flesch-Kincaid Grade Level for a given text. The Flesch-Kincaid Grade Level is a readability metric that estimates the U.S. school grade level required to understand the text. It is based on the average number of syllables per word and words per sentence.

Parameters:text_col_name – text column name

Returns: flesch_kincaid_grade metric

Returns a set of metrics relevant for a question and answer task.

Parameters:

prediction – prediction column name (i.e. generated answer)

target – target column name (i.e. expected answer)

Returns: list of metrics

Returns a set of metrics relevant for a question and answer task.

Parameters:

ground_truth_document_id – ground_truth_document_id column name

retrieved_document_ids – retrieved_document_ids column name

Returns: list of metrics

Returns the inner product metric between the ground_truth_document_text and top_retrieved_document_text columns.

This metric is used to evaluate the similarity between the ground truth document and the top retrieved document using the inner product of their embeddings. The embedding client is used to retrieve the embeddings for the ground truth document and the top retrieved document. An embedding is a high-dimensional vector representation of a string of text.

Parameters:

ground_truth_document_text – ground_truth_document_text column name

top_retrieved_document_text – top_retrieved_document_text column name

embedding_client – embedding client

Returns: inner product metric

Returns the inner product metric between the prediction and target columns.

This metric is used to evaluate the similarity between the prediction and target columns using the inner product of their embeddings. The embedding client is used to retrieve the embeddings for the prediction and target columns. An embedding is a high-dimensional vector representation of a string of text.

Parameters:

prediction – prediction column name

target – target column name

embedding_client – embedding client

Returns: inner product metric

Returns the levenshtein metric between the prediction and target columns.