What's in a test?

Tests are the core mechanism for asserting acceptable app behavior within Distributional. In this section, we introduce the necessary tools and explain how they can be used -- either with guidance from Distributional or by users based on unique needs.

At its core, a Test combines a Statistic, which is derived from a run and quantifies some behavior of that run, with an Assertion, which enumerates the acceptable values of that statistic (and, thus, the acceptable behavior of the run). The run under consideration in a test is referred to as the Experiment run; often, there will also be a Baseline run used for comparison.

Test Tags can be created and applied to tests to group them together for, among other purposes, signaling the shared purpose of several tests (e.g., tests for text sentiment).

When a group of tests is executed on a chosen Experiment run and Baseline run, the output is a Test Session that records which assertions Pass and which Fail.
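To make the relationship between these pieces concrete, here is a minimal sketch in plain Python. It does not use the dbnl SDK; the names `StatTest` and `run_test_session`, the `sentiment` column, and the thresholds are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical stand-in for a run: column name -> recorded values.
Run = Dict[str, List[float]]


@dataclass
class StatTest:
    """A statistic paired with an assertion, plus optional tags (illustrative only)."""
    name: str
    statistic: Callable[[Run, Run], float]  # computed from (experiment, baseline)
    assertion: Callable[[float], bool]      # True when the statistic value is acceptable
    tags: List[str] = field(default_factory=list)


def run_test_session(tests: List[StatTest], experiment: Run, baseline: Run) -> Dict[str, str]:
    """Evaluate every test and record whether its assertion passed or failed."""
    session = {}
    for test in tests:
        value = test.statistic(experiment, baseline)
        session[test.name] = "Pass" if test.assertion(value) else "Fail"
    return session


experiment = {"sentiment": [0.61, 0.58, 0.70, 0.66]}
baseline = {"sentiment": [0.60, 0.62, 0.65, 0.63]}

tests = [
    StatTest(
        name="mean_sentiment_shift",
        # Statistic: absolute difference between experiment and baseline means.
        statistic=lambda exp, base: abs(mean(exp["sentiment"]) - mean(base["sentiment"])),
        # Assertion: the shift must stay below an assumed tolerance of 0.05.
        assertion=lambda value: value < 0.05,
        tags=["text sentiment"],
    ),
    StatTest(
        name="min_sentiment_floor",
        # Statistic: smallest sentiment value in the experiment run (baseline unused).
        statistic=lambda exp, base: min(exp["sentiment"]),
        # Assertion: no value may drop below an assumed floor of 0.6.
        assertion=lambda value: value >= 0.6,
        tags=["text sentiment"],
    ),
]

session = run_test_session(tests, experiment, baseline)
print(session)  # {'mean_sentiment_shift': 'Pass', 'min_sentiment_floor': 'Fail'}
```

Both tests share the `text sentiment` tag, which mirrors how tags group tests with a common purpose. The first test compares the two runs, while the second inspects only the Experiment run.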

Incorporate your expertise alongside our automated tests

While we expect everyone to use our automated Production test creation capabilities, we also recognize that many users bring significant expertise to their testing experience. Distributional provides a suite of test creation tools and patterns to match your needs.

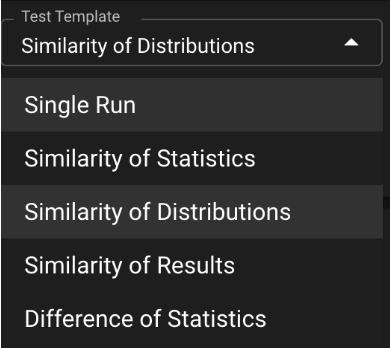

Most tests in dbnl are statistically motivated, to better measure and study fundamentally nondeterministic AI-powered apps. Means, percentiles, the Kolmogorov-Smirnov statistic, and other statistical quantities are provided so that you can study the behavior of your app as you see fit. We provide templates to guide you through our suggested testing strategies.
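For readers unfamiliar with the Kolmogorov-Smirnov statistic, the sketch below computes the two-sample version in plain Python: the largest gap between the empirical distribution functions of two samples. This is a generic textbook computation, not dbnl's implementation; the function name `ks_statistic`, the sample values, and the threshold of 0.6 are assumptions made for illustration.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(sample_a: Sequence[float], sample_b: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: max |ECDF_a(x) - ECDF_b(x)|."""
    if not sample_a or not sample_b:
        raise ValueError("both samples must be non-empty")
    a = sorted(sample_a)
    b = sorted(sample_b)
    max_gap = 0.0
    # The largest gap between two step functions occurs at an observed value,
    # so it is enough to evaluate both ECDFs at every data point.
    for x in a + b:
        ecdf_a = bisect_right(a, x) / len(a)  # fraction of a that is <= x
        ecdf_b = bisect_right(b, x) / len(b)  # fraction of b that is <= x
        max_gap = max(max_gap, abs(ecdf_a - ecdf_b))
    return max_gap


baseline_values = [1.0, 2.0, 3.0, 4.0]
experiment_values = [3.0, 4.0, 5.0, 6.0]

ks = ks_statistic(experiment_values, baseline_values)
print(ks)  # 0.5

# An assertion on the statistic, using an assumed threshold of 0.6.
print("Pass" if ks < 0.6 else "Fail")  # Pass
```

A value of 0 means the two samples have identical empirical distributions, and a value of 1 means they do not overlap at all. Unlike a comparison of means, this statistic is sensitive to changes in the shape of the distribution as well as its location.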

The manual test creation process can be configured in any of three locations: in the main web UI test configuration page, through shortcuts to the test drawer scattered throughout the web UI, or through the SDK. This gives you the flexibility to systematically control test generation, or quickly respond to insights for which you would like to test in the future.

The core testing capability is supplemented by the ability to define filters on tests. These filters empower you to test for consistency within subsets of your user base. Filtering also allows you to test for bias in your app and help triage cases of undesired behavior.
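As a rough illustration of filtering, the sketch below computes the same statistic on two subsets of a run and asserts that they stay close to each other. It is plain Python rather than the dbnl SDK; the `region` and `response_length` columns, the helper `filtered_statistic`, and the tolerance of 10 are hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical run results: one dictionary per app usage.
rows: List[Dict] = [
    {"region": "EU", "response_length": 120},
    {"region": "EU", "response_length": 130},
    {"region": "US", "response_length": 118},
    {"region": "US", "response_length": 126},
]


def filtered_statistic(
    rows: List[Dict],
    predicate: Callable[[Dict], bool],
    column: str,
    statistic: Callable[[List[float]], float] = mean,
) -> float:
    """Apply a filter to the rows, then compute a statistic on one column."""
    values = [row[column] for row in rows if predicate(row)]
    if not values:
        raise ValueError("filter matched no rows")
    return statistic(values)


eu_mean = filtered_statistic(rows, lambda r: r["region"] == "EU", "response_length")
us_mean = filtered_statistic(rows, lambda r: r["region"] == "US", "response_length")

# Assertion: the two subsets should behave consistently, within an assumed tolerance.
gap = abs(eu_mean - us_mean)
print(eu_mean, us_mean, gap)            # 125 122 3
print("Pass" if gap <= 10 else "Fail")  # Pass
```

The same pattern applies to bias testing: pick the subsets you expect to be treated alike, and assert that the statistic does not differ between them by more than you are willing to accept.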

Learn more about how to create your own tests here.

Distributional can automate your Production testing process

Production testing is the core tool in the Distributional toolkit – it provides the ability to continually state whether your app is performing as desired.

To make Production testing easy to start using, we have developed some automated Production test creation capabilities. Using sample data that you upload to our system, we can craft tests which help determine if there has been a shift in behavior for your app.

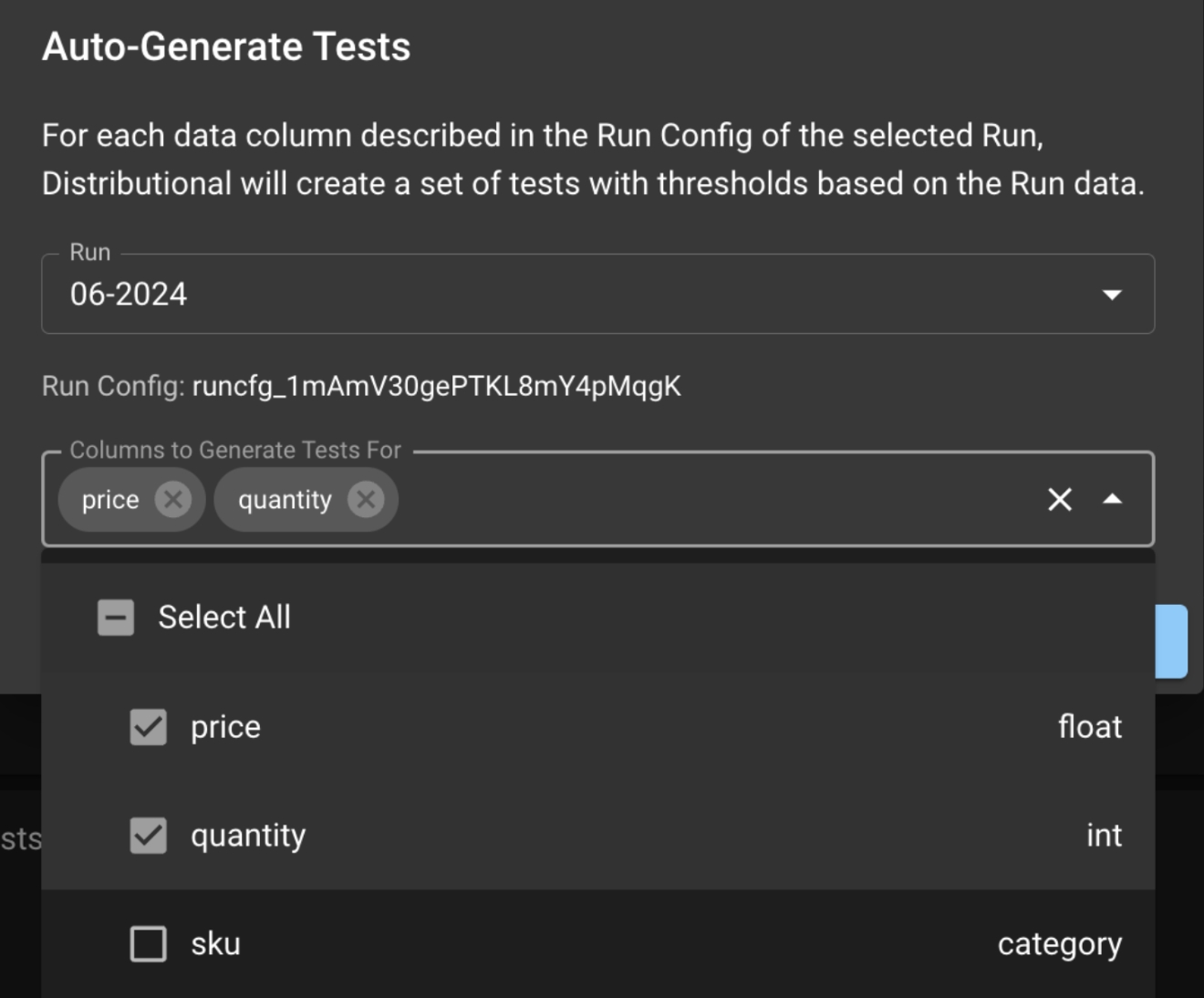

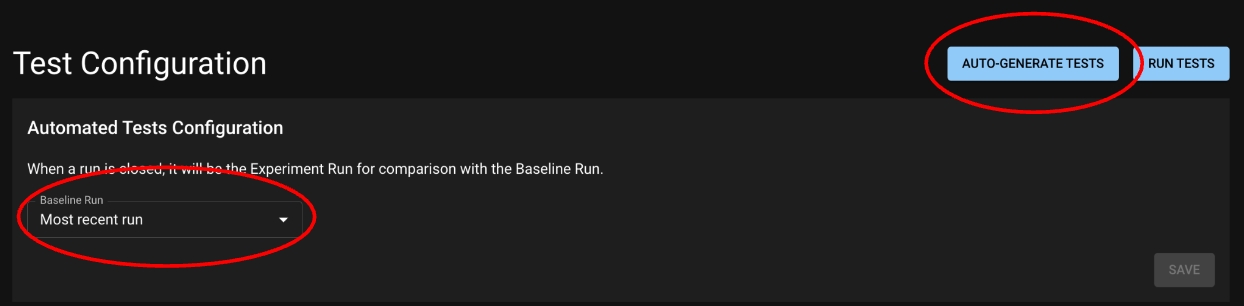

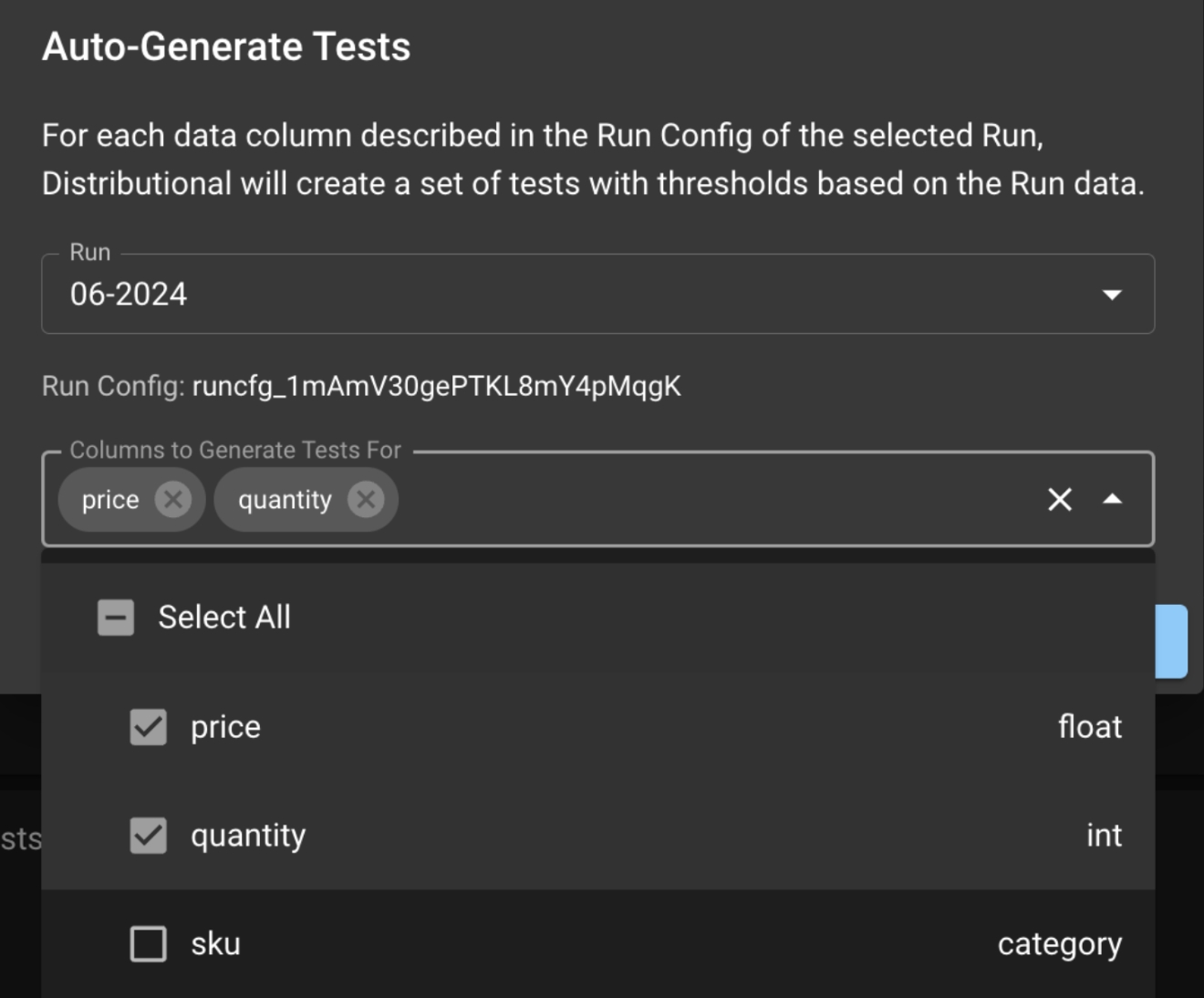

The Auto-Generate Tests button takes you to the modal below, which allows you to choose the columns on which you want to test for app consistency.

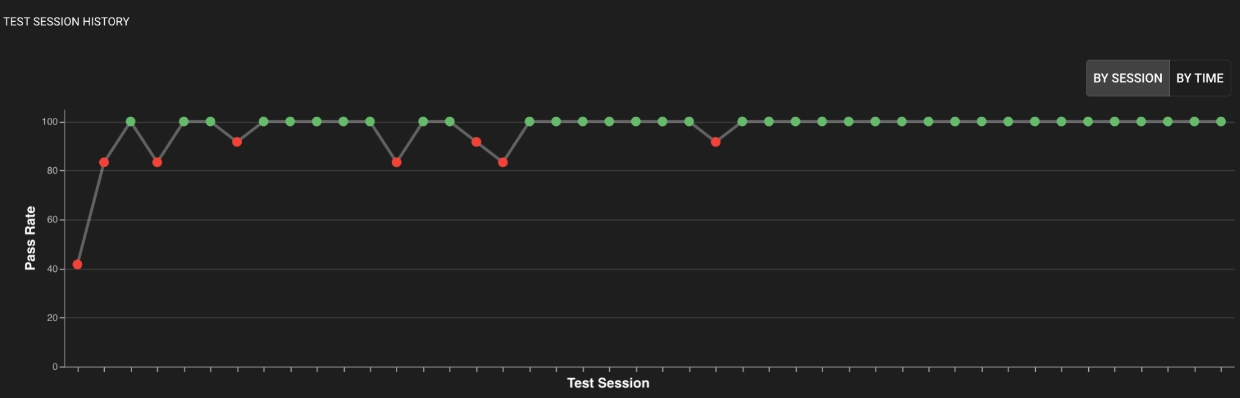

You also have the opportunity to set a Baseline Run Query, which gives you the freedom to define how far into the past you want to look to define “consistency”. After setting the Baseline, your tests will utilize it by default; you can override it at test creation time. Over time, your project will graphically render the health of your app, as shown below.

You may later deactivate or recalibrate any of the automatically generated tests to adapt them to your needs. In particular, we recommend that you review the first several test sessions in a new Project and recalibrate based on observed behavior. If you believe that no significant difference exists between tested runs, you should recalibrate the generated tests to pass.

This is how you test when you are a dbnl expert

When you start working with Distributional (dbnl), you should focus on creating and executing tests to ask and answer the question "Is my AI-powered app behaving as desired?" As you gain more confidence using dbnl, the full pattern of standard dbnl usage looks as follows:

Execute Production testing at a regular interval (e.g., nightly) on recent app usages

Execute Deployment testing at a regular interval (e.g., weekly) on a fixed dataset

Review Production test sessions to start triage of any concerning app behavior

This could be triggered by test failures, dbnl-automated guidance, or manual investigation

As concerning app behavior is identified, trigger Deployment tests to help diagnose any app component nonstationarity

If nonstationarity is present, start Development testing on the affected third-party components

If the components are stationary, start Development testing on the observed app examples which show unsatisfactory behavior

Once suitable app behavior is recorded in Development testing, record a new baseline for future Deployment testing on the fixed dataset

Push the new app version into production and update the Deployment + Production testing process