When Distributional automatically generates the production tests, it estimates the thresholds from a single Run’s data. As a consequence, some of these thresholds may not reflect the user’s actual working conditions. As the user continues to upload Runs and trigger new Test Sessions, they may want to adjust these thresholds. The recalibration feature offers a simple way to do so.

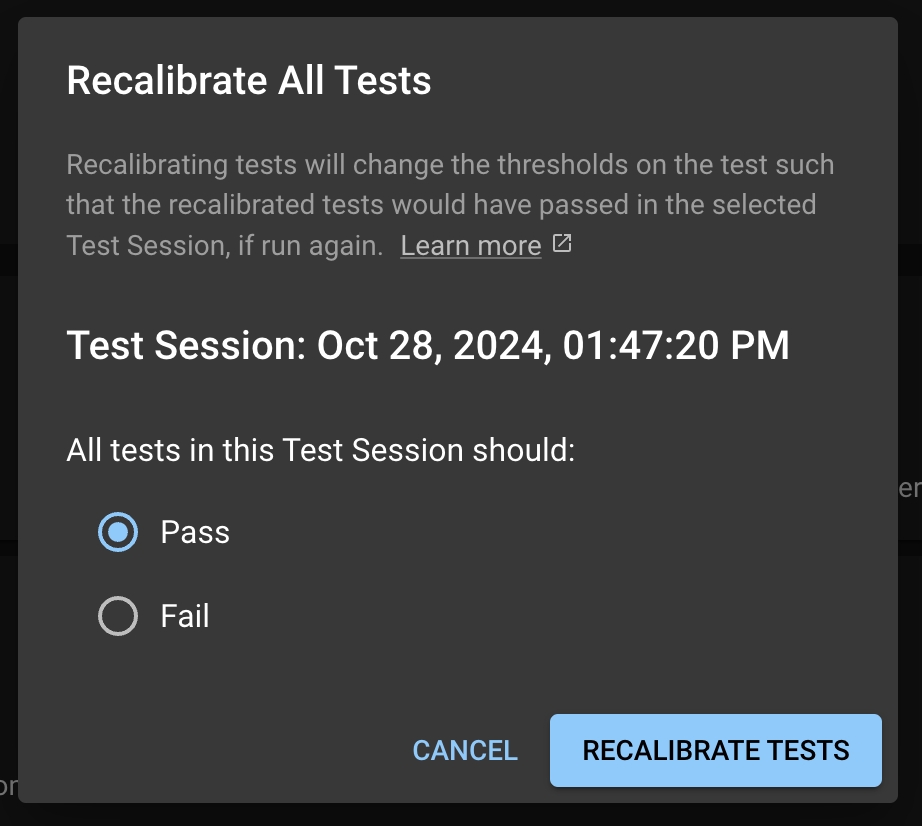

Recalibration solicits feedback from the user and adjusts the test thresholds. If a user wants a particular test or set of tests to pass in the future, the test thresholds will be relaxed to increase the likelihood of the test passing with similar statistics.
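The idea of relaxing a threshold in response to feedback can be illustrated with a minimal Python sketch. This is not Distributional's implementation or API: the `ThresholdTest` class, the `recalibrate` function, and the `margin` buffer are all hypothetical names chosen for illustration, and the sketch assumes a test passes when a positive statistic is at or below its threshold.

```python
from dataclasses import dataclass


@dataclass
class ThresholdTest:
    """Hypothetical test: passes when the observed statistic is <= threshold."""
    name: str
    threshold: float

    def passes(self, statistic: float) -> bool:
        return statistic <= self.threshold


def recalibrate(test: ThresholdTest, observed: float, should_pass: bool,
                margin: float = 0.1) -> ThresholdTest:
    """Return a new test whose threshold reflects the user's feedback.

    should_pass=True  -> relax the threshold so `observed` would pass.
    should_pass=False -> tighten the threshold so `observed` would fail.
    `margin` is an assumed relative buffer, not a documented setting.
    """
    if should_pass:
        new_threshold = max(test.threshold, observed * (1 + margin))
    else:
        new_threshold = min(test.threshold, observed * (1 - margin))
    return ThresholdTest(test.name, new_threshold)


test = ThresholdTest("ks_stat_latency", threshold=0.10)  # made-up test name
observed = 0.14                      # statistic seen in the latest Test Session
print(test.passes(observed))         # False: the test currently fails

relaxed = recalibrate(test, observed, should_pass=True)
print(relaxed.passes(observed))      # True: similar statistics now pass
```

The buffer above the observed value is what makes "similar statistics" pass, not only the exact value that triggered the feedback.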

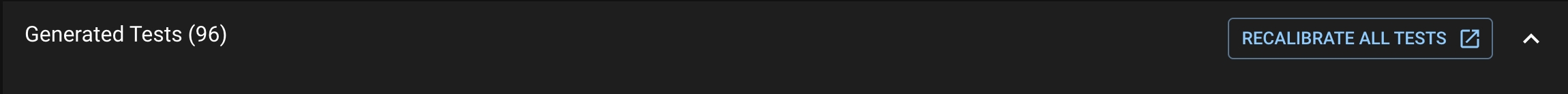

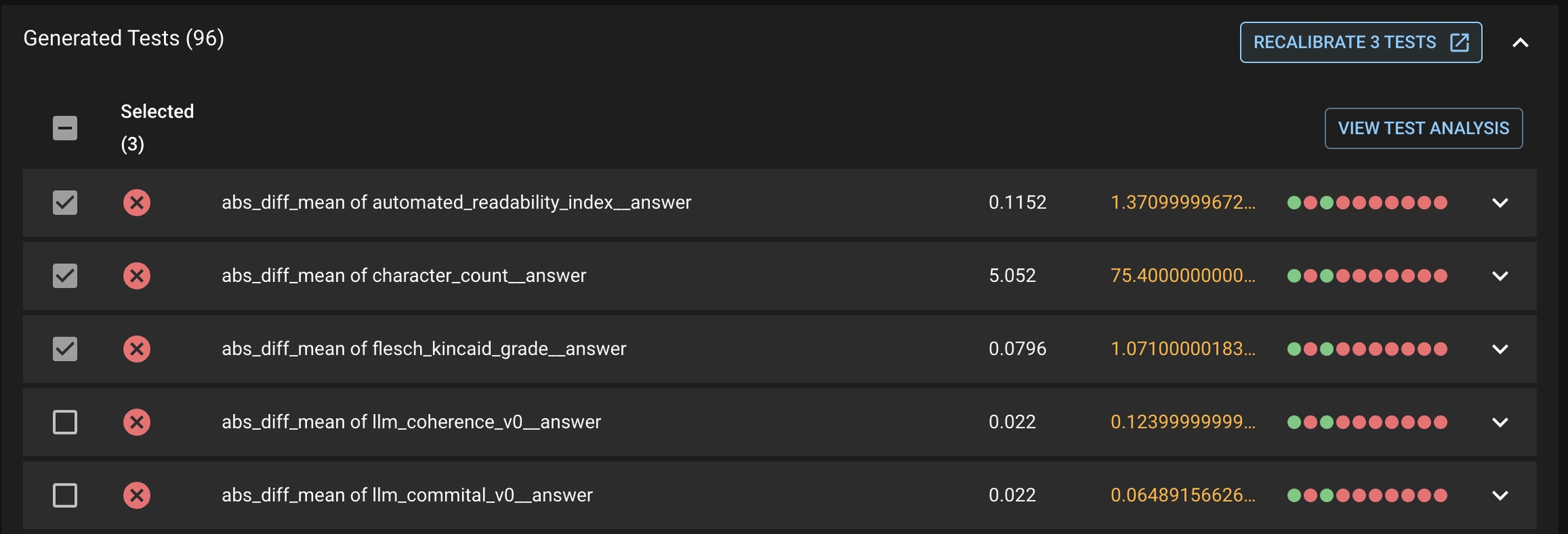

Click the RECALIBRATE ALL TESTS button at the top right of the Generated Tests table in the Test Session page.

This takes you to the Test Configuration page, where a modal prompts you to choose whether all the tests should pass or fail in the future.

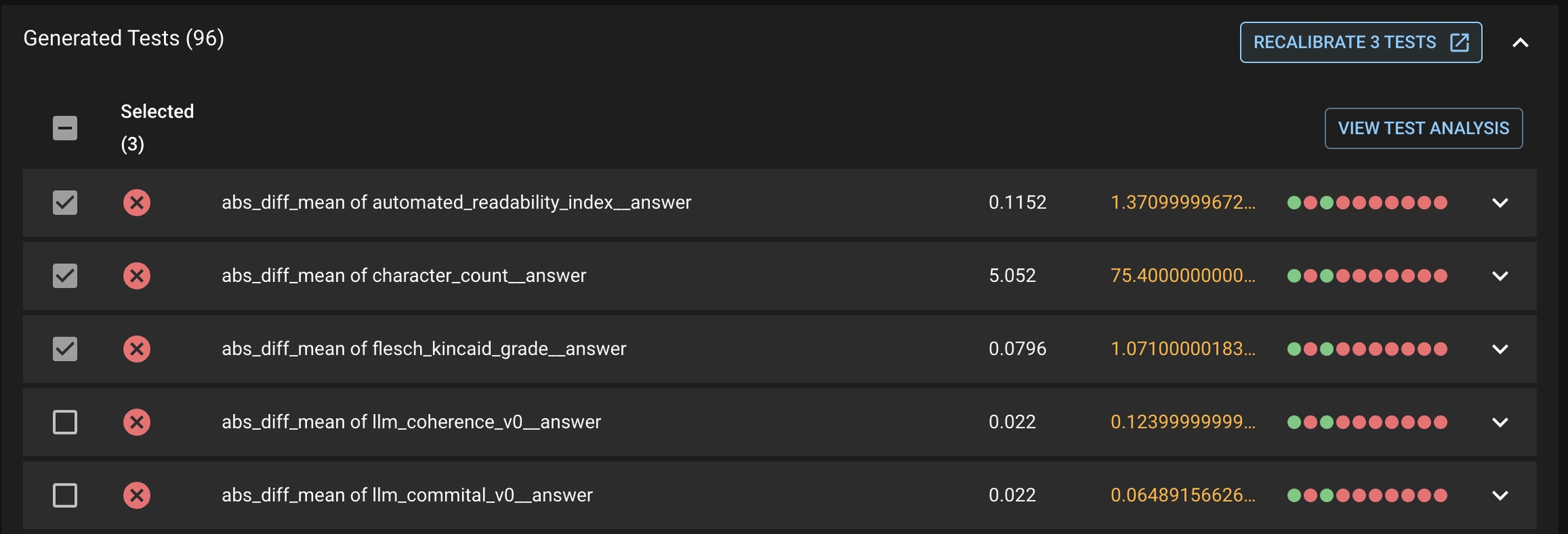

First select the tests you want to recalibrate, then click the RECALIBRATE <#> TESTS button under the Generated Tests tab. This opens the same modal, where you choose whether these tests should pass or fail in the future.

Production testing focuses on the need to regularly check in on the health of an app as it has behaved in the real world. Users want to detect changes in either their AI app’s behavior or the environment in which it operates. Distributional helps users detect these changes with confidence.

In the case of production testing, Distributional recommends testing the similarity of distributions of the data related to the AI app between the current Run and a baseline Run. Users can start with the auto-test generation feature to let Distributional generate the necessary production tests for a given Run.
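As an illustration of what testing the similarity of two distributions can look like, the sketch below computes the two-sample Kolmogorov-Smirnov statistic for one column of a baseline Run and a current Run, then compares it to a threshold. The choice of statistic, the sample data, and the threshold value of 0.3 are assumptions made for this example; the source does not state which statistics Distributional uses.

```python
from bisect import bisect_right


def ks_statistic(baseline, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical CDFs of the two samples (0 = identical, 1 = disjoint)."""
    a, b = sorted(baseline), sorted(current)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    gap = 0.0
    for x in set(a) | set(b):   # the gap can only change at observed values
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Made-up values for a single numeric column in each Run.
baseline = [0.8, 0.9, 1.0, 1.1, 1.2]
similar = [0.85, 0.95, 1.0, 1.15, 1.2]
shifted = [1.8, 1.9, 2.0, 2.1, 2.2]

threshold = 0.3                                        # assumed test threshold
print(ks_statistic(baseline, similar) <= threshold)    # True: test passes
print(ks_statistic(baseline, shifted) <= threshold)    # False: test fails
```

A smaller statistic means the current Run looks more like the baseline, so a test of this shape passes when the statistic stays at or below its threshold.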

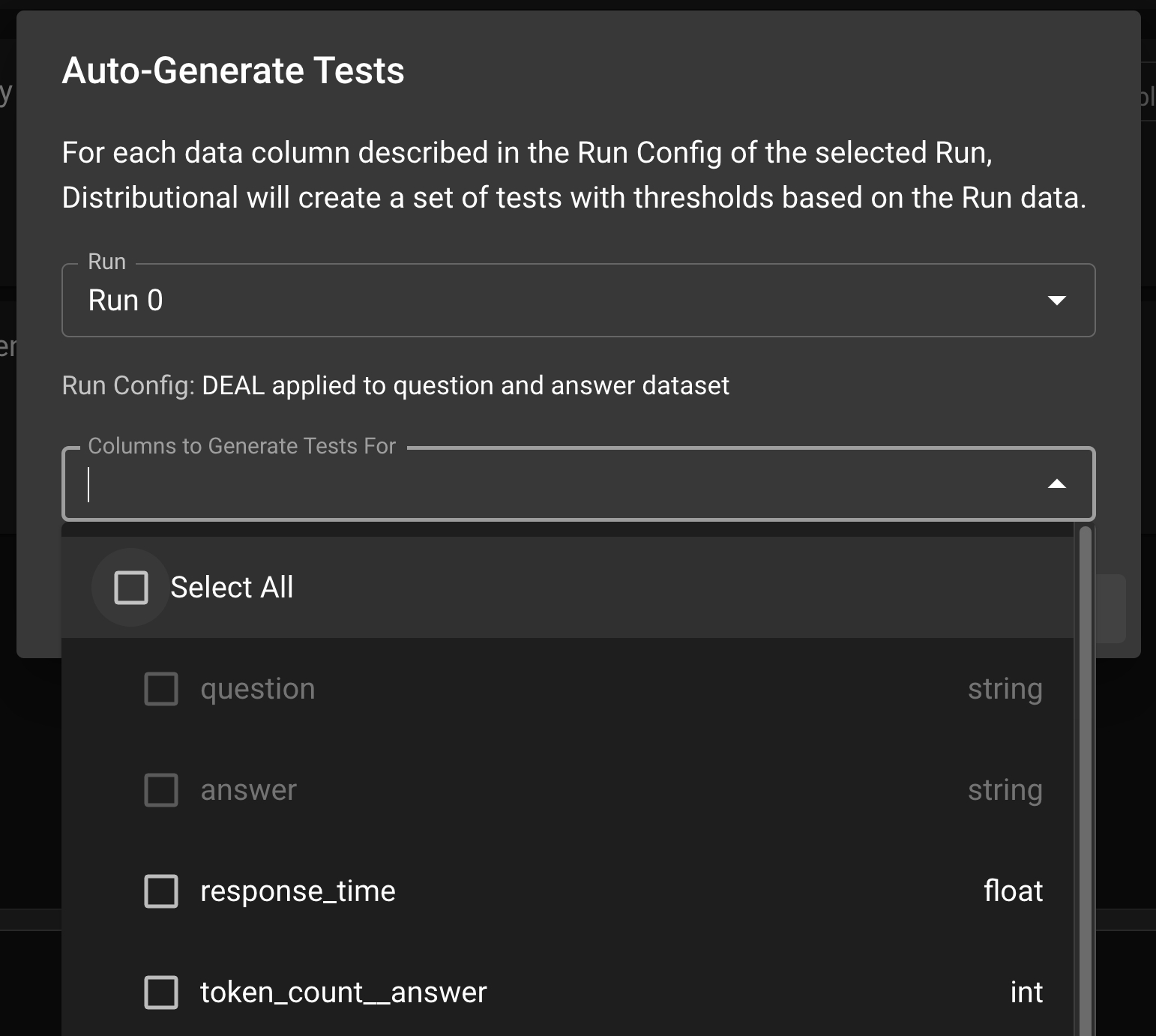

To facilitate production testing, Distributional can automatically generate a suite of Tests based on the user’s uploaded data. Distributional studies the Run results data and creates the appropriate thresholds for the Tests.

In the Test Config page, click the AUTO-GENERATE TESTS button; this opens a modal where you can select the Run on which the tests will be based. Click Select All to generate tests for all columns, or select only the columns you want tests for. Click GENERATE TESTS to generate the production tests.

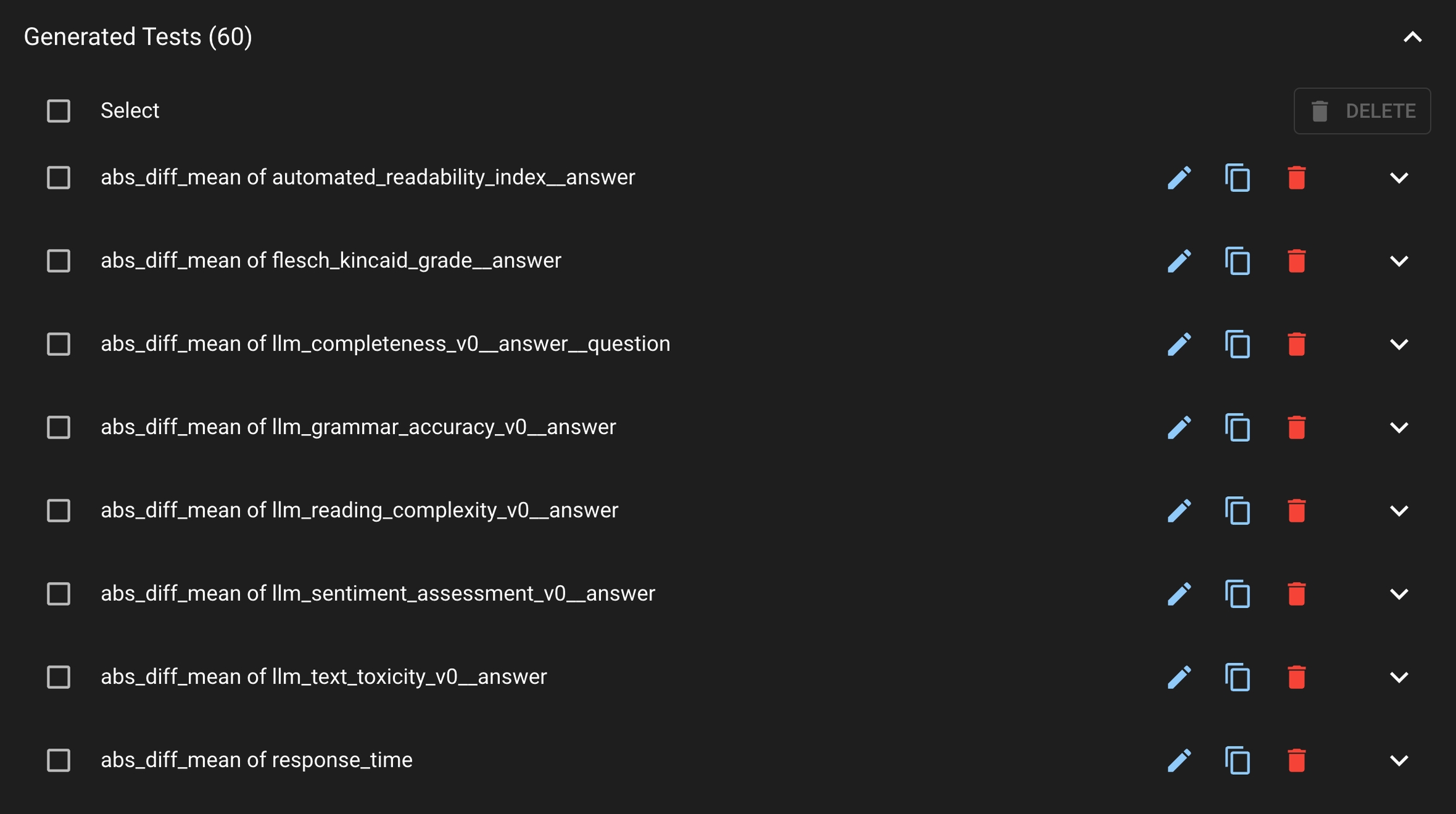

Once the Tests are generated, you can view them under the Generated Tests section.

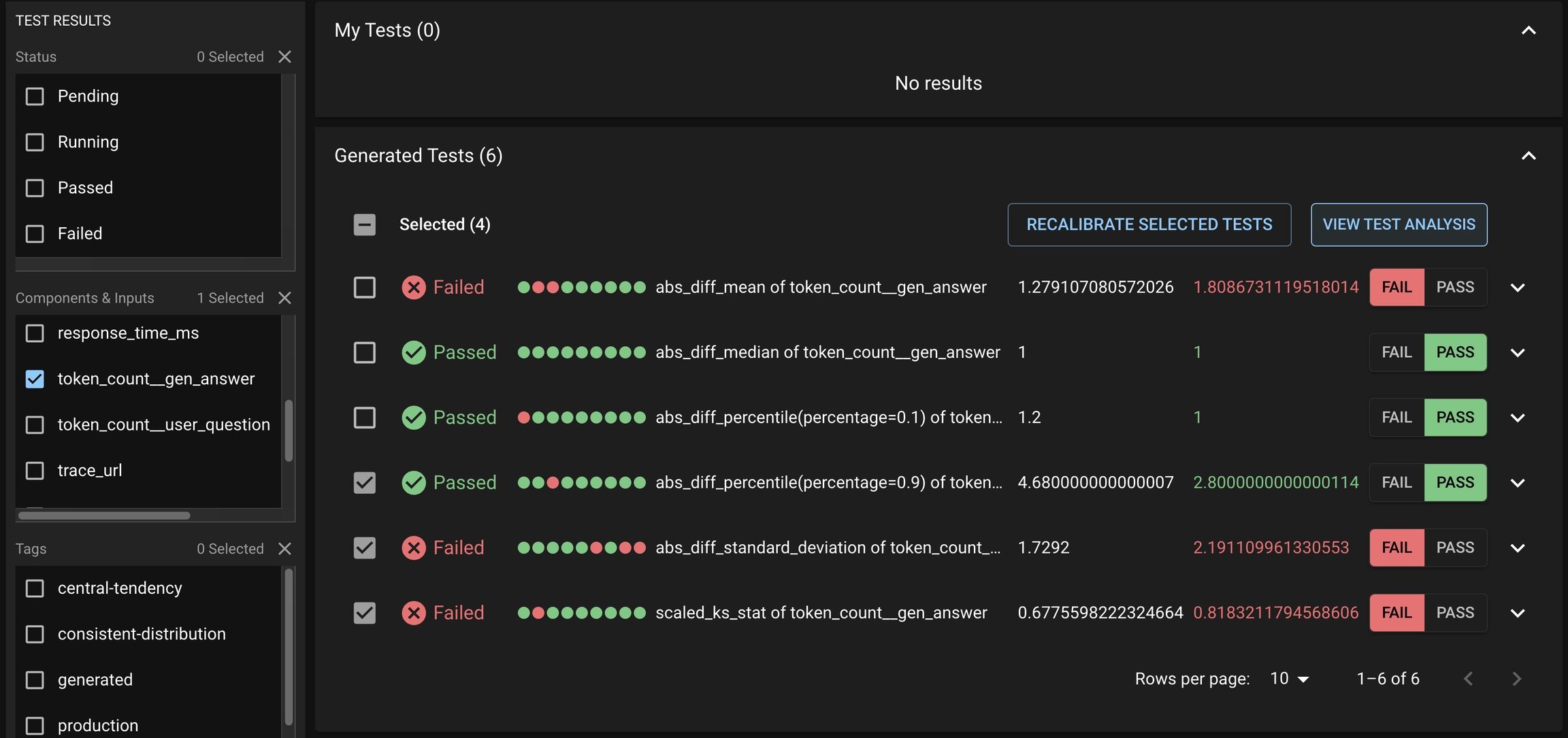

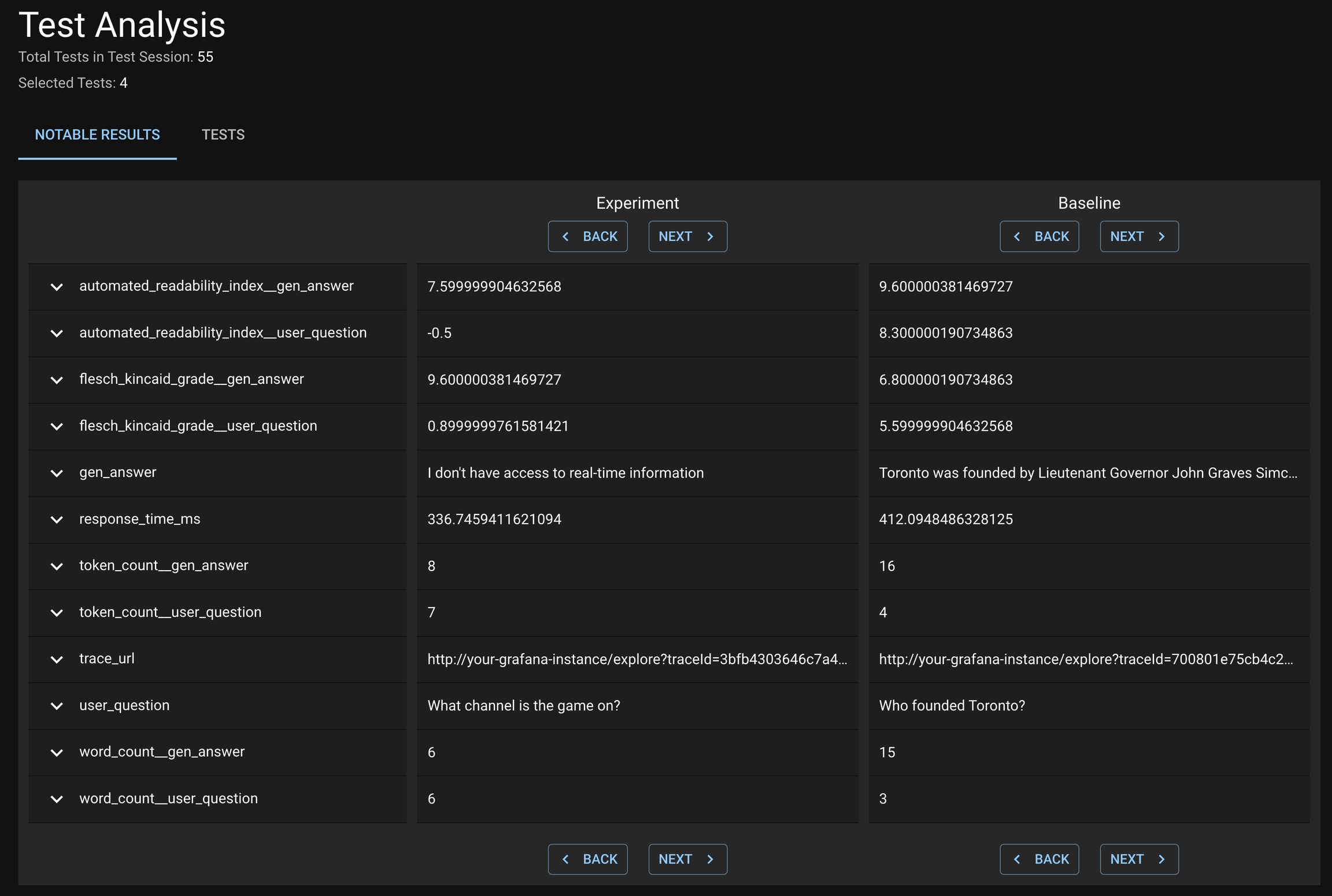

After a Test Session is executed, users might want to inspect which Tests passed and which failed. In addition, they may want to understand what caused a Test or a group of Tests to fail; in particular, which subset of results from the Run likely caused the failures.

Notable results are only presented for Distributional-generated Tests.

To review the notable results, first select the set of Generated Tests that you want to study from the Test Session details page. This may include both failed and passed Tests.

Click the VIEW TEST ANALYSIS button to enter the Test Analysis page. On this page, you can review the notable results for the Experiment Run and Baseline Run under the Notable Results tab.

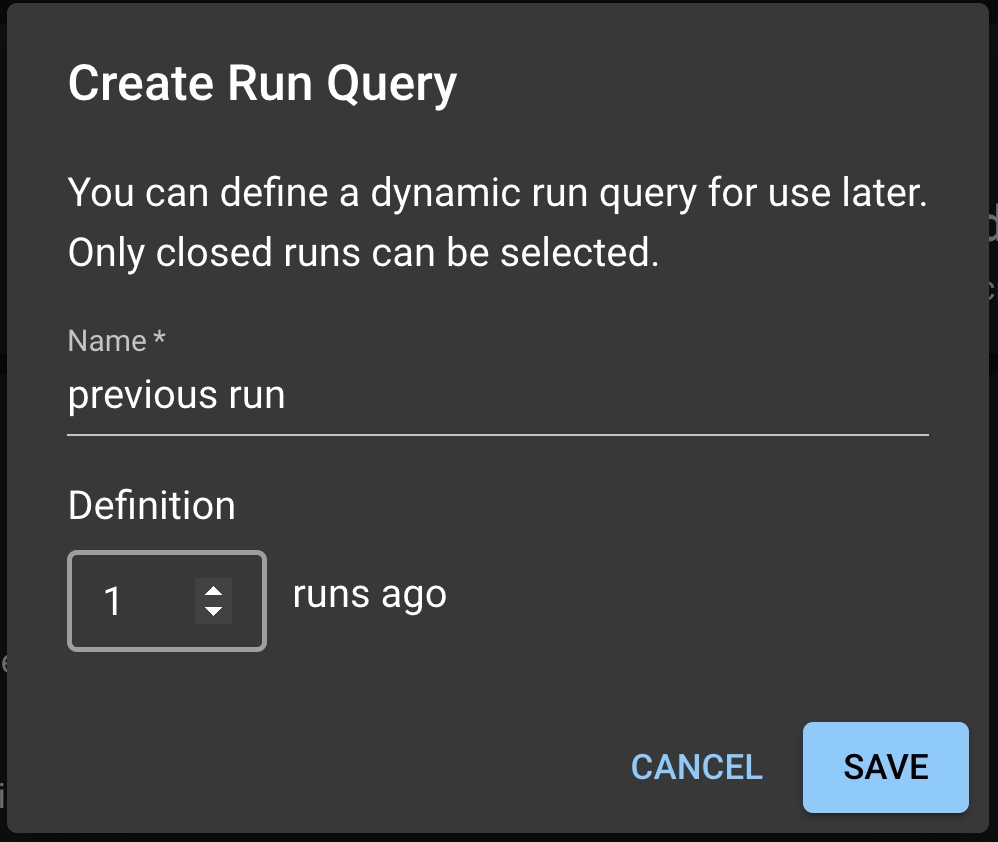

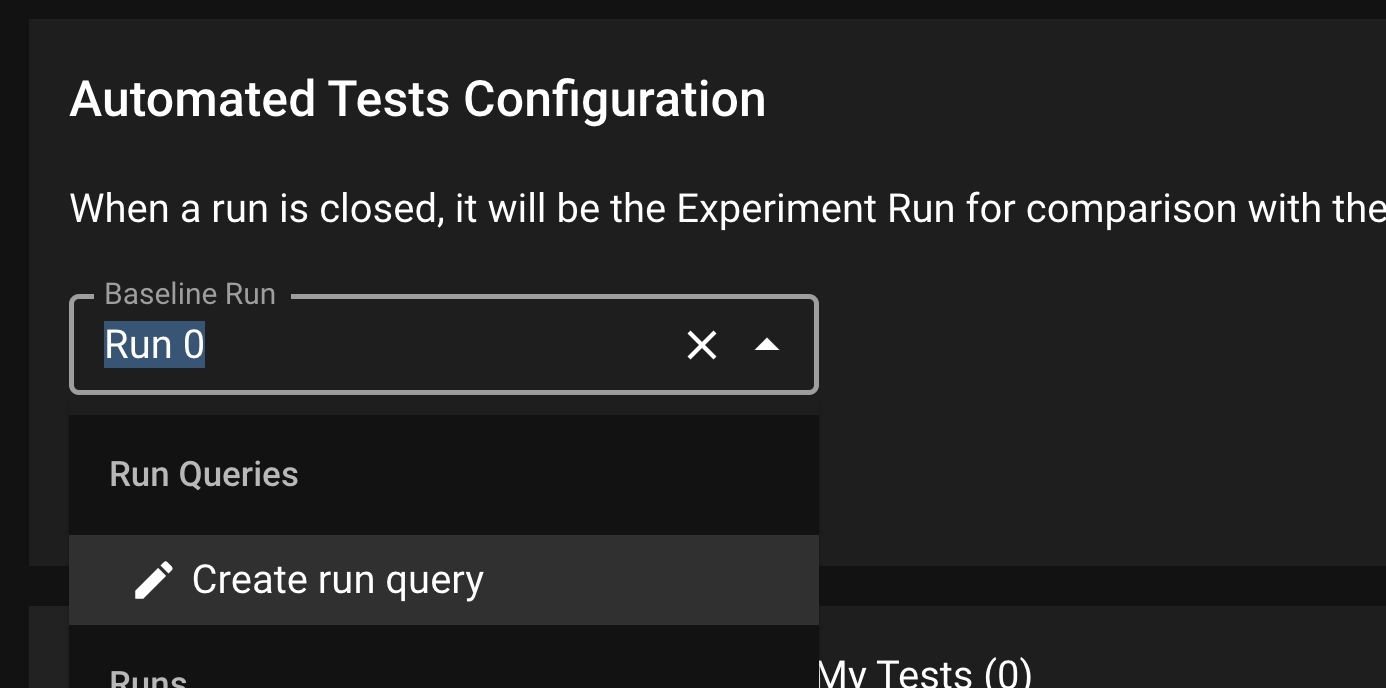

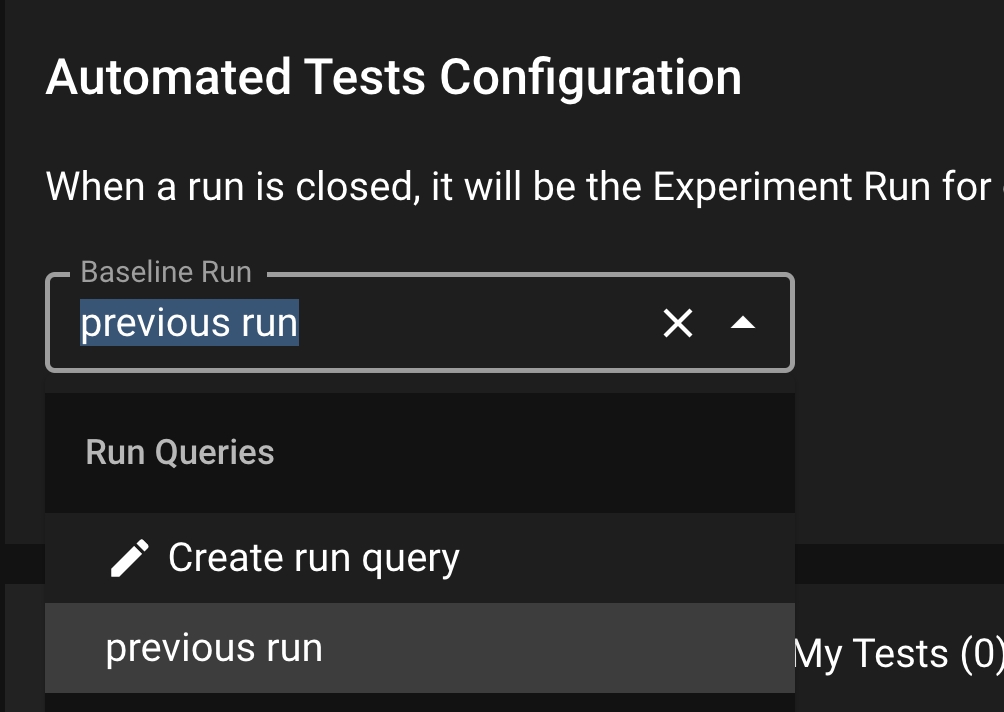

Instead of comparing the newest Run to a fixed Baseline Run, a user might want to dynamically shift the baseline. For example, one might want to compare the new Run to the most recently completed Run. To enable a dynamic baseline, the user needs to create a Run Query from the Test Config page.

Under the Baseline Run dropdown, select Create run query; this opens a Run Query modal. In the modal, you can enter the name of the Run Query and select the Run offset to be used for the dynamic baseline. For example, an offset of 1 means each new Run is tested against the previously uploaded Run.

Click SAVE to save this Run Query. Back in the Test Config page, select the Run Query from the dropdown and click SAVE to save this setting as the dynamic baseline.
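The offset behavior described above can be sketched in a few lines of Python. This is only a conceptual illustration, not Distributional's API: `resolve_baseline` and the Run names are hypothetical, and the sketch assumes Runs are ordered by upload time from oldest to newest.

```python
def resolve_baseline(runs, current_index, offset=1):
    """Return the Run `offset` positions before the current one.

    `runs` is assumed to be ordered oldest to newest. Returns None when
    there is no Run that far back (for example, for the very first Run).
    """
    if offset < 1:
        raise ValueError("offset must be at least 1")
    baseline_index = current_index - offset
    return runs[baseline_index] if baseline_index >= 0 else None


runs = ["run-mon", "run-tue", "run-wed", "run-thu"]   # made-up Run names

# With an offset of 1, each Run is compared against the one uploaded before it.
for i, run in enumerate(runs):
    print(run, "vs", resolve_baseline(runs, i, offset=1))
```

With a fixed baseline every Run would be compared against the same Run, whereas here the baseline moves forward each time a new Run is uploaded.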